Simon Flügel

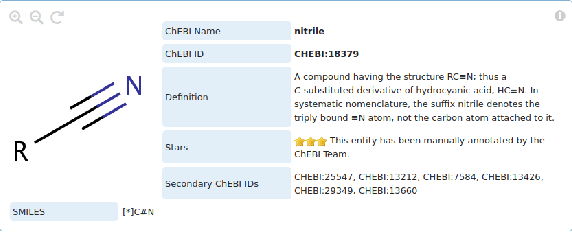

A semantic loss for ontology classification

May 03, 2024Abstract:Deep learning models are often unaware of the inherent constraints of the task they are applied to. However, many downstream tasks require logical consistency. For ontology classification tasks, such constraints include subsumption and disjointness relations between classes. In order to increase the consistency of deep learning models, we propose a semantic loss that combines label-based loss with terms penalising subsumption- or disjointness-violations. Our evaluation on the ChEBI ontology shows that the semantic loss is able to decrease the number of consistency violations by several orders of magnitude without decreasing the classification performance. In addition, we use the semantic loss for unsupervised learning. We show that this can further improve consistency on data from a distribution outside the scope of the supervised training.

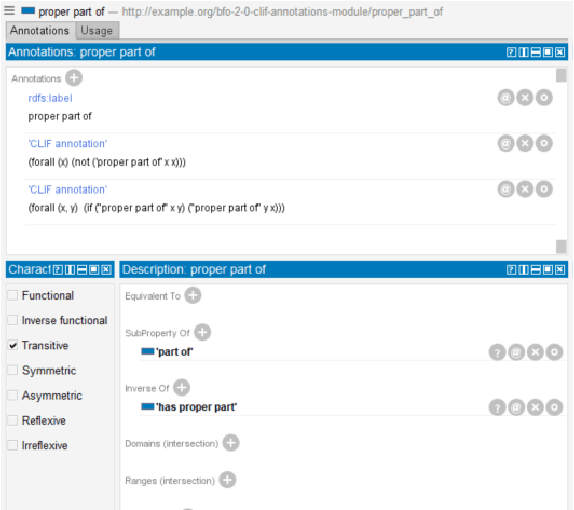

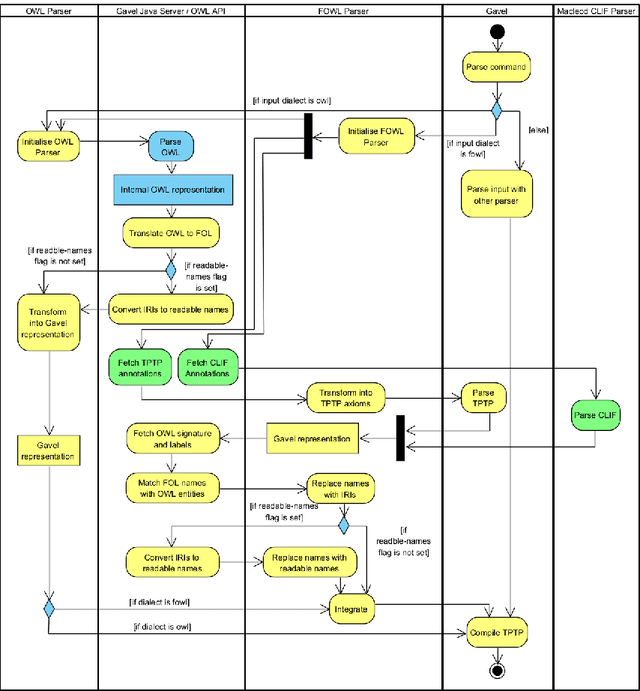

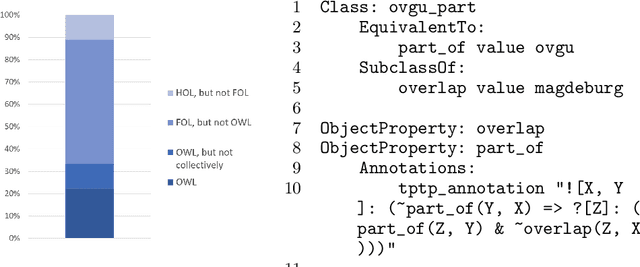

When one Logic is Not Enough: Integrating First-order Annotations in OWL Ontologies

Oct 07, 2022

Abstract:In ontology development, there is a gap between domain ontologies which mostly use the web ontology language, OWL, and foundational ontologies written in first-order logic, FOL. To bridge this gap, we present Gavel, a tool that supports the development of heterogeneous 'FOWL' ontologies that extend OWL with FOL annotations, and is able to reason over the combined set of axioms. Since FOL annotations are stored in OWL annotations, FOWL ontologies remain compatible with the existing OWL infrastructure. We show that for the OWL domain ontology OBI, the stronger integration with its FOL top-level ontology BFO via our approach enables us to detect several inconsistencies. Furthermore, existing OWL ontologies can benefit from FOL annotations. We illustrate this with FOWL ontologies containing mereotopological axioms that enable new meaningful inferences. Finally, we show that even for large domain ontologies such as ChEBI, automatic reasoning with FOL annotations can be used to detect previously unnoticed errors in the classification.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge