Tiffany Yau

PePScenes: A Novel Dataset and Baseline for Pedestrian Action Prediction in 3D

Dec 14, 2020

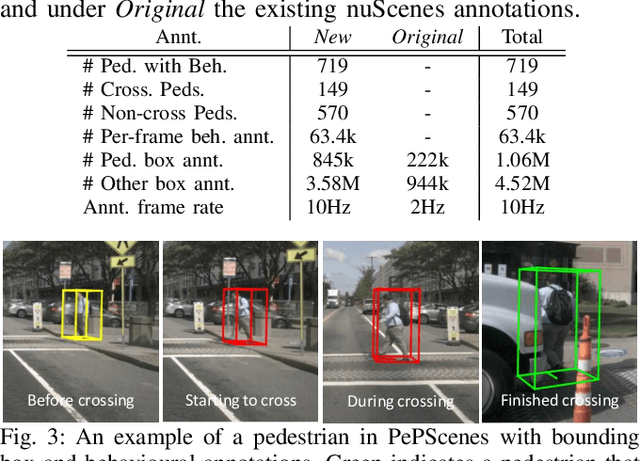

Abstract: Predicting the behavior of road users, particularly pedestrians, is vital for safe motion planning in the context of autonomous driving systems. Traditionally, pedestrian behavior prediction has been realized in terms of forecasting future trajectories. However, recent evidence suggests that predicting higher-level actions, such as crossing the road, can help improve trajectory forecasting and planning tasks accordingly. There are a number of existing datasets that cater to the development of pedestrian action prediction algorithms; however, they lack certain characteristics, such as bird's eye view semantic map information and 3D locations of objects in the scene, which are crucial in the autonomous driving context. To this end, we propose a new pedestrian action prediction dataset created by adding per-frame 2D/3D bounding box and behavioral annotations to the popular autonomous driving dataset, nuScenes. In addition, we propose a hybrid neural network architecture that incorporates various data modalities for predicting pedestrian crossing action. By evaluating our model on the newly proposed dataset, we reveal the contribution of different data modalities to the prediction task. The dataset is available at https://github.com/huawei-noah/PePScenes.

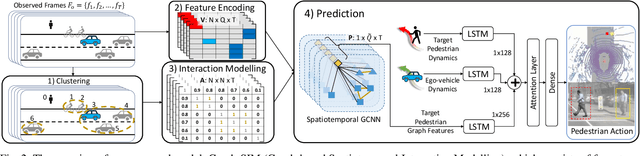

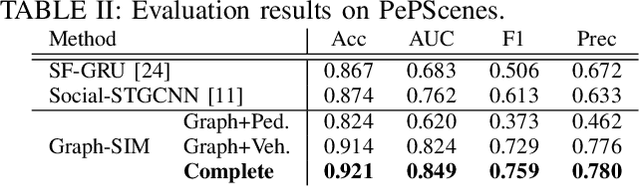

Graph-SIM: A Graph-based Spatiotemporal Interaction Modelling for Pedestrian Action Prediction

Dec 03, 2020

Abstract: One of the most crucial yet challenging tasks for autonomous vehicles in urban environments is predicting the future behaviour of nearby pedestrians, especially at points of crossing. Predicting behaviour depends on many social and environmental factors, particularly interactions between road users. Capturing such interactions requires a global view of the scene and dynamics of the road users in three-dimensional space. This information, however, is missing from the current pedestrian behaviour benchmark datasets. Motivated by these challenges, we make two contributions. First, we propose a novel graph-based model for predicting pedestrian crossing action. Our method models pedestrians' interactions with nearby road users through clustering and relative importance weighting of interactions using features obtained from the bird's-eye view. Second, we introduce a new dataset that provides 3D bounding box and pedestrian behavioural annotations for the existing nuScenes dataset. On the new data, our approach achieves state-of-the-art performance by improving on various metrics by more than 10% in comparison to existing methods. Upon publication of this paper, our dataset will be made publicly available.

Multi-Modal Hybrid Architecture for Pedestrian Action Prediction

Nov 16, 2020

Abstract: Pedestrian behavior prediction is one of the major challenges for intelligent driving systems in urban environments. Pedestrians often exhibit a wide range of behaviors, and interpreting them adequately depends on various sources of information, such as pedestrian appearance, states of other road users, and the environment layout. To address this problem, we propose a novel multi-modal prediction algorithm that incorporates different sources of information captured from the environment to predict future crossing actions of pedestrians. The proposed model benefits from a hybrid learning architecture consisting of feedforward and recurrent networks for analyzing visual features of the environment and dynamics of the scene. Using the existing 2D pedestrian behavior benchmarks and a newly annotated 3D driving dataset, we show that our proposed model achieves state-of-the-art performance in pedestrian crossing prediction.
