Tie Luo

Unlocking Neural Transparency: Jacobian Maps for Explainable AI in Alzheimer's Detection

Apr 04, 2025

Abstract:Alzheimer's disease (AD) leads to progressive cognitive decline, making early detection crucial for effective intervention. While deep learning models have shown high accuracy in AD diagnosis, their lack of interpretability limits clinical trust and adoption. This paper introduces a novel pre-model approach leveraging Jacobian Maps (JMs) within a multi-modal framework to enhance explainability and trustworthiness in AD detection. By capturing localized brain volume changes, JMs establish meaningful correlations between model predictions and well-known neuroanatomical biomarkers of AD. We validate JMs through experiments comparing a 3D CNN trained on JMs versus on traditional preprocessed data, which demonstrates superior accuracy. We also employ 3D Grad-CAM analysis to provide both visual and quantitative insights, further showcasing improved interpretability and diagnostic reliability.
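To make "localized brain volume changes" concrete, the following is a minimal sketch of a generic Jacobian determinant map, not the paper's pipeline. It assumes a deformation phi(x) = x + u(x) given as a displacement field `displacement` of shape (3, X, Y, Z) in voxel units; the function name and that layout are illustrative assumptions. Values above 1 indicate local expansion and values below 1 indicate local shrinkage.

```python
import numpy as np


def jacobian_determinant_map(displacement):
    """Voxel-wise det(I + grad u) for a displacement field u of shape (3, X, Y, Z).

    Assumption: the deformation is phi(x) = x + u(x), expressed in voxel units.
    """
    jac = np.empty(displacement.shape[1:] + (3, 3))
    for i in range(3):
        # np.gradient returns one array per spatial axis: d u_i / d x_j.
        grads = np.gradient(displacement[i])
        for j in range(3):
            jac[..., i, j] = grads[j] + (1.0 if i == j else 0.0)
    return np.linalg.det(jac)


# A uniform 10% stretch along every axis scales local volume by 1.1 ** 3.
grid = np.stack(np.meshgrid(np.arange(4.0), np.arange(4.0), np.arange(4.0), indexing="ij"))
volume_change = jacobian_determinant_map(0.1 * grid)
```

In practice the displacement field would come from a registration tool; that step is outside this sketch.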

Enabling Heterogeneous Adversarial Transferability via Feature Permutation Attacks

Mar 26, 2025

Abstract:Adversarial attacks in black-box settings are highly practical, with transfer-based attacks being the most effective at generating adversarial examples (AEs) that transfer from surrogate models to unseen target models. However, their performance significantly degrades when transferring across heterogeneous architectures -- such as CNNs, MLPs, and Vision Transformers (ViTs) -- due to fundamental architectural differences. To address this, we propose Feature Permutation Attack (FPA), a zero-FLOP, parameter-free method that enhances adversarial transferability across diverse architectures. FPA introduces a novel feature permutation (FP) operation, which rearranges pixel values in selected feature maps to simulate long-range dependencies, effectively making CNNs behave more like ViTs and MLPs. This enhances feature diversity and improves transferability both across heterogeneous architectures and within homogeneous CNNs. Extensive evaluations on 14 state-of-the-art architectures show that FPA achieves maximum absolute gains in attack success rates of 7.68% on CNNs, 14.57% on ViTs, and 14.48% on MLPs, outperforming existing black-box attacks. Additionally, FPA is highly generalizable and can seamlessly integrate with other transfer-based attacks to further boost their performance. Our findings establish FPA as a robust, efficient, and computationally lightweight strategy for enhancing adversarial transferability across heterogeneous architectures.
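The feature permutation idea can be illustrated with a small sketch. This is not the authors' implementation: the abstract does not specify how feature maps are selected or which permutation pattern is used, so the random channel selection, the uniformly random spatial shuffle, and the `ratio` parameter below are all assumptions. The point it shows is that the operation only moves values around and performs no arithmetic on them.

```python
import numpy as np


def feature_permutation(features, ratio=0.5, rng=None):
    """Shuffle spatial positions inside a random subset of feature maps.

    features: array of shape (C, H, W). ratio: fraction of channels to permute
    (hypothetical parameter). Values are only moved, never recomputed.
    """
    rng = np.random.default_rng() if rng is None else rng
    channels, height, width = features.shape
    out = features.copy()
    n_selected = int(round(ratio * channels))
    selected = rng.choice(channels, size=n_selected, replace=False)
    for ch in selected:
        shuffled = rng.permutation(out[ch].reshape(-1))
        out[ch] = shuffled.reshape(height, width)
    return out


feature_maps = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)
permuted = feature_permutation(feature_maps, ratio=1.0, rng=np.random.default_rng(0))
```

In a surrogate model, such an operation would be applied to intermediate activations during the attack's forward pass; where exactly is a design choice the abstract leaves open.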

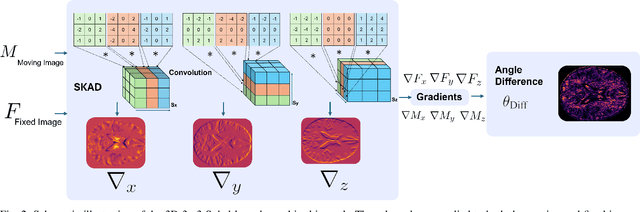

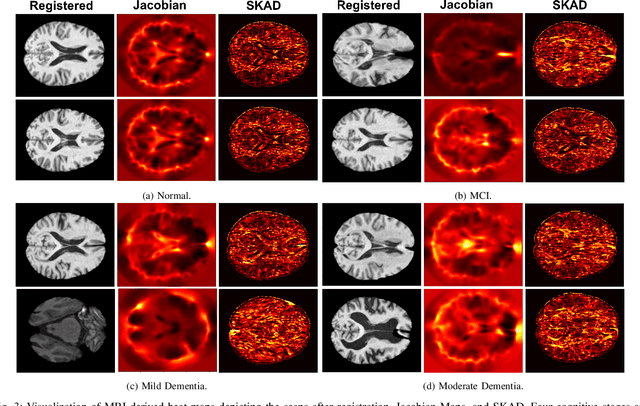

Efficient Brain Imaging Analysis for Alzheimer's and Dementia Detection Using Convolution-Derivative Operations

Nov 20, 2024

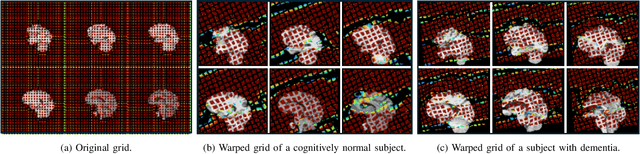

Abstract:Alzheimer's disease (AD) is characterized by progressive neurodegeneration and results in detrimental structural changes in human brains. Detecting these changes is crucial for early diagnosis and timely intervention in disease progression. Jacobian maps, derived from spatial normalization in voxel-based morphometry (VBM), have been instrumental in interpreting volume alterations associated with AD. However, the computational cost of generating Jacobian maps limits their clinical adoption. In this study, we explore alternative methods and propose Sobel kernel angle difference (SKAD) as a computationally efficient alternative. SKAD is a derivative operation that offers an optimized approach to quantifying volumetric alterations through localized analysis of the gradients. By efficiently extracting gradient amplitude changes at critical spatial regions, this derivative operation captures regional volume variations. Evaluation of SKAD over various medical datasets demonstrates that it is 6.3x faster than Jacobian maps while still maintaining comparable accuracy. This makes it an efficient and competitive approach in neuroimaging research and clinical practice.
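The abstract does not define SKAD precisely, so the following is only one possible reading of a "Sobel kernel angle difference", sketched in 2D for brevity: compute Sobel gradient directions for a scan and for a reference image, then take the wrapped per-pixel angle difference. The function names, the edge padding, and the use of a reference image are assumptions, not details from the paper.

```python
import numpy as np

SOBEL_X = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def filter3x3(image, kernel):
    """Cross-correlate a 2D image with a 3x3 kernel, replicating border pixels."""
    padded = np.pad(image, 1, mode="edge")
    height, width = image.shape
    out = np.zeros((height, width))
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + height, dj:dj + width]
    return out


def sobel_angle(image):
    """Gradient direction in radians at every pixel."""
    return np.arctan2(filter3x3(image, SOBEL_Y), filter3x3(image, SOBEL_X))


def angle_difference(image, reference):
    """Per-pixel gradient-angle difference, wrapped to [-pi, pi]."""
    diff = sobel_angle(image) - sobel_angle(reference)
    return np.arctan2(np.sin(diff), np.cos(diff))


# Two intensity ramps at right angles differ by pi / 2 everywhere.
ramp_cols = np.tile(np.arange(5.0), (5, 1))
ramp_rows = ramp_cols.T
skad_like = angle_difference(ramp_rows, ramp_cols)
```

Unlike a Jacobian map, nothing here requires a registration step, which is consistent with the speedup the abstract reports, though the actual source of that speedup is not stated.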

Adversarial-Robust Transfer Learning for Medical Imaging via Domain Assimilation

Feb 25, 2024

Abstract:In the field of Medical Imaging, extensive research has been dedicated to leveraging its potential in uncovering critical diagnostic features in patients. Artificial Intelligence (AI)-driven medical diagnosis relies on sophisticated machine learning and deep learning models to analyze, detect, and identify diseases from medical images. Despite the remarkable performance of these models, characterized by high accuracy, they grapple with trustworthiness issues. The introduction of a subtle perturbation to the original image empowers adversaries to manipulate the prediction output, redirecting it to other targeted or untargeted classes. Furthermore, the scarcity of publicly available medical images, constituting a bottleneck for reliable training, has led contemporary algorithms to depend on pretrained models grounded on a large set of natural images -- a practice referred to as transfer learning. However, a significant *domain discrepancy* exists between natural and medical images, which causes AI models resulting from transfer learning to exhibit heightened *vulnerability* to adversarial attacks. This paper proposes a *domain assimilation* approach that introduces texture and color adaptation into transfer learning, followed by a texture preservation component to suppress undesired distortion. We systematically analyze the performance of transfer learning in the face of various adversarial attacks under different data modalities, with the overarching goal of fortifying the model's robustness and security in medical imaging tasks. The results demonstrate high effectiveness in reducing attack efficacy, contributing toward more trustworthy transfer learning in biomedical applications.

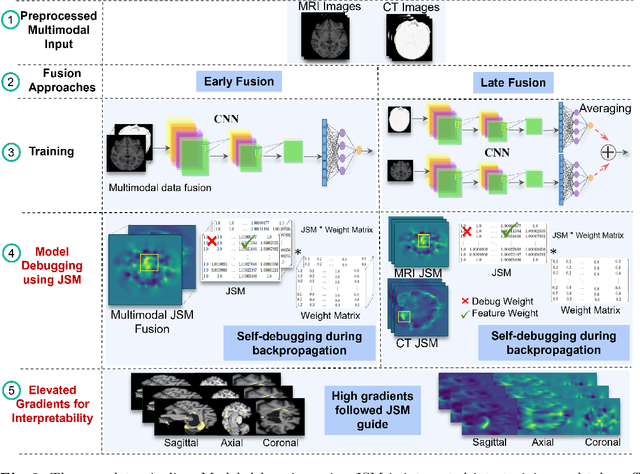

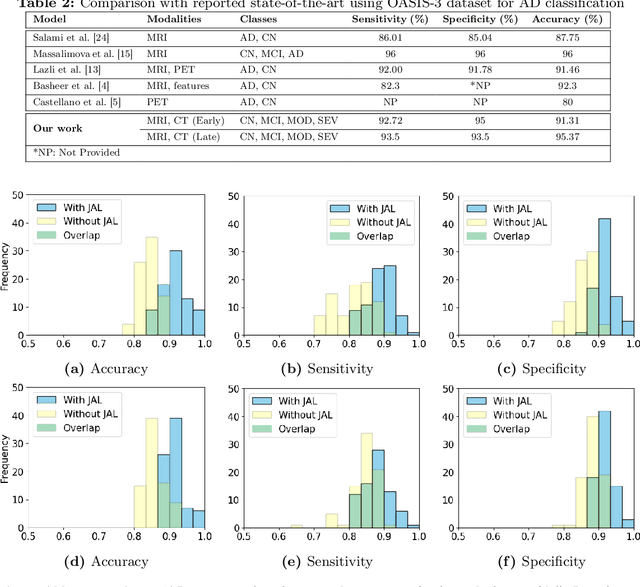

Unmasking Dementia Detection by Masking Input Gradients: A JSM Approach to Model Interpretability and Precision

Feb 25, 2024

Abstract:The evolution of deep learning and artificial intelligence has significantly reshaped technological landscapes. However, their effective application in crucial sectors such as medicine demands not just superior performance but trustworthiness as well. While interpretability plays a pivotal role, existing explainable AI (XAI) approaches often do not reveal *Clever Hans* behavior where a model makes (ungeneralizable) correct predictions using spurious correlations or biases in data. Likewise, current post-hoc XAI methods are susceptible to generating unjustified counterfactual examples. In this paper, we approach XAI with an innovative *model debugging* methodology realized through Jacobian Saliency Map (JSM). To cast the problem into a concrete context, we employ Alzheimer's disease (AD) diagnosis as the use case, motivated by its significant impact on human lives and the formidable challenge in its early detection, stemming from the intricate nature of its progression. We introduce an interpretable, multimodal model for AD classification over its multi-stage progression, incorporating JSM as a modality-agnostic tool that provides insights into volumetric changes indicative of brain abnormalities. Our extensive evaluation, including an ablation study, demonstrates the efficacy of using JSM for model debugging and interpretation, while significantly enhancing model accuracy as well.

Stitching Satellites to the Edge: Pervasive and Efficient Federated LEO Satellite Learning

Jan 28, 2024

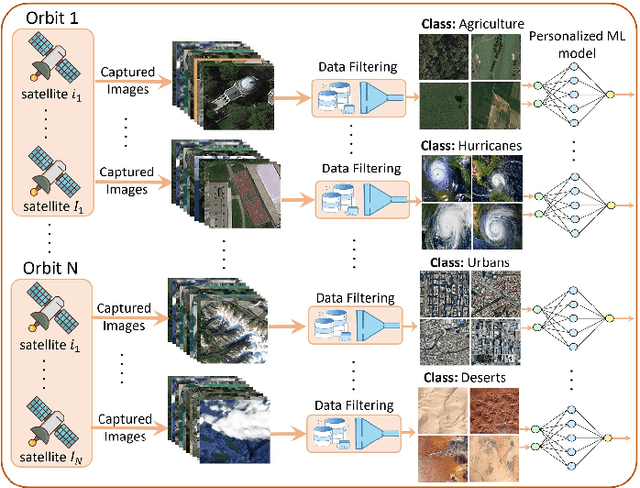

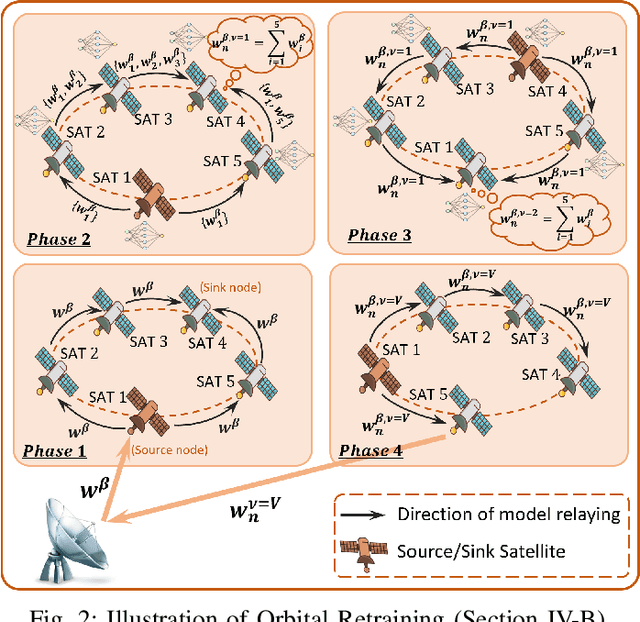

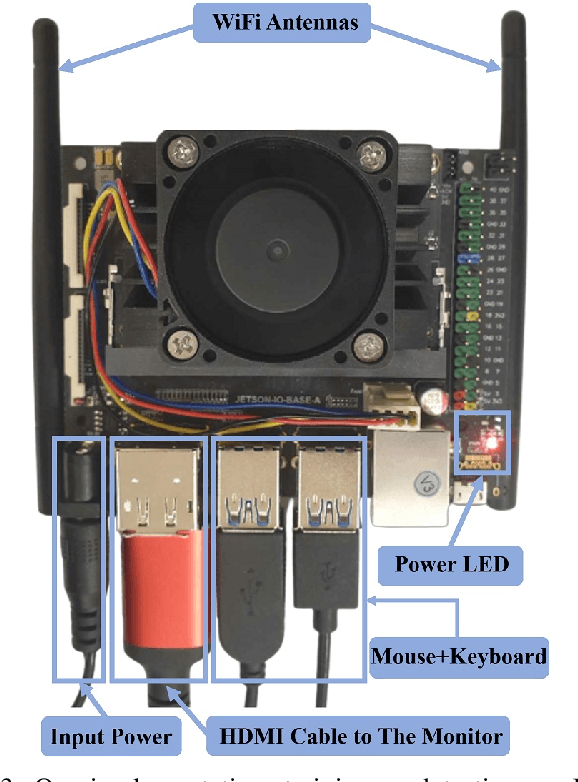

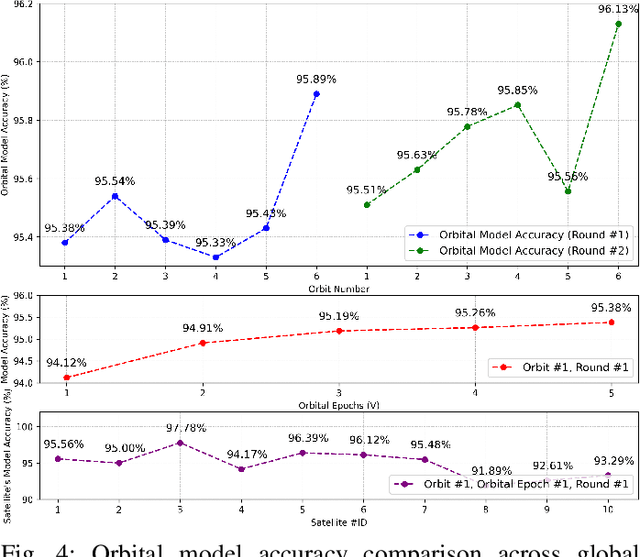

Abstract:In the ambitious realm of space AI, the integration of federated learning (FL) with low Earth orbit (LEO) satellite constellations holds immense promise. However, many challenges persist in terms of feasibility, learning efficiency, and convergence. These hurdles stem from the bottleneck in communication, characterized by sporadic and irregular connectivity between LEO satellites and ground stations, coupled with the limited computation capability of satellite edge computing (SEC). This paper proposes a novel FL-SEC framework that empowers LEO satellites to execute large-scale machine learning (ML) tasks onboard efficiently. Its key components include i) personalized learning via divide-and-conquer, which identifies and eliminates redundant satellite images and converts complex multi-class classification problems to simple binary classification, enabling rapid and energy-efficient training of lightweight ML models suitable for IoT/edge devices on satellites; ii) orbital model retraining, which generates an aggregated "orbital model" per orbit and retrains it before sending to the ground station, significantly reducing the required communication rounds. We conducted experiments using Jetson Nano, an edge device closely mimicking the limited compute on LEO satellites, and a real satellite dataset. The results underscore the effectiveness of our approach, highlighting SEC's ability to run lightweight ML models on real and high-resolution satellite imagery. Our approach dramatically reduces FL convergence time by nearly 30 times, and satellite energy consumption down to as low as 1.38 watts, all while maintaining an exceptional accuracy of up to 96%.
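The per-orbit aggregation in component ii) can be sketched as a sample-weighted average of the satellite models within each orbit. This is the standard federated-averaging rule and is an assumption here; the abstract does not state the weighting used, and the retraining step that follows aggregation is omitted. The names `aggregate_orbit`, `orbital_models`, and the update layout are hypothetical.

```python
def aggregate_orbit(models, sample_counts):
    """Sample-weighted average of flat weight lists from one orbit."""
    total = sum(sample_counts)
    n_params = len(models[0])
    return [
        sum(model[k] * count for model, count in zip(models, sample_counts)) / total
        for k in range(n_params)
    ]


def orbital_models(updates):
    """updates maps orbit id -> list of (weights, n_samples) from its satellites."""
    return {
        orbit: aggregate_orbit([w for w, _ in sats], [n for _, n in sats])
        for orbit, sats in updates.items()
    }


updates = {
    "orbit-A": [([1.0, 2.0], 1), ([3.0, 4.0], 3)],
    "orbit-B": [([0.0, 0.0], 5)],
}
per_orbit = orbital_models(updates)
# per_orbit["orbit-A"] == [2.5, 3.5]
```

Sending one model per orbit instead of one per satellite is what reduces the number of satellite-to-ground exchanges in this sketch.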

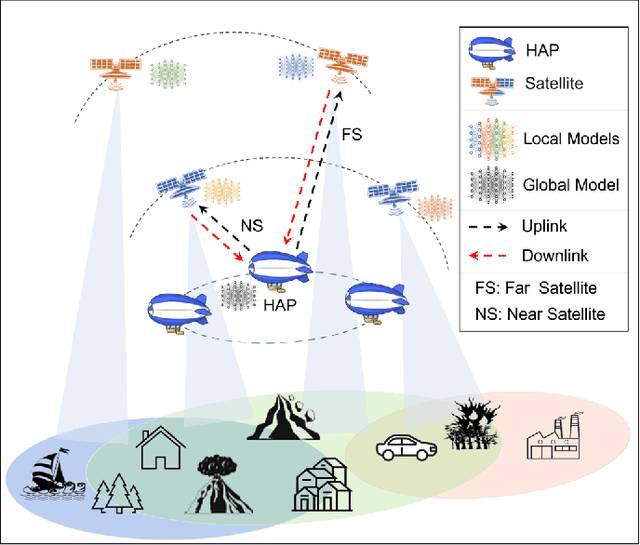

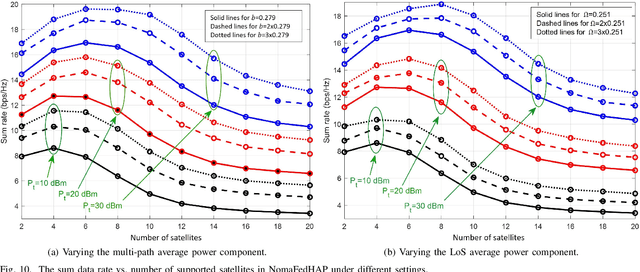

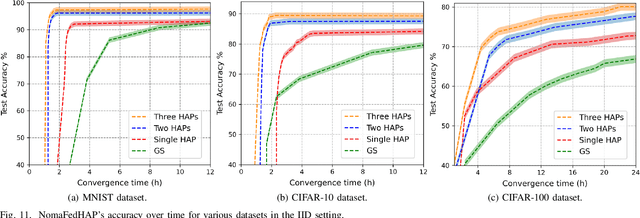

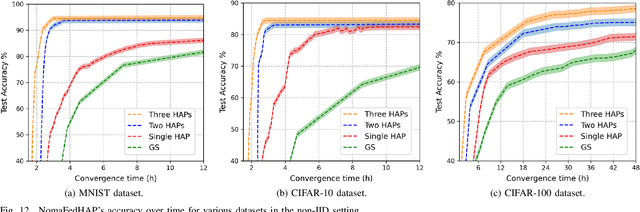

Communication-Efficient Federated Learning for LEO Constellations Integrated with HAPs Using Hybrid NOMA-OFDM

Jan 01, 2024

Abstract:Space AI has become increasingly important and sometimes even necessary for government, businesses, and society. An active research topic under this mission is integrating federated learning (FL) with satellite communications (SatCom) so that numerous low Earth orbit (LEO) satellites can collaboratively train a machine learning model. However, the special communication environment of SatCom leads to a very slow FL training process that can take days or even weeks. This paper proposes NomaFedHAP, a novel FL-SatCom approach tailored to LEO satellites, that (1) utilizes high-altitude platforms (HAPs) as distributed parameter servers (PS) to enhance satellite visibility, and (2) introduces non-orthogonal multiple access (NOMA) into LEO to enable fast and bandwidth-efficient model transmissions. In addition, NomaFedHAP includes (3) a new communication topology that exploits HAPs to bridge satellites among different orbits to mitigate the Doppler shift, and (4) a new FL model aggregation scheme that optimally balances models between different orbits and shells. Moreover, we (5) derive a closed-form expression of the outage probability for satellites in near and far shells, as well as for the entire system. Our extensive simulations have validated the mathematical analysis and demonstrated the superior performance of NomaFedHAP in achieving fast and efficient FL model convergence with high accuracy as compared to the state-of-the-art.

CR-SAM: Curvature Regularized Sharpness-Aware Minimization

Dec 23, 2023

Abstract:The capacity to generalize to future unseen data stands as one of the most crucial attributes of deep neural networks. Sharpness-Aware Minimization (SAM) aims to enhance the generalizability by minimizing worst-case loss using one-step gradient ascent as an approximation. However, as training progresses, the non-linearity of the loss landscape increases, rendering one-step gradient ascent less effective. On the other hand, multi-step gradient ascent will incur higher training cost. In this paper, we introduce a normalized Hessian trace to accurately measure the curvature of loss landscape on *both* training and test sets. In particular, to counter excessive non-linearity of loss landscape, we propose Curvature Regularized SAM (CR-SAM), integrating the normalized Hessian trace as a SAM regularizer. Additionally, we present an efficient way to compute the trace via finite differences with parallelism. Our theoretical analysis based on PAC-Bayes bounds establishes the regularizer's efficacy in reducing generalization error. Empirical evaluation on CIFAR and ImageNet datasets shows that CR-SAM consistently enhances classification performance for ResNet and Vision Transformer (ViT) models across various datasets. Our code is available at https://github.com/TrustAIoT/CR-SAM.
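As a rough illustration of computing a Hessian trace "via finite differences", the sketch below uses a generic Hutchinson-style estimator with a central second difference along random sign vectors. It is not the normalized trace or the parallel scheme from the paper; the function name, the probe count, and the step size `eps` are assumptions. Each probe needs only two extra loss evaluations and no second derivatives.

```python
import random


def hessian_trace_fd(loss, w, eps=1e-3, n_probes=64, seed=0):
    """Estimate tr(H) of loss at w as the mean of v^T H v over random sign vectors v.

    v^T H v is approximated by (L(w + eps v) + L(w - eps v) - 2 L(w)) / eps^2.
    """
    rng = random.Random(seed)
    base = loss(w)
    total = 0.0
    for _ in range(n_probes):
        v = [rng.choice((-1.0, 1.0)) for _ in w]
        plus = loss([wi + eps * vi for wi, vi in zip(w, v)])
        minus = loss([wi - eps * vi for wi, vi in zip(w, v)])
        total += (plus + minus - 2.0 * base) / eps ** 2
    return total / n_probes


def quadratic(w):
    # Hessian is diag(2, 6), so the exact trace is 8.
    return w[0] ** 2 + 3.0 * w[1] ** 2


trace_estimate = hessian_trace_fd(quadratic, [1.0, -2.0])
```

The probes are independent of one another, which is the property that makes this kind of estimator easy to evaluate in parallel.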

LRS: Enhancing Adversarial Transferability through Lipschitz Regularized Surrogate

Dec 20, 2023

Abstract:The transferability of adversarial examples is of central importance to transfer-based black-box adversarial attacks. Previous works for generating transferable adversarial examples focus on attacking *given* pretrained surrogate models while the connections between surrogate models and adversarial transferability have been overlooked. In this paper, we propose *Lipschitz Regularized Surrogate* (LRS) for transfer-based black-box attacks, a novel approach that transforms surrogate models towards favorable adversarial transferability. Using such transformed surrogate models, any existing transfer-based black-box attack can run without any change, yet achieve much better performance. Specifically, we impose Lipschitz regularization on the loss landscape of surrogate models to enable a smoother and more controlled optimization process for generating more transferable adversarial examples. In addition, this paper also sheds light on the connection between the inner properties of surrogate models and adversarial transferability, where three factors are identified: smaller local Lipschitz constant, smoother loss landscape, and stronger adversarial robustness. We evaluate our proposed LRS approach by attacking state-of-the-art standard deep neural networks and defense models. The results demonstrate significant improvement on the attack success rates and transferability. Our code is available at https://github.com/TrustAIoT/LRS.

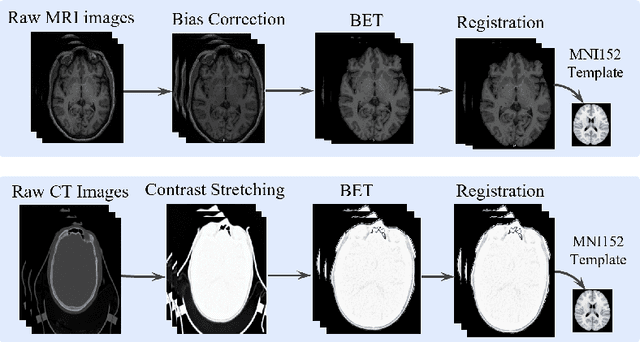

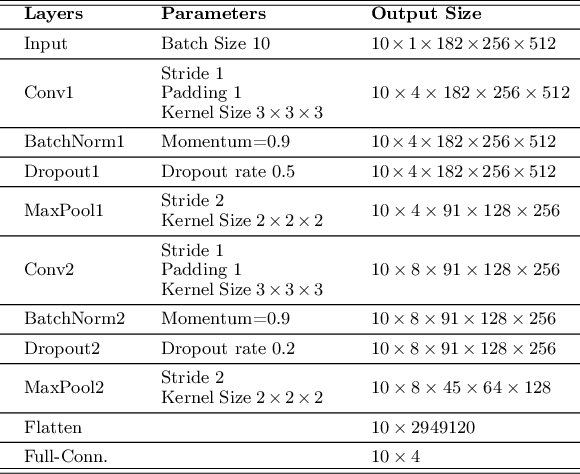

Diagnosing Alzheimer's Disease using Early-Late Multimodal Data Fusion with Jacobian Maps

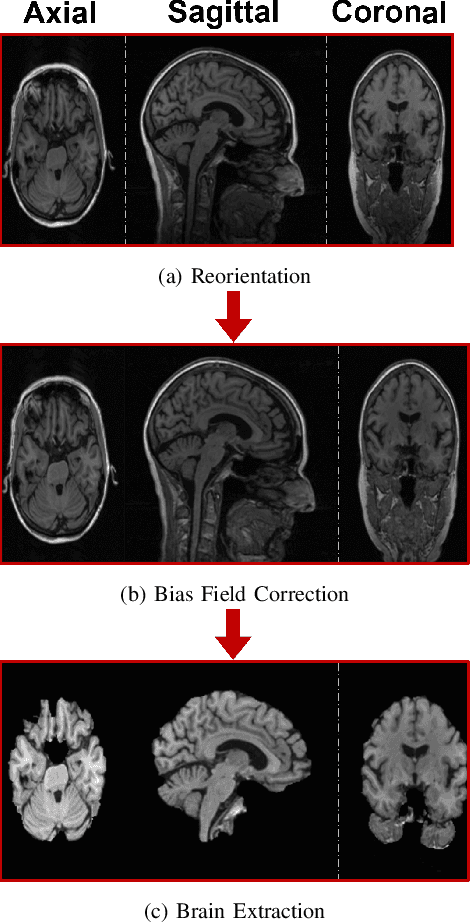

Oct 27, 2023

Abstract:Alzheimer's disease (AD) is a prevalent and debilitating neurodegenerative disorder impacting a large aging population. Detecting AD in all its presymptomatic and symptomatic stages is crucial for early intervention and treatment. An active research direction is to explore machine learning methods that harness multimodal data fusion to outperform human inspection of medical scans. However, existing multimodal fusion models have limitations, including redundant computation, complex architecture, and simplistic handling of missing data. Moreover, the preprocessing pipelines of medical scans remain inadequately detailed and are seldom optimized for individual subjects. In this paper, we propose an efficient early-late fusion (ELF) approach, which leverages a convolutional neural network for automated feature extraction and random forests for their competitive performance on small datasets. Additionally, we introduce a robust preprocessing pipeline that adapts to the unique characteristics of individual subjects and makes use of whole brain images rather than slices or patches. Moreover, to tackle the challenge of detecting subtle changes in brain volume, we transform images into the Jacobian domain (JD) to enhance both accuracy and robustness in our classification. Using MRI and CT images from the OASIS-3 dataset, our experiments demonstrate the effectiveness of the ELF approach in classifying AD into four stages with an accuracy of 97.19%.
