Tianwei Lan

LLM-Driven Feature-Level Adversarial Attacks on Android Malware Detectors

Dec 24, 2025

Abstract: The rapid growth in both the scale and complexity of Android malware has driven the widespread adoption of machine learning (ML) techniques for scalable and accurate malware detection. Despite their effectiveness, these models remain vulnerable to adversarial attacks that introduce carefully crafted feature-level perturbations to evade detection while preserving malicious functionality. In this paper, we present LAMLAD, a novel adversarial attack framework that exploits the generative and reasoning capabilities of large language models (LLMs) to bypass ML-based Android malware classifiers. LAMLAD employs a dual-agent architecture composed of an LLM manipulator, which generates realistic and functionality-preserving feature perturbations, and an LLM analyzer, which guides the perturbation process toward successful evasion. To improve efficiency and contextual awareness, LAMLAD integrates retrieval-augmented generation (RAG) into the LLM pipeline. Focusing on Drebin-style feature representations, LAMLAD enables stealthy and high-confidence attacks against widely deployed Android malware detection systems. We evaluate LAMLAD against three representative ML-based Android malware detectors and compare its performance with two state-of-the-art adversarial attack methods. Experimental results demonstrate that LAMLAD achieves an attack success rate (ASR) of up to 97%, requiring on average only three attempts per adversarial sample, highlighting its effectiveness, efficiency, and adaptability in practical adversarial settings. Furthermore, we propose an adversarial training-based defense strategy that reduces the ASR by more than 30% on average, significantly enhancing model robustness against LAMLAD-style attacks.

Trust Under Siege: Label Spoofing Attacks against Machine Learning for Android Malware Detection

Mar 14, 2025

Abstract: Machine learning (ML) malware detectors rely heavily on crowd-sourced AntiVirus (AV) labels, with platforms like VirusTotal serving as a trusted source of malware annotations. But what if attackers could manipulate these labels to classify benign software as malicious? We introduce label spoofing attacks, a new threat that contaminates crowd-sourced datasets by embedding minimal and undetectable malicious patterns into benign samples. These patterns coerce AV engines into misclassifying legitimate files as harmful, enabling poisoning attacks against ML-based malware classifiers trained on those data. We demonstrate this scenario by developing AndroVenom, a methodology for polluting realistic data sources, which in turn causes poisoning attacks against ML malware detectors. Experiments show that state-of-the-art feature extractors are unable to filter such injections, and that various ML models suffer Denial of Service with as little as 1% poisoned samples. Additionally, attackers can flip the decisions on specific unaltered benign samples by modifying only 0.015% of the training data, threatening the reputation and market share of the targeted benign software; anomaly detectors applied to the training data are unable to stop this. We conclude by raising the alarm on the trustworthiness of training processes based on AV annotations, which calls for further investigation into how to produce proper labels for ML malware detectors.

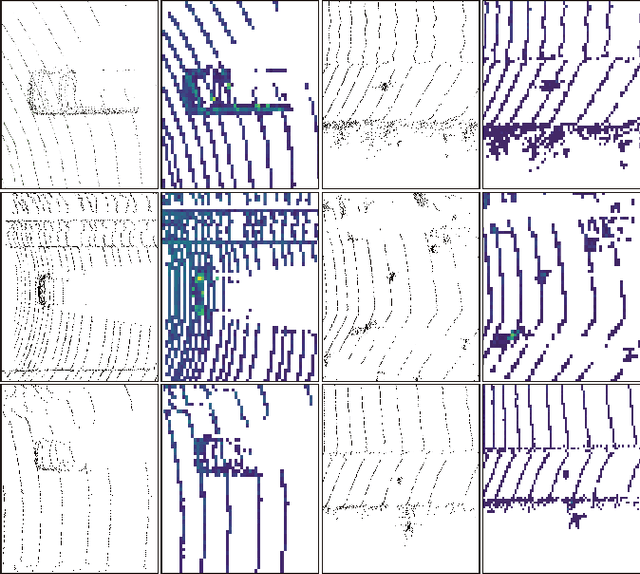

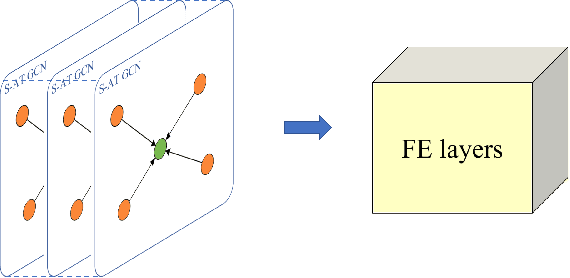

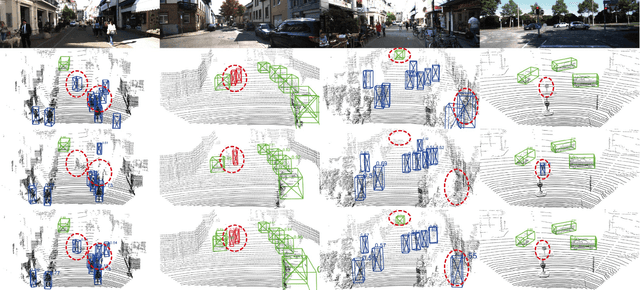

S-AT GCN: Spatial-Attention Graph Convolution Network based Feature Enhancement for 3D Object Detection

Mar 15, 2021

Abstract: 3D object detection plays a crucial role in environmental perception for autonomous vehicles and is a prerequisite for decision and control. This paper analyses the inherent drawbacks of partition-based methods. In the partition operation, a single instance such as a pedestrian is sliced into several pieces, which we call the partition effect. We propose the Spatial-Attention Graph Convolution (S-AT GCN), which forms the Feature Enhancement (FE) layers, to overcome this drawback. The S-AT GCN uses graph convolution and a spatial attention mechanism to extract local geometrical structure features, giving the network more meaningful features for the foreground. Our experiments on the KITTI 3D object and bird's eye view detection benchmarks show that S-AT Conv and FE layers are effective, especially for small objects. FE layers boost 3D mAP by 3.62% for the pedestrian class and by 4.21% for the cyclist class. The time cost of these extra FE layers is limited: PointPillars with FE layers can achieve 48 FPS, satisfying the real-time requirement.
