Tianjian Xu

Towards Subcentimeter Accuracy Digital-Twin Tracking via An RGBD-based Transformer Model and A Comprehensive Mobile Dataset

Sep 24, 2023

Abstract: The potential of digital twin technology, involving the creation of precise digital replicas of physical objects, to reshape AR experiences in 3D object tracking and localization scenarios is significant. However, enabling 3D object tracking with subcentimeter accuracy in dynamic mobile AR environments remains a formidable challenge. These scenarios often require a more robust pose estimator capable of handling the inherent sensor-level measurement noise. In this paper, recognizing the absence of comprehensive solutions in existing literature, we build upon our previous work, the Digital Twin Tracking Dataset (DTTD), to address these challenges in mobile AR settings. Specifically, we propose a transformer-based 6DoF pose estimator designed to withstand the challenges posed by noisy depth data. Simultaneously, we introduce a novel RGBD dataset captured using a cutting-edge mobile sensor, the iPhone 14 Pro, expanding the applicability of our approach to iPhone sensor data. Through extensive experimentation and in-depth analysis, we illustrate the effectiveness of our methods in the face of significant depth data errors, surpassing the performance of existing baselines. Code will be made publicly available.

The EpiBench Platform to Propel AI/ML-based Epidemic Forecasting: A Prototype Demonstration Reaching Human Expert-level Performance

Feb 04, 2021

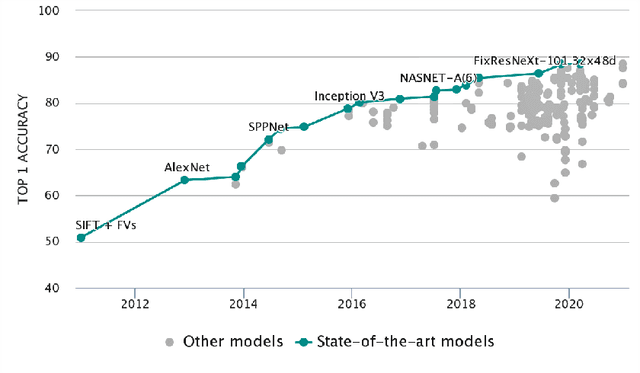

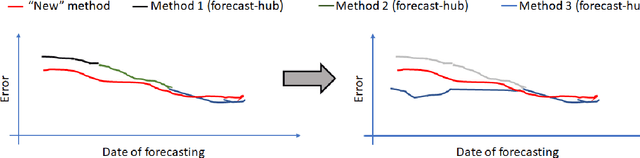

Abstract: During the COVID-19 pandemic, a significant effort has gone into developing ML-driven epidemic forecasting techniques. However, no benchmarks exist to establish whether a new AI/ML technique is better than the existing ones. The "covid-forecast-hub" is a collection of more than 30 teams, including us, that submit their forecasts weekly to the CDC. It is not possible to declare whether one method is better than another using those forecasts, because each team's submissions may correspond to different techniques over the period and involve human interventions as the teams continuously change and tune their approaches. Such forecasts may be considered "human-expert" forecasts and do not qualify as AI/ML approaches, although they can be used as an indicator of human expert performance. We are interested in supporting AI/ML research in epidemic forecasting that can lead to scalable forecasting without human intervention. Which modeling techniques, learning strategies, and data pre-processing techniques work well for epidemic forecasting is still an open problem. To help advance the state of the art in AI/ML applied to epidemiology, a benchmark with a collection of performance points is needed, and the current "state-of-the-art" techniques need to be identified. We propose EpiBench, a platform consisting of community-driven benchmarks for AI/ML applied to epidemic forecasting, to standardize the challenge with a uniform evaluation protocol. In this paper, we introduce a prototype of EpiBench, which is currently running and accepting submissions for the task of forecasting COVID-19 cases and deaths in the US states, and we demonstrate that the prototype can be used to develop an ensemble relying on fully automated epidemic forecasts (no human intervention) that matches the performance of the human-expert-level ensemble currently being used by the CDC.

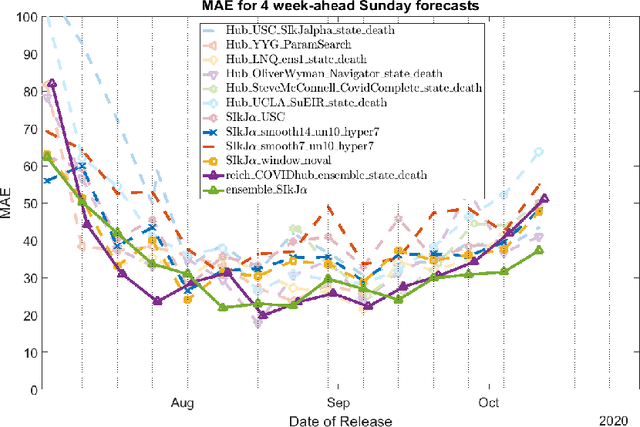

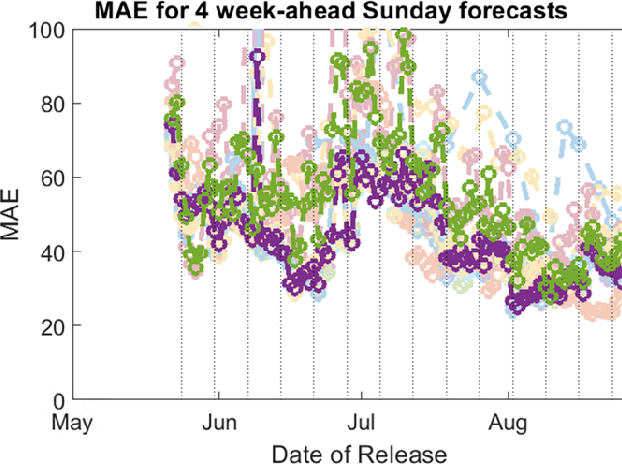

Fast and Accurate Forecasting of COVID-19 Deaths Using the SIkJ$\alpha$ Model

Jul 13, 2020

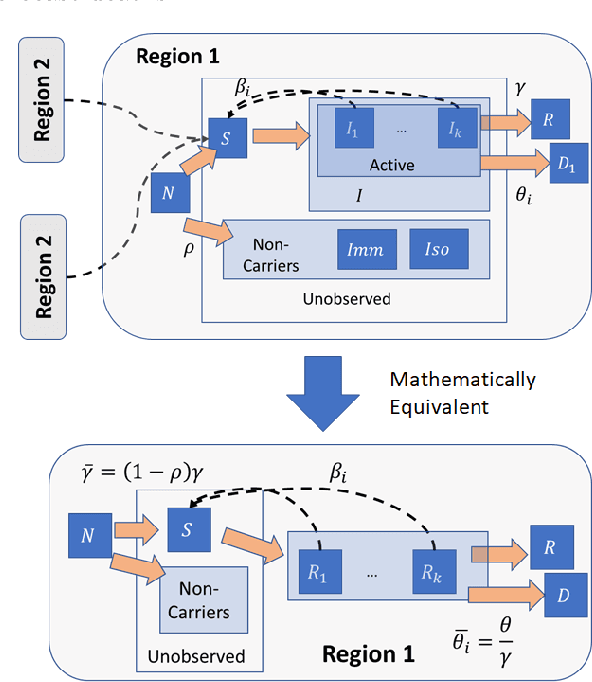

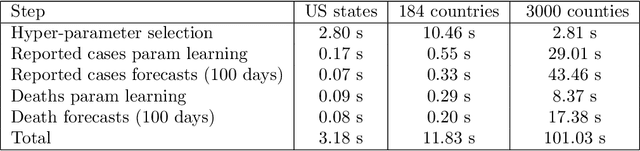

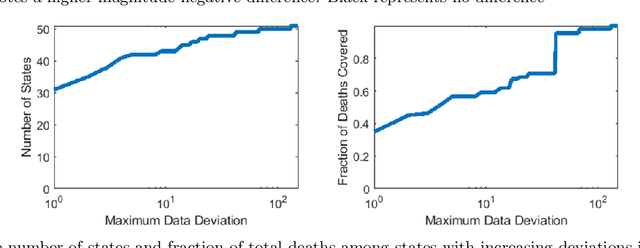

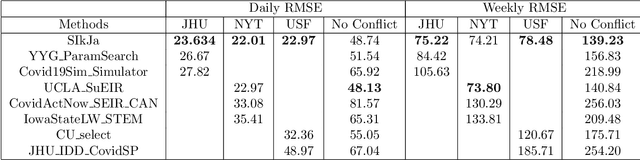

Abstract: Forecasting the effect of COVID-19 is essential to design policies that may prepare us to handle the pandemic. Many methods have already been proposed, particularly to forecast reported cases and deaths at the country and state levels. Many of these methods are based on traditional epidemiological models, which rely on simulations or Bayesian inference to simultaneously learn many parameters at a time. This makes them prone to over-fitting and slow execution. We propose an extension to our model SIkJ$\alpha$ to forecast deaths and show that it can consider the effect of many complexities of the epidemic process and yet be simplified to a few parameters that are learned using fast linear regressions. We also present an evaluation of our method against seven approaches currently being used by the CDC, based on their two-week forecasts at various times during the pandemic. We demonstrate that our method achieves better root mean squared error compared to these seven approaches during the majority of the evaluation period. Further, on a 2-core desktop machine, our approach takes only 3.18s to tune hyper-parameters, learn parameters, and generate 100 days of forecasts of reported cases and deaths for all the states in the US. The total execution time for 184 countries is 11.83s and for all the US counties ($>$ 3000) is 101.03s.
