Thomas Kobber Panum

Exploring Adversarial Robustness of Deep Metric Learning

Feb 14, 2021

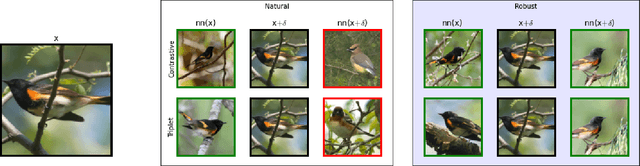

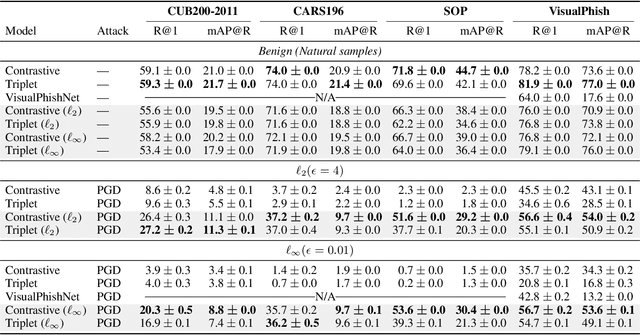

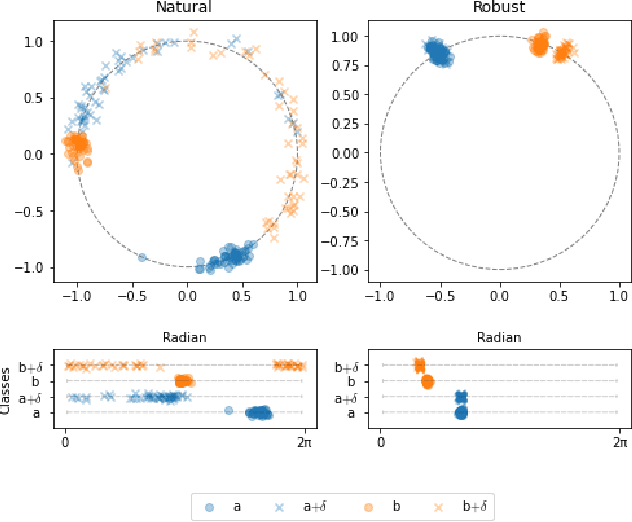

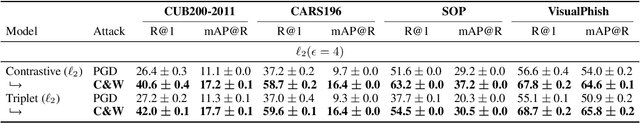

Abstract: Deep Metric Learning (DML), a widely used technique, involves learning a distance metric between pairs of samples. DML uses deep neural architectures to learn semantic embeddings of the input, where the distance between similar examples is small while dissimilar ones are far apart. Although the underlying neural networks achieve good accuracy on naturally occurring samples, they are vulnerable to adversarially perturbed samples that reduce performance. We take a first step towards training robust DML models and tackle the primary challenge: metric losses depend on the other samples in a mini-batch, unlike standard losses, which depend only on the specific input-output pair. We analyze this dependence effect and contribute a robust optimization formulation. Using experiments on three commonly used DML datasets, we demonstrate 5- to 76-fold increases in adversarial accuracy and outperform an existing DML model that sought to be robust.
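
To make the mini-batch dependence concrete, here is a minimal Python sketch. It assumes a batch-hard triplet loss as an example of a metric loss; the abstract does not say which losses the paper uses, and the helpers `anchor_loss` and `batch_hard_triplet_loss` are hypothetical names for illustration only. Adding one extra sample to the batch changes the loss of an anchor whose own embedding did not move.

```python
import math
from typing import List, Optional, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def anchor_loss(i: int, embeddings: List[Sequence[float]], labels: List[int],
                margin: float = 1.0) -> Optional[float]:
    """Batch-hard triplet loss for anchor i (hypothetical helper).

    The hardest positive (farthest same-label sample) and hardest negative
    (closest different-label sample) are mined from the current mini-batch,
    so the loss for anchor i depends on which other samples the batch holds.
    Returns None when the batch has no positive or no negative for anchor i.
    """
    anchor, label = embeddings[i], labels[i]
    pos = [euclidean(anchor, e) for j, (e, l) in enumerate(zip(embeddings, labels))
           if j != i and l == label]
    neg = [euclidean(anchor, e) for e, l in zip(embeddings, labels) if l != label]
    if not pos or not neg:
        return None
    return max(0.0, max(pos) - min(neg) + margin)


def batch_hard_triplet_loss(embeddings: List[Sequence[float]], labels: List[int],
                            margin: float = 1.0) -> float:
    """Mean batch-hard triplet loss over anchors that have a valid triplet."""
    losses = [anchor_loss(i, embeddings, labels, margin) for i in range(len(embeddings))]
    valid = [x for x in losses if x is not None]
    return sum(valid) / len(valid) if valid else 0.0


# Usage: the same anchor (index 0) evaluated in two different mini-batches.
batch_a = [[0.0, 0.0], [1.0, 0.0], [5.0, 0.0], [6.0, 0.0]]
labels_a = [0, 0, 1, 1]
# Same four samples plus one extra class-1 sample placed close to anchor 0.
batch_b = batch_a + [[0.5, 0.0]]
labels_b = labels_a + [1]
print(anchor_loss(0, batch_a, labels_a))  # 0.0
print(anchor_loss(0, batch_b, labels_b))  # 1.5
```

A per-example loss such as cross-entropy would give anchor 0 the same value in both batches; here it does not, which is the dependence the abstract identifies as the main obstacle to adapting standard robust training.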

CAUSE: Learning Granger Causality from Event Sequences using Attribution Methods

Feb 18, 2020

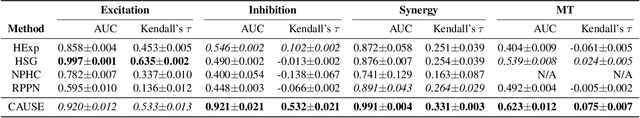

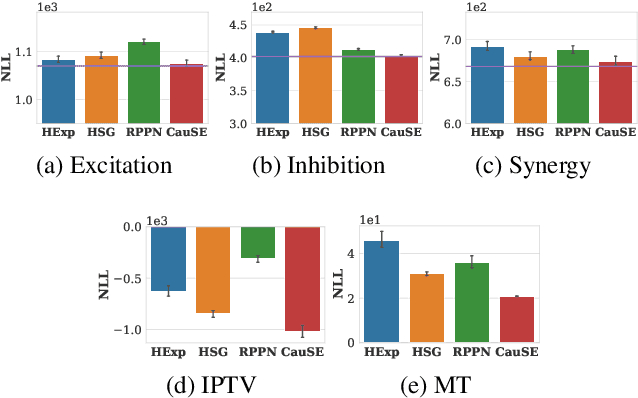

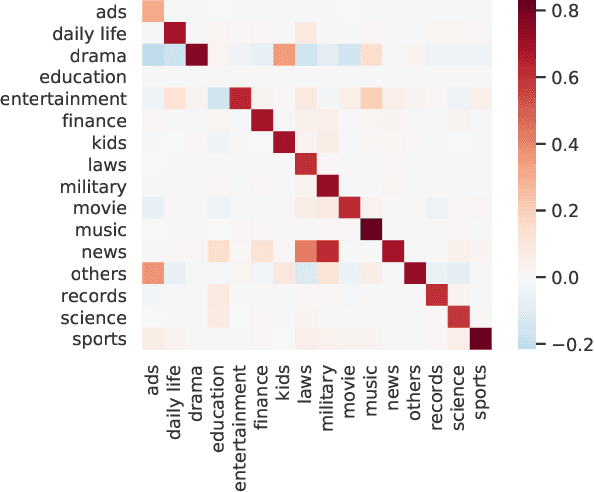

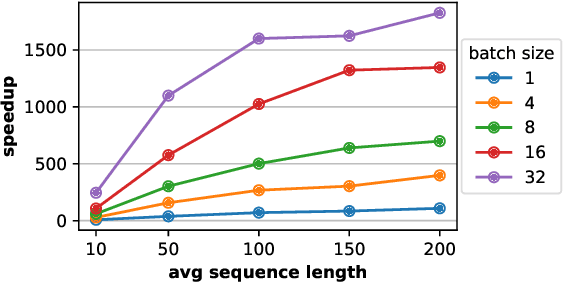

Abstract: We study the problem of learning Granger causality between event types from asynchronous, interdependent, multi-type event sequences. Existing work suffers from either limited model flexibility or poor model explainability, and thus fails to uncover Granger causality across a wide variety of event sequences with diverse event interdependency. To address these weaknesses, we propose CAUSE (Causality from AttribUtions on Sequence of Events), a novel framework for this task. The key idea of CAUSE is to first implicitly capture the underlying event interdependency by fitting a neural point process, and then extract from the process a Granger causality statistic using an axiomatic attribution method. Across multiple datasets with diverse event interdependency, we demonstrate that CAUSE outperforms a range of state-of-the-art methods at correctly inferring inter-type Granger causality.
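
The abstract does not name the attribution method. As an illustration, the minimal Python sketch below assumes integrated gradients, a well-known axiomatic attribution method, and applies it to a hypothetical toy function `toy_f` instead of a fitted neural point process. It shows how per-input attributions are computed and checks the completeness axiom (attributions sum to f(x) - f(baseline)); it is not CAUSE's Granger causality statistic.

```python
from typing import Callable, List, Sequence


def integrated_gradients(grad_f: Callable[[List[float]], List[float]],
                         x: Sequence[float], baseline: Sequence[float],
                         steps: int = 100) -> List[float]:
    """Integrated-gradients attributions approximated by a midpoint Riemann sum.

    attribution_i = (x_i - baseline_i)
                    * integral over a in [0, 1] of df/dz_i(baseline + a * (x - baseline))
    """
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_f(point)):
            totals[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


# Hypothetical toy model standing in for a fitted neural point process:
# a scalar output f(z) = z0**2 + 3*z0*z1 of two input features.
def toy_f(z: Sequence[float]) -> float:
    return z[0] ** 2 + 3 * z[0] * z[1]


def toy_grad(z: Sequence[float]) -> List[float]:
    # Analytic gradient of toy_f.
    return [2 * z[0] + 3 * z[1], 3 * z[0]]


x, baseline = [1.0, 2.0], [0.0, 0.0]
attr = integrated_gradients(toy_grad, x, baseline)
print(attr)  # approximately [4.0, 3.0]
print(sum(attr), toy_f(x) - toy_f(baseline))  # both approximately 7.0
```

In a real model the gradient would come from automatic differentiation rather than a hand-written `toy_grad`; the midpoint rule is used here only because it is short and exact for this toy function's linear path gradients.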
