Thierry Lefebvre

Regularized infill criteria for multi-objective Bayesian optimization with application to aircraft design

Apr 11, 2025

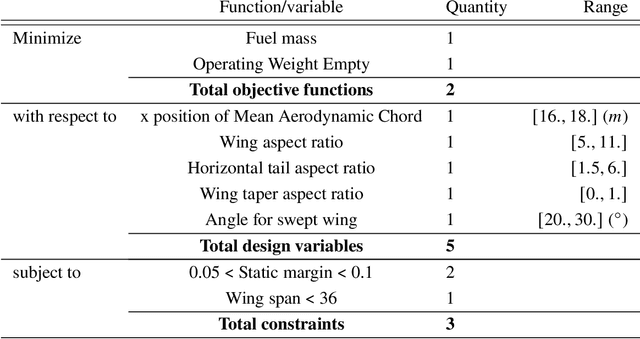

Abstract: Bayesian optimization is an advanced tool to perform efficient global optimization. It consists of iteratively enriching surrogate Kriging models of the objective and the constraints (both assumed to be computationally expensive) of the targeted optimization problem. Nowadays, efficient extensions of Bayesian optimization to solve expensive multi-objective problems are of high interest. The method proposed in this paper extends the super efficient global optimization with mixture of experts (SEGOMOE) to solve constrained multi-objective problems. To cope with the ill-posedness of the multi-objective infill criteria, different enrichment procedures using regularization techniques are proposed. The merits of the proposed approaches are shown on known multi-objective benchmark problems with and without constraints. The proposed methods are then used to solve a bi-objective application related to conceptual aircraft design with five unknown design variables and three nonlinear inequality constraints. The preliminary results show a reduction of the total cost in terms of function evaluations by a factor of 20 compared to the evolutionary algorithm NSGA-II.
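To make the idea of an infill criterion concrete, here is a minimal sketch of the classical single-objective expected improvement (EI) for minimization. It is not the regularized multi-objective criterion proposed in the paper; the function name `expected_improvement` and the candidate values are assumptions for illustration only.

```python
import math


def expected_improvement(mean, std, best):
    """Classical EI for minimization at one candidate point.

    mean, std: surrogate prediction and its standard deviation (hypothetical inputs).
    best: lowest objective value observed so far.
    """
    if std <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(best - mean, 0.0)
    z = (best - mean) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (best - mean) * cdf + std * pdf


# Illustrative candidates as (label, predicted mean, predicted std).
candidates = [("a", 1.2, 0.1), ("b", 1.0, 0.5), ("c", 2.0, 0.05)]
best_so_far = 1.1
next_point = max(candidates, key=lambda c: expected_improvement(c[1], c[2], best_so_far))
print(next_point[0])
```

In an enrichment loop, the selected point would be evaluated with the expensive function, added to the training set, and the surrogate refitted before the next iteration.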

Surrogate-based optimization of system architectures subject to hidden constraints

Apr 11, 2025

Abstract: The exploration of novel architectures requires physics-based simulation due to a lack of prior experience to start from, which introduces two specific challenges for optimization algorithms: evaluations become more expensive (in time) and evaluations might fail. The former challenge is addressed by Surrogate-Based Optimization (SBO) algorithms, in particular Bayesian Optimization (BO) using Gaussian Process (GP) models. An overview is provided of how BO can deal with challenges specific to architecture optimization, such as design variable hierarchy and multiple objectives: specific measures include ensemble infills and a hierarchical sampling algorithm. Evaluations might fail due to non-convergence of underlying solvers or infeasible geometry in certain areas of the design space. Such failed evaluations, also known as hidden constraints, pose a particular challenge to SBO/BO, as the surrogate model cannot be trained on empty results. This work investigates various strategies for satisfying hidden constraints in BO algorithms. Three high-level strategies are identified: rejection of failed points from the training set, replacing failed points based on viable (non-failed) points, and predicting the failure region. Through investigations on a set of test problems including a jet engine architecture optimization problem, it is shown that best performance is achieved with a mixed-discrete GP to predict the Probability of Viability (PoV), and by ensuring selected infill points satisfy some minimum PoV threshold. This strategy is demonstrated by solving a jet engine architecture problem that features a 50% failure rate and could not previously be solved by a BO algorithm. The developed BO algorithm and the test problems used are available in the open-source Python library SBArchOpt.
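The minimum-PoV rule described above can be sketched as a filter applied before the infill point is chosen. This is an illustration under stated assumptions, not the SBArchOpt implementation: the function `select_infill`, the dictionary keys, the default threshold, and the fallback when no candidate passes are all hypothetical.

```python
def select_infill(candidates, min_pov=0.5):
    """Pick the candidate with the highest acquisition value among those
    whose predicted Probability of Viability meets the threshold.

    candidates: list of dicts with keys "x", "acquisition", "pov" (hypothetical layout).
    """
    viable = [c for c in candidates if c["pov"] >= min_pov]
    if not viable:
        # Assumed fallback: if nothing passes, take the most likely viable point.
        return max(candidates, key=lambda c: c["pov"])
    return max(viable, key=lambda c: c["acquisition"])


candidates = [
    {"x": 0, "acquisition": 0.9, "pov": 0.20},  # attractive but likely to fail
    {"x": 1, "acquisition": 0.5, "pov": 0.80},
    {"x": 2, "acquisition": 0.3, "pov": 0.95},
]
print(select_infill(candidates)["x"])
```

Here the point with the highest acquisition value is skipped because its predicted PoV falls below the threshold, so an expensive evaluation is not spent on a region that is expected to fail.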

Bayesian optimization for mixed variables using an adaptive dimension reduction process: applications to aircraft design

Apr 11, 2025

Abstract: Multidisciplinary design optimization methods aim at adapting numerical optimization techniques to the design of engineering systems involving multiple disciplines. In this context, a large number of mixed continuous, integer and categorical variables might arise during the optimization process, and practical applications involve a large number of design variables. Recently, there has been a growing interest in mixed-variable constrained Bayesian optimization, but most existing approaches severely increase the number of hyperparameters related to the surrogate model. In this paper, we address this issue by constructing surrogate models using fewer hyperparameters. The reduction process is based on the partial least squares method. An adaptive procedure for choosing the number of hyperparameters is proposed. The performance of the proposed approach is confirmed on analytical tests as well as two real applications related to aircraft design. A significant improvement is obtained compared to genetic algorithms.

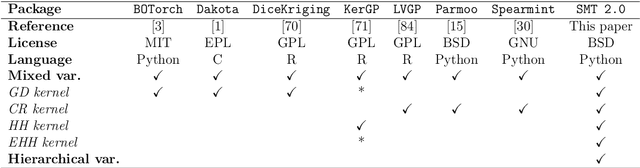

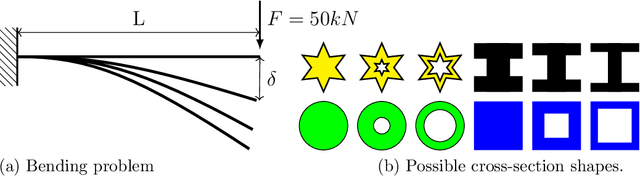

High-dimensional mixed-categorical Gaussian processes with application to multidisciplinary design optimization for a green aircraft

Nov 10, 2023

Abstract: Multidisciplinary design optimization (MDO) methods aim at adapting numerical optimization techniques to the design of engineering systems involving multiple disciplines. In this context, a large number of mixed continuous, integer, and categorical variables might arise during the optimization process, and practical applications involve a significant number of design variables. Recently, there has been a growing interest in mixed-categorical metamodels based on Gaussian Process (GP) for Bayesian optimization. In particular, to handle mixed-categorical variables, several existing approaches employ different strategies to build the GP. These strategies either use continuous kernels, such as the continuous relaxation or the Gower distance-based kernels, or direct estimation of the correlation matrix, such as the exponential homoscedastic hypersphere (EHH) or the Homoscedastic Hypersphere (HH) kernel. Although the EHH and HH kernels are shown to be very efficient and lead to accurate GPs, they are based on a large number of hyperparameters. In this paper, we address this issue by constructing mixed-categorical GPs with fewer hyperparameters using Partial Least Squares (PLS) regression. Our goal is to generalize Kriging with PLS, commonly used for continuous inputs, to handle mixed-categorical inputs. The proposed method is implemented in the open-source software SMT and has been efficiently applied to structural and multidisciplinary applications. Our method is used to effectively demonstrate the structural behavior of a cantilever beam and facilitates MDO of a green aircraft, resulting in a 439-kilogram reduction in the amount of fuel consumed during a single aircraft mission.
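As a small illustration of one of the strategies mentioned above, the following sketch computes the Gower distance between two mixed continuous/categorical points. It shows only the distance, not a GP kernel built on it, and it is not SMT's implementation; the function name `gower_distance` and its argument layout are assumptions.

```python
def gower_distance(a, b, kinds, ranges):
    """Gower distance between two mixed-variable points.

    kinds: "num" or "cat" per variable (hypothetical encoding).
    ranges: value range per numeric variable; ignored for categorical ones.
    """
    total = 0.0
    for ai, bi, kind, rng in zip(a, b, kinds, ranges):
        if kind == "cat":
            # Categorical variables contribute 0 when equal and 1 otherwise.
            total += 0.0 if ai == bi else 1.0
        else:
            # Numeric variables contribute the range-normalized absolute difference.
            total += abs(ai - bi) / rng if rng else 0.0
    return total / len(a)


d = gower_distance([1.0, "red"], [3.0, "blue"], ["num", "cat"], [4.0, None])
print(d)
```

Because every categorical variable is reduced to a match/mismatch term, this approach needs very few hyperparameters, in contrast with the EHH and HH kernels, which estimate a correlation matrix over the category levels.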

SMT 2.0: A Surrogate Modeling Toolbox with a focus on Hierarchical and Mixed Variables Gaussian Processes

May 23, 2023

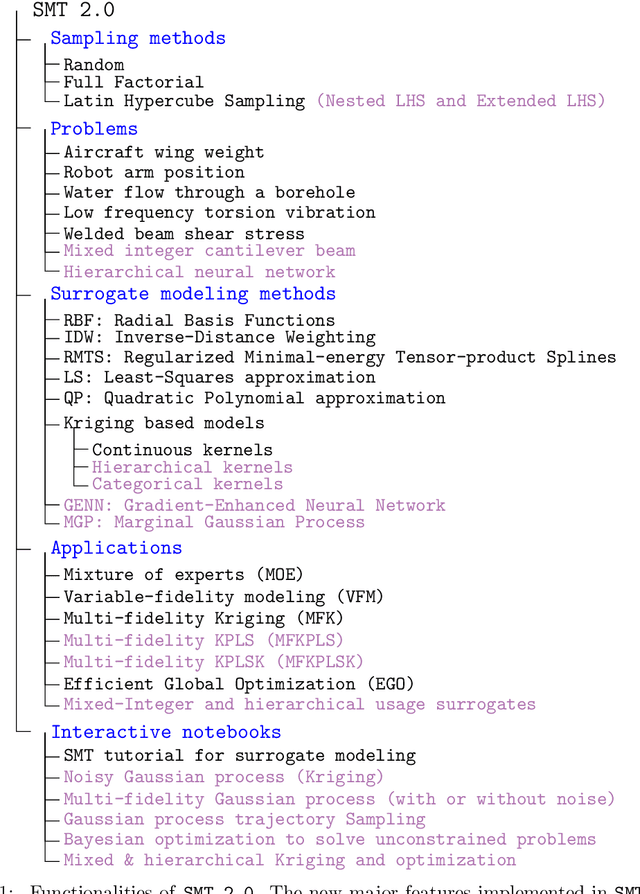

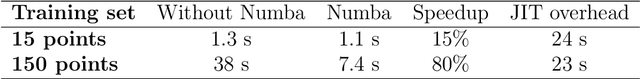

Abstract: The Surrogate Modeling Toolbox (SMT) is an open-source Python package that offers a collection of surrogate modeling methods, sampling techniques, and a set of sample problems. This paper presents SMT 2.0, a major new release of SMT that introduces significant upgrades and new features to the toolbox. This release adds the capability to handle mixed-variable surrogate models and hierarchical variables. These types of variables are becoming increasingly important in several surrogate modeling applications. SMT 2.0 also improves SMT by extending sampling methods, adding new surrogate models, and computing variance and kernel derivatives for Kriging. This release also includes new functions to handle noisy data and to use multifidelity data. To the best of our knowledge, SMT 2.0 is the first open-source surrogate library to propose surrogate models for hierarchical and mixed inputs. This open-source software is distributed under the New BSD license.
