Thanh Trung Nguyen

PADM: A Physics-aware Diffusion Model for Attenuation Correction

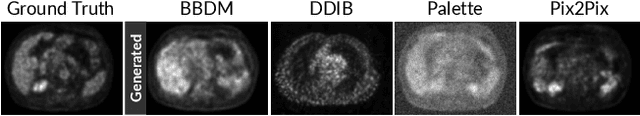

Nov 10, 2025

Abstract: Attenuation artifacts remain a significant challenge in cardiac Myocardial Perfusion Imaging (MPI) using Single-Photon Emission Computed Tomography (SPECT), often compromising diagnostic accuracy and reducing clinical interpretability. While hybrid SPECT/CT systems mitigate these artifacts through CT-derived attenuation maps, their high cost, limited accessibility, and added radiation exposure hinder widespread clinical adoption. In this study, we propose a novel CT-free solution to attenuation correction in cardiac SPECT. Specifically, we introduce Physics-aware Attenuation Correction Diffusion Model (PADM), a diffusion-based generative method that incorporates explicit physics priors via a teacher-student distillation mechanism. This approach enables attenuation artifact correction using only Non-Attenuation-Corrected (NAC) input, while still benefiting from physics-informed supervision during training. To support this work, we also introduce CardiAC, a comprehensive dataset comprising 424 patient studies with paired NAC and Attenuation-Corrected (AC) reconstructions, alongside high-resolution CT-based attenuation maps. Extensive experiments demonstrate that PADM outperforms state-of-the-art generative models, delivering superior reconstruction fidelity across both quantitative metrics and visual assessment.

CT to PET Translation: A Large-scale Dataset and Domain-Knowledge-Guided Diffusion Approach

Oct 29, 2024

Abstract: Positron Emission Tomography (PET) and Computed Tomography (CT) are essential for diagnosing, staging, and monitoring various diseases, particularly cancer. Despite their importance, the use of PET/CT systems is limited by the need for radioactive materials, the scarcity of PET scanners, and the high cost of PET imaging. In contrast, CT scanners are more widely available and significantly less expensive. In response to these challenges, our study addresses the problem of generating PET images from CT images, aiming to reduce both the medical examination cost and the associated health risks for patients. Our contributions are twofold. First, we introduce a conditional diffusion model named CPDM, which, to our knowledge, is one of the first attempts to employ a diffusion model for translating CT images to PET images. Second, we provide the largest CT-PET dataset to date, comprising 2,028,628 paired CT-PET images, which facilitates the training and evaluation of CT-to-PET translation models. For the CPDM model, we incorporate domain knowledge to develop two conditional maps: the Attention map and the Attenuation map. The former helps the diffusion process focus on areas of interest, while the latter improves PET data correction and ensures accurate diagnostic information. Experimental evaluations across various benchmarks demonstrate that CPDM surpasses existing methods in generating high-quality PET images across multiple metrics. The source code and data samples are available at https://github.com/thanhhff/CPDM.
