Thang V. Nguyen

Secrecy Performance Analysis of Space-to-Ground Optical Satellite Communications

Feb 21, 2024

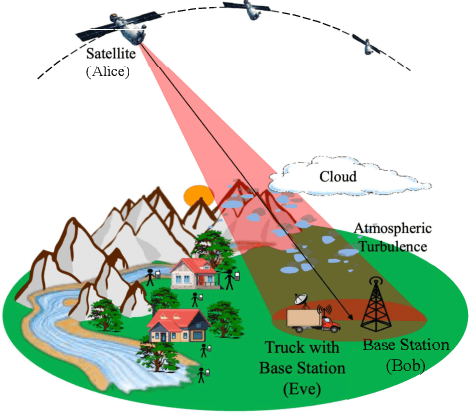

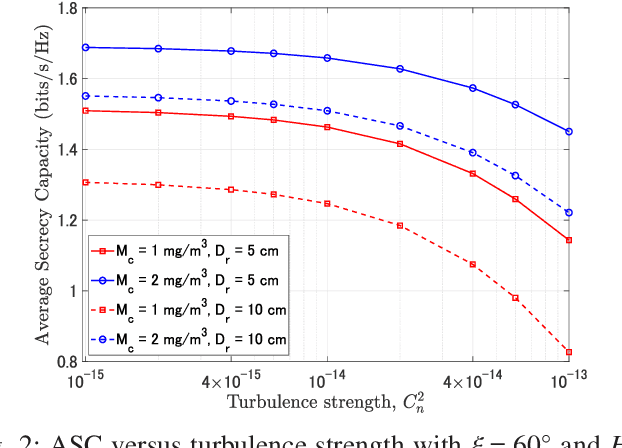

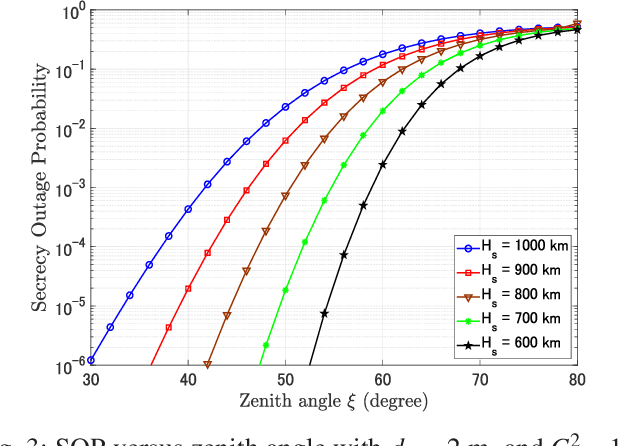

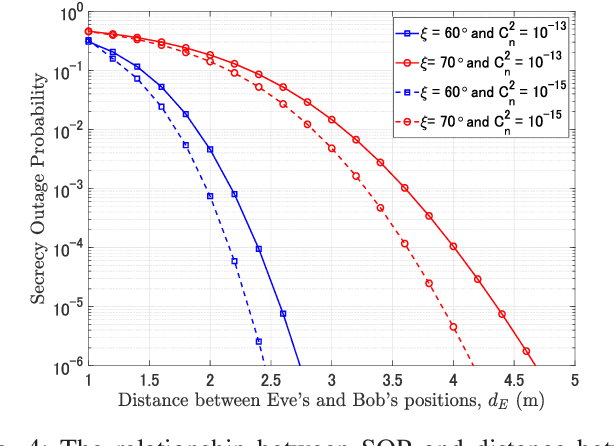

Abstract: Free-space optics (FSO)-based satellite communication systems have recently received considerable attention due to their enhanced capacity compared to their radio frequency (RF) counterparts. This paper analyzes the physical layer security performance of space-to-ground intensity modulation/direct detection FSO satellite links under the effects of atmospheric loss, misalignment, cloud attenuation, and atmospheric turbulence-induced fading. Specifically, a wiretap channel is considered that consists of a legitimate transmitter Alice (i.e., the satellite), a legitimate user Bob, and an eavesdropper Eve, over turbulence channels modeled by the Fisher-Snedecor $\mathcal{F}$ distribution. The secrecy performance, in terms of the average secrecy capacity, secrecy outage probability, and strictly positive secrecy capacity, is derived in closed form. Simulation results reveal significant impacts of satellite altitude, zenith angle, and turbulence strength on the secrecy performance.
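The three secrecy metrics named in the abstract can be estimated empirically from channel samples. The sketch below is only an illustration, not the paper's closed-form derivation: it assumes the Shannon-form capacity log2(1 + SNR) for both links (an IM/DD analysis may use a different capacity expression), and the function names and the `target_rate` parameter are hypothetical.

```python
import math

def secrecy_capacity(snr_bob, snr_eve):
    """Instantaneous secrecy capacity in bits/s/Hz.

    Assumption: Shannon-form capacity log2(1 + SNR) on both links.
    The result is clipped at zero when Eve's link is the stronger one.
    """
    return max(math.log2(1.0 + snr_bob) - math.log2(1.0 + snr_eve), 0.0)

def secrecy_metrics(snr_pairs, target_rate):
    """Empirical estimates from (snr_bob, snr_eve) samples.

    Returns a tuple (asc, sop, spsc):
      asc  - average secrecy capacity (sample mean)
      sop  - secrecy outage probability, P(C_s < target_rate)
      spsc - probability of strictly positive secrecy capacity, P(C_s > 0)
    """
    caps = [secrecy_capacity(b, e) for b, e in snr_pairs]
    n = len(caps)
    asc = sum(caps) / n
    sop = sum(c < target_rate for c in caps) / n
    spsc = sum(c > 0.0 for c in caps) / n
    return asc, sop, spsc
```

In a Monte Carlo study, `snr_pairs` would be drawn from the assumed fading model for Bob and Eve, and the resulting estimates could be compared against closed-form expressions.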

Learning to diagnose common thorax diseases on chest radiographs from radiology reports in Vietnamese

Sep 11, 2022

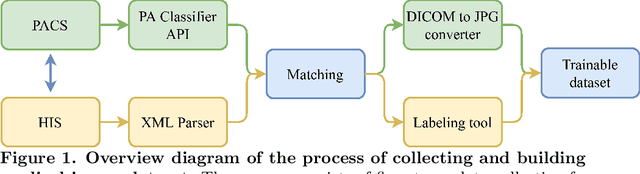

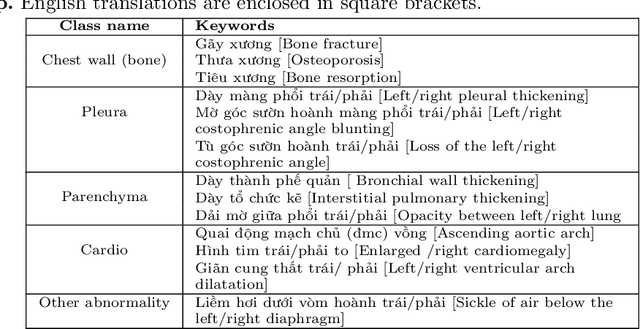

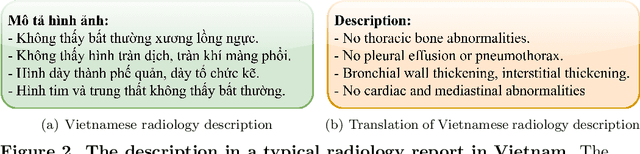

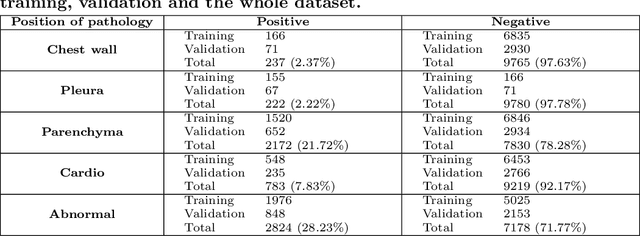

Abstract: We propose a data collection and annotation pipeline that extracts information from Vietnamese radiology reports to provide accurate labels for chest X-ray (CXR) images. This can benefit Vietnamese radiologists and clinicians by annotating data that closely match their endemic diagnosis categories, which may vary from country to country. To assess the efficacy of the proposed labeling technique, we built a CXR dataset containing 9,752 studies and evaluated our pipeline using a subset of this dataset. With an F1-score of at least 0.9923, the evaluation demonstrates that our labeling tool performs precisely and consistently across all classes. After building the dataset, we train deep learning models that leverage knowledge transferred from large public CXR datasets. We employ a variety of loss functions to overcome the curse of imbalanced multi-label datasets and conduct experiments with various model architectures to select the one that delivers the best performance. Our best model (CheXpert-pretrained EfficientNet-B2) yields an F1-score of 0.6989 (95% CI 0.6740, 0.7240), an AUC of 0.7912, a sensitivity of 0.7064, and a specificity of 0.8760 for the abnormal diagnosis in general. Finally, we demonstrate that our coarse classification (based on five specific locations of abnormalities) yields comparable results to fine classification (twelve pathologies) on the benchmark CheXpert dataset for general anomaly detection while delivering better average performance across all classes.
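For readers less familiar with the reported metrics, the following minimal sketch shows how sensitivity, specificity, and F1-score follow from binary confusion counts. The function name and the example counts are hypothetical and are not taken from the paper.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion counts.

    tp/fp/tn/fn are true positives, false positives, true negatives,
    and false negatives. Assumes every denominator is non-zero.
    """
    sensitivity = tp / (tp + fn)   # recall on the positive class
    specificity = tn / (tn + fp)   # recall on the negative class
    precision = tp / (tp + fp)
    f1 = 2 * tp / (2 * tp + fp + fn)  # harmonic mean of precision and recall
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }
```

With imbalanced labels, F1 can be much lower than sensitivity and specificity because it depends on precision, which drops when negatives greatly outnumber positives.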

Phase Recognition in Contrast-Enhanced CT Scans based on Deep Learning and Random Sampling

Mar 20, 2022

Abstract: A fully automated system for interpreting abdominal computed tomography (CT) scans with multiple phases of contrast enhancement requires an accurate classification of the phases. This work aims to develop and validate a precise, fast multi-phase classifier to recognize three main types of contrast phases in abdominal CT scans. We propose in this study a novel method that uses a random sampling mechanism on top of deep CNNs for the phase recognition of abdominal CT scans of four different phases: non-contrast, arterial, venous, and others. The CNNs perform slice-wise phase prediction, while the random sampling selects input slices for the CNN models. Afterward, majority voting aggregates the slice-wise results of the CNNs to provide the final prediction at the scan level. Our classifier was trained on 271,426 slices from 830 phase-annotated CT scans and, when combined with majority voting on 30% of slices randomly chosen from each scan, achieved a mean F1-score of 92.09% on our internal test set of 358 scans. The proposed method was also evaluated on two external test sets, CTPAC-CCRCC (N = 242) and LiTS (N = 131), which were annotated by our experts. Although a drop in performance was observed, the model remained highly accurate, with mean F1-scores of 76.79% and 86.94% on the CTPAC-CCRCC and LiTS datasets, respectively. Our experimental results also showed that the proposed method significantly outperformed the state-of-the-art 3D approaches while requiring less computation time for inference.
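The sampling-plus-voting step described in the abstract can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: `classify_slice` is a hypothetical stand-in for the trained slice-wise CNN, and the 30% default mirrors the sampling fraction quoted above.

```python
import random
from collections import Counter

def predict_scan_phase(slices, classify_slice, fraction=0.3, rng=None):
    """Scan-level phase prediction from a random subset of slices.

    slices         - list of slice objects belonging to one CT scan
    classify_slice - hypothetical callable mapping a slice to a phase label
    fraction       - share of slices to sample (at least one slice is used)
    rng            - optional random.Random instance for reproducibility
    """
    rng = rng or random.Random()
    k = max(1, round(fraction * len(slices)))
    sampled = rng.sample(slices, k)           # sampling without replacement
    votes = Counter(classify_slice(s) for s in sampled)
    # Majority vote; ties resolve to the label that was counted first.
    return votes.most_common(1)[0][0]
```

Classifying only a fraction of the slices reduces inference cost roughly in proportion to that fraction, while the vote smooths out occasional slice-level errors.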

Learning to Automatically Diagnose Multiple Diseases in Pediatric Chest Radiographs Using Deep Convolutional Neural Networks

Aug 14, 2021

Abstract: Chest radiograph (CXR) interpretation in pediatric patients is error-prone and requires a high level of radiologic expertise. Recently, deep convolutional neural networks (D-CNNs) have shown remarkable performance in interpreting CXR in adults. However, there is a lack of evidence indicating that D-CNNs can accurately recognize multiple lung pathologies from pediatric CXR scans. In particular, the development of diagnostic models for the detection of pediatric chest diseases faces significant challenges such as (i) a lack of physician-annotated datasets and (ii) class imbalance problems. In this paper, we retrospectively collect a large dataset of 5,017 pediatric CXR scans, each of which is manually labeled by an experienced radiologist for the presence of 10 common pathologies. A D-CNN model is then trained on 3,550 annotated scans to classify multiple pediatric lung pathologies automatically. To address the severe class imbalance issue, we propose to modify and apply "Distribution-Balanced loss" for training D-CNNs, which reshapes the standard Binary-Cross Entropy loss (BCE) to efficiently learn harder samples by down-weighting the loss assigned to the majority classes. On an independent test set of 777 studies, the proposed approach yields an area under the receiver operating characteristic curve (AUC) of 0.709 (95% CI, 0.690-0.729). The sensitivity, specificity, and F1-score at the cutoff value are 0.722 (0.694-0.750), 0.579 (0.563-0.595), and 0.389 (0.373-0.405), respectively. These results significantly outperform previous state-of-the-art methods on most of the target diseases. Moreover, our ablation studies validate the effectiveness of the proposed loss function compared to other standard losses, e.g., BCE and Focal Loss, for this learning task. Overall, we demonstrate the potential of D-CNNs in interpreting pediatric CXRs.
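To make the idea of reshaping BCE concrete, here is a minimal sketch of the two baseline losses named in the abstract, BCE and Focal Loss, for a single binary label. It does not implement the Distribution-Balanced loss used in the paper; the `gamma` and `alpha` defaults are commonly used Focal Loss values and are assumptions here.

```python
import math

def _clip(p, eps=1e-7):
    """Keep probabilities away from 0 and 1 so log() stays finite."""
    return min(max(p, eps), 1.0 - eps)

def bce_loss(p, y):
    """Binary cross-entropy for predicted probability p and label y in {0, 1}."""
    p = _clip(p)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary Focal Loss: BCE scaled by alpha_t * (1 - p_t) ** gamma.

    p_t is the probability assigned to the true label, so well-classified
    samples (p_t near 1) contribute much less than hard ones.
    """
    p = _clip(p)
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

For a positive sample, a confident correct prediction (p = 0.9) has its loss scaled far more aggressively than a confident wrong one (p = 0.1), which is the down-weighting behaviour that imbalance-aware losses build on.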

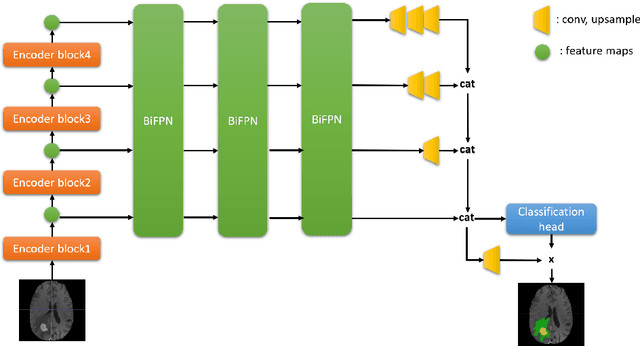

Enhancing MRI Brain Tumor Segmentation with an Additional Classification Network

Sep 25, 2020

Abstract: Brain tumor segmentation plays an essential role in medical image analysis. In recent studies, deep convolutional neural networks (DCNNs) have proven extremely powerful for tackling tumor segmentation tasks. We propose in this paper a novel training method that enhances the segmentation results by adding a classification branch to the network. The whole network was trained end-to-end on the Multimodal Brain Tumor Segmentation Challenge (BraTS) 2020 training dataset. On the BraTS validation set, it achieved average Dice scores of 78.43%, 89.99%, and 84.22% for the enhancing tumor, the whole tumor, and the tumor core, respectively.
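The Dice score reported above measures the overlap between a predicted and a reference mask. A minimal sketch for flattened binary masks follows; returning 1.0 when both masks are empty is an assumed convention here and may differ from the challenge's official evaluation.

```python
def dice_score(pred, target):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for binary masks.

    pred, target - equal-length sequences of 0/1 values (flattened masks).
    Returns 1.0 when both masks are empty (assumed convention).
    """
    pred = list(pred)
    target = list(target)
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(pred) + sum(target)
    if total == 0:
        return 1.0
    return 2.0 * intersection / total
```

For a multi-region task such as enhancing tumor, whole tumor, and tumor core, the score would be computed once per region on the corresponding binary mask and then averaged over cases.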
