Tessa Verhoef

NeLLCom-Lex: A Neural-agent Framework to Study the Interplay between Lexical Systems and Language Use

Sep 26, 2025

Abstract:Lexical semantic change has primarily been investigated with observational and experimental methods; however, observational methods (corpus analysis, distributional semantic modeling) cannot get at causal mechanisms, and experimental paradigms with humans are hard to apply to semantic change due to the extended diachronic processes involved. This work introduces NeLLCom-Lex, a neural-agent framework designed to simulate semantic change by first grounding agents in a real lexical system (e.g. English) and then systematically manipulating their communicative needs. Using a well-established color naming task, we simulate the evolution of a lexical system within a single generation, and study which factors lead agents to: (i) develop human-like naming behavior and lexicons, and (ii) change their behavior and lexicons according to their communicative needs. Our experiments with different supervised and reinforcement learning pipelines show that neural agents trained to 'speak' an existing language can reproduce human-like patterns in color naming to a remarkable extent, supporting the further use of NeLLCom-Lex to elucidate the mechanisms of semantic change.

Probing Vision-Language Understanding through the Visual Entailment Task: promises and pitfalls

Jul 23, 2025

Abstract:This study investigates the extent to which the Visual Entailment (VE) task serves as a reliable probe of vision-language understanding in multimodal language models, using the LLaMA 3.2 11B Vision model as a test case. Beyond reporting performance metrics, we aim to interpret what these results reveal about the underlying possibilities and limitations of the VE task. We conduct a series of experiments across zero-shot, few-shot, and fine-tuning settings, exploring how factors such as prompt design, the number and order of in-context examples and access to visual information might affect VE performance. To further probe the reasoning processes of the model, we used explanation-based evaluations. Results indicate that three-shot inference outperforms the zero-shot baselines. However, additional examples introduce more noise than they provide benefits. Additionally, the order of the labels in the prompt is a critical factor that influences the predictions. In the absence of visual information, the model has a strong tendency to hallucinate and imagine content, raising questions about the model's over-reliance on linguistic priors. Fine-tuning yields strong results, achieving an accuracy of 83.3% on the e-SNLI-VE dataset and outperforming the state-of-the-art OFA-X model. Additionally, the explanation evaluation demonstrates that the fine-tuned model provides semantically meaningful explanations similar to those of humans, with a BERTScore F1-score of 89.2%. We do, however, find comparable BERTScore results in experiments with limited vision, questioning the visual grounding of this task. Overall, our results highlight both the utility and limitations of VE as a diagnostic task for vision-language understanding and point to directions for refining multimodal evaluation methods.

Shaping Shared Languages: Human and Large Language Models' Inductive Biases in Emergent Communication

Mar 06, 2025

Abstract:Languages are shaped by the inductive biases of their users. Using a classical referential game, we investigate how artificial languages evolve when optimised for inductive biases in humans and large language models (LLMs) via Human-Human, LLM-LLM and Human-LLM experiments. We show that referentially grounded vocabularies emerge that enable reliable communication in all conditions, even when humans and LLMs collaborate. Comparisons between conditions reveal that languages optimised for LLMs subtly differ from those optimised for humans. Interestingly, interactions between humans and LLMs alleviate these differences and result in vocabularies which are more human-like than LLM-like. These findings advance our understanding of how inductive biases in LLMs play a role in the dynamic nature of human language and contribute to maintaining alignment in human and machine communication. In particular, our work underscores the need to think of new methods that include human interaction in the training processes of LLMs, and shows that using communicative success as a reward signal can be a fruitful, novel direction.

Simulating the Emergence of Differential Case Marking with Communicating Neural-Network Agents

Feb 06, 2025

Abstract:Differential Case Marking (DCM) refers to the phenomenon where grammatical case marking is applied selectively based on semantic, pragmatic, or other factors. The emergence of DCM has been studied in artificial language learning experiments with human participants, which were specifically aimed at disentangling the effects of learning from those of communication (Smith & Culbertson, 2020). Multi-agent reinforcement learning frameworks based on neural networks have gained significant interest as a way to simulate the emergence of human-like linguistic phenomena. In this study, we employ such a framework in which agents first acquire an artificial language before engaging in communicative interactions, enabling direct comparisons to human results. Using a very generic communication optimization algorithm and neural-network learners that have no prior experience with language or semantic preferences, our results demonstrate that learning alone does not lead to DCM, but when agents communicate, differential use of markers arises. This supports the findings of Smith and Culbertson (2020), which highlight the critical role of communication in shaping DCM, and showcases the potential of neural-agent models to complement experimental research on language evolution.

Searching for Structure: Investigating Emergent Communication with Large Language Models

Dec 10, 2024

Abstract:Human languages have evolved to be structured through repeated language learning and use. These processes introduce biases that operate during language acquisition and shape linguistic systems toward communicative efficiency. In this paper, we investigate whether the same happens if artificial languages are optimised for implicit biases of Large Language Models (LLMs). To this end, we simulate a classical referential game in which LLMs learn and use artificial languages. Our results show that initially unstructured holistic languages are indeed shaped to have some structural properties that allow two LLM agents to communicate successfully. Similar to observations in human experiments, generational transmission increases the learnability of languages, but can at the same time result in non-humanlike degenerate vocabularies. Taken together, this work extends experimental findings, shows that LLMs can be used as tools in simulations of language evolution, and opens possibilities for future human-machine experiments in this field.

The Curious Case of Representational Alignment: Unravelling Visio-Linguistic Tasks in Emergent Communication

Jul 25, 2024

Abstract:Natural language has the universal properties of being compositional and grounded in reality. The emergence of linguistic properties is often investigated through simulations of emergent communication in referential games. However, these experiments have yielded mixed results compared to similar experiments addressing linguistic properties of human language. Here we address representational alignment as a potential contributing factor to these results. Specifically, we assess the representational alignment between agent image representations and between agent representations and input images. Doing so, we confirm that the emergent language does not appear to encode human-like conceptual visual features, since agent image representations drift away from inputs whilst inter-agent alignment increases. We moreover identify a strong relationship between inter-agent alignment and topographic similarity, a common metric for compositionality, and address its consequences. To address these issues, we introduce an alignment penalty that prevents representational drift but interestingly does not improve performance on a compositional discrimination task. Together, our findings emphasise the key role representational alignment plays in simulations of language emergence.

What does Kiki look like? Cross-modal associations between speech sounds and visual shapes in vision-and-language models

Jul 25, 2024

Abstract:Humans have clear cross-modal preferences when matching certain novel words to visual shapes. Evidence suggests that these preferences play a prominent role in our linguistic processing, language learning, and the origins of signal-meaning mappings. With the rise of multimodal models in AI, such as vision-and-language models (VLMs), it becomes increasingly important to uncover the kinds of visio-linguistic associations these models encode and whether they align with human representations. Informed by experiments with humans, we probe and compare four VLMs for a well-known human cross-modal preference, the bouba-kiki effect. We do not find conclusive evidence for this effect but suggest that results may depend on features of the models, such as architecture design, model size, and training details. Our findings inform discussions on the origins of the bouba-kiki effect in human cognition and future developments of VLMs that align well with human cross-modal associations.

NeLLCom-X: A Comprehensive Neural-Agent Framework to Simulate Language Learning and Group Communication

Jul 19, 2024

Abstract:Recent advances in computational linguistics include simulating the emergence of human-like languages with interacting neural network agents, starting from sets of random symbols. The recently introduced NeLLCom framework (Lian et al., 2023) allows agents to first learn an artificial language and then use it to communicate, with the aim of studying the emergence of specific linguistic properties. We extend this framework (NeLLCom-X) by introducing more realistic role-alternating agents and group communication in order to investigate the interplay between language learnability, communication pressures, and group size effects. We validate NeLLCom-X by replicating key findings from prior research simulating the emergence of a word-order/case-marking trade-off. Next, we investigate how interaction affects linguistic convergence and emergence of the trade-off. The novel framework facilitates future simulations of diverse linguistic aspects, emphasizing the importance of interaction and group dynamics in language evolution.

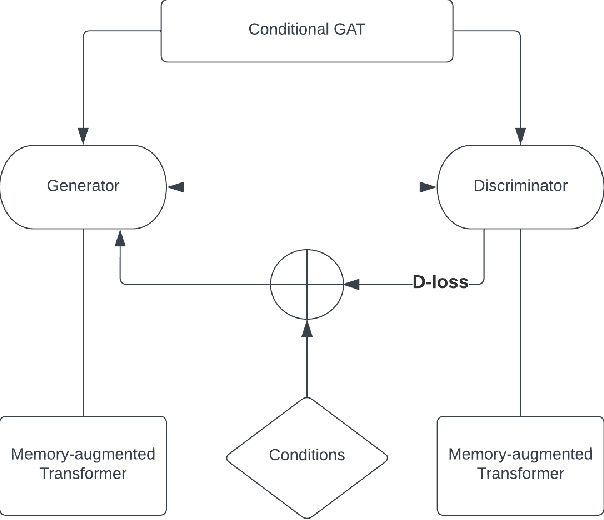

Memory-Augmented Generative Adversarial Transformers

Feb 29, 2024

Abstract:Conversational AI systems that rely on Large Language Models, like Transformers, have difficulty interweaving external data (like facts) with the language they generate. Vanilla Transformer architectures are not designed for answering factual questions with high accuracy. This paper investigates a possible route for addressing this problem. We propose to extend the standard Transformer architecture with an additional memory bank holding extra information (such as facts drawn from a knowledge base), and an extra attention layer for addressing this memory. We add this augmented memory to a Generative Adversarial Network-inspired Transformer architecture. This setup allows for implementing arbitrary felicity conditions on the generated language of the Transformer. We first demonstrate how this machinery can be deployed for handling factual questions in goal-oriented dialogues. Secondly, we demonstrate that our approach can be useful for applications like style adaptation as well: the adaptation of utterances according to certain stylistic (external) constraints, like social properties of human interlocutors in dialogues.

Communication Drives the Emergence of Language Universals in Neural Agents: Evidence from the Word-order/Case-marking Trade-off

Jan 30, 2023

Abstract:Artificial learners often behave differently from human learners in the context of neural agent-based simulations of language emergence and change. The lack of appropriate cognitive biases in these learners is one of the prevailing explanations. However, it has also been proposed that more naturalistic settings of language learning and use could lead to more human-like results. In this work, we investigate the latter account focusing on the word-order/case-marking trade-off, a widely attested language universal which has proven particularly difficult to simulate. We propose a new Neural-agent Language Learning and Communication framework (NeLLCom) where pairs of speaking and listening agents first learn a given miniature language through supervised learning, and then optimize it for communication via reinforcement learning. Following closely the setup of earlier human experiments, we succeed in replicating the trade-off with the new framework without hard-coding any learning bias in the agents. We see this as an essential step towards the investigation of language universals with neural learners.
