Teresa B. Ludermir

A Neuromorphic Architecture for Reinforcement Learning from Real-Valued Observations

Jul 06, 2023

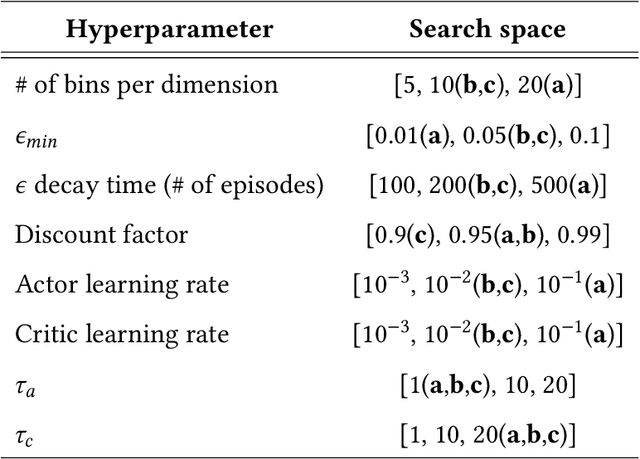

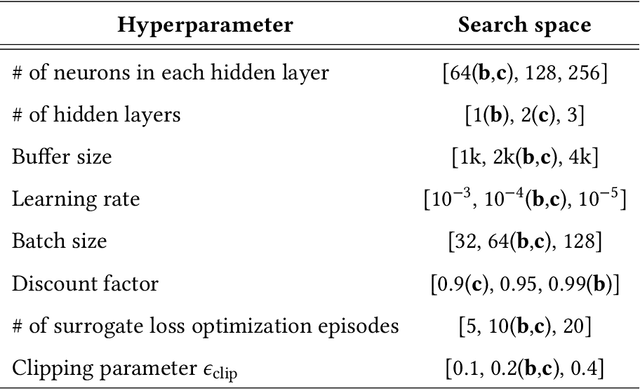

Abstract: Reinforcement Learning (RL) provides a powerful framework for decision-making in complex environments. However, implementing RL in hardware-efficient and bio-inspired ways remains a challenge. This paper presents a novel Spiking Neural Network (SNN) architecture for solving RL problems with real-valued observations. Building upon prior work, the proposed model incorporates multi-layered event-based clustering, with the addition of Temporal Difference (TD)-error modulation and eligibility traces. An ablation study confirms that these components significantly affect the proposed model's performance. A tabular actor-critic algorithm with eligibility traces and a state-of-the-art Proximal Policy Optimization (PPO) algorithm are used as benchmarks. Our network consistently outperforms the tabular approach and successfully discovers stable control policies on the classic RL environments mountain car, cart-pole, and acrobot. The proposed model offers an appealing trade-off in terms of computational and hardware implementation requirements: it requires neither an external memory buffer nor a global error-gradient computation, and synaptic updates occur online, driven by local learning rules and a broadcast TD-error signal. Thus, this work contributes to the development of more hardware-efficient RL solutions.
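
The tabular benchmark named above, an actor-critic with eligibility traces, can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's spiking network: the chain environment, the function names, and all hyperparameters (`alpha_v`, `alpha_pi`, `gamma`, `lam`) are hypothetical. It shows the same ingredients the abstract highlights: a single TD error computed once per step and broadcast to both the critic and the actor, each of which applies a local update weighted by its own decaying trace.

```python
import numpy as np


def softmax(x):
    z = np.exp(x - x.max())
    return z / z.sum()


def run_actor_critic(n_states=6, episodes=300, alpha_v=0.1, alpha_pi=0.1,
                     gamma=0.95, lam=0.8, seed=0):
    """Tabular actor-critic with accumulating eligibility traces on a toy chain.

    Hypothetical environment: states 0..n_states-1, action 0 moves left,
    action 1 moves right, reward 1 on reaching the last (terminal) state.
    """
    rng = np.random.default_rng(seed)
    V = np.zeros(n_states)             # critic: state-value table
    theta = np.zeros((n_states, 2))    # actor: action preferences per state
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        e_v = np.zeros_like(V)         # critic eligibility trace
        e_pi = np.zeros_like(theta)    # actor eligibility trace
        steps = 0
        while s != goal and steps < 200:
            probs = softmax(theta[s])
            a = rng.choice(2, p=probs)
            s_next = min(s + 1, goal) if a == 1 else max(s - 1, 0)
            r = 1.0 if s_next == goal else 0.0
            v_next = 0.0 if s_next == goal else V[s_next]
            # One TD error, shared ("broadcast") by critic and actor.
            delta = r + gamma * v_next - V[s]
            # Decay all traces, then mark the current state / action.
            e_v *= gamma * lam
            e_v[s] += 1.0
            e_pi *= gamma * lam
            grad = -probs
            grad[a] += 1.0             # gradient of log softmax policy w.r.t. theta[s]
            e_pi[s] += grad
            # Local updates: each entry changes by delta times its own trace.
            V += alpha_v * delta * e_v
            theta += alpha_pi * delta * e_pi
            s = s_next
            steps += 1
    return V, theta
```

After training, `softmax(theta[0])` should put most of its probability on moving right, and the learned values should rise toward the goal end of the chain.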

A Method for Automatic Search of Artificial Neural Networks (Um Metodo para Busca Automatica de Redes Neurais Artificiais)

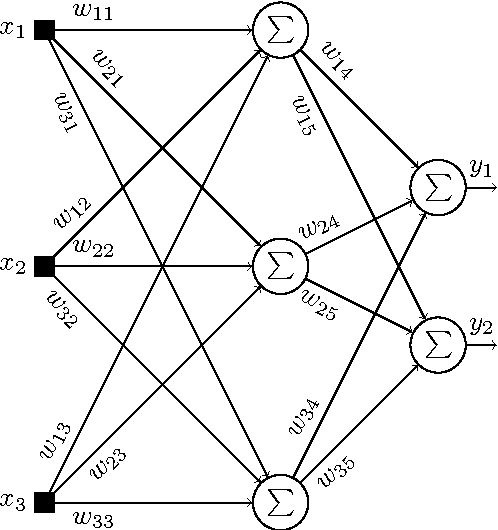

Jul 09, 2021

Abstract: This paper describes a method that automatically searches for Artificial Neural Networks using Cellular Genetic Algorithms. The main difference between this method and a conventional genetic algorithm is the use of a cellular automaton that assigns a location to each individual, reducing the chance of getting trapped in local minima of the search space. The method employs an evolutionary search to choose initial weights, transfer functions, architectures, and learning rules simultaneously. Experimental results show that the method can find compact, efficient networks with satisfactory generalization and shorter training times than other methods found in the literature.
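
The role of the cellular structure can be illustrated with a generic cellular genetic algorithm. This is a sketch under stated assumptions, not the paper's encoding of networks: the toroidal grid, the four-cell neighborhood, best-neighbor mate selection, elitist local replacement, and the toy OneMax fitness (count of 1 bits, standing in for network quality) are all hypothetical choices. The point it shows is that each individual mates only with nearby cells, so good solutions spread gradually instead of taking over the whole population at once.

```python
import random


def onemax(bits):
    # Toy fitness (hypothetical stand-in for evaluating a neural network).
    return sum(bits)


def cellular_ga(width=8, height=8, n_bits=20, generations=60, p_mut=0.05, seed=1):
    rng = random.Random(seed)
    # Each grid cell holds one individual, so every individual has a location.
    grid = [[[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(width)]
            for _ in range(height)]
    for _ in range(generations):
        new_grid = [[None] * width for _ in range(height)]
        for y in range(height):
            for x in range(width):
                me = grid[y][x]
                # Four-cell neighborhood on a torus: mates come only from nearby cells.
                neighbors = [grid[(y - 1) % height][x], grid[(y + 1) % height][x],
                             grid[y][(x - 1) % width], grid[y][(x + 1) % width]]
                mate = max(neighbors, key=onemax)
                cut = rng.randrange(1, n_bits)
                child = me[:cut] + mate[cut:]                  # one-point crossover
                child = [b ^ 1 if rng.random() < p_mut else b for b in child]  # bit-flip mutation
                # Elitist local replacement: keep the child only if it is no worse.
                new_grid[y][x] = child if onemax(child) >= onemax(me) else me
        grid = new_grid
    return max((ind for row in grid for ind in row), key=onemax)
```

In a real search, the bit string would encode choices such as initial weights, transfer functions, architecture, and learning rule, and `onemax` would be replaced by a validation-based score.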

Distance Metric Learning through Minimization of the Free Energy

Jun 10, 2021

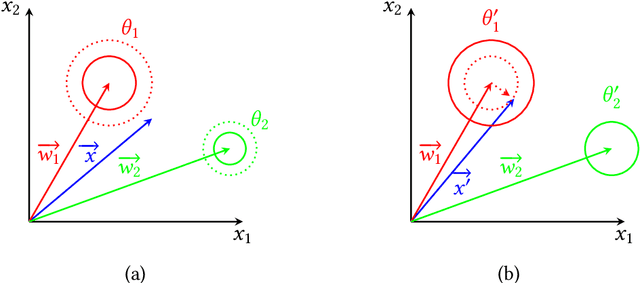

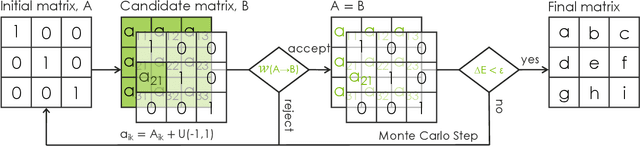

Abstract: Distance metric learning has attracted a lot of interest for solving machine learning and pattern recognition problems over the last decades. In this work, we present a simple approach based on concepts from statistical physics to learn an optimal distance metric for a given problem. We formulate the task as a typical statistical physics problem: distances between patterns represent constituents of a physical system, and the objective function corresponds to energy. We then express the problem as the minimization of the free energy of a complex system, which is equivalent to distance metric learning. As for many problems in physics, we propose an approach based on Metropolis Monte Carlo to find the best distance metric. This provides a natural way to learn the distance metric, where the learning process can be intuitively seen as stretching and rotating the metric space until some heuristic is satisfied. The proposed method can handle a wide variety of constraints, including those with spurious local minima. The approach works surprisingly well with stochastic nearest neighbors from neighborhood component analysis (NCA). Experimental results on artificial and real-world data sets reveal a clear superiority over a number of state-of-the-art distance metric learning methods for nearest-neighbor classification.
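
The combination of Metropolis Monte Carlo with the NCA objective can be sketched as a random walk over a linear map `A`, where perturbing `A` stretches and rotates the space. This is a minimal sketch under stated assumptions, not the paper's exact free-energy formulation: the energy is taken to be the negative NCA score (expected number of correctly classified points under stochastic nearest neighbors), proposals are small Gaussian perturbations of `A`, the temperature is fixed, and all function and parameter names are hypothetical.

```python
import numpy as np


def nca_score(A, X, y):
    """NCA objective: expected number of points whose stochastic neighbor shares their label."""
    Z = X @ A.T
    d2 = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(-1)
    np.fill_diagonal(d2, np.inf)               # a point never picks itself as neighbor
    logits = -d2
    logits -= logits.max(axis=1, keepdims=True)
    P = np.exp(logits)
    P /= P.sum(axis=1, keepdims=True)          # row i: neighbor-selection probabilities p_ij
    same = y[:, None] == y[None, :]
    return (P * same).sum()


def metropolis_metric(X, y, steps=2000, step_size=0.05, temperature=0.01, seed=0):
    rng = np.random.default_rng(seed)
    A = np.eye(X.shape[1])                     # start from the Euclidean metric
    energy = -nca_score(A, X, y)               # assumed energy: negative NCA score
    best_A, best_E = A.copy(), energy
    for _ in range(steps):
        # Small random perturbation of A: locally stretches and rotates the space.
        A_new = A + step_size * rng.standard_normal(A.shape)
        E_new = -nca_score(A_new, X, y)
        # Metropolis rule: always accept downhill moves, uphill with prob exp(-dE / T).
        if E_new <= energy or rng.random() < np.exp(-(E_new - energy) / temperature):
            A, energy = A_new, E_new
            if energy < best_E:
                best_A, best_E = A.copy(), energy
    return best_A, -best_E
```

The learned metric is d(x, x') = ||A x - A x'||, that is, a Mahalanobis distance with matrix AᵀA. Tracking the best state visited keeps the returned score at least as good as the Euclidean starting point.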

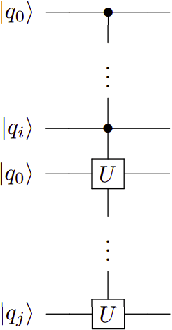

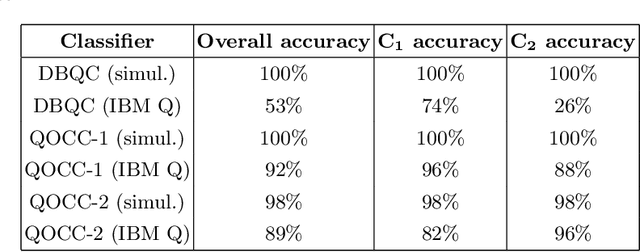

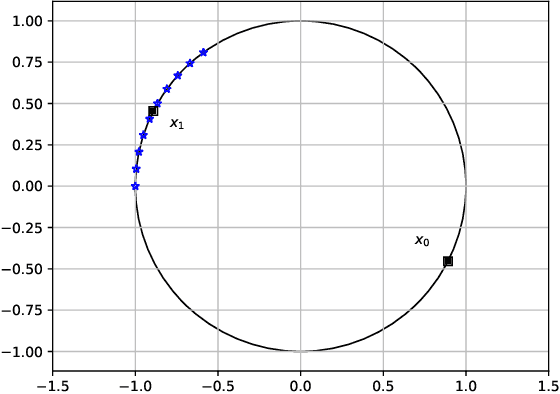

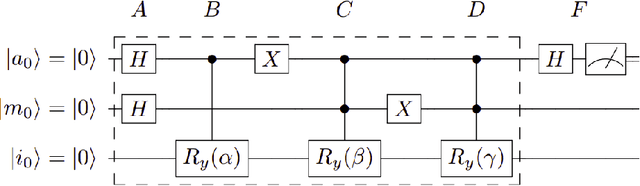

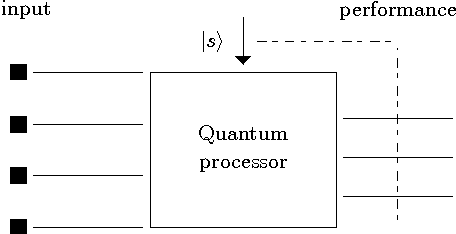

Quantum One-class Classification With a Distance-based Classifier

Jul 31, 2020

Abstract: The Distance-based Quantum Classifier (DBQC) is a quantum machine learning model for pattern recognition. However, DBQC has low accuracy on real, noisy quantum processors. We present a modification of DBQC named Quantum One-class Classifier (QOCC) to improve accuracy on NISQ (Noisy Intermediate-Scale Quantum) computers. Experimental results, obtained by running the proposed classifier on a quantum computer provided through IBM Quantum Experience, show that QOCC achieves higher accuracy than DBQC.
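
For intuition about what a distance-based classifier computes, the sketch below evaluates classically the score usually attributed to this family of interference-based classifiers: with unit-norm vectors, each training point contributes 1 - |x̃ - xₘ|²/4 to its class, and class scores are normalized into probabilities. This is an idealized, noise-free illustration under that assumption, not the QOCC circuit; in particular, the one-class score (average kernel against a single target class, which a hypothetical threshold could then turn into an accept/reject decision) is an illustrative guess, not the paper's decision rule.

```python
import numpy as np


def normalize(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def kernel(x_test, x_train):
    # For unit vectors, 1 - |a - b|^2 / 4 equals (1 + a.b) / 2 and lies in [0, 1].
    return 1.0 - np.sum((x_test - x_train) ** 2) / 4.0


def dbqc_predict(x_test, X_train, y_train):
    """Idealized class probabilities: summed kernel per class, normalized to 1."""
    x_test = normalize(x_test)
    scores = {}
    for x, label in zip(X_train, y_train):
        scores[label] = scores.get(label, 0.0) + kernel(x_test, normalize(x))
    total = sum(scores.values())
    return {label: s / total for label, s in scores.items()}


def one_class_score(x_test, X_target):
    """Hypothetical one-class score: mean kernel against the target class only."""
    x_test = normalize(x_test)
    return float(np.mean([kernel(x_test, normalize(x)) for x in X_target]))
```

For example, a test point identical to the single target vector scores 1.0, while an orthogonal one scores 0.5.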

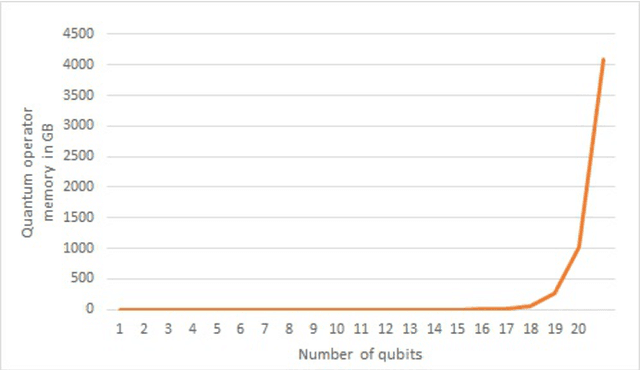

Quantum perceptron over a field and neural network architecture selection in a quantum computer

Jan 29, 2016

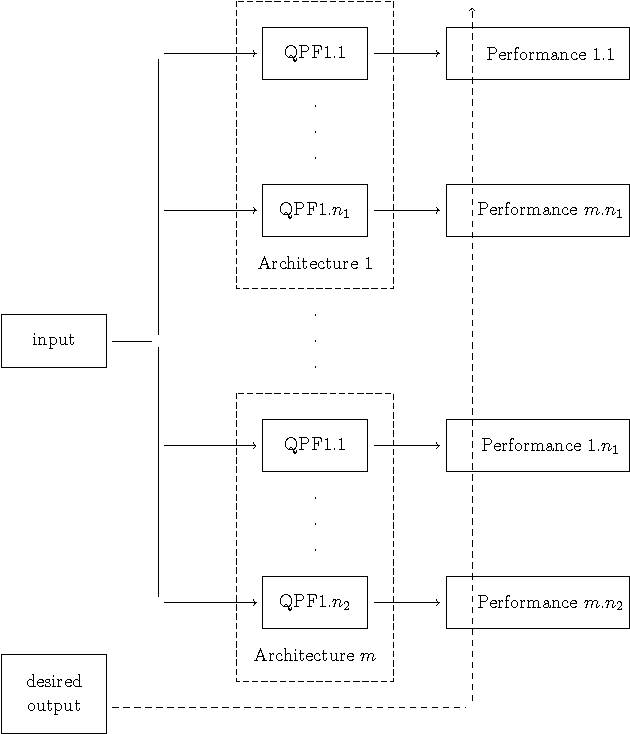

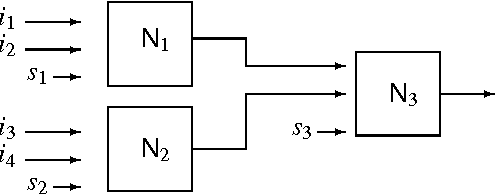

Abstract: In this work, we propose a quantum neural network named quantum perceptron over a field (QPF). Quantum computers are not yet a reality, and the models and algorithms proposed in this work cannot be simulated on actual (that is, classical) computers. QPF is a direct generalization of a classical perceptron and solves some drawbacks found in previous models of quantum perceptrons. We also present a learning algorithm named Superposition-based Architecture Learning algorithm (SAL) that optimizes neural network weights and architectures. SAL searches for the best architecture in a finite set of neural network architectures in time linear in the number of patterns in the training set. SAL is the first learning algorithm to determine neural network architectures in polynomial time. This speedup is obtained through quantum parallelism and a non-linear quantum operator.
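
Since QPF is described as a direct generalization of a classical perceptron, the classical baseline may be a useful reference point. The sketch below is the standard Rosenblatt perceptron learning rule over real numbers, trained on the logical AND function; it is purely classical and does not model QPF, its field structure, or SAL. The function names and the AND example are illustrative choices.

```python
def predict(w, b, x):
    # Threshold unit: fire (1) when the weighted sum plus bias is positive.
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Rosenblatt rule: adjust weights only on misclassified samples."""
    n = len(samples[0][0])
    w = [0.0] * n
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            err = target - predict(w, b, x)
            if err:
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                errors += 1
        if errors == 0:          # converged: every sample classified correctly
            break
    return w, b
```

For a linearly separable problem such as AND, the perceptron convergence theorem guarantees that this loop stops after a finite number of updates.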

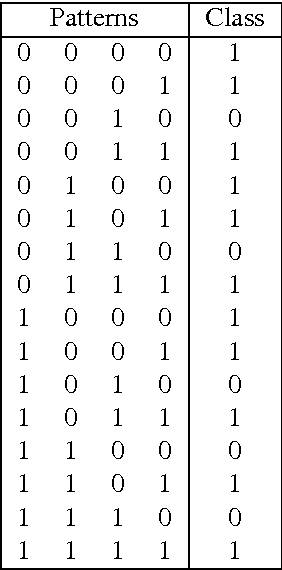

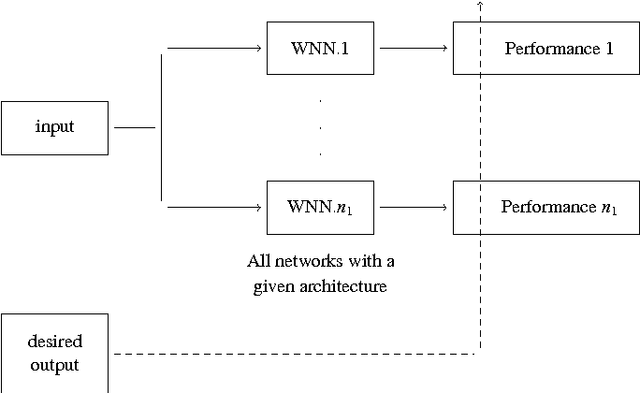

Weightless neural network parameters and architecture selection in a quantum computer

Jan 12, 2016

Abstract: Training artificial neural networks requires a tedious empirical evaluation to determine a suitable neural network architecture. To avoid this empirical process, several techniques have been proposed to automate architecture selection. In this paper, we propose a method to perform parameter and architecture selection for a quantum weightless neural network (qWNN). Architecture selection is performed through the learning procedure of a qWNN, using a learning algorithm based on the principle of quantum superposition and a non-linear quantum operator. The main advantage of the proposed method is that it performs a global, rather than local, search in the space of qWNN architectures and parameters.
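
For readers unfamiliar with weightless networks, the sketch below shows a classical RAM-based discriminator in the style of WiSARD. It is an illustration under stated assumptions, not the qWNN from the paper: there are no weights, learning simply writes the addresses seen during training into lookup tables, and the tuple size (number of input bits feeding each RAM node) is an example of the kind of architectural parameter that otherwise has to be chosen empirically. The class and method names are hypothetical.

```python
import random


class WisardDiscriminator:
    """Classical RAM-based weightless discriminator (WiSARD-style sketch)."""

    def __init__(self, n_inputs, tuple_size, seed=0):
        rng = random.Random(seed)
        order = list(range(n_inputs))
        rng.shuffle(order)    # fixed pseudo-random mapping of input bits to RAM nodes
        self.tuples = [order[i:i + tuple_size] for i in range(0, n_inputs, tuple_size)]
        self.rams = [set() for _ in self.tuples]   # each RAM remembers addresses it has seen

    def _addresses(self, bits):
        # Each RAM node is addressed by the values of its own subset of input bits.
        return [tuple(bits[i] for i in t) for t in self.tuples]

    def train(self, bits):
        # "Learning" writes a 1 at the addressed location of every RAM node.
        for ram, addr in zip(self.rams, self._addresses(bits)):
            ram.add(addr)

    def response(self, bits):
        # Number of RAM nodes that recognize their part of the input.
        return sum(addr in ram for ram, addr in zip(self.rams, self._addresses(bits)))
```

With 8 inputs and a tuple size of 2, the discriminator has 4 RAM nodes: a trained pattern gets the maximum response of 4, while its bitwise complement gets 0.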
