Adenilton J. da Silva

A divide-and-conquer algorithm for quantum state preparation

Aug 04, 2020

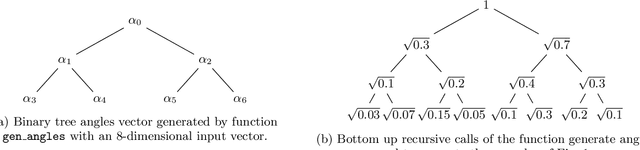

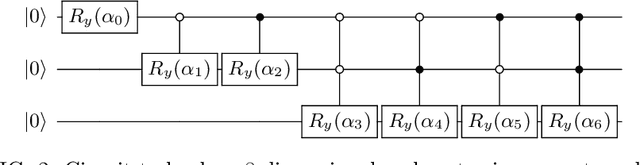

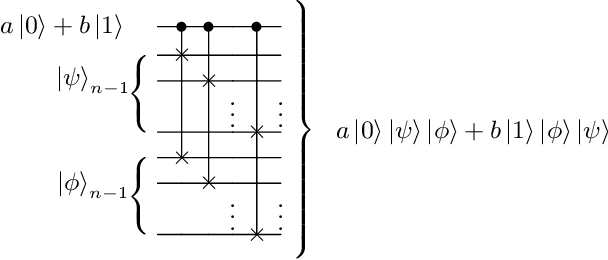

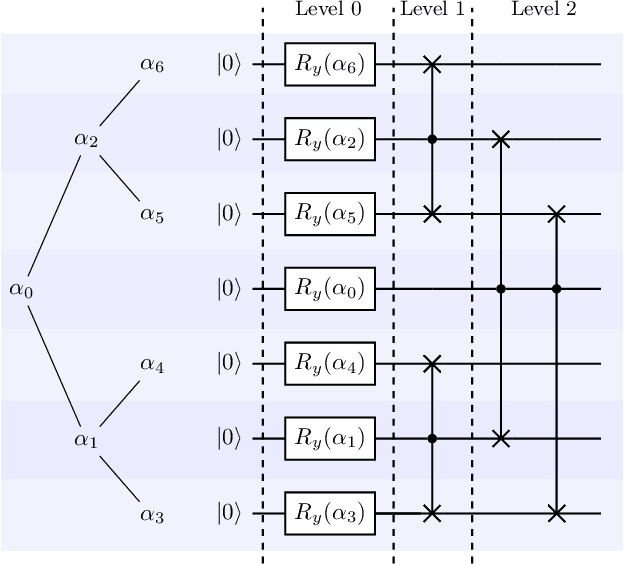

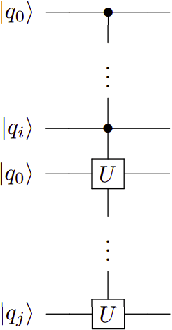

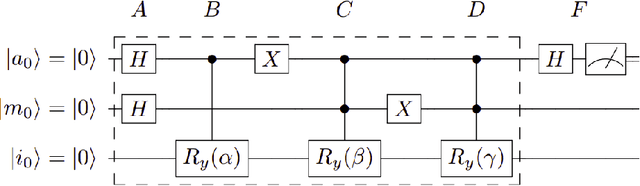

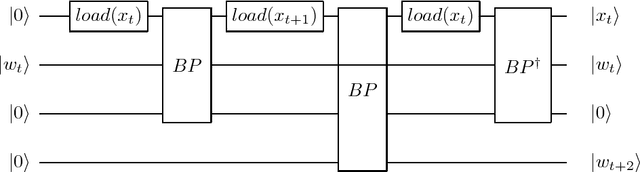

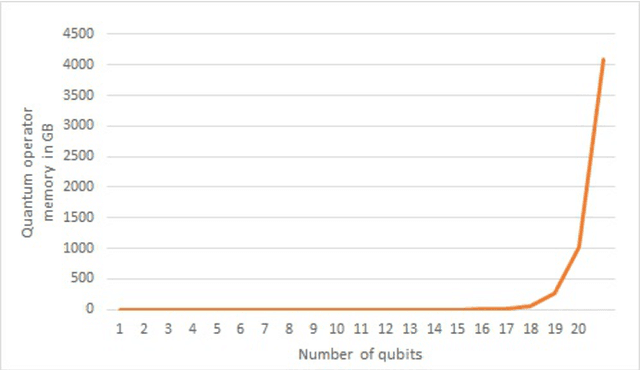

Abstract: Advantages in several fields of research and industry are expected with the rise of quantum computers. However, the computational cost of loading classical data into quantum computers can impose restrictions on possible quantum speedups. Known algorithms to create arbitrary quantum states require quantum circuits with depth O(N) to load an N-dimensional vector. Here, we show that it is possible to load an N-dimensional vector with a quantum circuit with polylogarithmic depth and entangled information in ancillary qubits. Results show that we can efficiently load data in quantum devices using a divide-and-conquer strategy to exchange computational time for space. We demonstrate a proof of concept on a real quantum device and present two applications for quantum machine learning. We expect that this new loading strategy will allow the quantum speedup of tasks that require loading a significant volume of information into quantum devices.
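
As a rough illustration of the classical side of amplitude loading, the sketch below builds the binary tree of rotation angles for a non-negative real vector and rebuilds the amplitudes from it. It is not the circuit from the paper: the function names `angle_tree` and `amplitudes_from_tree` are hypothetical, the vector is assumed to have unit norm and a power-of-two length, and the example values are made up. The N - 1 angles are the quantities a sequential loader applies one after another, which is where the O(N) depth comes from; a divide-and-conquer loader would instead prepare them in parallel on extra qubits.

```python
import math


def angle_tree(amplitudes):
    """Build RY angles for a non-negative real vector of length 2**n.

    Returns a list of levels, root first; level k holds 2**k angles.
    """
    size = len(amplitudes)
    if size < 2 or size & (size - 1):
        raise ValueError("length must be a power of two, at least 2")
    norms = [float(a) for a in amplitudes]
    levels = []
    while len(norms) > 1:
        pairs = [(norms[i], norms[i + 1]) for i in range(0, len(norms), 2)]
        # RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>
        levels.insert(0, [2.0 * math.atan2(right, left) for left, right in pairs])
        # Each parent stores the norm of its two children.
        norms = [math.hypot(left, right) for left, right in pairs]
    return levels


def amplitudes_from_tree(levels):
    """Rebuild the amplitudes by splitting each node with its angle."""
    amps = [1.0]  # assumes the original vector had unit norm
    for angles in levels:
        amps = [a * f(theta / 2.0)
                for a, theta in zip(amps, angles)
                for f in (math.cos, math.sin)]
    return amps


# Illustrative 8-dimensional state (probabilities sum to 1).
probabilities = [0.03, 0.07, 0.15, 0.05, 0.10, 0.30, 0.20, 0.10]
state = [math.sqrt(p) for p in probabilities]
tree = angle_tree(state)
rebuilt = amplitudes_from_tree(tree)
print([len(level) for level in tree])  # [1, 2, 4]
```

The tree has log2(N) levels, so angles within one level are independent of each other; that independence is what a time-for-space trade can exploit.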

Quantum One-class Classification With a Distance-based Classifier

Jul 31, 2020

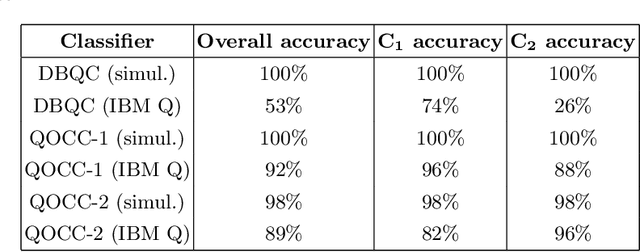

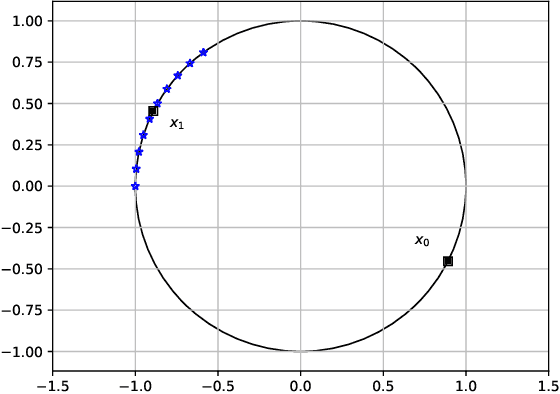

Abstract: The Distance-based Quantum Classifier (DBQC) is a quantum machine learning model for pattern recognition. However, DBQC has low accuracy on real noisy quantum processors. We present a modification of DBQC named Quantum One-class Classifier (QOCC) to improve accuracy on NISQ (Noisy Intermediate-Scale Quantum) computers. Experimental results were obtained by running the proposed classifier on a computer provided by IBM Quantum Experience and show that QOCC has improved accuracy over DBQC.

Quantum ensemble of trained classifiers

Jul 18, 2020

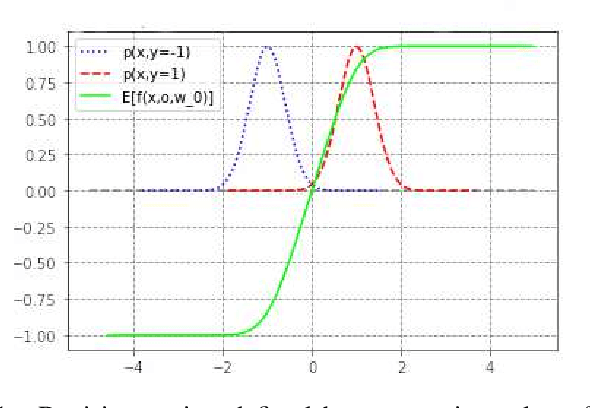

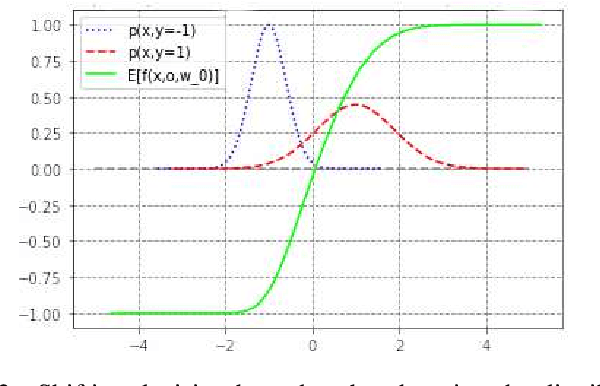

Abstract: Through superposition, a quantum computer is capable of representing a set of states that is exponentially large in the number of qubits available. Quantum machine learning is a subfield of quantum computing that explores the potential of quantum computing to enhance machine learning algorithms. An approach to quantum machine learning named quantum ensembles of quantum classifiers consists of using superposition to build an exponentially large ensemble of classifiers to be trained with an optimization-free learning algorithm. In this work, we investigate how the quantum ensemble works with the addition of an optimization method. Experiments using benchmark datasets show the improvements obtained with the addition of the optimization step.

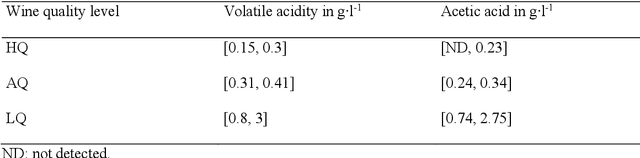

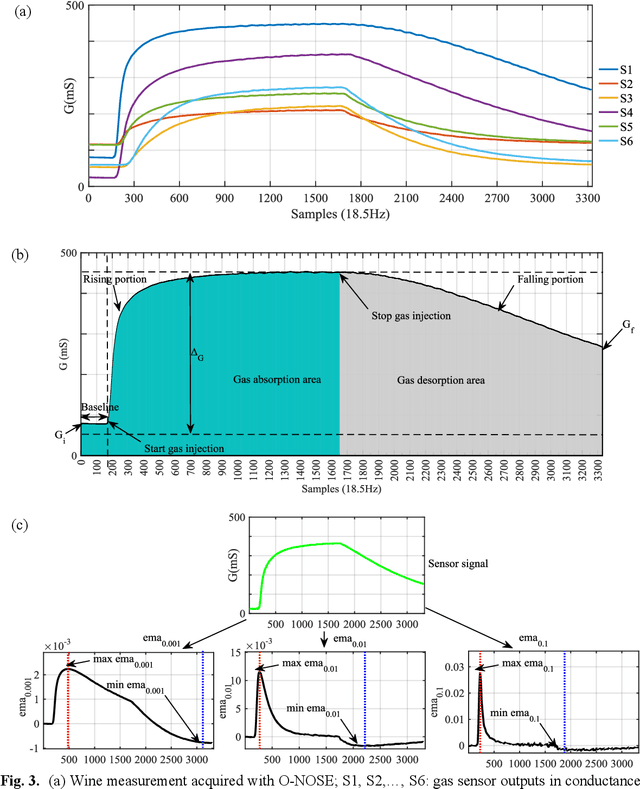

Wine quality rapid detection using a compact electronic nose system: application focused on spoilage thresholds by acetic acid

Jan 16, 2020

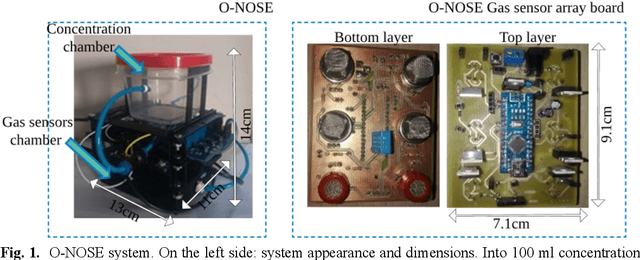

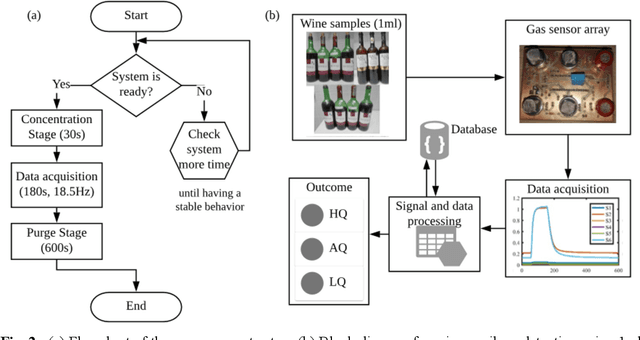

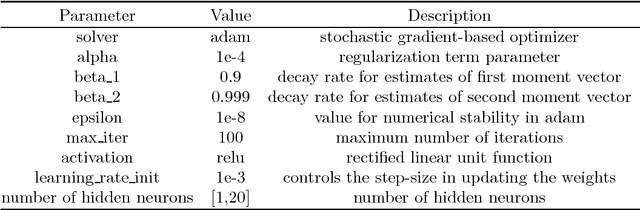

Abstract: It is crucial for the wine industry to have methods like electronic nose systems (E-Noses) for real-time monitoring of acetic acid thresholds in wines, preventing spoilage or determining quality. In this paper, we show that a portable and compact self-developed E-Nose, based on thin-film semiconductor (SnO2) sensors and trained with a deep Multilayer Perceptron (MLP) neural network, can perform early detection of wine spoilage thresholds in routine wine quality control tasks. To obtain rapid, online detection, we propose a rising-window method focused on raw data processing to find an early portion of the sensor signals with the best recognition performance. Our approach was compared with the conventional approach employed in E-Noses for gas recognition, which involves feature extraction and selection techniques for preprocessing data, followed by a Support Vector Machine (SVM) classifier. The results show that it is possible to classify three wine spoilage levels 2.7 seconds after the gas injection point, implying a methodology 63 times faster than the conventional approach in our experimental setup.
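
One way to read the rising-window idea is as a search over windows that all start at the first sample and grow in length, keeping the shortest window that reaches the best score. The sketch below follows that reading only; it is an assumption, not the procedure from the paper. The names `rising_window_search` and `nearest_centroid_accuracy` are hypothetical, a nearest-centroid rule scored on its own training data stands in for the MLP, and the signals are toy values.

```python
def nearest_centroid_accuracy(samples, labels):
    """Toy stand-in for the classifier: training accuracy of nearest centroid."""
    centroids = {}
    for label in sorted(set(labels)):
        rows = [s for s, l in zip(samples, labels) if l == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def predict(sample):
        return min(
            centroids,
            key=lambda label: sum(
                (x - c) ** 2 for x, c in zip(sample, centroids[label])
            ),
        )

    hits = sum(predict(s) == l for s, l in zip(samples, labels))
    return hits / len(labels)


def rising_window_search(signals, labels, window_ends,
                         score=nearest_centroid_accuracy):
    """Return (end, score) for the shortest window with the best score."""
    best_end, best_score = None, -1.0
    for end in window_ends:  # every window starts at sample 0 and grows
        truncated = [signal[:end] for signal in signals]
        current = score(truncated, labels)
        if current > best_score:  # strict '>' keeps the earliest window on ties
            best_end, best_score = end, current
    return best_end, best_score


# Toy raw signals: the two classes only separate from the third sample on.
signals = [[0, 0, 1.0, 1.0], [0, 0, 1.1, 1.2], [0, 0, 3.0, 3.0], [0, 0, 3.1, 2.9]]
labels = ["low", "low", "high", "high"]
best = rising_window_search(signals, labels, window_ends=[2, 3, 4])
```

In a real setting the score would come from held-out data rather than training accuracy, and `window_ends` would be expressed in samples after the gas injection point.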

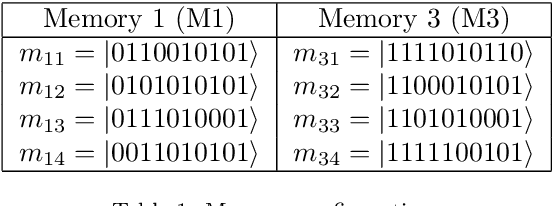

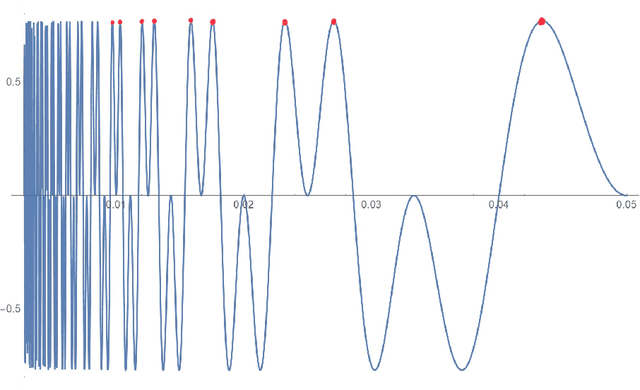

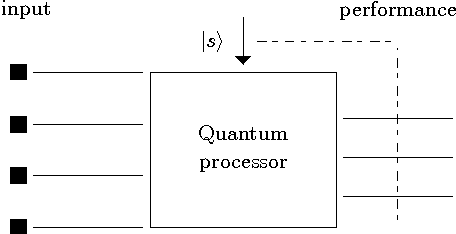

Parametric Probabilistic Quantum Memory

Jan 11, 2020

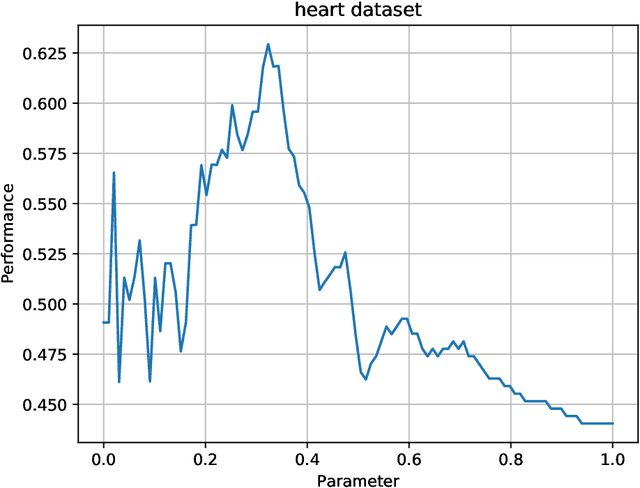

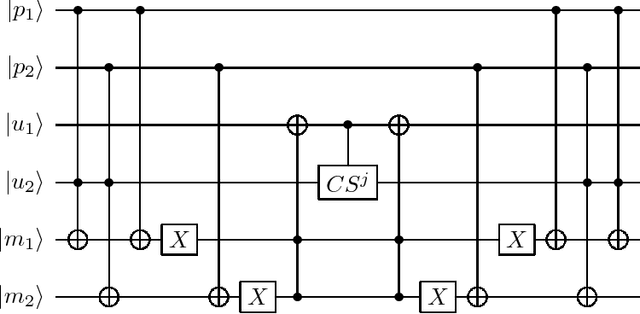

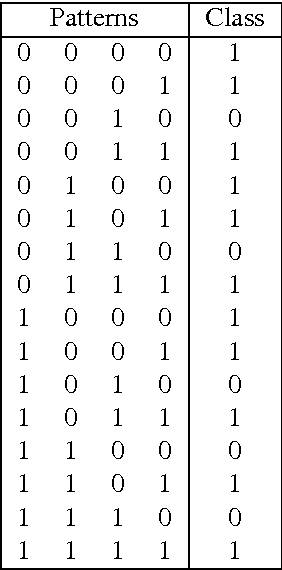

Abstract: Probabilistic Quantum Memory (PQM) is a data structure that computes the distance from a binary input to all binary patterns stored in superposition in the memory. This data structure allows the development of heuristics to speed up artificial neural network architecture selection. In this work, we propose an improved parametric version of the PQM to perform pattern classification, and we also present a PQM quantum circuit suitable for Noisy Intermediate-Scale Quantum (NISQ) computers. We present a classical evaluation of a parametric PQM network classifier on public benchmark datasets. We also perform experiments to verify the viability of PQM on a 5-qubit quantum computer.
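
The distance computation can be mimicked classically for small memories. The sketch below assumes the retrieval rule usually given for the original (non-parametric) PQM, where the probability of measuring the control qubit in state 0 is the average of cos²(π d / (2n)) over the stored patterns, with d the Hamming distance to the query and n the pattern length. The parametric variant proposed in the paper is not reproduced here, and the function names are hypothetical.

```python
import math


def hamming_distance(a, b):
    """Number of positions where two equal-length bit strings differ."""
    if len(a) != len(b):
        raise ValueError("patterns must have the same length")
    return sum(x != y for x, y in zip(a, b))


def retrieval_probability(query, patterns):
    """Classical evaluation of the assumed PQM control-qubit probability.

    Averages cos^2(pi * d / (2 * n)) over all stored patterns, so a memory
    close to the query in Hamming distance yields a value near 1.
    """
    n = len(query)
    total = sum(
        math.cos(math.pi * hamming_distance(query, p) / (2 * n)) ** 2
        for p in patterns
    )
    return total / len(patterns)


# Illustrative memories: one clustered around the query, one far from it.
near_memory = ["1010", "1011", "1110"]
far_memory = ["0101", "0100", "0001"]
p_near = retrieval_probability("1010", near_memory)
p_far = retrieval_probability("1010", far_memory)
```

A classifier built on this rule could keep one memory per class and assign the query to the class whose memory gives the highest probability; that usage is a suggestion, not a description of the paper's experiments.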

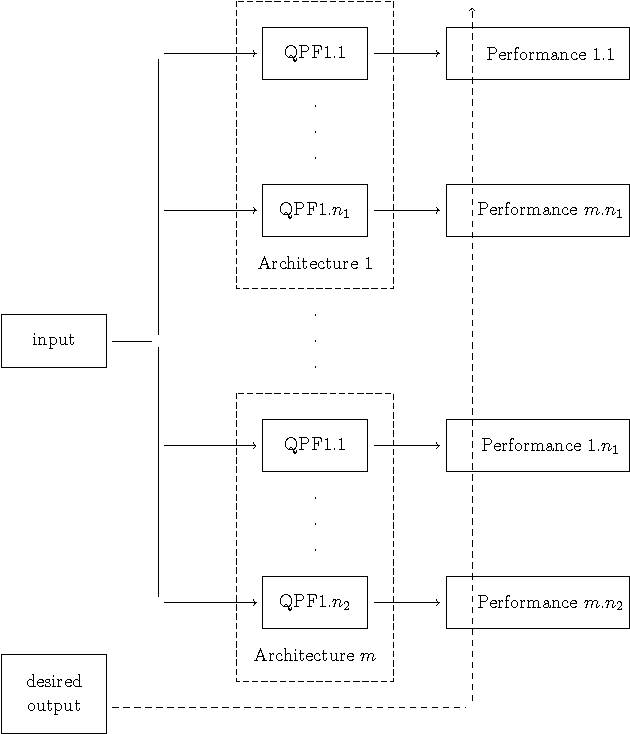

Quantum enhanced cross-validation for near-optimal neural networks architecture selection

Aug 27, 2018

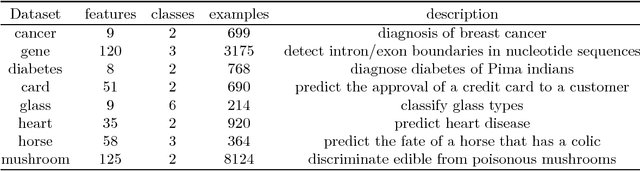

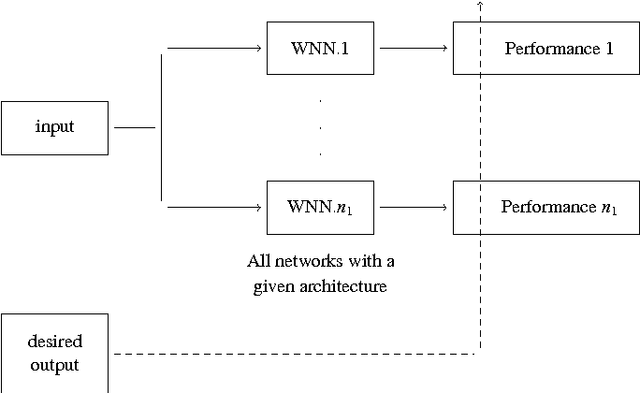

Abstract: This paper proposes a quantum-classical algorithm to evaluate and select classical artificial neural network architectures. The proposed algorithm is based on a probabilistic quantum memory and the possibility of training artificial neural networks in superposition. We obtain an exponential quantum speedup in the evaluation of neural networks. We also verify through a reduced experimental analysis that the proposed algorithm can be used to select near-optimal neural networks.

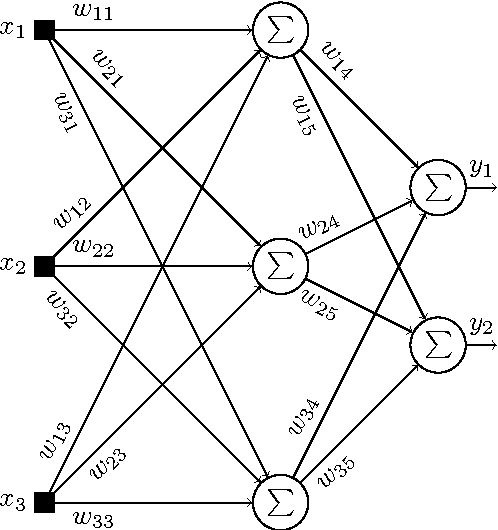

Quantum perceptron over a field and neural network architecture selection in a quantum computer

Jan 29, 2016

Abstract: In this work, we propose a quantum neural network named quantum perceptron over a field (QPF). Quantum computers are not yet a reality, and the models and algorithms proposed in this work cannot be simulated on current (or classical) computers. QPF is a direct generalization of a classical perceptron and solves some drawbacks found in previous models of quantum perceptrons. We also present a learning algorithm named Superposition based Architecture Learning algorithm (SAL) that optimizes the neural network weights and architectures. SAL searches for the best architecture in a finite set of neural network architectures in time linear in the number of patterns in the training set. SAL is the first learning algorithm to determine neural network architectures in polynomial time. This speedup is obtained by the use of quantum parallelism and a non-linear quantum operator.

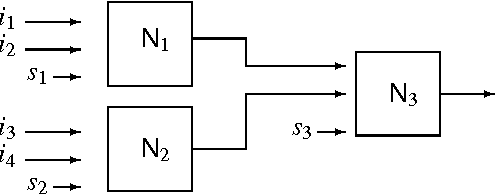

Weightless neural network parameters and architecture selection in a quantum computer

Jan 12, 2016

Abstract: Training artificial neural networks requires a tedious empirical evaluation to determine a suitable neural network architecture. To avoid this empirical process, several techniques have been proposed to automatise the architecture selection process. In this paper, we propose a method to perform parameter and architecture selection for a quantum weightless neural network (qWNN). The architecture selection is performed through the learning procedure of a qWNN with a learning algorithm that uses the principle of quantum superposition and a non-linear quantum operator. The main advantage of the proposed method is that it performs a global search in the space of qWNN architectures and parameters rather than a local search.
