Tejas Borkar

Defending against Adversarial Attacks through Resilient Feature Regeneration

Jun 08, 2019

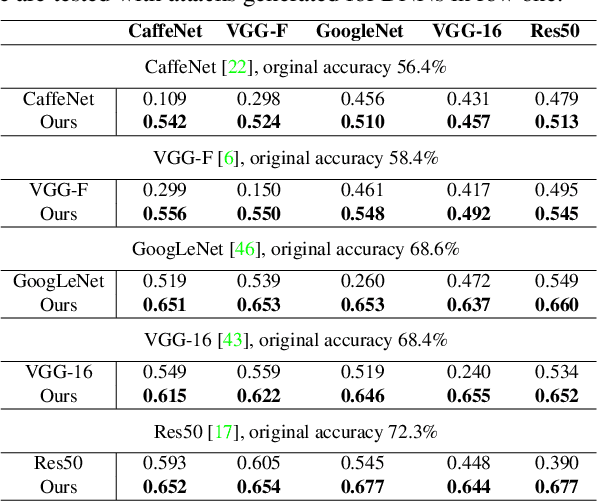

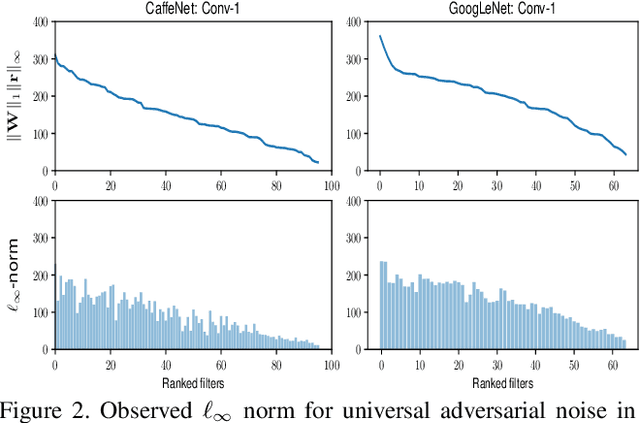

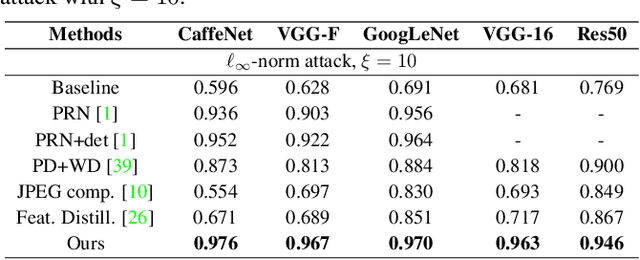

Abstract: Deep neural network (DNN) predictions have been shown to be vulnerable to carefully crafted adversarial perturbations. Specifically, so-called universal adversarial perturbations are image-agnostic perturbations that can be added to any image and can fool a target network into making erroneous predictions. Departing from existing adversarial defense strategies, which work in the image domain, we present a novel defense which operates in the DNN feature domain and effectively defends against such universal adversarial attacks. Our approach identifies pre-trained convolutional features that are most vulnerable to adversarial noise and deploys defender units which transform (regenerate) these DNN filter activations into noise-resilient features, guarding against unseen adversarial perturbations. The proposed defender units are trained using a target loss on synthetic adversarial perturbations, which we generate with a novel efficient synthesis method. We validate the proposed method for different DNN architectures, and demonstrate that it outperforms existing defense strategies across network architectures by more than 10% in restored accuracy. Moreover, we demonstrate that the approach also improves resilience of DNNs to other unseen adversarial attacks.
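The idea of regenerating only the most vulnerable features can be illustrated with a small sketch. It is not the paper's implementation: the sensitivity score (mean absolute activation change between clean and perturbed inputs), the function names `rank_by_sensitivity` and `regenerate`, and the clipping "defender" are all assumptions chosen for illustration, standing in for the ranking criterion and the trained defender units that the abstract does not specify.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def rank_by_sensitivity(clean: Sequence[Vector],
                        perturbed: Sequence[Vector]) -> List[int]:
    """Return channel indices sorted from most to least sensitive.

    Sensitivity is the mean absolute change of a channel's activation
    between clean and perturbed inputs (an illustrative proxy only).
    """
    n_channels = len(clean[0])
    scores = [0.0] * n_channels
    for c_row, p_row in zip(clean, perturbed):
        for ch in range(n_channels):
            scores[ch] += abs(p_row[ch] - c_row[ch])
    scores = [s / len(clean) for s in scores]
    return sorted(range(n_channels), key=lambda ch: scores[ch], reverse=True)


def regenerate(features: Vector,
               vulnerable: Sequence[int],
               defender: Callable[[float], float]) -> Vector:
    """Apply `defender` to the selected channels; pass the rest through."""
    chosen = set(vulnerable)
    return [defender(v) if ch in chosen else v
            for ch, v in enumerate(features)]


# Toy activations: 2 inputs x 3 channels (hypothetical values).
clean = [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]]
perturbed = [[1.1, 4.0, 3.5], [0.9, 5.0, 3.5]]

ranking = rank_by_sensitivity(clean, perturbed)
top = ranking[:1]  # regenerate only the single most sensitive channel
# A clipping function stands in for a trained defender unit.
regenerated = regenerate(perturbed[0], top, lambda v: min(v, 2.5))
```

In this toy data channel 1 changes the most under perturbation, so it is the only one passed through the stand-in defender; the other activations are left untouched.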

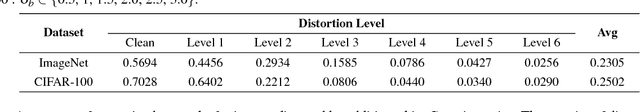

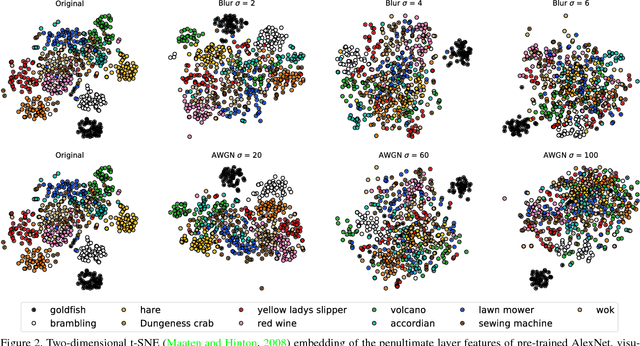

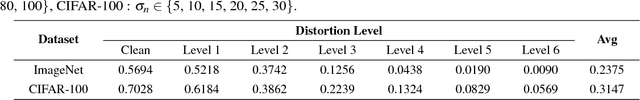

DeepCorrect: Correcting DNN models against Image Distortions

Jan 09, 2018

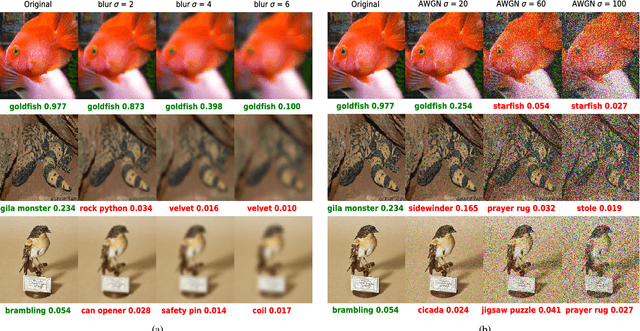

Abstract: In recent years, the widespread use of deep neural networks (DNNs) has facilitated great improvements in performance for computer vision tasks like image classification and object recognition. In most realistic computer vision applications, an input image undergoes some form of image distortion such as blur and additive noise during image acquisition or transmission. Deep networks trained on pristine images perform poorly when tested on such distortions. In this paper, we evaluate the effect of image distortions like Gaussian blur and additive noise on the activations of pre-trained convolutional filters. We propose a metric to identify the most noise-susceptible convolutional filters and rank them in order of the highest gain in classification accuracy upon correction. In our proposed approach, called DeepCorrect, we apply small stacks of convolutional layers with residual connections at the output of these ranked filters and train them to correct the worst distortion-affected filter activations, whilst leaving the rest of the pre-trained filter outputs in the network unchanged. Performance results show that applying DeepCorrect models for common vision tasks like image classification (CIFAR-100, ImageNet), object recognition (Caltech-101, Caltech-256) and scene classification (SUN-397) significantly improves the robustness of DNNs against distorted images and outperforms the alternative approach of network fine-tuning.
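A residual correction applied only to selected filter outputs can be sketched as follows. This is a simplified illustration under stated assumptions, not the DeepCorrect architecture: it uses a single hand-set 1-D kernel per channel instead of a trained stack of 2-D convolutional layers, and the names `conv1d_same`, `residual_correct`, and `apply_correction` are hypothetical.

```python
from typing import Dict, List, Sequence

Signal = List[float]


def conv1d_same(x: Signal, kernel: Sequence[float]) -> Signal:
    """Zero-padded 1-D correlation with an odd-length kernel (same length out)."""
    half = len(kernel) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(x):  # positions outside the signal count as zero
                acc += w * x[j]
        out.append(acc)
    return out


def residual_correct(x: Signal, kernel: Sequence[float]) -> Signal:
    """Residual unit: output = input + learned correction term."""
    return [xi + ri for xi, ri in zip(x, conv1d_same(x, kernel))]


def apply_correction(feature_map: List[Signal],
                     kernels: Dict[int, Sequence[float]]) -> List[Signal]:
    """Correct only channels that have a kernel; copy all others unchanged."""
    return [residual_correct(ch, kernels[i]) if i in kernels else list(ch)
            for i, ch in enumerate(feature_map)]


# Toy feature map: 2 channels x 3 positions (hypothetical values).
feature_map = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]

# An all-zero kernel makes the residual unit an identity mapping.
identity = apply_correction(feature_map, {0: [0.0, 0.0, 0.0]})

# Correct channel 1 only; channel 0 passes through untouched.
corrected = apply_correction(feature_map, {1: [0.5, -1.0, 0.5]})
```

One reason a residual form suits this setting is visible in the `identity` case: with a zero correction term the unit reproduces the pre-trained activation exactly, so a correction unit only has to model the deviation caused by the distortion.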

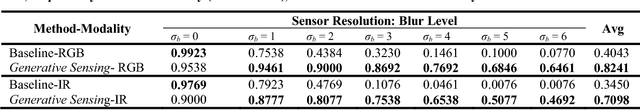

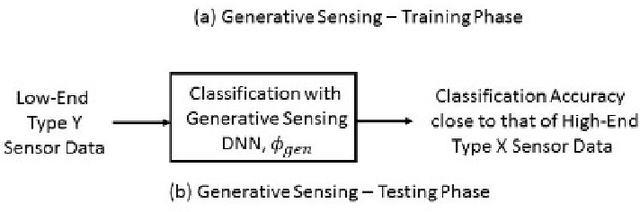

Generative Sensing: Transforming Unreliable Sensor Data for Reliable Recognition

Jan 08, 2018

Abstract: This paper introduces a deep-learning-enabled generative sensing framework which integrates low-end sensors with computational intelligence to attain a high recognition accuracy on par with that attained with high-end sensors. The proposed generative sensing framework aims at transforming low-end, low-quality sensor data into higher quality sensor data in terms of achieved classification accuracy. The low-end data can be transformed into higher quality data of the same modality or into data of another modality. Unlike existing methods for image generation, the proposed framework is based on discriminative models and aims to maximize the recognition accuracy rather than a similarity measure. This is achieved through the introduction of selective feature regeneration in a deep neural network (DNN). The proposed generative sensing will essentially transform low-quality sensor data into high-quality information for robust perception. Results are presented to illustrate the performance of the proposed framework.
