Junseok Chae

Generative Sensing: Transforming Unreliable Sensor Data for Reliable Recognition

Jan 08, 2018

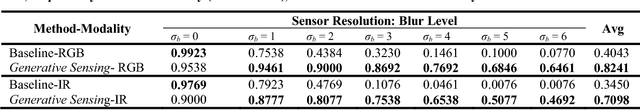

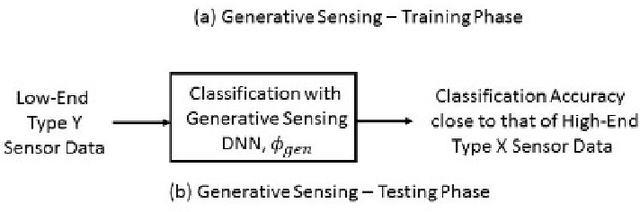

Abstract: This paper introduces a deep-learning-enabled generative sensing framework that integrates low-end sensors with computational intelligence to attain recognition accuracy on par with that of high-end sensors. The framework transforms low-end, low-quality sensor data into higher-quality sensor data, where quality is measured by the classification accuracy achieved. The low-end data can be transformed into higher-quality data of the same modality or into data of another modality. Unlike existing image-generation methods, the proposed framework is based on discriminative models and aims to maximize recognition accuracy rather than a similarity measure. This is achieved by introducing selective feature regeneration in a deep neural network (DNN). Generative sensing thus transforms low-quality sensor data into high-quality information for robust perception. Results are presented to illustrate the performance of the proposed framework.
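The abstract does not spell out how selective feature regeneration works, so the following is only a minimal sketch of the general idea under stated assumptions, not the authors' implementation. It assumes that (a) features are plain per-channel vectors, (b) the channels to regenerate are those that change most between clean and degraded inputs, (c) the correction is a per-channel scale and bias, and (d) the classifier is a frozen linear layer `W`. All function names (`select_channels`, `regenerate`, `train_regeneration`) are hypothetical. The one point taken from the abstract is the objective: the correction is fitted by minimizing classification cross-entropy, not a similarity measure to the clean features.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(feats, labels, W):
    """Mean cross-entropy of the frozen linear classifier W on the given features."""
    probs = softmax(feats @ W)
    return -np.log(probs[np.arange(len(labels)), labels] + 1e-12).mean()

def select_channels(clean_feats, degraded_feats, fraction=0.5):
    """Assumed selection rule: keep the channels that change most under degradation."""
    sensitivity = np.abs(clean_feats - degraded_feats).mean(axis=0)
    k = max(1, int(round(fraction * sensitivity.size)))
    return np.argsort(sensitivity)[::-1][:k]

def regenerate(feats, selected, scale, bias):
    """Apply a per-channel affine correction to the selected channels only."""
    out = feats.copy()
    out[:, selected] = feats[:, selected] * scale + bias
    return out

def train_regeneration(degraded_feats, labels, W, selected, lr=0.01, steps=500):
    """Fit scale and bias by gradient descent on cross-entropy; W stays frozen."""
    scale = np.ones(len(selected))
    bias = np.zeros(len(selected))
    n = degraded_feats.shape[0]
    onehot = np.eye(W.shape[1])[labels]
    x_sel = degraded_feats[:, selected]
    for _ in range(steps):
        feats = regenerate(degraded_feats, selected, scale, bias)
        probs = softmax(feats @ W)
        grad_feats = (probs - onehot) @ W.T / n  # dLoss/dFeatures
        g_sel = grad_feats[:, selected]
        scale -= lr * (g_sel * x_sel).sum(axis=0)
        bias -= lr * g_sel.sum(axis=0)
    return scale, bias
```

Because only the selected channels are touched and the classifier weights are never updated, the sketch keeps the remaining features exactly as the degraded input produced them. A real DNN version would presumably replace the affine correction with small learned units inside the network, but that detail is an assumption here.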
