Defending against Adversarial Attacks through Resilient Feature Regeneration


Jun 08, 2019

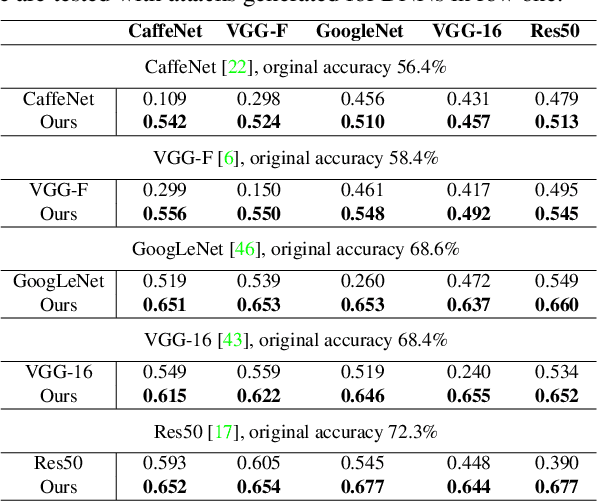

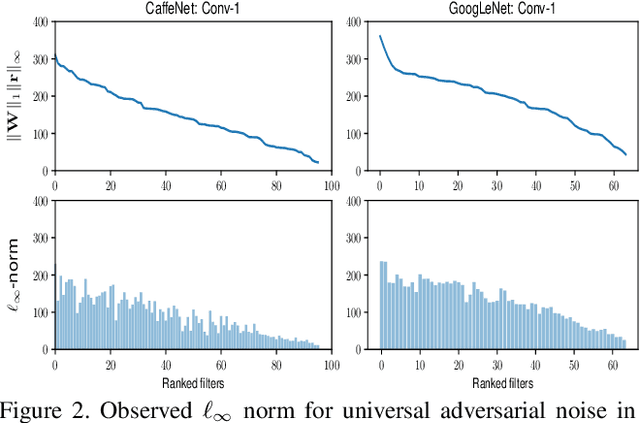

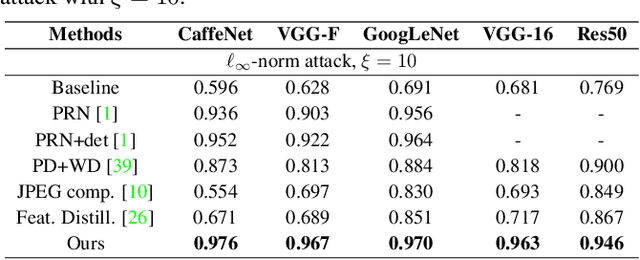

Deep neural network (DNN) predictions have been shown to be vulnerable to carefully crafted adversarial perturbations. Specifically, so-called universal adversarial perturbations are image-agnostic perturbations that can be added to any image and can fool a target network into making erroneous predictions. Departing from existing adversarial defense strategies, which work in the image domain, we present a novel defense which operates in the DNN feature domain and effectively defends against such universal adversarial attacks. Our approach identifies pre-trained convolutional features that are most vulnerable to adversarial noise and deploys defender units which transform (regenerate) these DNN filter activations into noise-resilient features, guarding against unseen adversarial perturbations. The proposed defender units are trained using a target loss on synthetic adversarial perturbations, which we generate with a novel efficient synthesis method. We validate the proposed method for different DNN architectures, and demonstrate that it outperforms existing defense strategies across network architectures by more than 10% in restored accuracy. Moreover, we demonstrate that the approach also improves resilience of DNNs to other unseen adversarial attacks.
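The pipeline described in the abstract can be pictured with a minimal NumPy sketch. The abstract does not give implementation details, so everything here is an assumption made for illustration: ranking filters by the L1 norm of their weights is only a stand-in for the vulnerability criterion, the `defender` callable is a hypothetical placeholder for a trained defender unit, and the function names `rank_filters_by_l1`, `regenerate_features`, and `apply_universal_perturbation` are invented for this example.

```python
import numpy as np


def rank_filters_by_l1(weights):
    """Rank conv filters by the L1 norm of their weights, largest first.

    weights: array of shape (out_channels, in_channels, kh, kw).
    Assumption: the L1 norm is used here only as a proxy for how
    strongly a filter can amplify input noise.
    """
    l1 = np.abs(weights).reshape(weights.shape[0], -1).sum(axis=1)
    return np.argsort(-l1, kind="stable")


def regenerate_features(features, vulnerable_idx, defender):
    """Apply a defender transform to the selected channels only.

    features: array of shape (channels, h, w).
    defender: hypothetical callable standing in for a trained unit.
    All other channels are passed through unchanged.
    """
    out = features.copy()
    out[vulnerable_idx] = defender(features[vulnerable_idx])
    return out


def apply_universal_perturbation(images, delta, eps):
    """Add one shared, image-agnostic perturbation to every image.

    delta is clipped to an L-infinity ball of radius eps, and the
    result is clipped back to the valid pixel range [0, 1].
    """
    delta = np.clip(delta, -eps, eps)
    return np.clip(images + delta, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    weights = rng.normal(size=(8, 3, 3, 3))
    features = rng.normal(size=(8, 4, 4))

    # Treat the top half of the ranking as "vulnerable" (arbitrary choice).
    order = rank_filters_by_l1(weights)
    vulnerable = order[: len(order) // 2]

    # Placeholder defender: simple clipping, not a learned transform.
    restored = regenerate_features(features, vulnerable, lambda x: np.clip(x, -1.0, 1.0))
    print(restored.shape)
```

In this sketch only the selected channels are modified, which mirrors the idea of regenerating a subset of filter activations while leaving the rest of the pre-trained network untouched. A real defender unit would be trained, as the abstract states, on synthetic adversarial perturbations.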
