Tarmo Koppel

Evaluating Chatbots to Promote Users' Trust -- Practices and Open Problems

Sep 14, 2023

Abstract: Chatbots, the common moniker for collaborative assistants, are Artificial Intelligence (AI) software that enables people to naturally interact with them to get tasks done. Although chatbots have been studied since the dawn of AI, they have particularly caught the imagination of the public and businesses since the launch of easy-to-use and general-purpose Large Language Model-based chatbots like ChatGPT. As businesses look towards chatbots as a potential technology to engage users, who may be end customers, suppliers, or even their own employees, proper testing of chatbots is important to address and mitigate issues of trust related to service or product performance, user satisfaction and long-term unintended consequences for society. This paper reviews current practices for chatbot testing, identifies gaps as open problems in pursuit of user trust, and outlines a path forward.

ULTRA: A Data-driven Approach for Recommending Team Formation in Response to Proposal Calls

Jan 13, 2022

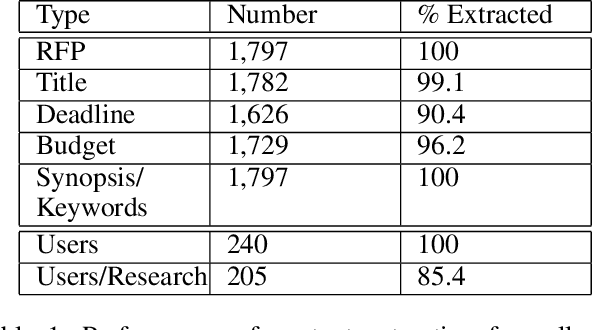

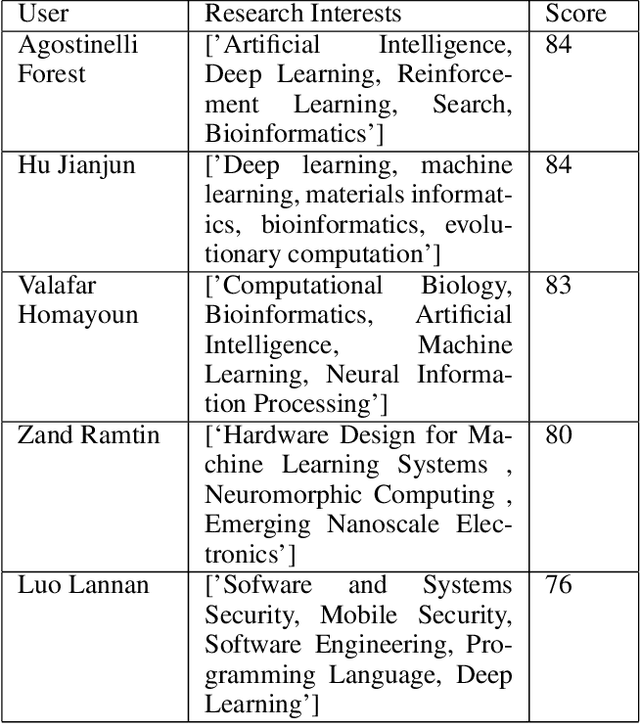

Abstract: We introduce an emerging AI-based approach and prototype system for assisting team formation when researchers respond to calls for proposals from funding agencies. This is an instance of the general problem of building teams when demand opportunities arrive periodically and potential members may vary over time. The novelties of our approach are that we: (a) extract the technical skills of researchers and the skills needed by calls from multiple data sources and normalize them using Natural Language Processing (NLP) techniques, (b) build a prototype solution based on matching and teaming under constraints, (c) describe initial feedback about the system from researchers at a university where it is intended for deployment, and (d) create and publish a dataset that others can use.
