Tarek M. Taha

COVID_MTNet: COVID-19 Detection with Multi-Task Deep Learning Approaches

Apr 18, 2020

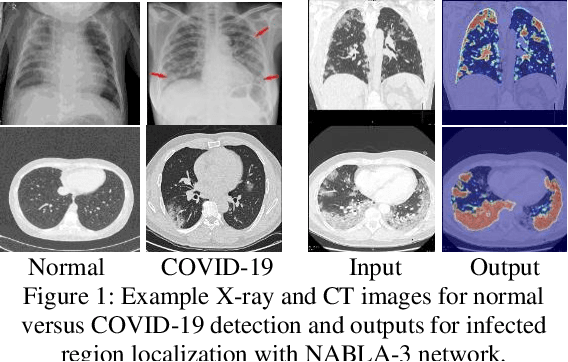

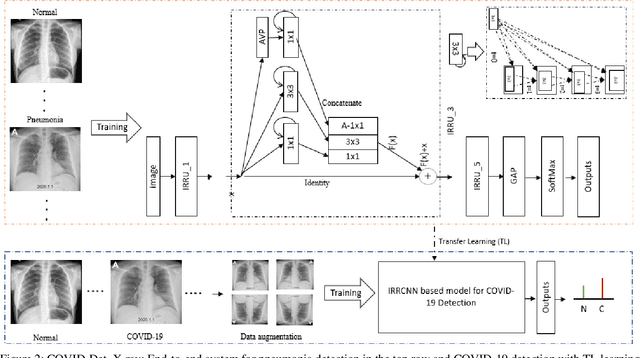

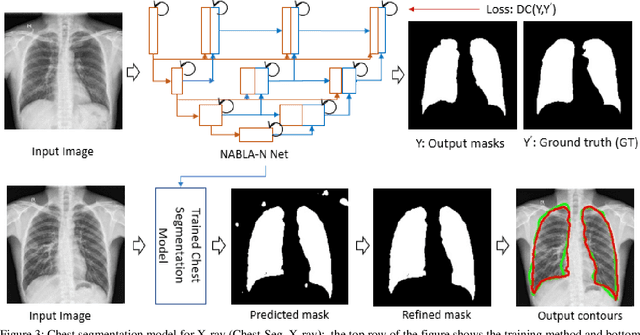

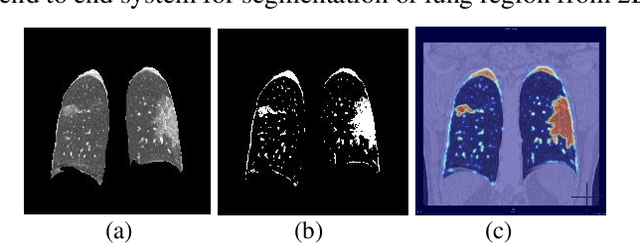

Abstract:COVID-19 is currently one of the most life-threatening problems around the world. Fast and accurate detection of COVID-19 infection is essential for identifying patients, making better decisions, and ensuring treatment, which will help save lives. In this paper, we propose a fast and efficient way to identify COVID-19 patients with multi-task deep learning (DL) methods. Both X-ray and CT scan images are considered to evaluate the proposed technique. We employ our Inception Residual Recurrent Convolutional Neural Network with a Transfer Learning (TL) approach for COVID-19 detection and our NABLA-N network model for segmenting the regions infected by COVID-19. The detection model shows around 84.67% testing accuracy on X-ray images and 98.78% accuracy on CT images. A novel quantitative analysis strategy is also proposed in this paper to determine the percentage of infected regions in X-ray and CT images. The qualitative and quantitative results are promising for COVID-19 detection and infected region localization.
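The abstract does not spell out how the percentage of infected regions is computed. As a rough illustration only, the sketch below assumes two binary masks of the same shape (a hypothetical `region_mask` for the organ area and a hypothetical `infection_mask` from a segmentation model) and reports the share of region pixels flagged as infected. The function name and the pixel-ratio definition are assumptions, not the paper's method.

```python
def infected_percentage(infection_mask, region_mask):
    """Percentage of region pixels that are also marked as infected.

    Both masks are lists of rows holding 0/1 values and must share a shape.
    This pixel-ratio definition is an assumption made for illustration.
    """
    region_pixels = 0
    infected_pixels = 0
    for infection_row, region_row in zip(infection_mask, region_mask):
        for infected, in_region in zip(infection_row, region_row):
            if in_region:
                region_pixels += 1
                if infected:
                    infected_pixels += 1
    if region_pixels == 0:
        return 0.0  # no region to measure against
    return 100.0 * infected_pixels / region_pixels


# Toy 4x4 example: 12 region pixels, 3 of them infected.
lung = [
    [0, 1, 1, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [0, 1, 1, 0],
]
infection = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [1, 0, 0, 0],  # this pixel lies outside the region and is ignored
]
print(infected_percentage(infection, lung))
```

Restricting the count to pixels inside the region mask keeps stray predictions outside the organ from inflating the figure.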

High Speed Cognitive Domain Ontologies for Asset Allocation Using Loihi Spiking Neurons

Jun 28, 2019

Abstract:Cognitive agents are typically utilized in autonomous systems for automated decision making. These systems interact in real time with their environment and are generally heavily power constrained. Thus, there is a strong need for a real-time agent running on a low-power platform. The agent examined is the Cognitively Enhanced Complex Event Processing (CECEP) architecture. This is an autonomous decision support tool that reasons like humans and enables enhanced agent-based decision-making. It has applications in a large variety of domains including autonomous systems, operations research, intelligence analysis, and data mining. One of the key components of CECEP is the mining of knowledge from a repository described as a Cognitive Domain Ontology (CDO). One problem that is often tasked to CDOs is asset allocation. Given the number of possible solutions in this allocation problem, determining the optimal solution via CDO can be very time consuming. In this work we show that a grid of isolated spiking neurons is capable of generating solutions to this problem very quickly, although some degree of approximation is required to achieve the speedup. Even so, the approximate spiking approach presented in this work was able to complete all allocation simulations with greater than 99.9% accuracy. To show the feasibility of a low-power implementation, this algorithm was executed using the Intel Loihi manycore neuromorphic processor. Given the vast increase in speed (greater than 1000 times in larger allocation problems), as well as the reduction in computational requirements, the presented algorithm is ideal for moving asset allocation to low-power, portable, embedded hardware.

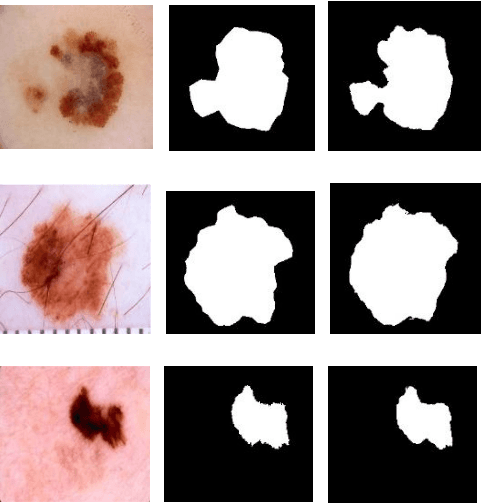

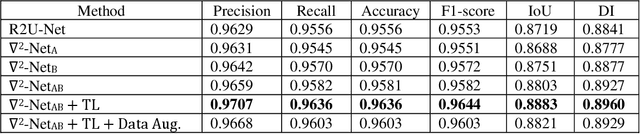

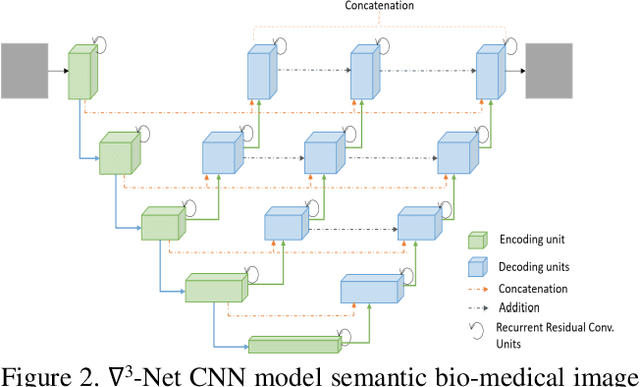

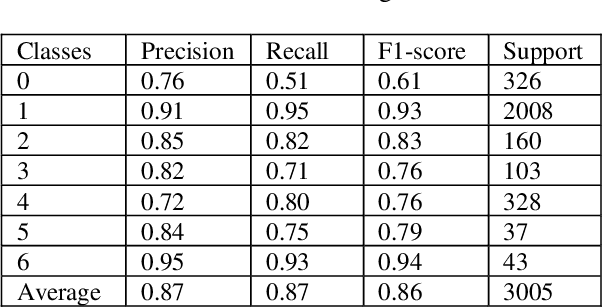

Skin Cancer Segmentation and Classification with NABLA-N and Inception Recurrent Residual Convolutional Networks

Apr 25, 2019

Abstract:In the last few years, Deep Learning (DL) has been showing superior performance in different modalities of biomedical image analysis. Several DL architectures have been proposed for classification, segmentation, and detection tasks in medical imaging and computational pathology. In this paper, we propose a new DL architecture, the NABLA-N network, with better feature fusion techniques in decoding units for dermoscopic image segmentation tasks. The NABLA-N network has several advances for segmentation tasks. First, this model ensures better feature representation for semantic segmentation with a combination of low to high-level feature maps. Second, this network shows better quantitative and qualitative results with the same or fewer network parameters compared to other methods. In addition, the Inception Recurrent Residual Convolutional Neural Network (IRRCNN) model is used for skin cancer classification. The proposed NABLA-N network and IRRCNN models are evaluated for skin cancer segmentation and classification on the benchmark datasets from the International Skin Imaging Collaboration 2018 (ISIC-2018). The experimental results show superior performance on segmentation tasks compared to the Recurrent Residual U-Net (R2U-Net). The classification model shows around 87% testing accuracy for dermoscopic skin cancer classification on the ISIC-2018 dataset.

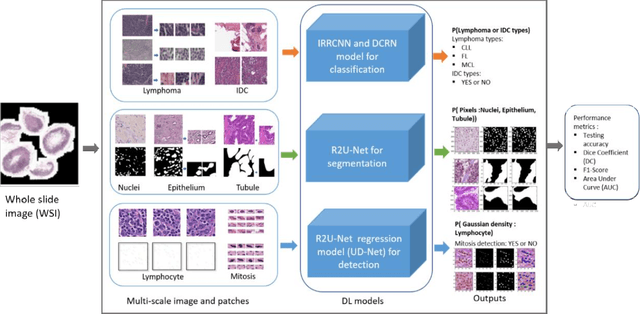

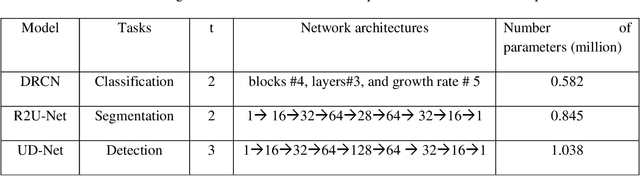

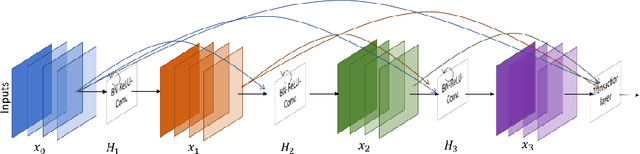

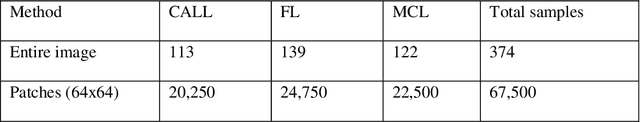

Advanced Deep Convolutional Neural Network Approaches for Digital Pathology Image Analysis: a comprehensive evaluation with different use cases

Apr 19, 2019

Abstract:Deep Learning (DL) approaches have been providing state-of-the-art performance in different modalities in the field of medical imaging, including Digital Pathology Image Analysis (DPIA). Out of many different DL approaches, the Deep Convolutional Neural Network (DCNN) technique provides superior performance for classification, segmentation, and detection tasks. Most tasks in DPIA can be solved with classification, segmentation, and detection approaches. In addition, pre- and post-processing methods are sometimes applied to solve specific types of problems. Recently, different DCNN models including Inception residual recurrent CNN (IRRCNN), Densely Connected Recurrent Convolution Network (DCRCN), Recurrent Residual U-Net (R2U-Net), and the R2U-Net based regression model (UD-Net) have been proposed and provide state-of-the-art performance for different computer vision and medical image analysis tasks. However, these advanced DCNN models have not been explored for solving different problems related to DPIA. In this study, we have applied these DCNN techniques to different DPIA problems and evaluated them on different publicly available benchmark datasets for seven different tasks in digital pathology: lymphoma classification, Invasive Ductal Carcinoma (IDC) detection, nuclei segmentation, epithelium segmentation, tubule segmentation, lymphocyte detection, and mitosis detection. The experimental results are evaluated with different performance metrics such as sensitivity, specificity, accuracy, F1-score, Receiver Operating Characteristics (ROC) curve, Dice coefficient (DC), and Mean Squared Error (MSE). The results demonstrate superior performance for classification, segmentation, and detection tasks compared to existing machine learning and DCNN based approaches.
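For readers less familiar with the count-based metrics named above, the following minimal sketch computes sensitivity, specificity, accuracy, and F1-score from binary confusion-matrix counts using their standard definitions. The function name `binary_metrics` and the example counts are hypothetical and are not taken from the paper.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts.

    tp/fp/tn/fn are true/false positive/negative counts. Ratios with an
    empty denominator are reported as 0.0 (a convention chosen here).
    """
    total = tp + fp + tn + fn
    return {
        # Fraction of actual positives that were detected (also called recall).
        "sensitivity": tp / (tp + fn) if (tp + fn) else 0.0,
        # Fraction of actual negatives that were correctly rejected.
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,
        # Fraction of all samples classified correctly.
        "accuracy": (tp + tn) / total if total else 0.0,
        # Harmonic mean of precision and recall, written in count form.
        "f1": 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0,
    }


# Made-up counts for illustration only.
example = binary_metrics(tp=8, fp=2, tn=85, fn=5)
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

On imbalanced data such as this toy example, accuracy stays high even when sensitivity is modest, which is one reason evaluations of this kind report several metrics side by side.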

Breast Cancer Classification from Histopathological Images with Inception Recurrent Residual Convolutional Neural Network

Nov 10, 2018

Abstract:The Deep Convolutional Neural Network (DCNN) is one of the most powerful and successful deep learning approaches. DCNNs have already provided superior performance in different modalities of medical imaging including breast cancer classification, segmentation, and detection. Breast cancer is one of the most common and dangerous cancers impacting women worldwide. In this paper, we have proposed a method for breast cancer classification with the Inception Recurrent Residual Convolutional Neural Network (IRRCNN) model. The IRRCNN is a powerful DCNN model that combines the strength of the Inception Network (Inception-v4), the Residual Network (ResNet), and the Recurrent Convolutional Neural Network (RCNN). The IRRCNN shows superior performance against equivalent Inception Networks, Residual Networks, and RCNNs for object recognition tasks. In this paper, the IRRCNN approach is applied for breast cancer classification on two publicly available datasets including BreakHis and Breast Cancer Classification Challenge 2015. The experimental results are compared against the existing machine learning and deep learning-based approaches with respect to image-based, patch-based, image-level, and patient-level classification. The IRRCNN model provides superior classification performance in terms of sensitivity, Area Under the Curve (AUC), the ROC curve, and global accuracy compared to existing approaches for both datasets.

Microscopic Nuclei Classification, Segmentation and Detection with improved Deep Convolutional Neural Network (DCNN) Approaches

Nov 08, 2018

Abstract:Due to cellular heterogeneity, cell nuclei classification, segmentation, and detection from pathological images are challenging tasks. In the last few years, Deep Convolutional Neural Network (DCNN) approaches have shown state-of-the-art (SOTA) performance on histopathological imaging in different studies. In this work, we have proposed different advanced DCNN models and evaluated them for nuclei classification, segmentation, and detection. First, the Densely Connected Recurrent Convolutional Network (DCRN) model is used for nuclei classification. Second, Recurrent Residual U-Net (R2U-Net) is applied for nuclei segmentation. Third, the R2U-Net regression model, named UD-Net, is used for nuclei detection from pathological images. The experiments are conducted with different datasets including the Routine Colon Cancer (RCC) classification and detection dataset and the Nuclei Segmentation Challenge 2018 dataset. The experimental results show that the proposed DCNN models provide superior performance compared to the existing approaches for nuclei classification, segmentation, and detection tasks. The results are evaluated with different performance metrics including precision, recall, Dice Coefficient (DC), Mean Squared Error (MSE), F1-score, and overall accuracy. We have achieved around 3.4% and 4.5% better F1-score for nuclei classification and detection tasks compared to a recently published DCNN based method. In addition, R2U-Net shows around 92.15% testing accuracy in terms of DC. These improved methods will support better quantitative analysis of nuclei in Whole Slide Images (WSI) in pathology practice, which will ultimately help in better understanding different types of cancer in the clinical workflow.
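The Dice Coefficient (DC) used to score segmentation here measures the overlap between a predicted mask and a reference mask: twice the intersection divided by the sum of the two mask sizes. The sketch below applies that standard definition to small binary masks. The function name and the choice to return 1.0 when both masks are empty are assumptions made for this example.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks.

    Masks are lists of rows with 0/1 values and must share a shape.
    Two empty masks are treated as a perfect match (an assumed convention).
    """
    intersection = 0
    pred_total = 0
    true_total = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p:
                pred_total += 1
            if t:
                true_total += 1
            if p and t:
                intersection += 1
    if pred_total + true_total == 0:
        return 1.0
    return 2.0 * intersection / (pred_total + true_total)


predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
# 2 overlapping pixels, 3 foreground pixels in each mask.
print(round(dice_coefficient(predicted, reference), 4))
```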

The History Began from AlexNet: A Comprehensive Survey on Deep Learning Approaches

Sep 12, 2018

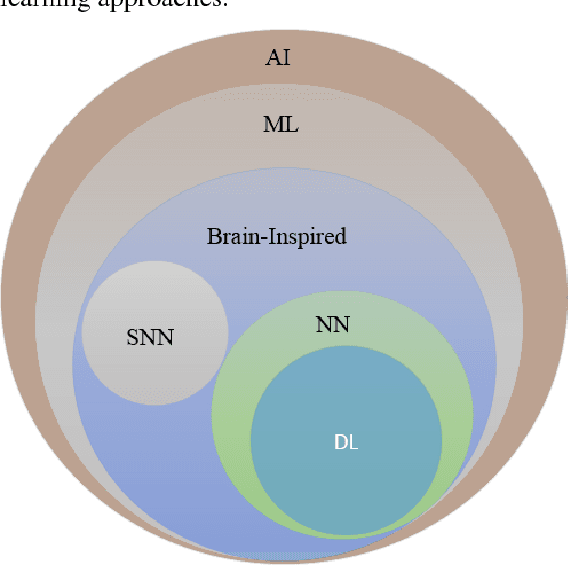

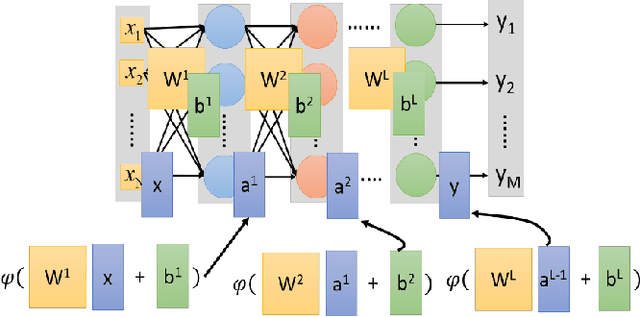

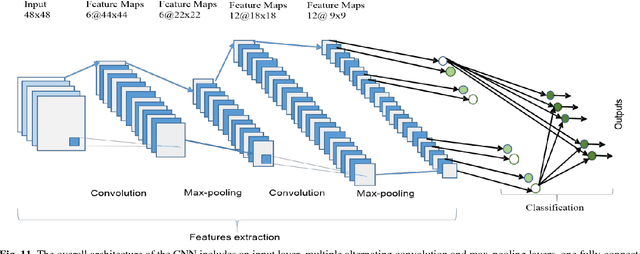

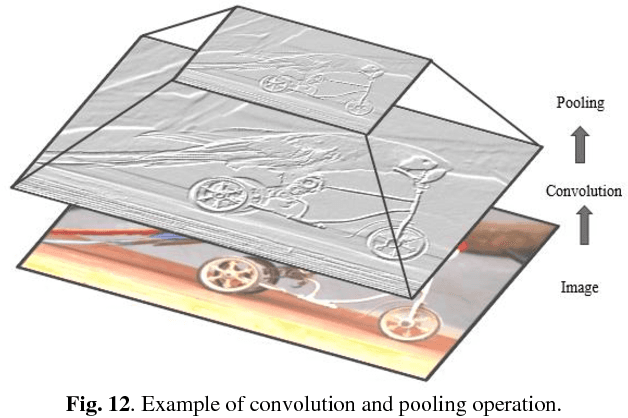

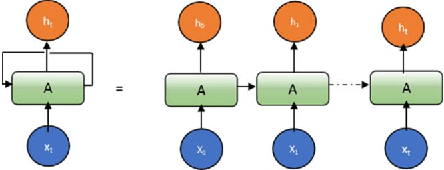

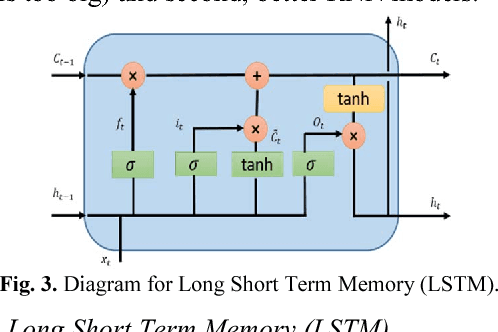

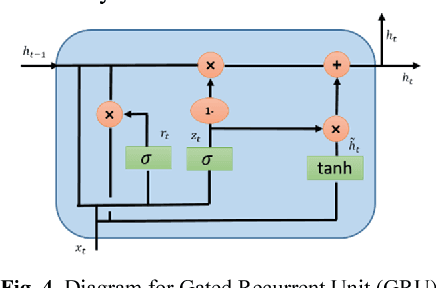

Abstract:Deep learning has demonstrated tremendous success in a variety of application domains in the past few years. This new field of machine learning has been growing rapidly and has been applied in most application domains, along with some new modalities of application, which helps to open new opportunities. Different methods have been proposed across different categories of learning approaches, including supervised, semi-supervised, and unsupervised learning. The experimental results show state-of-the-art performance of deep learning over traditional machine learning approaches in the fields of Image Processing, Computer Vision, Speech Recognition, Machine Translation, Art, Medical Imaging, Medical Information Processing, Robotics and Control, Bioinformatics, Natural Language Processing (NLP), Cyber Security, and many more. This report presents a brief survey of the development of DL approaches, including Deep Neural Network (DNN), Convolutional Neural Network (CNN), Recurrent Neural Network (RNN) including Long Short Term Memory (LSTM) and Gated Recurrent Units (GRU), Auto-Encoder (AE), Deep Belief Network (DBN), Generative Adversarial Network (GAN), and Deep Reinforcement Learning (DRL). In addition, we have included recent developments of proposed advanced variant DL techniques based on the mentioned DL approaches. Furthermore, DL approaches that have been explored and evaluated in different application domains are also included in this survey. We have also included recently developed frameworks, SDKs, and benchmark datasets that are used for implementing and evaluating deep learning approaches. Some surveys have been published on Deep Learning in Neural Networks [1, 38], along with a survey on RL [234]. However, those papers have not discussed the individual advanced techniques for training large scale deep learning models and the recently developed method of generative models [1].

Recurrent Residual Convolutional Neural Network based on U-Net (R2U-Net) for Medical Image Segmentation

May 29, 2018

Abstract:Deep learning (DL) based semantic segmentation methods have been providing state-of-the-art performance in the last few years. More specifically, these techniques have been successfully applied to medical image classification, segmentation, and detection tasks. One deep learning technique, U-Net, has become one of the most popular for these applications. In this paper, we propose a Recurrent Convolutional Neural Network (RCNN) based on U-Net as well as a Recurrent Residual Convolutional Neural Network (RRCNN) based on U-Net models, which are named RU-Net and R2U-Net respectively. The proposed models utilize the power of U-Net, Residual Network, as well as RCNN. There are several advantages of these proposed architectures for segmentation tasks. First, a residual unit helps when training deep architectures. Second, feature accumulation with recurrent residual convolutional layers ensures better feature representation for segmentation tasks. Third, it allows us to design a better U-Net architecture that achieves better performance for medical image segmentation with the same number of network parameters. The proposed models are tested on three benchmark datasets covering blood vessel segmentation in retina images, skin cancer segmentation, and lung lesion segmentation. The experimental results show superior performance on segmentation tasks compared to equivalent models including U-Net and residual U-Net (ResU-Net).
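To make the idea of feature accumulation in a recurrent residual unit more concrete, here is a deliberately simplified 1D sketch. It assumes a recurrent convolution in which the same feed-forward response is re-combined with a convolution of the previous state at every time step, followed by a skip connection that adds the input back. The kernels, the step count, and all function names are hypothetical; the actual R2U-Net operates on 2D feature maps with learned weights, and its exact layer arrangement is not given in the abstract.

```python
def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding; expects an odd-length kernel."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def relu(values):
    return [max(0.0, v) for v in values]


def recurrent_conv(x, w_forward, w_recurrent, steps):
    """Accumulate features over `steps` recurrent updates (assumed form)."""
    feed_forward = conv1d_same(x, w_forward)
    state = relu(feed_forward)  # state at t = 0 uses the input only
    for _ in range(steps):
        recurrent = conv1d_same(state, w_recurrent)
        state = relu([f + r for f, r in zip(feed_forward, recurrent)])
    return state


def recurrent_residual_unit(x, w_forward, w_recurrent, steps=2):
    """Residual skip: add the input back onto the recurrent features."""
    features = recurrent_conv(x, w_forward, w_recurrent, steps)
    return [xi + fi for xi, fi in zip(x, features)]


x = [1.0, -2.0, 3.0]
identity = [0.0, 1.0, 0.0]  # toy kernel that passes the signal through
print(recurrent_residual_unit(x, identity, identity, steps=1))
```

The skip connection gives gradients a direct path through the unit, which is the usual argument for why residual units help when training deep architectures.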

Effective Quantization Approaches for Recurrent Neural Networks

Feb 07, 2018

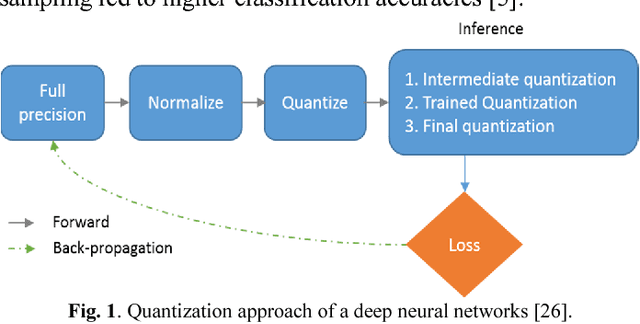

Abstract:Deep learning, and in particular Recurrent Neural Networks (RNN), have shown superior accuracy in a large variety of tasks including machine translation, language understanding, and movie frame generation. However, these deep learning approaches are very expensive in terms of computation. In most cases, Graphics Processing Units (GPUs) are used for large scale implementations. Meanwhile, energy efficient RNN approaches are proposed for deploying solutions on special purpose hardware including Field Programmable Gate Arrays (FPGAs) and mobile platforms. In this paper, we propose an effective quantization approach for Recurrent Neural Network (RNN) techniques including Long Short Term Memory (LSTM), Gated Recurrent Units (GRU), and Convolutional Long Short Term Memory (ConvLSTM). We have implemented different quantization methods including Binary Connect {-1, 1}, Ternary Connect {-1, 0, 1}, and Quaternary Connect {-1, -0.5, 0.5, 1}. These proposed approaches are evaluated on different datasets for sentiment analysis on IMDB and video frame prediction on the moving MNIST dataset. The experimental results are compared against the full precision versions of the LSTM, GRU, and ConvLSTM. They show promising results for both sentiment analysis and video frame prediction.
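The three weight sets listed above can be illustrated with a small sketch that maps each full-precision weight to the nearest allowed level. The abstract does not describe the actual quantization rule (thresholds, stochastic rounding, or scaling), so the deterministic nearest-level rule and the names `LEVELS` and `quantize` are assumptions used only for illustration.

```python
# Allowed weight values for each scheme, as listed in the abstract.
LEVELS = {
    "binary": (-1.0, 1.0),
    "ternary": (-1.0, 0.0, 1.0),
    "quaternary": (-1.0, -0.5, 0.5, 1.0),
}


def quantize(weights, scheme):
    """Map each weight to the nearest level of the chosen scheme.

    Nearest-level rounding is an assumed rule for this sketch. Exact ties
    resolve to the lower level because min() keeps the first minimum.
    """
    levels = LEVELS[scheme]
    return [min(levels, key=lambda level: abs(level - w)) for w in weights]


full_precision = [-0.9, -0.3, 0.1, 0.6, 1.4]
for scheme in LEVELS:
    print(scheme, quantize(full_precision, scheme))
```

In training setups of this kind, a full-precision copy of the weights is typically kept for the gradient update while the quantized copy is used in the forward pass; whether the paper follows that scheme is not stated in the abstract.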

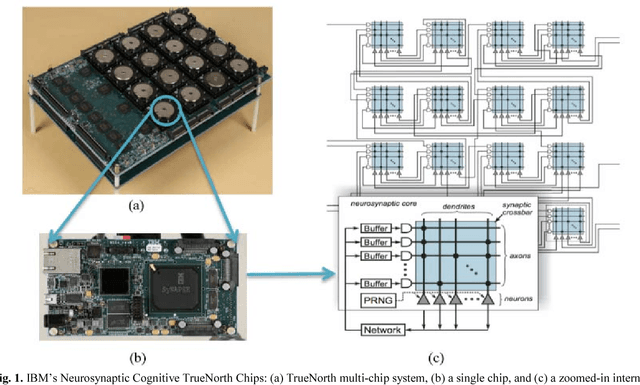

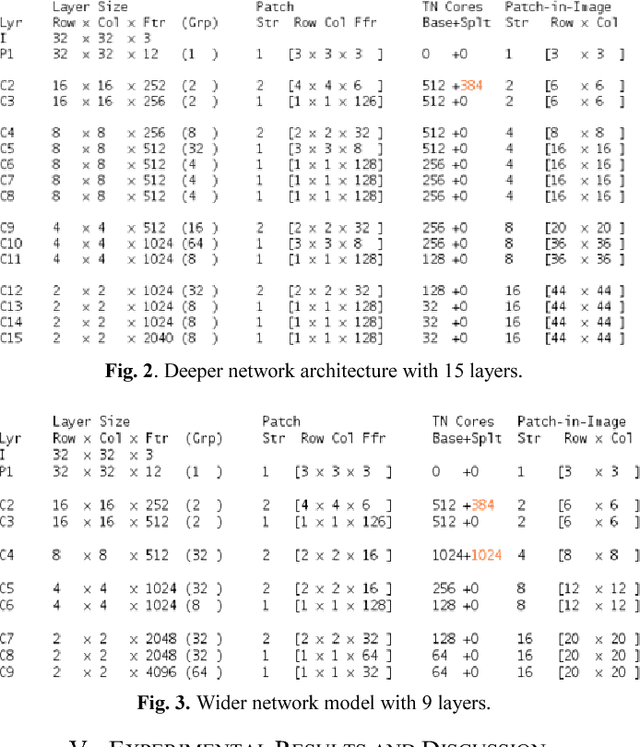

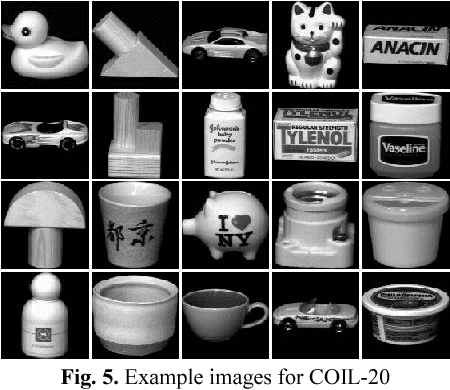

Deep Versus Wide Convolutional Neural Networks for Object Recognition on Neuromorphic System

Feb 07, 2018

Abstract:In the last decade, special purpose computing systems, such as Neuromorphic computing, have become very popular in the field of computer vision and machine learning for classification tasks. In 2015, IBM released the TrueNorth Neuromorphic system, kick-starting a new era of Neuromorphic computing. Meanwhile, Deep Learning approaches such as Deep Convolutional Neural Networks (DCNN) show almost human-level accuracies for detection and classification tasks. In 2016, IBM released a deep learning framework for DCNNs called Energy Efficient Deep Neuromorphic Networks (Eedn). Eedn shows promise for delivering high accuracies across a number of different benchmarks while consuming very low power, using IBM's TrueNorth chip. However, much remains unexplored about using the Eedn framework for classification tasks on a Neuromorphic system. In this paper, we have empirically evaluated the performance of different DCNN architectures implemented within the Eedn framework. The goal of this work was to discover the most efficient way to implement DCNN models for object classification tasks using the TrueNorth system. We performed our experiments using benchmark data sets such as MNIST, COIL 20, and COIL 100. The experimental results show very promising classification accuracies with very low power consumption on IBM's NS1e Neurosynaptic system. The results show that for datasets with large numbers of classes, wider networks perform better than deep networks of nearly the same core complexity on IBM's TrueNorth system.
