Deep Versus Wide Convolutional Neural Networks for Object Recognition on Neuromorphic System

Feb 07, 2018

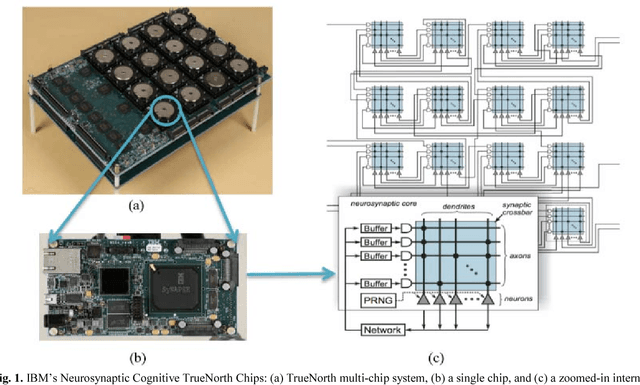

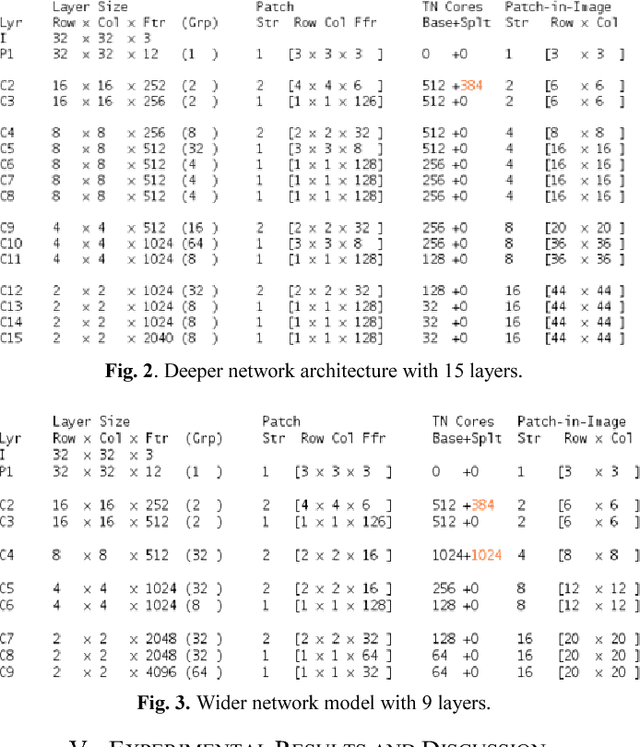

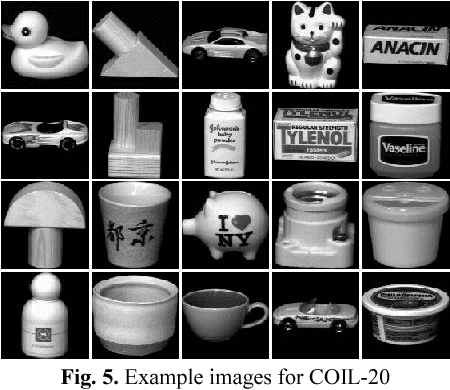

In the last decade, special-purpose computing systems, such as neuromorphic computing systems, have become very popular in the fields of computer vision and machine learning for classification tasks. In 2015, IBM released the TrueNorth neuromorphic system, kick-starting a new era of neuromorphic computing. Meanwhile, deep learning approaches such as Deep Convolutional Neural Networks (DCNNs) achieve near human-level accuracy on detection and classification tasks. In 2016, IBM released a deep learning framework for DCNNs called Energy Efficient Deep Neuromorphic Networks (Eedn). Eedn shows promise for delivering high accuracy across a number of different benchmarks while consuming very little power on IBM's TrueNorth chip. However, many aspects of using the Eedn framework for classification tasks on a neuromorphic system remain unexplored. In this paper, we empirically evaluate the performance of different DCNN architectures implemented within the Eedn framework. The goal of this work was to discover the most efficient way to implement DCNN models for object classification tasks on the TrueNorth system. We performed our experiments on benchmark datasets such as MNIST, COIL 20, and COIL 100. The experimental results show very promising classification accuracy with very low power consumption on IBM's NS1e Neurosynaptic system. They also show that, for datasets with large numbers of classes, wider networks perform better than deep networks of nearly the same core complexity on IBM's TrueNorth system.
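The deep-versus-wide comparison holds network complexity roughly fixed while trading depth for width. On TrueNorth that budget is measured in neurosynaptic cores, which this sketch does not model. As a rough, hypothetical proxy, it counts convolution parameters for two plain stacks with 3x3 kernels and a single-channel input. The layer counts and channel widths are illustrative assumptions, not the architectures evaluated in the paper.

```python
def conv_params(in_ch: int, out_ch: int, k: int = 3) -> int:
    """Weights plus biases of one k x k convolution layer."""
    return in_ch * out_ch * k * k + out_ch


def stack_params(channels: list[int], k: int = 3) -> int:
    """Total parameters of a plain conv stack; channels[0] is the input depth."""
    return sum(conv_params(a, b, k) for a, b in zip(channels, channels[1:]))


# Hypothetical configurations (not from the paper): grayscale input, 3x3 kernels.
deep = [1] + [32] * 8  # 8 narrow layers of 32 channels
wide = [1] + [48] * 4  # 4 wider layers of 48 channels

if __name__ == "__main__":
    for name, cfg in (("deep", deep), ("wide", wide)):
        print(f"{name}: {len(cfg) - 1} layers, width {max(cfg)}, "
              f"{stack_params(cfg)} parameters")
```

Both stacks land within a few percent of each other (65,056 versus 62,832 parameters), so any accuracy difference between them reflects how the budget is spent rather than its size. This is the same kind of controlled comparison the abstract describes, with parameters standing in for TrueNorth cores.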