Md Zahangir Alom

Member, IEEE

Learned Image resizing with efficient training (LRET) facilitates improved performance of large-scale digital histopathology image classification models

Jan 19, 2024

Abstract:Histologic examination plays a crucial role in oncology research and diagnostics. The adoption of digital scanning of whole slide images (WSI) has created an opportunity to leverage deep learning-based image classification methods to enhance diagnosis and risk stratification. Technical limitations of current approaches to training deep convolutional neural networks (DCNN) result in suboptimal model performance and make training and deployment of comprehensive classification models unobtainable. In this study, we introduce a novel approach that addresses the main limitations of traditional histopathology classification model training. Our method, termed Learned Resizing with Efficient Training (LRET), couples efficient training techniques with image resizing to facilitate seamless integration of larger histology image patches into state-of-the-art classification models while preserving important structural information. We used the LRET method coupled with two distinct resizing techniques to train three diverse histology image datasets using multiple diverse DCNN architectures. Our findings demonstrate a significant enhancement in classification performance and training efficiency. Across the spectrum of experiments, LRET consistently outperforms existing methods, yielding a substantial improvement of 15-28% in accuracy for a large-scale, multiclass tumor classification task consisting of 74 distinct brain tumor types. LRET not only elevates classification accuracy but also substantially reduces training times, unlocking the potential for faster model development and iteration. The implications of this work extend to broader applications within medical imaging and beyond, where efficient integration of high-resolution images into deep learning pipelines is paramount for driving advancements in research and clinical practice.

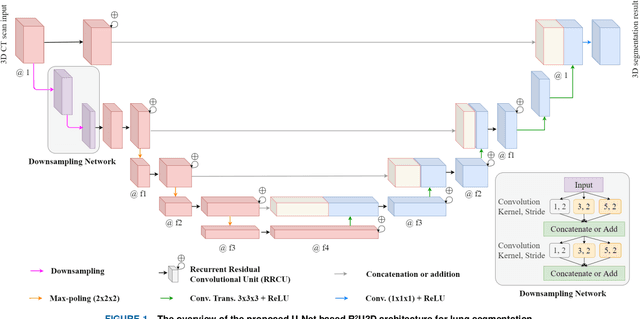

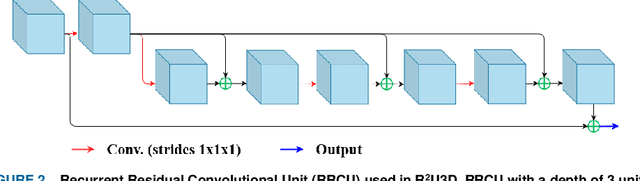

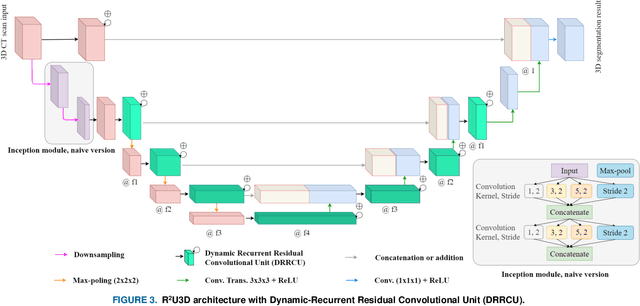

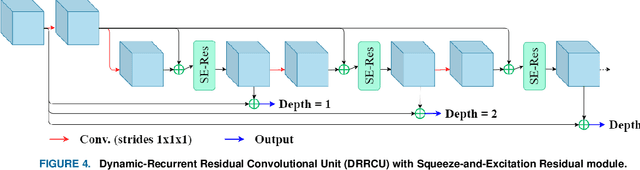

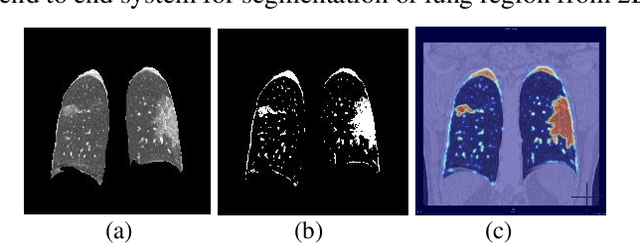

R2U3D: Recurrent Residual 3D U-Net for Lung Segmentation

May 05, 2021

Abstract:3D lung segmentation is essential since it processes the volumetric information of the lungs, removes the unnecessary areas of the scan, and segments the actual area of the lungs in a 3D volume. Recently, deep learning models such as U-Net have outperformed other network architectures for biomedical image segmentation. In this paper, we propose a novel model, namely, Recurrent Residual 3D U-Net (R2U3D), for the 3D lung segmentation task. In particular, the proposed model integrates 3D convolution into the Recurrent Residual Neural Network based on U-Net. It helps learn spatial dependencies in 3D and increases the propagation of 3D volumetric information. The proposed R2U3D network is trained on the publicly available LUNA16 dataset and achieves state-of-the-art performance on both the LUNA16 testing set and the VESSEL12 dataset. In addition, we show that training the R2U3D model with a smaller number of CT scans, i.e., 100 scans, without applying data augmentation achieves an outstanding result, with a Soft Dice Similarity Coefficient (Soft-DSC) of 0.9920.
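The Soft-DSC figure quoted above can be made concrete with a small sketch. The abstract does not give the exact formula used in the paper, so the code below assumes one commonly used form of the soft Dice score, with predicted probabilities in place of hard labels and a small smoothing term. The function name `soft_dice` and the `eps` parameter are illustrative choices, not names from the paper.

```python
def soft_dice(pred, target, eps=1e-6):
    """Soft Dice score between predicted probabilities and binary labels.

    Assumed form: (2 * sum(p * t) + eps) / (sum(p) + sum(t) + eps).
    Both inputs are flat sequences of the same length (flattened voxels).
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return (2.0 * intersection + eps) / (total + eps)


# Toy example: four voxels, two of which belong to the lung.
pred = [0.9, 0.8, 0.1, 0.0]
target = [1, 1, 0, 0]
print(round(soft_dice(pred, target), 4))
```

A score close to 1 means the predicted probabilities overlap almost completely with the ground-truth mask. The smoothing term keeps the score defined when both masks are empty.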

GanglionNet: Objectively Assess the Density and Distribution of Ganglion Cells With NABLA-N Network

Jul 05, 2020

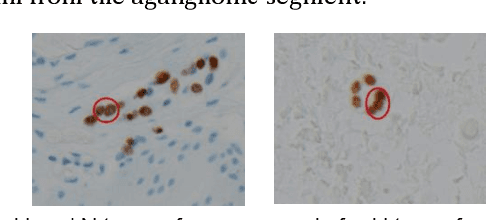

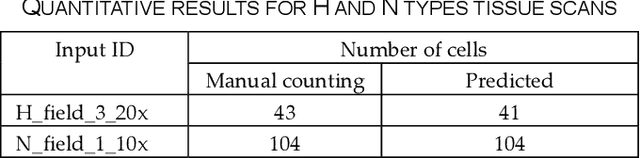

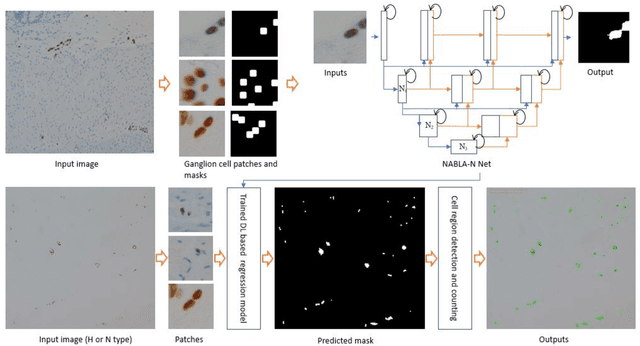

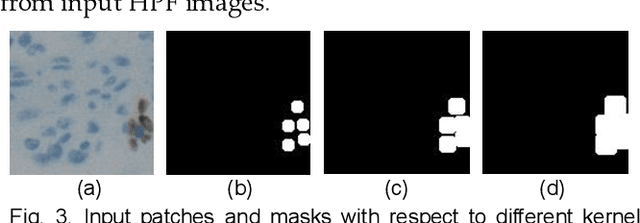

Abstract:Hirschsprung's disease (HD) is a birth defect which is diagnosed and managed by multiple medical specialties such as pediatric gastroenterology, surgery, radiology, and pathology. HD is characterized by absence of ganglion cells in the distal intestinal tract with a gradual normalization of ganglion cell numbers in adjacent upstream bowel, termed the transition zone (TZ). Definitive surgical management to remove the abnormal bowel requires accurate assessment of ganglion cell density in histological sections from the TZ, which is difficult, time-consuming and prone to operator error. We present an automated method to detect and count immunostained ganglion cells using a new NABLA-N network-based deep learning (DL) approach, called GanglionNet. Morphological image analysis methods are applied to refine the regions used for cell counting and to define ganglia regions (sets of ganglion cells) from the predicted masks. The proposed model is trained on samples annotated with single points by an expert pathologist. GanglionNet is tested on ten completely new High Power Field (HPF) images with dimensions of 2560x1920 pixels, and the outputs are compared against manual counts by the expert pathologist. The proposed method achieves 97.49% detection accuracy for ganglion cells relative to the expert pathologist's counts, which demonstrates the robustness of GanglionNet. The proposed DL-based ganglion cell detection and counting method will simplify and standardize TZ diagnosis for HD patients.
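Counting cells from a predicted mask, as described above, is often done by labeling connected regions. The abstract does not specify which morphological operations GanglionNet uses, so the following is only a minimal sketch under assumptions: a binary mask as a list of rows, 4-connectivity, and a hypothetical `min_size` filter standing in for the refinement step.

```python
from collections import deque


def count_components(mask, min_size=1):
    """Count 4-connected foreground regions with at least min_size pixels."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search over one connected region.
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Regions below min_size are treated as noise (assumed filter).
            if size >= min_size:
                count += 1
    return count


# Toy predicted mask with three regions of sizes 4, 1, and 2.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
]
print(count_components(mask))
print(count_components(mask, min_size=2))
```

Raising `min_size` discards small isolated detections, which is one simple way a refinement step could reduce false positives before counting.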

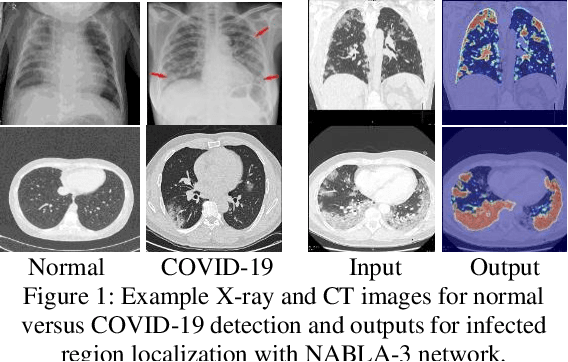

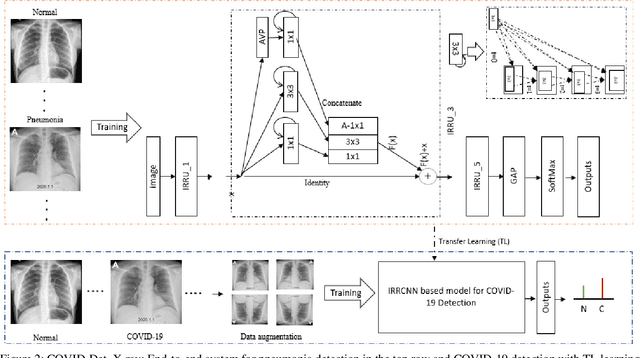

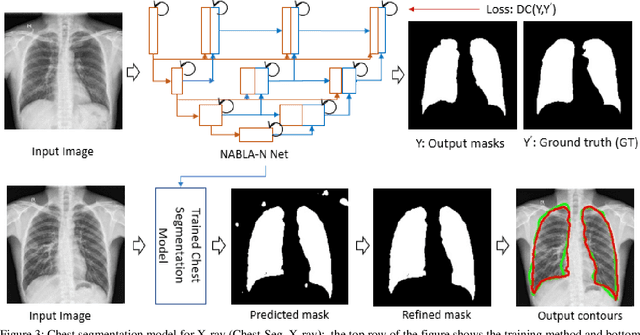

COVID_MTNet: COVID-19 Detection with Multi-Task Deep Learning Approaches

Apr 18, 2020

Abstract:COVID-19 is currently one of the most life-threatening problems around the world. Fast and accurate detection of COVID-19 infection is essential for identifying patients, making better decisions, and ensuring treatment, which will help save lives. In this paper, we propose a fast and efficient way to identify COVID-19 patients with multi-task deep learning (DL) methods. Both X-ray and CT scan images are considered to evaluate the proposed technique. We employ our Inception Residual Recurrent Convolutional Neural Network with Transfer Learning (TL) approach for COVID-19 detection and our NABLA-N network model for segmenting the regions infected by COVID-19. The detection model shows around 84.67% testing accuracy on X-ray images and 98.78% accuracy on CT images. A novel quantitative analysis strategy is also proposed in this paper to determine the percentage of infected regions in X-ray and CT images. The qualitative and quantitative results are promising for COVID-19 detection and infected region localization.
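The abstract mentions a quantitative strategy for the percentage of infected regions but does not define it. One plausible reading, shown here purely as an assumption, is the share of lung pixels that the segmentation model marks as infected. The function name `infected_percentage` and both mask arguments are hypothetical.

```python
def infected_percentage(lung_mask, infection_mask):
    """Percentage of lung pixels that are also marked as infected.

    Both masks are lists of rows with truthy values for foreground.
    Infection pixels outside the lung mask are ignored (assumed rule).
    """
    lung_pixels = 0
    infected_pixels = 0
    for lung_row, infection_row in zip(lung_mask, infection_mask):
        for lung, infected in zip(lung_row, infection_row):
            if lung:
                lung_pixels += 1
                if infected:
                    infected_pixels += 1
    if lung_pixels == 0:
        return 0.0
    return 100.0 * infected_pixels / lung_pixels


# Toy masks: four lung pixels, one of which is infected.
lung = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
infection = [
    [0, 1, 0, 0],
    [1, 0, 0, 0],
]
print(infected_percentage(lung, infection))
```

Restricting the count to the lung mask keeps spurious detections outside the lungs from inflating the reported percentage.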

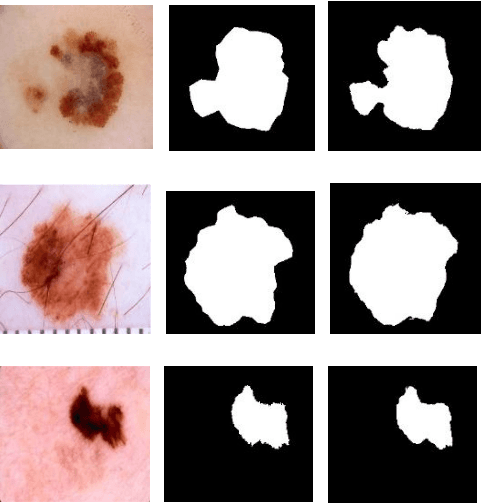

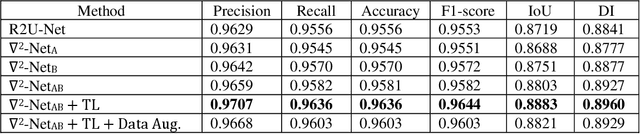

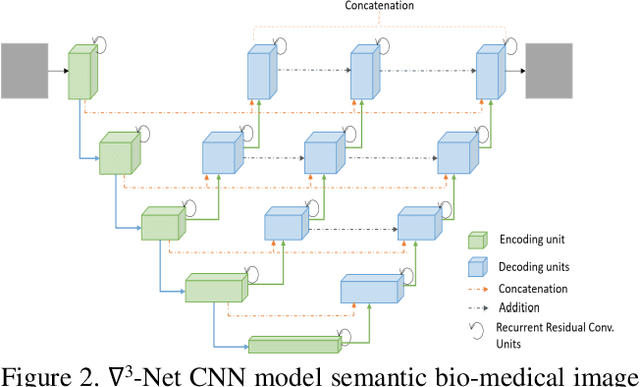

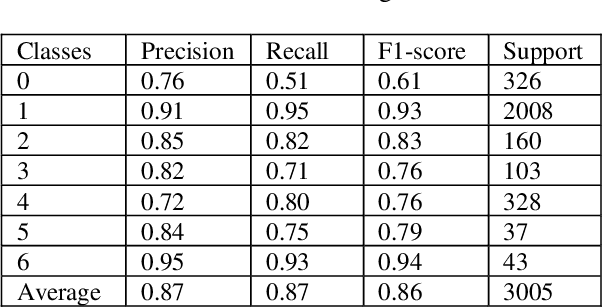

Skin Cancer Segmentation and Classification with NABLA-N and Inception Recurrent Residual Convolutional Networks

Apr 25, 2019

Abstract:In the last few years, Deep Learning (DL) has been showing superior performance in different modalities of biomedical image analysis. Several DL architectures have been proposed for classification, segmentation, and detection tasks in medical imaging and computational pathology. In this paper, we propose a new DL architecture, the NABLA-N network, with better feature fusion techniques in decoding units for dermoscopic image segmentation tasks. The NABLA-N network has several advantages for segmentation tasks. First, this model ensures better feature representation for semantic segmentation with a combination of low to high-level feature maps. Second, this network shows better quantitative and qualitative results with the same or fewer network parameters compared to other methods. In addition, the Inception Recurrent Residual Convolutional Neural Network (IRRCNN) model is used for skin cancer classification. The proposed NABLA-N network and IRRCNN models are evaluated for skin cancer segmentation and classification on the benchmark datasets from the International Skin Imaging Collaboration 2018 (ISIC-2018). The experimental results show superior performance on segmentation tasks compared to the Recurrent Residual U-Net (R2U-Net). The classification model shows around 87% testing accuracy for dermoscopic skin cancer classification on the ISIC-2018 dataset.

Advanced Deep Convolutional Neural Network Approaches for Digital Pathology Image Analysis: a comprehensive evaluation with different use cases

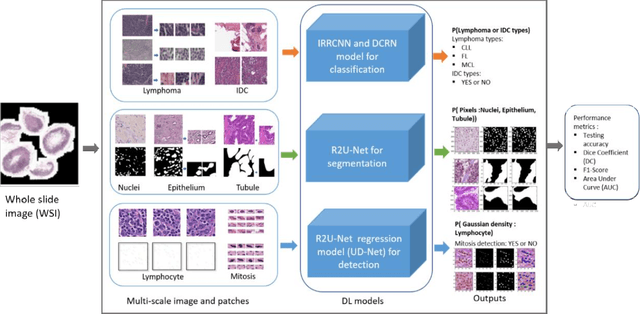

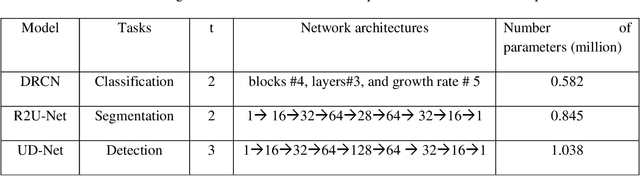

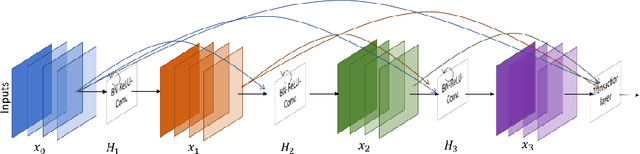

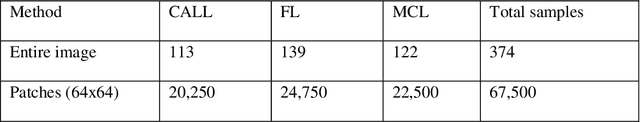

Apr 19, 2019

Abstract:Deep Learning (DL) approaches have been providing state-of-the-art performance in different modalities in the field of medical imaging, including Digital Pathology Image Analysis (DPIA). Among the many DL approaches, the Deep Convolutional Neural Network (DCNN) technique provides superior performance for classification, segmentation, and detection tasks. Most tasks in DPIA can be solved with classification, segmentation, and detection approaches. In addition, pre- and post-processing methods are sometimes applied to solve specific types of problems. Recently, different DCNN models, including the Inception residual recurrent CNN (IRRCNN), Densely Connected Recurrent Convolution Network (DCRCN), Recurrent Residual U-Net (R2U-Net), and R2U-Net based regression model (UD-Net), have been proposed and provide state-of-the-art performance for different computer vision and medical image analysis tasks. However, these advanced DCNN models have not been explored for solving different problems related to DPIA. In this study, we have applied these DCNN techniques to different DPIA problems and evaluated them on publicly available benchmark datasets for seven different tasks in digital pathology: lymphoma classification, Invasive Ductal Carcinoma (IDC) detection, nuclei segmentation, epithelium segmentation, tubule segmentation, lymphocyte detection, and mitosis detection. The experimental results are evaluated with different performance metrics such as sensitivity, specificity, accuracy, F1-score, Receiver Operating Characteristics (ROC) curve, dice coefficient (DC), and Mean Squared Error (MSE). The results demonstrate superior performance for classification, segmentation, and detection tasks compared to existing machine learning and DCNN based approaches.
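Several of the metrics listed above follow directly from the four confusion-matrix counts of a binary task. The sketch below uses the standard textbook definitions. The function name `classification_metrics` and the example counts are illustrative and are not results from the paper.

```python
def classification_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, accuracy, precision, and F1 from counts."""

    def ratio(numerator, denominator):
        # Return 0.0 instead of failing when a class is absent.
        return numerator / denominator if denominator else 0.0

    sensitivity = ratio(tp, tp + fn)   # recall, true positive rate
    specificity = ratio(tn, tn + fp)   # true negative rate
    accuracy = ratio(tp + tn, tp + fp + tn + fn)
    precision = ratio(tp, tp + fp)
    f1 = ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "precision": precision,
        "f1": f1,
    }


# Made-up counts for a detection task with rare positives.
metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With rare positives, accuracy can stay high while sensitivity is modest, which is one reason tasks such as mitosis detection are usually reported with F1-score rather than accuracy alone.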

Breast Cancer Classification from Histopathological Images with Inception Recurrent Residual Convolutional Neural Network

Nov 10, 2018

Abstract:The Deep Convolutional Neural Network (DCNN) is one of the most powerful and successful deep learning approaches. DCNNs have already provided superior performance in different modalities of medical imaging including breast cancer classification, segmentation, and detection. Breast cancer is one of the most common and dangerous cancers impacting women worldwide. In this paper, we have proposed a method for breast cancer classification with the Inception Recurrent Residual Convolutional Neural Network (IRRCNN) model. The IRRCNN is a powerful DCNN model that combines the strength of the Inception Network (Inception-v4), the Residual Network (ResNet), and the Recurrent Convolutional Neural Network (RCNN). The IRRCNN shows superior performance against equivalent Inception Networks, Residual Networks, and RCNNs for object recognition tasks. In this paper, the IRRCNN approach is applied for breast cancer classification on two publicly available datasets including BreakHis and Breast Cancer Classification Challenge 2015. The experimental results are compared against the existing machine learning and deep learning-based approaches with respect to image-based, patch-based, image-level, and patient-level classification. The IRRCNN model provides superior classification performance in terms of sensitivity, Area Under the Curve (AUC), the ROC curve, and global accuracy compared to existing approaches for both datasets.

Microscopic Nuclei Classification, Segmentation and Detection with improved Deep Convolutional Neural Network (DCNN) Approaches

Nov 08, 2018

Abstract:Due to cellular heterogeneity, cell nuclei classification, segmentation, and detection from pathological images are challenging tasks. In the last few years, Deep Convolutional Neural Network (DCNN) approaches have shown state-of-the-art (SOTA) performance on histopathological imaging in different studies. In this work, we have proposed different advanced DCNN models and evaluated them for nuclei classification, segmentation, and detection. First, the Densely Connected Recurrent Convolutional Network (DCRN) model is used for nuclei classification. Second, the Recurrent Residual U-Net (R2U-Net) is applied for nuclei segmentation. Third, the R2U-Net regression model, named UD-Net, is used for nuclei detection from pathological images. The experiments are conducted with different datasets, including the Routine Colon Cancer (RCC) classification and detection dataset and the Nuclei Segmentation Challenge 2018 dataset. The experimental results show that the proposed DCNN models provide superior performance compared to the existing approaches for nuclei classification, segmentation, and detection tasks. The results are evaluated with different performance metrics including precision, recall, Dice Coefficient (DC), Mean Squared Error (MSE), F1-score, and overall accuracy. We have achieved around 3.4% and 4.5% better F1-score for nuclei classification and detection tasks, respectively, compared to a recently published DCNN-based method. In addition, R2U-Net shows around 92.15% testing accuracy in terms of DC. These improved methods will help pathological practice through better quantitative analysis of nuclei in Whole Slide Images (WSI), which will ultimately support a better understanding of different types of cancer in the clinical workflow.

The History Began from AlexNet: A Comprehensive Survey on Deep Learning Approaches

Sep 12, 2018

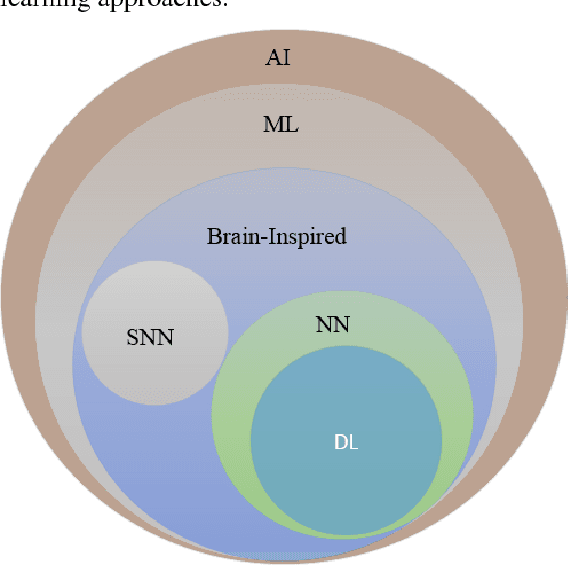

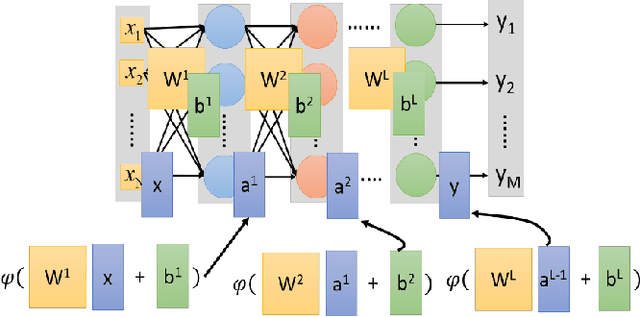

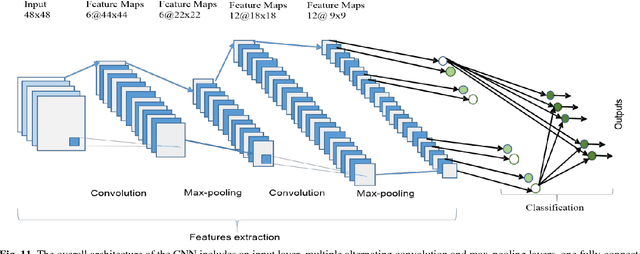

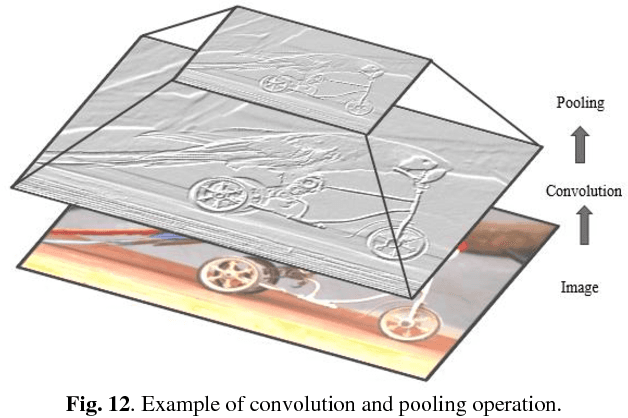

Abstract:Deep learning has demonstrated tremendous success in a variety of application domains in the past few years. This new field of machine learning has been growing rapidly and has been applied in most application domains, along with some new modalities of application, which helps to open new opportunities. Different methods have been proposed for different categories of learning approaches, including supervised, semi-supervised, and unsupervised learning. The experimental results show state-of-the-art performance of deep learning over traditional machine learning approaches in the fields of Image Processing, Computer Vision, Speech Recognition, Machine Translation, Art, Medical imaging, Medical information processing, Robotics and control, Bio-informatics, Natural Language Processing (NLP), Cyber security, and many more. This report presents a brief survey on the development of DL approaches, including Deep Neural Network (DNN), Convolutional Neural Network (CNN), Recurrent Neural Network (RNN) including Long Short Term Memory (LSTM) and Gated Recurrent Units (GRU), Auto-Encoder (AE), Deep Belief Network (DBN), Generative Adversarial Network (GAN), and Deep Reinforcement Learning (DRL). In addition, we have included recent developments of advanced variant DL techniques based on the mentioned DL approaches. Furthermore, DL approaches that have been explored and evaluated in different application domains are also included in this survey. We have also covered recently developed frameworks, SDKs, and benchmark datasets that are used for implementing and evaluating deep learning approaches. Some surveys have been published on Deep Learning in Neural Networks [1, 38], as well as a survey on RL [234]. However, those papers have not discussed the individual advanced techniques for training large scale deep learning models and the recently developed method of generative models [1].

Bangla License Plate Recognition Using Convolutional Neural Networks (CNN)

Sep 04, 2018

Abstract:In the last few years, deep learning techniques, in particular Convolutional Neural Networks (CNNs), have been used extensively in the field of computer vision and machine learning. This deep learning technique provides state-of-the-art accuracy in different classification, segmentation, and detection tasks on different benchmarks such as MNIST, CIFAR-10, CIFAR-100, Microsoft COCO, and ImageNet. Although a lot of research on Bangla license plate recognition with traditional machine learning approaches has been conducted in the last decade, none of it has been used to deploy a physical Bangla License Plate Recognition System (BLPRS) because of poor recognition accuracy. In this paper, we have implemented a CNN-based Bangla license plate recognition system with better accuracy that can be applied for different purposes including roadside assistance, automatic parking lot management, vehicle license status detection, and so on. Along with that, we have also created and released the first standard database for BLPRS.
