Tanja Katharina Kaiser

CloudTrack: Scalable UAV Tracking with Cloud Semantics

Sep 24, 2024

Abstract:Nowadays, unmanned aerial vehicles (UAVs) are commonly used in search and rescue scenarios to gather information in the search area. The automatic identification of the person searched for in aerial footage could increase the autonomy of such systems, reduce the search time, and thus increase the missing person's chances of survival. In this paper, we present a novel approach to perform semantically conditioned open vocabulary object tracking that is specifically designed to cope with the limitations of UAV hardware. Our approach has several advantages. It can run with verbal descriptions of the missing person, e.g., the color of the shirt; it does not require dedicated training to execute the mission; and it can efficiently track a potentially moving person. Our experimental results demonstrate the versatility and efficacy of our approach.

Self-Organized Construction by Minimal Surprise

May 05, 2024

Abstract:For robots to achieve a desired behavior, we can program them directly, train them, or give them an innate driver that makes the robots themselves desire the targeted behavior. With the minimal surprise approach, we implant in our robots the desire to make their world predictable. Here, we apply minimal surprise to collective construction. Simulated robots push blocks in a 2D torus grid world. In two variants of our experiment, we either allow for emergent behaviors or predefine the expected environment of the robots. Either way, we evolve robot behaviors that move blocks to structure their environment and make it more predictable. The resulting controllers can be applied in collective construction by robots.

Innate Motivation for Robot Swarms by Minimizing Surprise: From Simple Simulations to Real-World Experiments

May 04, 2024

Abstract:Applications of large-scale mobile multi-robot systems can be beneficial over monolithic robots because of higher potential for robustness and scalability. Developing controllers for multi-robot systems is challenging because the multitude of interactions is hard to anticipate and difficult to model. Automatic design using machine learning or evolutionary robotics seems to be an option to avoid that challenge, but brings the challenge of designing reward or fitness functions. Generic reward and fitness functions seem unlikely to exist, and task-specific rewards often have undesired side effects. Approaches of so-called innate motivation try to avoid the specific formulation of rewards and work instead with different drivers, such as curiosity. Our approach to innate motivation is to minimize surprise, which we implement by maximizing the accuracy of the swarm robot's sensor predictions using neuroevolution. A unique advantage of the swarm robot case is that swarm members populate the robot's environment and can trigger more active behaviors in a self-referential loop. We summarize our previous simulation-based results concerning behavioral diversity, robustness, scalability, and engineered self-organization, and put them into context. In several new studies, we analyze the influence of the optimizer's hyperparameters, the scalability of evolved behaviors, and the impact of realistic robot simulations. Finally, we present results using real robots that show how the reality gap can be bridged.

ROS2swarm - A ROS 2 Package for Swarm Robot Behaviors

May 03, 2024

Abstract:Developing reusable software for mobile robots is still challenging. Even more so for swarm robots, despite the desired simplicity of the robot controllers. Prototyping and experimenting are difficult due to the multi-robot setting and often require robot-robot communication. Also, the diversity of swarm robot hardware platforms increases the need for hardware-independent software concepts. The main advantages of the commonly used robot software architecture ROS 2 are modularity and platform independence. We propose a new ROS 2 package, ROS2swarm, for applications of swarm robotics that provides a library of ready-to-use swarm behavioral primitives. We show the successful application of our approach on three different platforms, the TurtleBot3 Burger, the TurtleBot3 Waffle Pi, and the Jackal UGV, and with a set of different behavioral primitives, such as aggregation, dispersion, and collective decision-making. The proposed approach is easy to maintain, extendable, and has good potential for simplifying swarm robotics experiments in future applications.

Learning from Evolution: Improving Collective Decision-Making Mechanisms using Insights from Evolutionary Robotics

May 03, 2024

Abstract:Collective decision-making enables multi-robot systems to act autonomously in real-world environments. Existing collective decision-making mechanisms suffer from the so-called speed versus accuracy trade-off or rely on high complexity, e.g., by including global communication. Recent work has shown that more efficient collective decision-making mechanisms based on artificial neural networks can be generated using methods from evolutionary computation. A major drawback of these decision-making neural networks is their limited interpretability. Analyzing evolved decision-making mechanisms can help us improve the efficiency of hand-coded decision-making mechanisms while maintaining a higher interpretability. In this paper, we analyze evolved collective decision-making mechanisms in detail and hand-code two new decision-making mechanisms based on the insights gained. In benchmark experiments, we show that the newly implemented collective decision-making mechanisms are more efficient than the state-of-the-art collective decision-making mechanisms voter model and majority rule.

Evolution of Collective Decision-Making Mechanisms for Collective Perception

Nov 06, 2023

Abstract:Autonomous robot swarms must be able to make fast and accurate collective decisions, but speed and accuracy are known to be conflicting goals. While collective decision-making is widely studied in swarm robotics research, only a few works use methods of evolutionary computation to generate collective decision-making mechanisms. These works use task-specific fitness functions rewarding the accomplishment of the respective collective decision-making task. But task-independent rewards, such as for prediction error minimization, may promote the emergence of diverse and innovative solutions. We evolve collective decision-making mechanisms using a task-specific fitness function rewarding correct robot opinions, a task-independent reward for prediction accuracy, and a hybrid fitness function combining the two. In our simulations, we use the collective perception scenario, that is, robots must collectively determine which of two environmental features is more frequent. We show that evolution successfully optimizes fitness in all three scenarios, but that only the task-specific fitness function and the hybrid fitness function lead to the emergence of collective decision-making behaviors. In benchmark experiments, we show the competitiveness of the evolved decision-making mechanisms to the voter model and the majority rule and analyze the scalability of the decision-making mechanisms with problem difficulty.
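
For readers unfamiliar with the two baselines named in this abstract, the following is a minimal sketch of how the voter model and the majority rule are commonly formulated for a binary opinion. It is not taken from the paper: the function names, the binary opinion encoding (0 or 1), and the tie-breaking choice (keep the current opinion) are assumptions made for illustration.

```python
import random
from collections import Counter


def voter_model(own_opinion, neighbor_opinions, rng=random):
    """Voter model (common formulation): copy one randomly chosen neighbor.

    Keeps the current opinion if no neighbor is in range (assumption).
    """
    if not neighbor_opinions:
        return own_opinion
    return rng.choice(neighbor_opinions)


def majority_rule(own_opinion, neighbor_opinions):
    """Majority rule (common formulation): adopt the most frequent opinion
    among the robot itself and its neighbors.

    Ties are broken in favor of the current opinion (assumption).
    """
    counts = Counter(neighbor_opinions)
    counts[own_opinion] += 1
    top = max(counts.values())
    winners = [opinion for opinion, count in counts.items() if count == top]
    # With binary opinions there is exactly one winner whenever the
    # robot's own opinion is not among the winners.
    return own_opinion if own_opinion in winners else winners[0]
```

In this sketch the voter model needs only a single neighbor's opinion per update, while the majority rule aggregates over the whole neighborhood, which is one way to see the speed versus accuracy tension the abstract refers to.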

Engineered Self-Organization for Resilient Robot Self-Assembly with Minimal Surprise

Feb 14, 2019

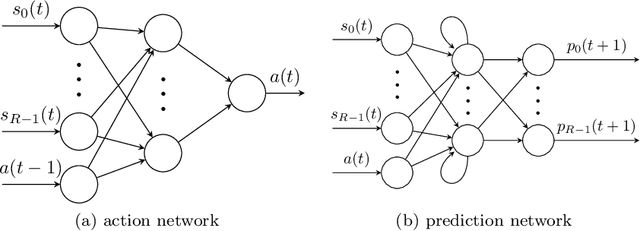

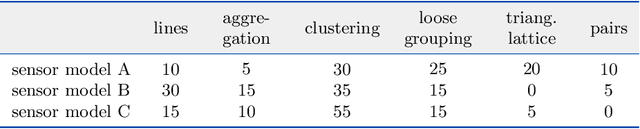

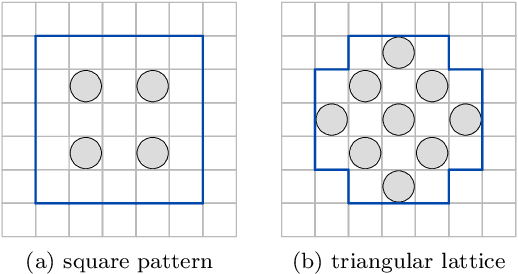

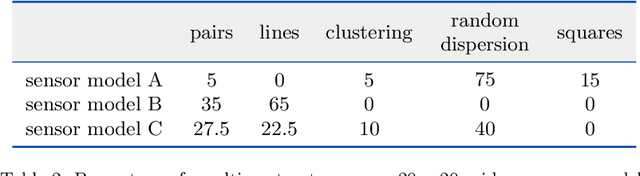

Abstract:In collective robotic systems, the automatic generation of controllers for complex tasks is still a challenging problem. Open-ended evolution of complex robot behaviors can be a possible solution whereby an intrinsic driver for pattern formation and self-organization may prove to be important. We implement such a driver in collective robot systems by evolving prediction networks as world models paired with action-selection networks. Fitness is given for good predictions, which causes a bias towards easily predictable environments and behaviors in the form of emergent patterns, that is, environments of minimal surprise. There is no task-dependent bias or any other explicit predetermination for the different qualities of the emerging patterns. A careful configuration of actions, sensor models, and the environment is required to stimulate the emergence of complex behaviors. We study self-assembly to increase the scenario's complexity for our minimal surprise approach and, at the same time, limit the complexity of our simulations to a grid world to manage the feasibility of this approach. We investigate the impact of different swarm densities and the shape of the environment on the emergent patterns. Furthermore, we study how evolution can be biased towards the emergence of desired patterns. We analyze the resilience of the resulting self-assembly behaviors by damaging the assembled pattern and observing the self-organized self-repair process. In summary, we evolved swarm behaviors for resilient self-assembly and successfully engineered self-organization in simulation. In future work, we plan to transfer our approach to a swarm of real robots.
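
The idea that "fitness is given for good predictions" can be illustrated with a small sketch. It assumes binary sensor values and scores a robot by the fraction of sensor values its prediction network predicted correctly over an evaluation run; the function name, the data layout, and the exact normalization are hypothetical and may differ from the fitness used in the paper.

```python
def prediction_fitness(predicted, observed):
    """Fraction of correctly predicted binary sensor values.

    predicted[t][r] and observed[t][r] hold the value of sensor r at time
    step t (hypothetical layout). Returns a value in [0, 1]; 1.0 means the
    robot was never surprised by its sensor readings.
    """
    total = 0
    correct = 0
    for predicted_row, observed_row in zip(predicted, observed):
        for p, o in zip(predicted_row, observed_row):
            total += 1
            correct += int(p == o)
    return correct / total if total else 0.0


# Two time steps, two sensors: three of the four predictions match.
fitness = prediction_fitness([[1, 0], [0, 0]], [[1, 1], [0, 0]])
```

Because the score rewards only prediction accuracy, it contains no term for any particular task, which matches the abstract's statement that there is no task-dependent bias.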
