Tamal Bose

Generalization Bounds for Neural Belief Propagation Decoders

May 17, 2023

Abstract: Machine learning based approaches are increasingly used to design decoders for next-generation communication systems. One widely used framework is neural belief propagation (NBP), which unfolds the belief propagation (BP) iterations into a deep neural network whose parameters are trained in a data-driven manner. NBP decoders have been shown to improve upon classical decoding algorithms. In this paper, we investigate the generalization capabilities of NBP decoders. Specifically, the generalization gap of a decoder is the difference between its empirical and expected bit-error rates. We present new theoretical results that bound this gap and show its dependence on the decoder complexity, in terms of code parameters (blocklength, message length, variable/check node degrees), the number of decoding iterations, and the training dataset size. Results are presented for both regular and irregular parity-check matrices. To the best of our knowledge, this is the first set of theoretical results on the generalization performance of neural network based decoders. We present experimental results that show how the generalization gap depends on the training dataset size and the number of decoding iterations for different codes.
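
The generalization gap described in the abstract can be written out as follows. This is only an illustrative sketch: the symbols $f$, $n$, $m$, $x$, $y$, $R$, and $\hat{R}$, and the sign convention of the gap, are assumptions introduced here and are not taken from the paper. Let $f$ be a decoder, $x \in \{0,1\}^n$ a transmitted codeword of blocklength $n$, $y$ the corresponding channel output, and $(x_j, y_j)_{j=1}^{m}$ a training set of size $m$.

$$
\ell\big(f(y), x\big) = \frac{1}{n} \sum_{i=1}^{n} \mathbb{1}\big[f(y)_i \neq x_i\big],
\qquad
\hat{R}(f) = \frac{1}{m} \sum_{j=1}^{m} \ell\big(f(y_j), x_j\big),
\qquad
R(f) = \mathbb{E}_{(x,y)}\big[\ell\big(f(y), x\big)\big]
$$

$$
\text{generalization gap} = R(f) - \hat{R}(f)
$$

Under this notation, $\hat{R}(f)$ is the empirical bit-error rate on the training set and $R(f)$ is the expected bit-error rate; a bound of the kind the abstract describes would control their difference in terms of the code parameters, the number of decoding iterations, and $m$.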

Adversarial Filters for Secure Modulation Classification

Aug 15, 2020

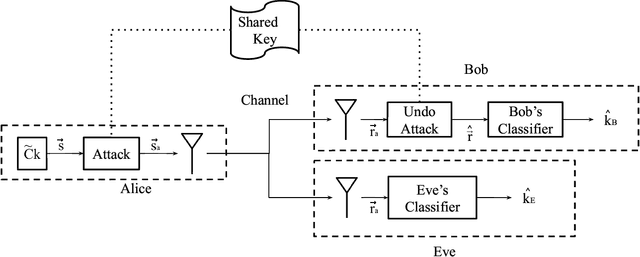

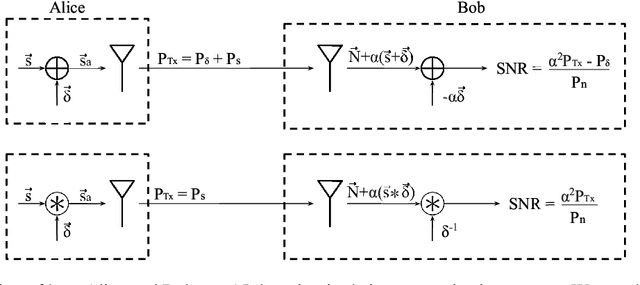

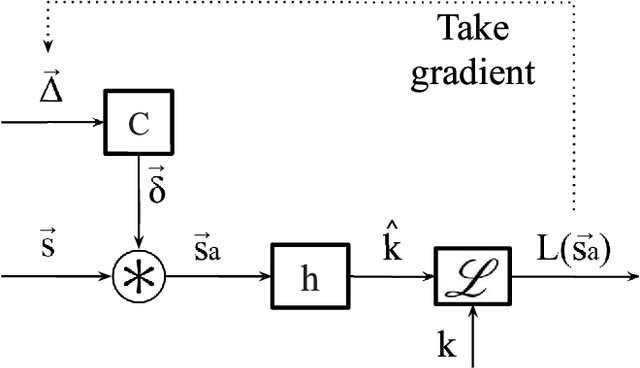

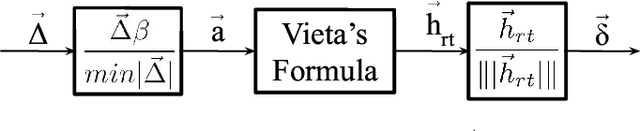

Abstract: Modulation Classification (MC) refers to the problem of classifying the modulation class of a wireless signal. In the wireless communications pipeline, MC is the first operation performed on the received signal and is critical for reliable decoding. This paper considers the problem of secure modulation classification, where a transmitter (Alice) wants to maximize MC accuracy at a legitimate receiver (Bob) while minimizing MC accuracy at an eavesdropper (Eve). The contribution of this work is the design of novel adversarial learning techniques for secure MC. In particular, we present adversarial filtering based algorithms for secure MC, in which Alice uses a carefully designed adversarial filter to mask the transmitted signal so as to maximize MC accuracy at Bob while minimizing MC accuracy at Eve. We present two filtering based algorithms with varying levels of complexity, namely the gradient ascent filter (GAF) and the fast gradient filter method (FGFM). Our proposed adversarial filtering based approaches significantly outperform additive adversarial perturbations (used in the traditional ML community and other prior works on secure MC) and also have several other desirable properties. In particular, the GAF and FGFM algorithms are a) computationally efficient (allow fast decoding at Bob), b) power-efficient (do not require excessive transmit power at Alice), and c) SNR-efficient (i.e., perform well even at low SNR values at Bob).
