Talmaj Marinč

Analyzing ImageNet with Spectral Relevance Analysis: Towards ImageNet un-Hans'ed

Dec 22, 2019

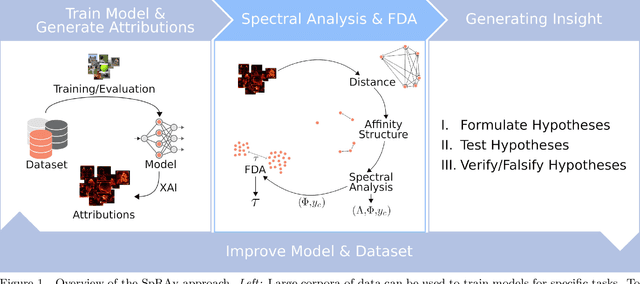

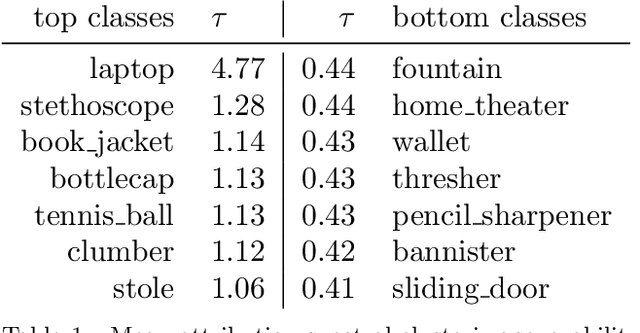

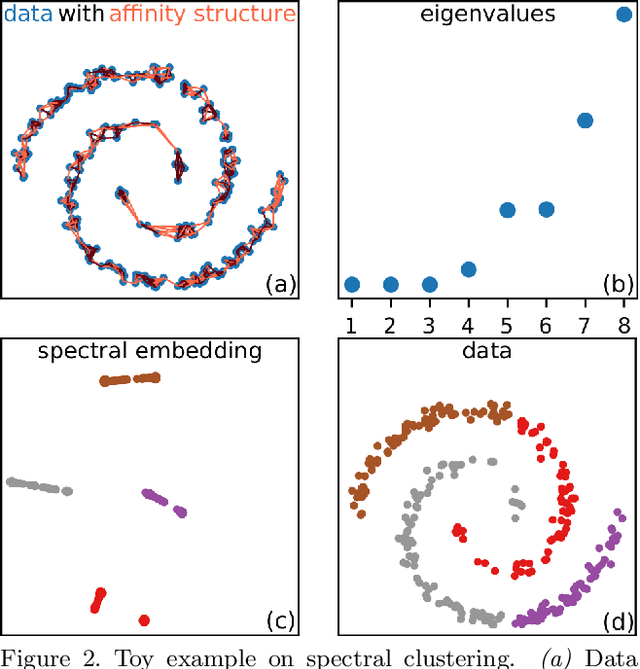

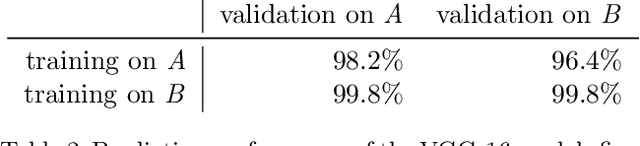

Abstract: Today's machine learning models for computer vision are typically trained on very large (benchmark) data sets with millions of samples. These may, however, contain biases, artifacts, or errors that have gone unnoticed and are exploited by the model. In the worst case, the trained model may become a 'Clever Hans' predictor that does not learn a valid and generalizable strategy to solve the problem it was trained for, but bases its decisions on spurious correlations in the training data. Recently developed techniques make it possible to explain individual model decisions and thus to gain deeper insights into the model's prediction strategies. In this paper, we contribute a comprehensive analysis framework based on a scalable statistical analysis of attributions from explanation methods for large data corpora, here ImageNet. Building on a recent technique, Spectral Relevance Analysis (SpRAy), we propose three technical contributions and resulting findings: (a) novel similarity metrics based on the Wasserstein distance for comparing attributions, allowing for the first time scale-, translation-, and rotation-invariant comparisons of attributions, (b) a scalable quantification of artifactual and poisoned classes where the ML models under study exhibit Clever Hans behavior, and (c) a cleaning procedure that systematically relieves data of artifacts and biases, yielding significantly reduced Clever Hans behavior, i.e., we un-Hans the ImageNet data corpus. Using this novel method set, we provide qualitative and quantitative analyses of the biases and artifacts in ImageNet and demonstrate that using these insights can give rise to improved models and functionally cleaned data corpora.
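To illustrate why a Wasserstein-type comparison of attributions can ignore where relevance sits in the image, here is a minimal sketch. It is not the metric from the paper, whose exact definition the abstract does not give. It assumes the simplest possible variant: the 1D Wasserstein-1 distance between the empirical distributions of relevance values of two equally sized heatmaps. The function name `wasserstein_1d` and the random heatmap are hypothetical stand-ins for real attribution maps.

```python
import numpy as np

def wasserstein_1d(a, b):
    """Wasserstein-1 distance between the empirical value distributions of a and b.

    Assumption: both maps contain the same number of values, in which case
    the distance equals the mean absolute difference of the sorted values.
    """
    a = np.sort(np.asarray(a, dtype=float).ravel())
    b = np.sort(np.asarray(b, dtype=float).ravel())
    if a.size != b.size:
        raise ValueError("this sketch only handles maps with equal element counts")
    return float(np.mean(np.abs(a - b)))

# Hypothetical attribution heatmap (random values stand in for real relevances).
rng = np.random.default_rng(0)
heatmap = rng.random((8, 8))

# A cyclic shift and a 90-degree rotation only move values around the grid,
# so the distribution of relevance values is unchanged.
shifted = np.roll(heatmap, shift=(2, 3), axis=(0, 1))
rotated = np.rot90(heatmap)

d_shift = wasserstein_1d(heatmap, shifted)
d_rot = wasserstein_1d(heatmap, rotated)
d_offset = wasserstein_1d(heatmap, heatmap + 1.0)
```

Because this variant discards all spatial layout, it is invariant to any rearrangement of pixels, which is stronger than translation and rotation invariance. It does not address scale invariance, which would need an additional step not shown here.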

Multi-Kernel Prediction Networks for Denoising of Burst Images

Feb 05, 2019

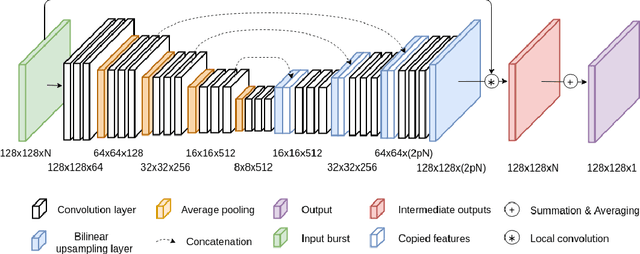

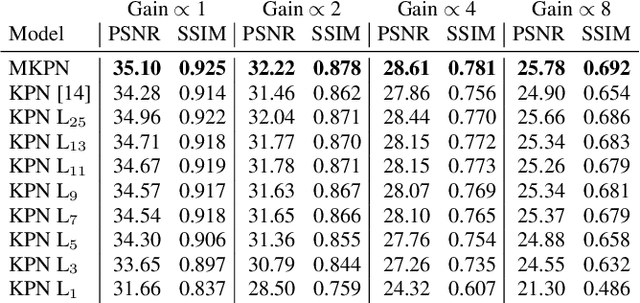

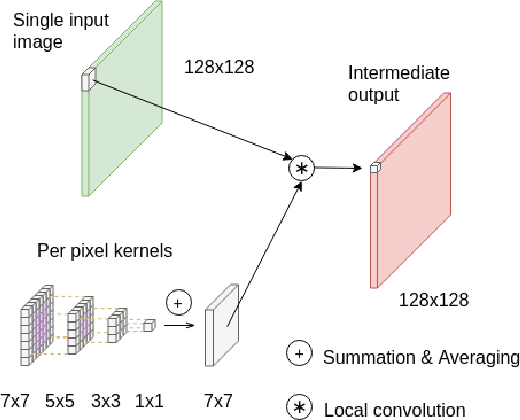

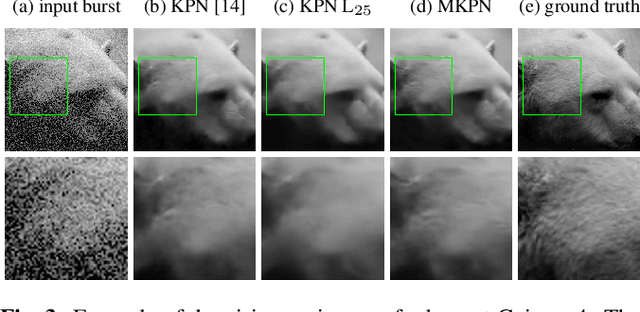

Abstract: In low-light or short-exposure photography, the image is often corrupted by noise. While longer exposure helps reduce the noise, it can produce blurry results due to object and camera motion. The reconstruction of a noiseless image is an ill-posed problem. Recent approaches to image denoising aim to predict kernels which are convolved with a set of successively taken images (a burst) to obtain a clear image. We propose a deep neural network based approach called Multi-Kernel Prediction Networks (MKPN) for burst image denoising. MKPN predicts kernels of not just one size but of varying sizes and fuses these different kernels, resulting in one kernel per pixel. The advantages of our method are twofold: (a) the differently sized kernels help extract different information from the image, which results in better reconstruction, and (b) kernel fusion ensures that the extracted information is retained while maintaining computational efficiency. Experimental results reveal that MKPN outperforms the state of the art on our synthetic datasets with different noise levels.
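The kernel-prediction idea described in the abstract can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the MKPN implementation: the kernels here are supplied by hand rather than predicted by a network, the fusion rule (zero-padding smaller kernels to the largest size and summing) is only one plausible choice and may differ from the paper's, and the names `fuse_kernels` and `apply_per_pixel_kernels` are hypothetical.

```python
import numpy as np

def fuse_kernels(kernels_by_size):
    """Fuse per-pixel kernels of several odd sizes into one kernel per pixel.

    Each array has shape (N, k, k, H, W): N burst frames, a k x k kernel
    for every pixel. Assumed fusion rule: zero-pad to the largest size, then sum.
    """
    k_max = max(k.shape[1] for k in kernels_by_size)
    n, _, _, h, w = kernels_by_size[0].shape
    fused = np.zeros((n, k_max, k_max, h, w))
    for k in kernels_by_size:
        size = k.shape[1]
        pad = (k_max - size) // 2  # centre the smaller kernel
        fused[:, pad:pad + size, pad:pad + size] += k
    return fused

def apply_per_pixel_kernels(burst, kernels):
    """Apply a separate kernel at every pixel of every frame and sum over frames.

    burst: (N, H, W); kernels: (N, K, K, H, W) with odd K.
    """
    n, h, w = burst.shape
    k = kernels.shape[1]
    r = k // 2
    # Replicate border pixels so every kernel has a full neighbourhood.
    padded = np.pad(burst, ((0, 0), (r, r), (r, r)), mode="edge")
    out = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            # Weight of tap (i, j) at each pixel times the matching neighbour.
            out += (kernels[:, i, j] * padded[:, i:i + h, j:j + w]).sum(axis=0)
    return out

# Sanity check with hand-made kernels: a centre tap of 1/N per frame
# should reduce to plain averaging of the burst.
rng = np.random.default_rng(0)
burst = rng.random((4, 5, 6))
k3 = np.zeros((4, 3, 3, 5, 6))
k3[:, 1, 1] = 1.0 / 4
k5 = np.zeros((4, 5, 5, 5, 6))
fused = fuse_kernels([k3, k5])
denoised = apply_per_pixel_kernels(burst, fused)
```

Fusing first and applying once means the per-pixel filtering cost depends only on the largest kernel size, which is one way to read the abstract's point about retaining information while staying efficient.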
