Taha ValizadehAslani

LayerNorm: A key component in parameter-efficient fine-tuning

Mar 29, 2024

Abstract: Fine-tuning a pre-trained model, such as Bidirectional Encoder Representations from Transformers (BERT), has proven to be an effective method for solving many natural language processing (NLP) tasks. However, due to the large number of parameters in many state-of-the-art NLP models, including BERT, fine-tuning is computationally expensive. One attractive solution is parameter-efficient fine-tuning, which modifies only a minimal segment of the model while keeping the remainder unchanged. Yet it remains unclear which segment of the BERT model is crucial for fine-tuning. In this paper, we first analyze different components of the BERT model to pinpoint which one undergoes the most significant changes after fine-tuning. We find that the output LayerNorm changes more than any other component when fine-tuned for different General Language Understanding Evaluation (GLUE) tasks. We then show that fine-tuning only the LayerNorm can reach performance comparable to, or in some cases better than, full fine-tuning and other parameter-efficient fine-tuning methods. Moreover, we use Fisher information to determine the most critical subset of LayerNorm and demonstrate that many NLP tasks in the GLUE benchmark can be solved by fine-tuning only a small portion of LayerNorm with negligible performance degradation.
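As a rough illustration of the two ideas in this abstract, the sketch below selects the LayerNorm parameters by name and ranks parameters by a diagonal empirical Fisher estimate (the mean squared gradient). Everything here is an assumption for illustration: the parameter names, the gradient values, the helper names, and the simplification of treating each named parameter as a single scalar. The paper's actual selection procedure may differ.

```python
from typing import Dict, List


def layernorm_only(param_names: List[str]) -> List[str]:
    """Names that stay trainable when only LayerNorm is tuned (assumed naming)."""
    return [name for name in param_names if "LayerNorm" in name]


def empirical_fisher(grad_samples: List[Dict[str, float]]) -> Dict[str, float]:
    """Diagonal empirical Fisher estimate: mean squared gradient per parameter."""
    names = grad_samples[0].keys()
    count = len(grad_samples)
    return {n: sum(g[n] ** 2 for g in grad_samples) / count for n in names}


def top_k_by_fisher(fisher: Dict[str, float], k: int) -> List[str]:
    """Keep the k parameters with the largest Fisher estimate."""
    return sorted(fisher, key=fisher.get, reverse=True)[:k]


if __name__ == "__main__":
    # Hypothetical parameter names and per-example gradients.
    names = [
        "encoder.layer.0.attention.self.query.weight",
        "encoder.layer.0.output.LayerNorm.weight",
        "encoder.layer.0.output.LayerNorm.bias",
    ]
    trainable = layernorm_only(names)
    grads = [
        {trainable[0]: 0.5, trainable[1]: 0.1},
        {trainable[0]: -0.7, trainable[1]: 0.2},
    ]
    print(top_k_by_fisher(empirical_fisher(grads), k=1))
```

In a real training setup the same name filter would decide which tensors have gradients enabled, and the Fisher estimate would be accumulated per tensor element rather than per name.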

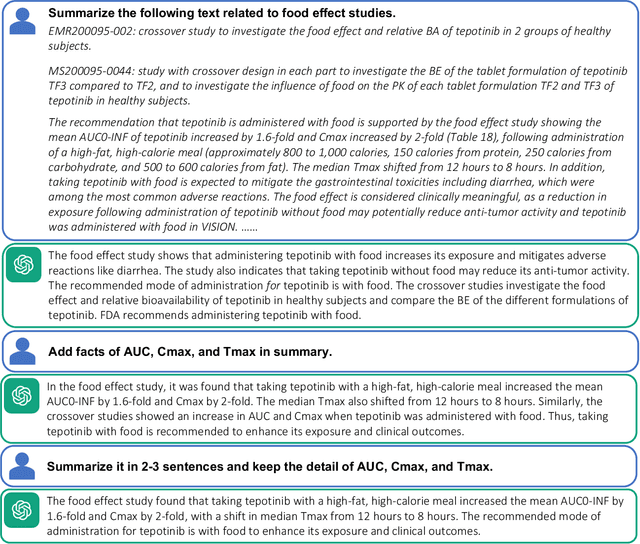

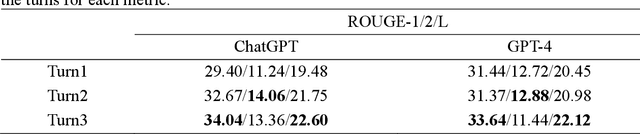

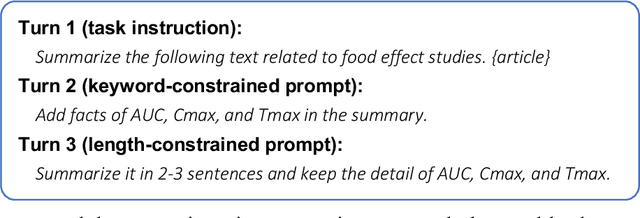

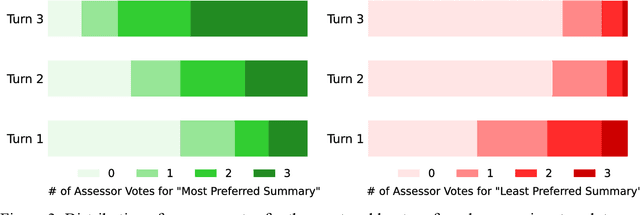

Leveraging GPT-4 for Food Effect Summarization to Enhance Product-Specific Guidance Development via Iterative Prompting

Jun 28, 2023

Abstract: Food effect summarization from a New Drug Application (NDA) is an essential component of product-specific guidance (PSG) development and assessment. However, manual summarization of food effect from extensive drug application review documents is time-consuming, which creates a need for automated methods. Recent advances in large language models (LLMs) such as ChatGPT and GPT-4 have demonstrated great potential for improving automated text summarization, but their accuracy in summarizing food effect for PSG assessment remains unclear. In this study, we introduce a simple yet effective approach, iterative prompting, which allows one to interact with ChatGPT or GPT-4 more effectively and efficiently through multi-turn interaction. Specifically, we propose a three-turn iterative prompting approach to food effect summarization in which keyword-focused and length-controlled prompts are provided in consecutive turns to refine the quality of the generated summary. We conduct a series of extensive evaluations, ranging from automated metrics to evaluation by FDA professionals and even by GPT-4, on 100 NDA review documents selected over the past five years. We observe that summary quality improves progressively throughout the process. Moreover, we find that GPT-4 performs better than ChatGPT, as evaluated by FDA professionals (43% vs. 12%) and GPT-4 (64% vs. 35%). Importantly, the FDA professionals unanimously rated 85% of the summaries generated by GPT-4 as factually consistent with the golden reference summary, a finding further supported by a GPT-4 rating of 72% consistency. These results strongly suggest a great potential for GPT-4 to draft food effect summaries that could be reviewed by FDA professionals, thereby improving the efficiency of the PSG assessment cycle and promoting generic drug product development.
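The three-turn interaction described above could be sketched as a loop over a running chat history. This is only an illustration under stated assumptions: the prompt wording, the `chat` callable, the message format, and the `fake_model` stub are all hypothetical and stand in for whatever client and prompts the authors used.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def iterative_summary(
    document: str,
    chat: Callable[[List[Message]], str],
    keywords: List[str],
    max_words: int,
) -> List[str]:
    """Run three turns (initial, keyword-focused, length-controlled) and return each draft."""
    prompts = [
        f"Summarize the food effect findings in this review:\n{document}",
        "Revise the summary so that it covers these keywords: " + ", ".join(keywords),
        f"Shorten the summary to at most {max_words} words.",
    ]
    history: List[Message] = []
    drafts: List[str] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        reply = chat(history)  # the model sees all earlier turns
        history.append({"role": "assistant", "content": reply})
        drafts.append(reply)
    return drafts


def fake_model(history: List[Message]) -> str:
    """Stand-in for a real LLM call: reports how many user turns it has seen."""
    turns = sum(1 for message in history if message["role"] == "user")
    return f"draft after {turns} user turn(s)"


if __name__ == "__main__":
    for draft in iterative_summary("example review text", fake_model, ["AUC", "Cmax"], 100):
        print(draft)
```

Keeping every intermediate draft makes it possible to compare summary quality turn by turn, which is how the abstract reports progressive improvement.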

Fine-Tuning BERT for Automatic ADME Semantic Labeling in FDA Drug Labeling to Enhance Product-Specific Guidance Assessment

Jul 25, 2022

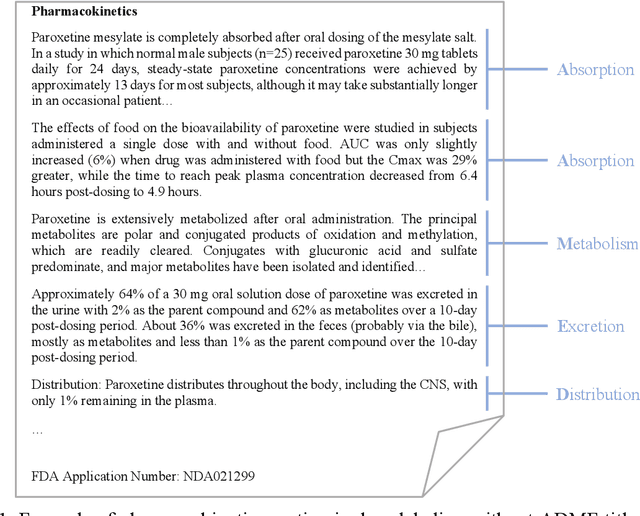

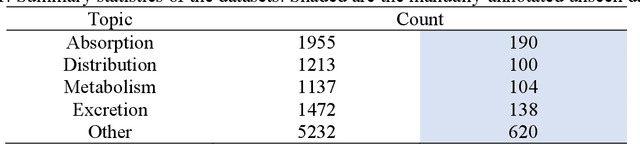

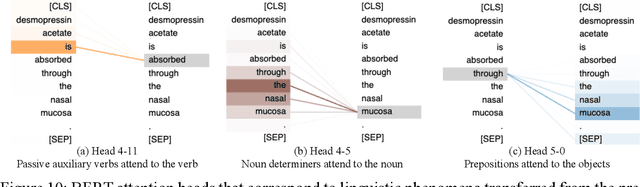

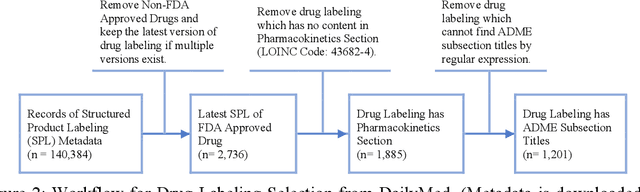

Abstract: Product-specific guidances (PSGs) recommended by the United States Food and Drug Administration (FDA) are instrumental in promoting and guiding generic drug product development. To assess a PSG, the FDA assessor needs to spend extensive time and effort manually retrieving supportive drug information on absorption, distribution, metabolism, and excretion (ADME) from the reference listed drug labeling. In this work, we leveraged state-of-the-art pre-trained language models to automatically label the ADME paragraphs in the pharmacokinetics section of FDA-approved drug labeling to facilitate PSG assessment. We applied a transfer learning approach by fine-tuning the pre-trained Bidirectional Encoder Representations from Transformers (BERT) model to develop a novel application of ADME semantic labeling, which can automatically retrieve ADME paragraphs from drug labeling in place of manual work. We demonstrated that fine-tuning the pre-trained BERT model can outperform conventional machine learning techniques, achieving up to 11.6% absolute F1 improvement. To our knowledge, we were the first to successfully apply BERT to the ADME semantic labeling task. We further assessed the relative contribution of pre-training and fine-tuning to the overall performance of the BERT model in the ADME semantic labeling task using a series of analysis methods such as attention similarity and layer-based ablations. Our analysis revealed that the information learned via fine-tuning is focused on task-specific knowledge in the top layers of BERT, whereas the benefit from the pre-trained BERT model comes from the bottom layers.

Two-Stage Fine-Tuning: A Novel Strategy for Learning Class-Imbalanced Data

Jul 22, 2022

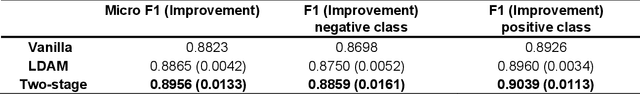

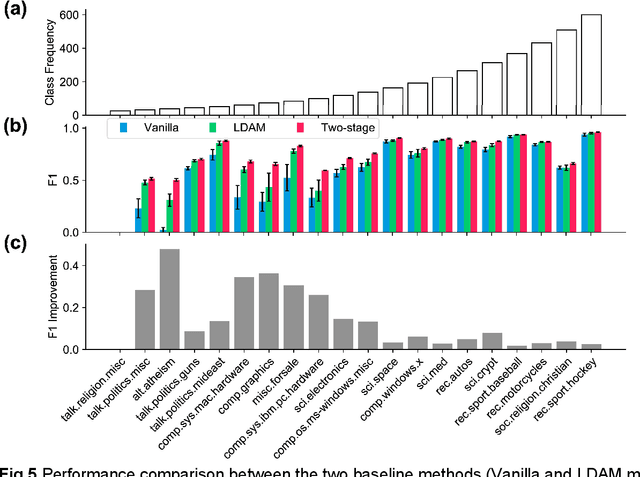

Abstract: Classification on long-tailed data is a challenging problem: it suffers from serious class imbalance and hence poor performance on tail classes with only a few samples. Owing to this paucity of samples, learning on the tail classes is especially challenging for fine-tuning when transferring a pretrained model to a downstream task. In this work, we present a simple modification of standard fine-tuning to cope with these challenges. Specifically, we propose a two-stage fine-tuning: we first fine-tune the final layer of the pretrained model with a class-balanced reweighting loss, and then we perform the standard fine-tuning. Our modification has several benefits: (1) it leverages pretrained representations by fine-tuning only a small portion of the model parameters while keeping the rest untouched; (2) it allows the model to learn an initial representation of the specific task; and importantly (3) it protects the learning of tail classes from being at a disadvantage during model updating. We conduct extensive experiments on synthetic datasets of both two-class and multi-class text classification tasks as well as a real-world application to ADME (i.e., absorption, distribution, metabolism, and excretion) semantic labeling. The experimental results show that the proposed two-stage fine-tuning outperforms both fine-tuning with conventional loss and fine-tuning with a reweighting loss on the above datasets.
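The two-stage schedule above can be illustrated with a small, dependency-free sketch. It assumes inverse-frequency class weights as the reweighting scheme, which is only one common choice and may not match the loss used in the paper; the function names, the parameter names, and the `classifier.` prefix are likewise hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def class_balanced_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Inverse-frequency weights (assumed scheme): rarer classes get larger weights."""
    counts = Counter(labels)
    total, num_classes = len(labels), len(counts)
    return {cls: total / (num_classes * cnt) for cls, cnt in counts.items()}


def weighted_cross_entropy(
    probs: Sequence[Sequence[float]],
    labels: Sequence[int],
    weights: Dict[int, float],
) -> float:
    """Cross-entropy where each example is scaled by its class weight."""
    total = sum(-weights[y] * math.log(p[y]) for p, y in zip(probs, labels))
    return total / sum(weights[y] for y in labels)


def two_stage_plan(
    param_names: List[str], final_layer_prefix: str
) -> List[Tuple[str, List[str]]]:
    """Stage 1: final layer only, reweighted loss. Stage 2: all parameters, standard loss."""
    final_layer = [n for n in param_names if n.startswith(final_layer_prefix)]
    return [("reweighted", final_layer), ("standard", list(param_names))]


if __name__ == "__main__":
    labels = [0, 0, 0, 1]  # class 1 is the tail class
    weights = class_balanced_weights(labels)
    probs = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.6, 0.4]]
    print(weights, weighted_cross_entropy(probs, labels, weights))
    print(two_stage_plan(["encoder.weight", "classifier.weight"], "classifier."))
```

With these weights, a misclassified tail example contributes more to the stage-one loss than a head example, which is the intuition behind protecting tail classes before the full model is updated.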
