Sven Verdoolaege

Tensor Comprehensions: Framework-Agnostic High-Performance Machine Learning Abstractions

Jun 29, 2018

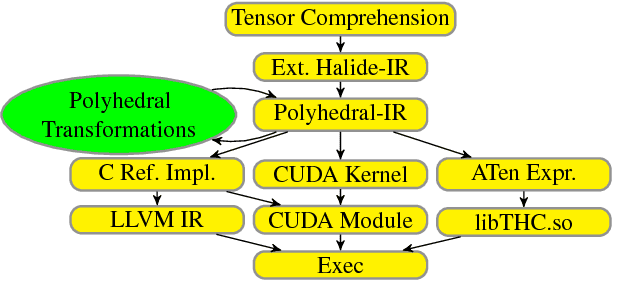

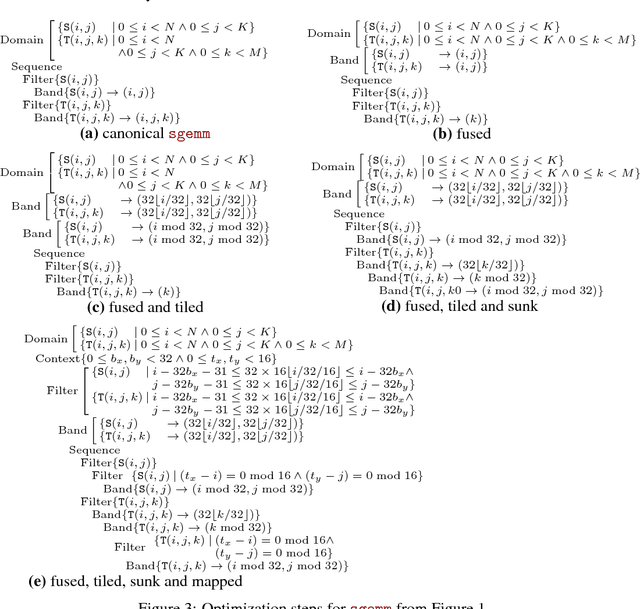

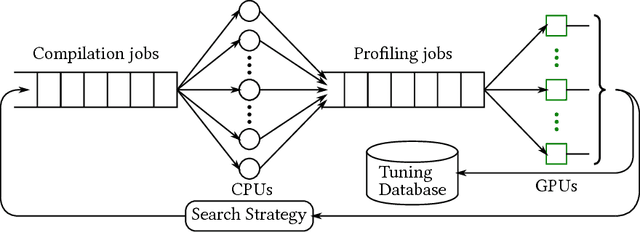

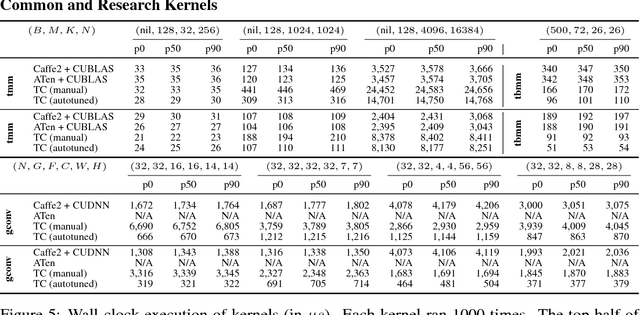

Abstract: Deep learning models with convolutional and recurrent networks are now ubiquitous and analyze massive amounts of audio, image, video, text and graph data, with applications in automatic translation, speech-to-text, scene understanding, ranking user preferences, ad placement, etc. Competing frameworks for building these networks, such as TensorFlow, Chainer, CNTK, Torch/PyTorch, Caffe1/2, MXNet and Theano, explore different tradeoffs between usability and expressiveness, research or production orientation, and supported hardware. They operate on a DAG of computational operators, wrapping high-performance libraries such as CUDNN (for NVIDIA GPUs) or NNPACK (for various CPUs), and automate memory allocation, synchronization, and distribution. Custom operators are needed where the computation does not fit existing high-performance library calls, usually at a high engineering cost. This is frequently required when researchers invent new operators: such operators suffer a severe performance penalty, which limits the pace of innovation. Furthermore, even if there is an existing runtime call these frameworks can use, it often does not offer optimal performance for a user's particular network architecture and dataset, missing optimizations between operators as well as optimizations that can be done knowing the size and shape of the data. Our contributions include (1) a language close to the mathematics of deep learning called Tensor Comprehensions, (2) a polyhedral Just-In-Time compiler that converts a mathematical description of a deep learning DAG into a CUDA kernel with delegated memory management and synchronization, and that also provides optimizations such as operator fusion and specialization for specific sizes, and (3) a compilation cache populated by an autotuner. [Abstract cutoff]
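The idea of a language "close to the mathematics of deep learning" can be illustrated with matrix multiplication, which in Tensor Comprehensions notation is written roughly as `C(m, n) +=! A(m, k) * B(k, n)` (syntax recalled from the TC documentation; treat it as illustrative). The Python sketch below is not the paper's compiler or API. It only spells out the reference semantics assumed here: indices on the left-hand side (m, n) range over the output, an index that appears only on the right-hand side (k) is summed over, `+=!` initializes the output to zero before accumulating, and loop bounds are taken from the input shapes. The function name `matmul_comprehension` is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul_comprehension(A: Matrix, B: Matrix) -> Matrix:
    """Naive reference semantics assumed for C(m, n) +=! A(m, k) * B(k, n).

    Hypothetical helper for illustration only, not part of any TC API.
    """
    # Loop bounds are inferred from the input shapes (illustrative assumption).
    M, K = len(A), len(A[0])
    K2, N = len(B), len(B[0])
    if K != K2:
        raise ValueError("inner dimensions of A and B must match")

    # "+=!": start every output element at 0.0 before accumulating.
    C = [[0.0] * N for _ in range(M)]

    # m and n index the output; k appears only on the right, so it is reduced.
    for m in range(M):
        for n in range(N):
            for k in range(K):
                C[m][n] += A[m][k] * B[k][n]
    return C
```

For example, `matmul_comprehension([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19.0, 22.0], [43.0, 50.0]]`. The triple loop only fixes what is computed; the optimizations named in the abstract (operator fusion, size specialization, CUDA kernel generation) concern how it is computed and are not modeled here.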

Abductive reasoning with temporal information

Nov 23, 2000

Abstract: Texts in natural language contain a lot of temporal information, both explicit and implicit. Verbs and temporal adjuncts carry most of the explicit information, but for a full understanding, general world knowledge and default assumptions have to be taken into account. We will present a theory for describing the relation between, on the one hand, verbs, their tenses and adjuncts and, on the other, the eventualities and periods of time they represent and their relative temporal locations, while allowing interaction with general world knowledge. The theory is formulated in an extension of first-order logic and is a practical implementation of the concepts described in Van Eynde 2001 and Schelkens et al. 2000. We will show how an abductive resolution procedure can be used on this representation to extract temporal information from texts. The theory presented here is an extension of that in Verdoolaege et al. 2000, adapted to Van Eynde 2001, with a simplified and extended analysis of adjuncts and with more emphasis on how a model can be constructed.

Semantic interpretation of temporal information by abductive inference

Nov 22, 2000

Abstract: Besides temporal information explicitly available in verbs and adjuncts, the temporal interpretation of a text also depends on general world knowledge and default assumptions. We will present a theory for describing the relation between, on the one hand, verbs, their tenses and adjuncts and, on the other, the eventualities and periods of time they represent and their relative temporal locations. The theory is formulated in logic and is a practical implementation of the concepts described in Ness Schelkens et al. We will show how an abductive resolution procedure can be used on this representation to extract temporal information from texts.
