Susan Stepney

Reservoir Computing Benchmarks: a review, a taxonomy, some best practices

May 10, 2024

Abstract:Reservoir Computing is an Unconventional Computation model that performs computation on a variety of substrates, such as RNNs or physical materials. The method takes a "black-box" approach, training only the outputs of the system it is built on. As such, evaluating the computational capacity of these systems can be challenging. We review and critique the evaluation methods used in the field of Reservoir Computing. We introduce a categorisation of benchmark tasks. We review multiple examples of benchmarks from the literature as applied to reservoir computing, and note their strengths and shortcomings. We suggest ways in which benchmarks and their uses may be improved to the benefit of the reservoir computing community.

Unsupervised self-organising map of prostate cell Raman spectra shows disease-state subclustering

Mar 12, 2024

Abstract:Prostate cancer is a disease which poses an interesting clinical question: should it be treated? A small subset of prostate cancers are aggressive and require removal and treatment to prevent metastatic spread. However, conventional diagnostics still struggle to risk-stratify such patients; hence, new approaches to biomolecular subclassification of the disease are needed. Here we use an unsupervised, self-organising map approach to analyse live-cell Raman spectroscopy data obtained from prostate cell-lines; our aim is to test the feasibility of this method to differentiate, at the single-cell level, cancer cells from normal cells using high-dimensional datasets with minimal preprocessing. The results demonstrate not only successful separation of normal prostate and cancer cells, but also a new subclustering of the prostate cancer cell-line into two groups. Initial analysis of the spectra from each of the cancer subclusters demonstrates a differential expression of lipids, which, against the normal control, may be linked to disease-related changes in cellular signalling.

Optimising network interactions through device agnostic models

Jan 14, 2024

Abstract:Physically implemented neural networks hold the potential to achieve the performance of deep learning models by exploiting the innate physical properties of devices as computational tools. This exploration of physical processes for computation also requires consideration of their intrinsic dynamics, which can serve as valuable resources to process information. However, existing computational methods are unable to extend the success of deep learning techniques to parameters influencing device dynamics, which often lack a precise mathematical description. In this work, we formulate a universal framework to optimise interactions with dynamic physical systems in a fully data-driven fashion. The framework adopts neural stochastic differential equations as differentiable digital twins, effectively capturing both deterministic and stochastic behaviours of devices. Employing differentiation through the trained models provides the essential mathematical estimates for optimising a physical neural network, harnessing the intrinsic temporal computation abilities of its physical nodes. To accurately model real devices' behaviours, we formulated neural-SDE variants that can operate under a variety of experimental settings. Our work demonstrates the framework's applicability through simulations and physical implementations of interacting dynamic devices, while highlighting the importance of accurately capturing system stochasticity for the successful deployment of a physically defined neural network.

A perspective on physical reservoir computing with nanomagnetic devices

Dec 09, 2022

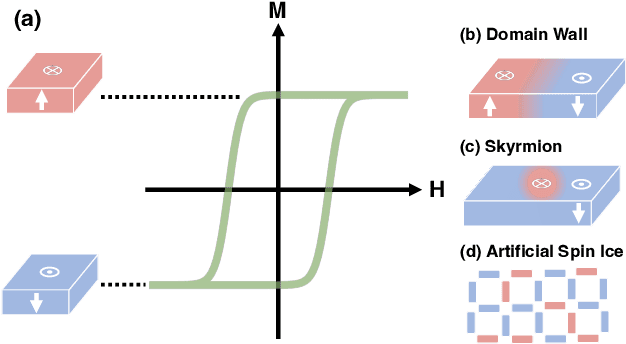

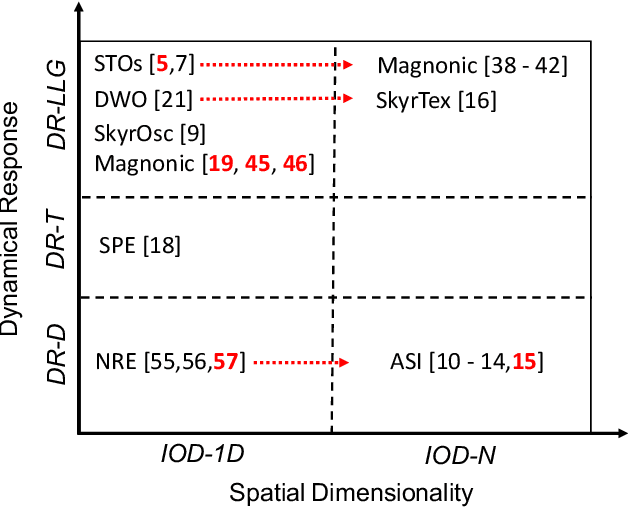

Abstract:Neural networks have revolutionized the area of artificial intelligence and introduced transformative applications to almost every scientific field and industry. However, this success comes at a great price; the energy requirements for training advanced models are unsustainable. One promising way to address this pressing issue is by developing low-energy neuromorphic hardware that directly supports the algorithm's requirements. The intrinsic non-volatility, non-linearity, and memory of spintronic devices make them appealing candidates for neuromorphic devices. Here we focus on the reservoir computing paradigm, a recurrent network with a simple training algorithm suitable for computation with spintronic devices since they can provide the properties of non-linearity and memory. We review technologies and methods for developing neuromorphic spintronic devices and conclude with critical open issues to address before such devices become widely used.

Quantifying the Computational Capability of a Nanomagnetic Reservoir Computing Platform with Emergent Magnetization Dynamics

Nov 29, 2021

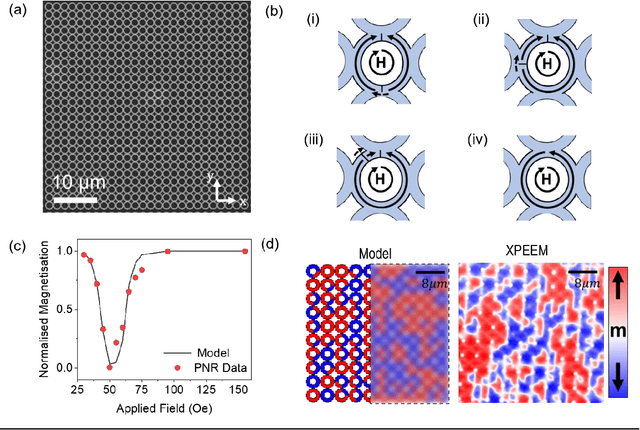

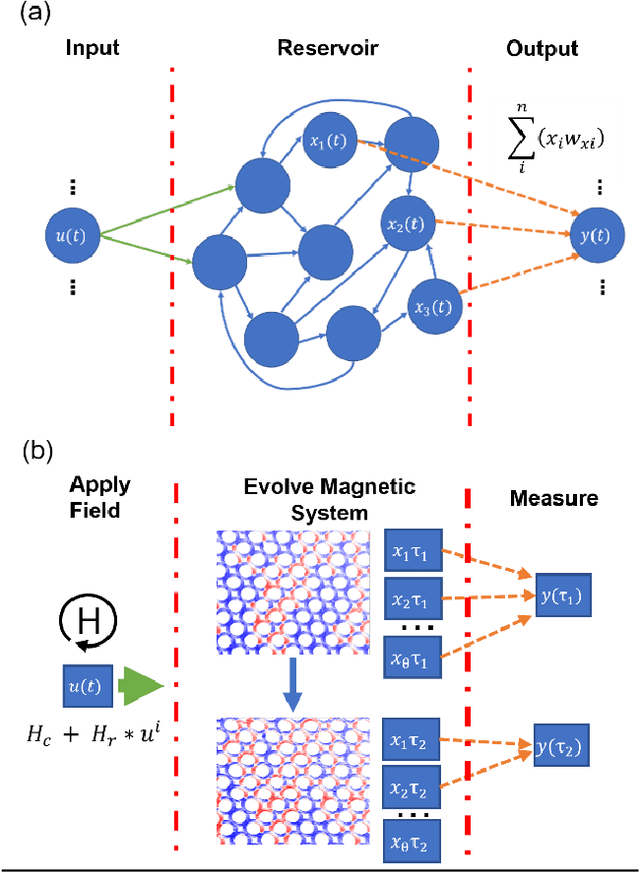

Abstract:Arrays of interconnected magnetic nano-rings with emergent magnetization dynamics have recently been proposed for use in reservoir computing applications, but for them to be computationally useful it must be possible to optimise their dynamical responses. Here, we use a phenomenological model to demonstrate that such reservoirs can be optimised for classification tasks by tuning hyperparameters that control the scaling and input-rate of data into the system using rotating magnetic fields. We use task-independent metrics to assess the rings' computational capabilities at each set of these hyperparameters and show how these metrics correlate directly to performance in spoken and written digit recognition tasks. We then show that these metrics can be further improved by expanding the reservoir's output to include multiple, concurrent measures of the ring arrays' magnetic states.

Reservoir Computing with Thin-film Ferromagnetic Devices

Jan 29, 2021

Abstract:Advances in artificial intelligence are driven by technologies inspired by the brain, but these technologies are orders of magnitude less powerful and energy efficient than biological systems. Inspired by the nonlinear dynamics of neural networks, new unconventional computing hardware has emerged with the potential for extreme parallelism and ultra-low power consumption. Physical reservoir computing demonstrates this with a variety of unconventional systems from optical-based to spintronic. Reservoir computers provide a nonlinear projection of the task input into a high-dimensional feature space by exploiting the system's internal dynamics. A trained readout layer then combines features to perform tasks, such as pattern recognition and time-series analysis. Despite progress, achieving state-of-the-art performance without external signal processing to the reservoir remains challenging. Here we show, through simulation, that magnetic materials in thin-film geometries can realise reservoir computers with accuracy greater than or similar to that of digital recurrent neural networks. Our results reveal that basic spin properties of magnetic films generate the required nonlinear dynamics and memory to solve machine learning tasks. Furthermore, we show that neuromorphic hardware can be reduced in size by removing the need for discrete neural components and external processing. The natural dynamics and nanoscale size of magnetic thin-films present a new path towards fast, energy-efficient computing with the potential to innovate portable smart devices, self-driving vehicles, and robotics.
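
The reservoir-plus-readout scheme described in this abstract can be illustrated with a small software stand-in. The sketch below is not the paper's magnetic thin-film simulation: it assumes a generic random tanh network as the reservoir, a one-step delayed-recall task, and ridge regression for the readout, and every name and parameter value in it (`make_reservoir`, `gain`, `ridge`, the 20-node size) is hypothetical. Only the readout weights are trained; the reservoir weights stay fixed.

```python
import math
import random


def make_reservoir(n_nodes, rng, gain=0.9):
    """Fixed random weights; rows rescaled so the max absolute row sum equals `gain`."""
    w = [[rng.uniform(-1, 1) for _ in range(n_nodes)] for _ in range(n_nodes)]
    norm = max(sum(abs(v) for v in row) for row in w)
    w = [[gain * v / norm for v in row] for row in w]
    w_in = [rng.uniform(-1, 1) for _ in range(n_nodes)]
    return w, w_in


def run_reservoir(w, w_in, inputs):
    """Drive the untrained reservoir with a scalar sequence; return one state per step."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(sum(w[i][j] * x[j] for j in range(n)) + w_in[i] * u)
             for i in range(n)]
        states.append(x + [1.0])  # constant feature acts as a bias term
    return states


def solve(a, b):
    """Solve a linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    out = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * out[j] for j in range(i + 1, n))
        out[i] = (m[i][n] - tail) / m[i][i]
    return out


def train_readout(states, targets, ridge=1e-4):
    """Ridge regression: solve (X^T X + ridge * I) w = X^T y for the readout weights."""
    d = len(states[0])
    xtx = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(d)]
    return solve(xtx, xty)


def predict(states, w_out):
    return [sum(a * b for a, b in zip(s, w_out)) for s in states]


def mse(pred, target):
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


rng = random.Random(0)
inputs = [rng.uniform(-1, 1) for _ in range(400)]
w, w_in = make_reservoir(20, rng)
states = run_reservoir(w, w_in, inputs)

washout = 50                      # discard the initial transient
X = states[washout:]
y = inputs[washout - 1:-1]        # target at step t is the previous input u(t-1)

w_out = train_readout(X[:250], y[:250])          # only the readout is trained
test_error = mse(predict(X[250:], w_out), y[250:])

mean_y = sum(y[250:]) / len(y[250:])
baseline = mse([mean_y] * len(y[250:]), y[250:])  # error of always predicting the mean
print("readout test MSE:", test_error, "baseline MSE:", baseline)
```

Rescaling the recurrent weights by their maximum absolute row sum is a simple sufficient condition for fading memory in a tanh network; it is chosen here only because it needs no eigenvalue computation, and a physical reservoir would replace `run_reservoir` with measurements of the device's response.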

Semantic Neutral Drift

Oct 24, 2018

Abstract:We introduce the concept of Semantic Neutral Drift (SND) for evolutionary algorithms, where we exploit equivalence laws to design semantics-preserving mutations that are guaranteed to preserve individuals' fitness scores. A number of digital circuit benchmark problems have been implemented with rule-based graph programs and empirically evaluated, demonstrating quantitative improvements in evolutionary performance. Analysis reveals that the benefits of the designed SND reside in more complex processes than simple growth of individuals, and that there are circumstances where it is beneficial to choose otherwise detrimental parameters for an evolutionary algorithm if that facilitates the inclusion of SND.
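
The idea of a mutation built from equivalence laws can be illustrated on a toy representation. The sketch below is a simplification and not the paper's rule-based graph programs: it assumes Boolean expressions stored as nested tuples, uses De Morgan's laws and double negation as the equivalence laws, and all names (`rewrite`, `neutral_mutation`, `truth_table`) are hypothetical. Because each rewrite preserves the expression's truth table, any fitness score computed from that truth table is unchanged.

```python
import itertools
import random

# An expression is a variable name (str) or a tuple:
# ("not", e), ("and", a, b), ("or", a, b).


def evaluate(expr, env):
    """Evaluate an expression under an assignment of variables to booleans."""
    if isinstance(expr, str):
        return env[expr]
    op = expr[0]
    if op == "not":
        return not evaluate(expr[1], env)
    if op == "and":
        return evaluate(expr[1], env) and evaluate(expr[2], env)
    if op == "or":
        return evaluate(expr[1], env) or evaluate(expr[2], env)
    raise ValueError(f"unknown operator: {op}")


def truth_table(expr, variables):
    """Outputs of the expression for every input combination."""
    return [evaluate(expr, dict(zip(variables, values)))
            for values in itertools.product([False, True], repeat=len(variables))]


def rewrite(expr):
    """Apply one equivalence law at the root of the expression."""
    if isinstance(expr, tuple):
        if expr[0] == "not" and isinstance(expr[1], tuple) and expr[1][0] == "not":
            return expr[1][1]                                    # not not a  ==  a
        if expr[0] == "and":                                     # De Morgan
            return ("not", ("or", ("not", expr[1]), ("not", expr[2])))
        if expr[0] == "or":                                      # De Morgan
            return ("not", ("and", ("not", expr[1]), ("not", expr[2])))
    return ("not", ("not", expr))                                # a  ==  not not a


def neutral_mutation(expr, rng):
    """Rewrite one randomly chosen subexpression; the semantics are unchanged."""
    if isinstance(expr, str) or rng.random() < 0.3:
        return rewrite(expr)
    i = rng.randrange(1, len(expr))
    return expr[:i] + (neutral_mutation(expr[i], rng),) + expr[i + 1:]


VARS = ["a", "b"]
# XOR written with and/or/not.
original = ("or", ("and", "a", ("not", "b")), ("and", ("not", "a"), "b"))

rng = random.Random(1)
mutated = original
for _ in range(25):
    mutated = neutral_mutation(mutated, rng)

print("same truth table:", truth_table(mutated, VARS) == truth_table(original, VARS))
```

In an evolutionary loop, such a neutral step would be interleaved with ordinary mutations, so that the structure an ordinary mutation acts on can change without affecting fitness.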
