Chester Wringe

Reservoir Computing Benchmarks: a review, a taxonomy, some best practices

May 10, 2024

Abstract: Reservoir Computing is an Unconventional Computation model for performing computation on a variety of substrates, such as RNNs or physical materials. The method takes a "black-box" approach, training only the outputs of the system it is built on. As such, evaluating the computational capacity of these systems can be challenging. We review and critique the evaluation methods used in the field of Reservoir Computing. We introduce a categorisation of benchmark tasks. We review multiple examples of benchmarks from the literature as applied to reservoir computing, and note their strengths and shortcomings. We suggest ways in which benchmarks and their uses may be improved to the benefit of the reservoir computing community.

A perspective on physical reservoir computing with nanomagnetic devices

Dec 09, 2022

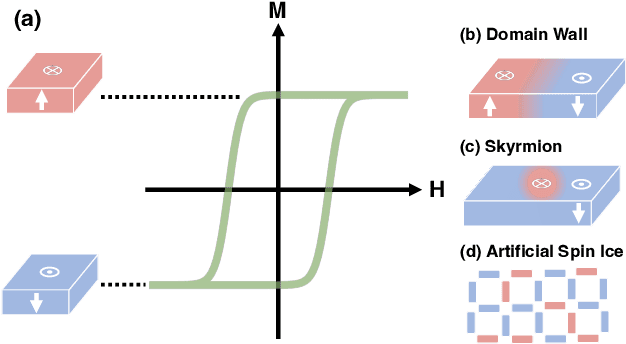

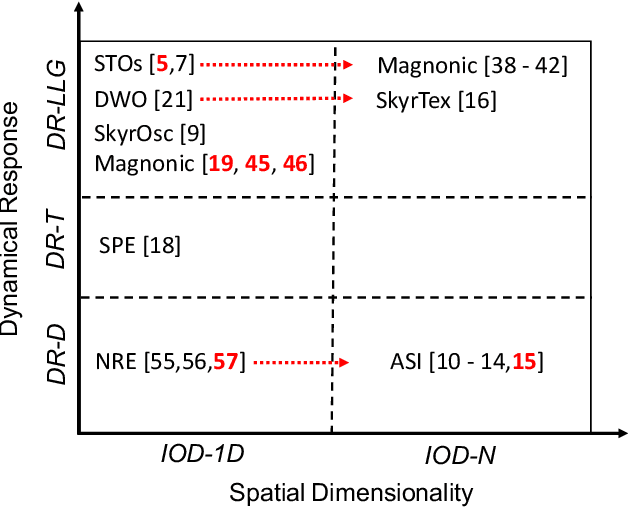

Abstract: Neural networks have revolutionized the area of artificial intelligence and introduced transformative applications to almost every scientific field and industry. However, this success comes at a great price; the energy requirements for training advanced models are unsustainable. One promising way to address this pressing issue is by developing low-energy neuromorphic hardware that directly supports the algorithm's requirements. The intrinsic non-volatility, non-linearity, and memory of spintronic devices make them appealing candidates for neuromorphic devices. Here we focus on the reservoir computing paradigm, a recurrent network with a simple training algorithm suitable for computation with spintronic devices since they can provide the properties of non-linearity and memory. We review technologies and methods for developing neuromorphic spintronic devices and conclude with critical open issues to address before such devices become widely used.
