Thomas J Hayward

A perspective on physical reservoir computing with nanomagnetic devices

Dec 09, 2022

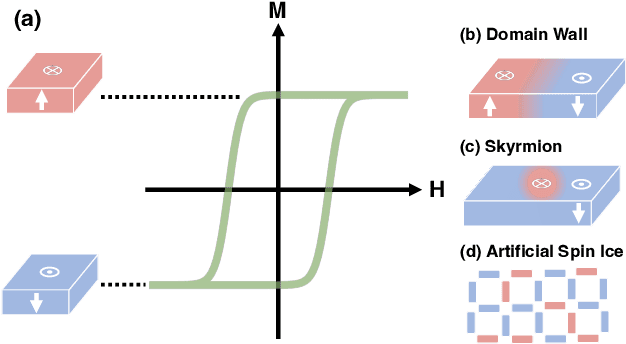

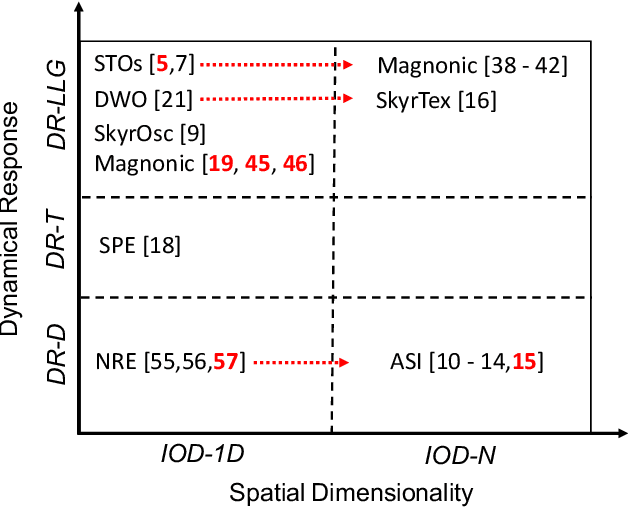

Abstract: Neural networks have revolutionized the area of artificial intelligence and introduced transformative applications to almost every scientific field and industry. However, this success comes at a great price; the energy requirements for training advanced models are unsustainable. One promising way to address this pressing issue is by developing low-energy neuromorphic hardware that directly supports the algorithm's requirements. The intrinsic non-volatility, non-linearity, and memory of spintronic devices make them appealing candidates for neuromorphic devices. Here we focus on the reservoir computing paradigm, a recurrent network with a simple training algorithm suitable for computation with spintronic devices since they can provide the properties of non-linearity and memory. We review technologies and methods for developing neuromorphic spintronic devices and conclude with critical open issues to address before such devices become widely used.

Quantifying the Computational Capability of a Nanomagnetic Reservoir Computing Platform with Emergent Magnetization Dynamics

Nov 29, 2021

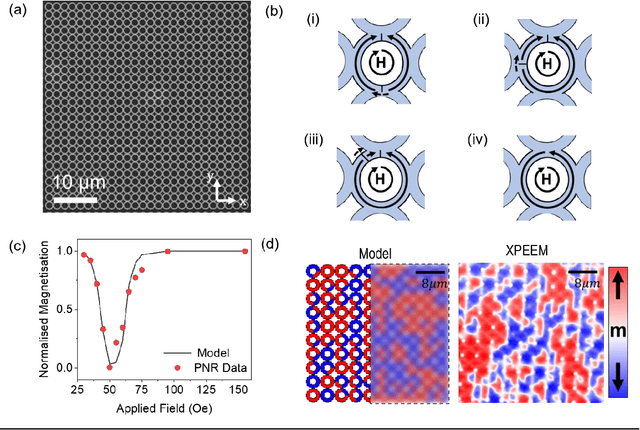

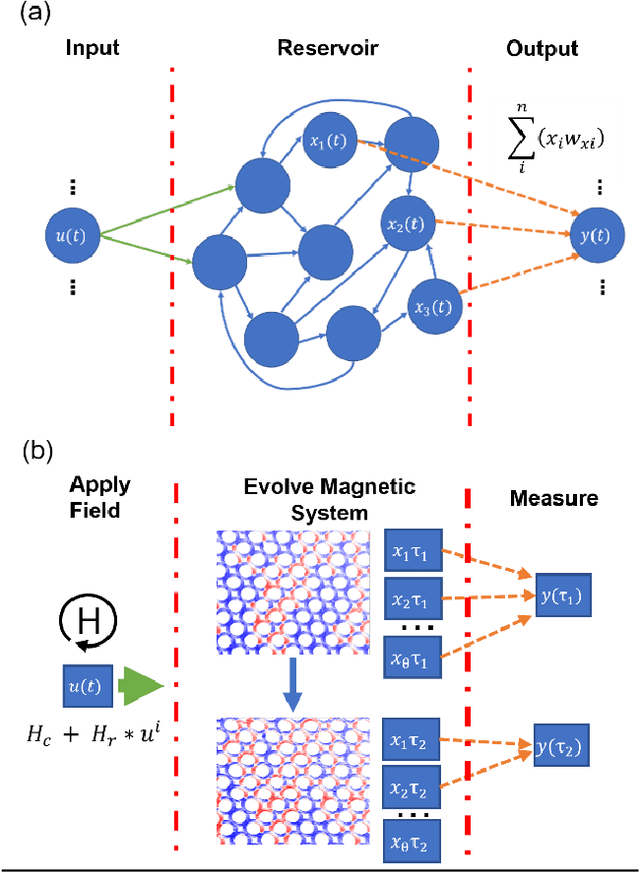

Abstract: Arrays of interconnected magnetic nano-rings with emergent magnetization dynamics have recently been proposed for use in reservoir computing applications, but for them to be computationally useful it must be possible to optimise their dynamical responses. Here, we use a phenomenological model to demonstrate that such reservoirs can be optimised for classification tasks by tuning hyperparameters that control the scaling and input-rate of data into the system using rotating magnetic fields. We use task-independent metrics to assess the rings' computational capabilities at each set of these hyperparameters and show how these metrics correlate directly to performance in spoken and written digit recognition tasks. We then show that these metrics can be further improved by expanding the reservoir's output to include multiple, concurrent measures of the ring arrays' magnetic states.
