SungHyun Park

hSDB-instrument: Instrument Localization Database for Laparoscopic and Robotic Surgeries

Oct 26, 2021

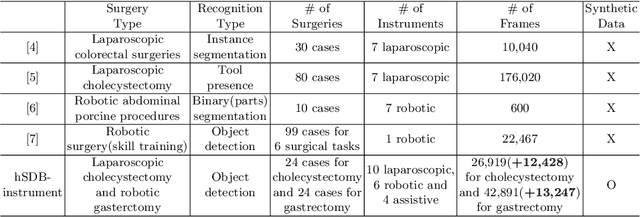

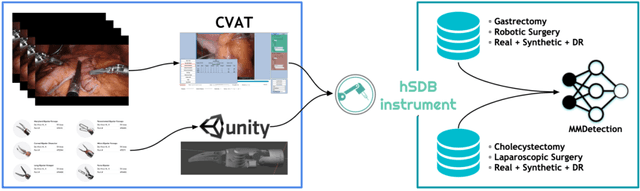

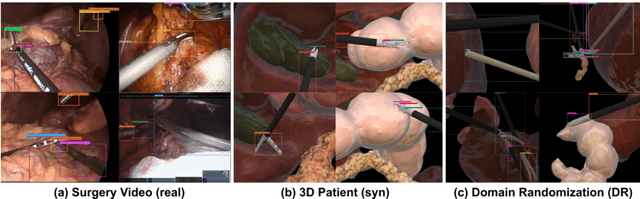

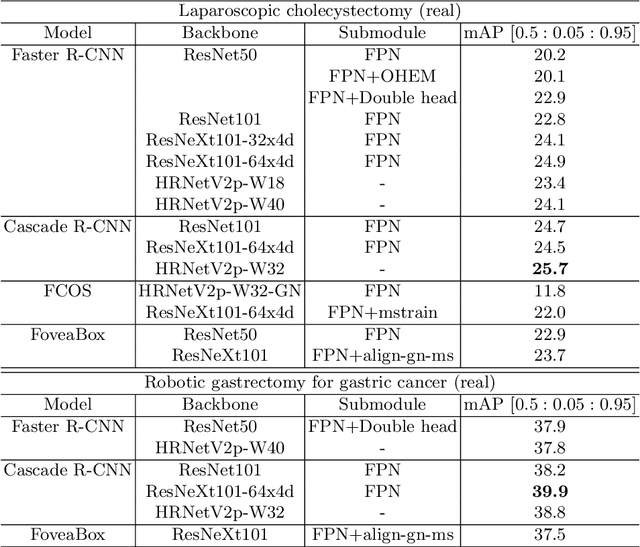

Abstract: Automated surgical instrument localization is an important technology for understanding the surgical process and analyzing it in order to provide the surgeon with meaningful guidance during surgery or a surgical index after surgery. We introduce a new dataset that reflects the kinematic characteristics of surgical instruments for automated instrument localization in surgical videos. The hSDB-instrument dataset (hSDB: hutom Surgery DataBase) consists of instrument localization information from 24 cases of laparoscopic cholecystectomy and 24 cases of robotic gastrectomy. Localization information for all instruments is provided in the form of bounding boxes for object detection. To handle the class imbalance problem between instruments, synthesized instruments, modeled in Unity as 3D models, are included as training data. In addition, polygon annotations are provided for the 3D instrument data to enable instance segmentation of the tools. To reflect the kinematic characteristics of the instruments, laparoscopic instruments are annotated separately by head and body parts, and robotic instruments by head, wrist, and body parts. Annotation data for assistive tools (specimen bag, needle, etc.) that are frequently used in surgery are also included. Moreover, we provide statistical information on the hSDB-instrument dataset, the baseline localization performance of object detection networks trained with the MMDetection library, and the resulting analyses.

* https://hsdb-instrument.github.io

Rethinking Generalization Performance of Surgical Phase Recognition with Expert-Generated Annotations

Oct 22, 2021

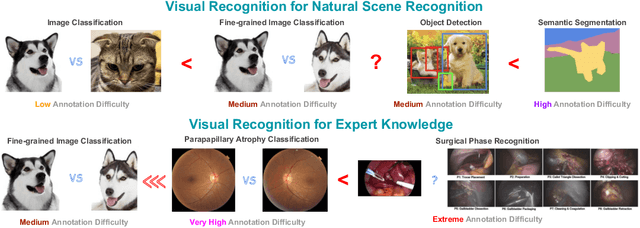

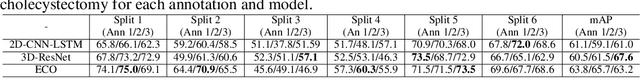

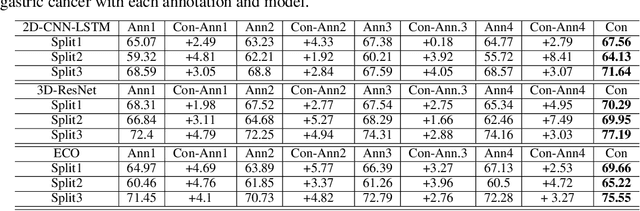

Abstract: As the application of deep neural networks expands to areas requiring expertise, e.g., medicine and law, more meticulous annotation processes for expert knowledge training are required. In particular, it is difficult to guarantee generalization performance in the clinical field when training on expert knowledge, where opinions on annotations may differ even among experts. To raise the issue of the annotation generation process for expertise training of CNNs, we verified the annotations for surgical phase recognition of laparoscopic cholecystectomy and subtotal gastrectomy for gastric cancer. We produce calibrated annotations for the seven phases of cholecystectomy by analyzing the discrepancies in previously annotated labels and by discussing the criteria for surgical phases. Because gastrectomy for gastric cancer has a more complex set of twenty-one surgical phases, we generate consensus annotations through a revision process with five specialists. By training CNN-based surgical phase recognition networks with the revised annotations, we achieved improved generalization performance over models trained with the original annotations under the same cross-validation settings. We showed that the expert data annotation pipeline for deep neural networks should be made more rigorous, according to the type of problem, when applied to the clinical field.
