Sumedha Singla

Deep Learning for Medical Imaging From Diagnosis Prediction to its Counterfactual Explanation

Sep 07, 2022

Abstract: Deep neural networks (DNNs) have achieved unprecedented performance in computer-vision tasks across business, technology, and science. While substantial efforts are made to engineer highly accurate architectures and provide usable model explanations, most state-of-the-art approaches are first designed for natural vision and then translated to the medical domain. This dissertation seeks to address this gap by proposing novel architectures that integrate the domain-specific constraints of medical imaging into the DNN model and explanation design.

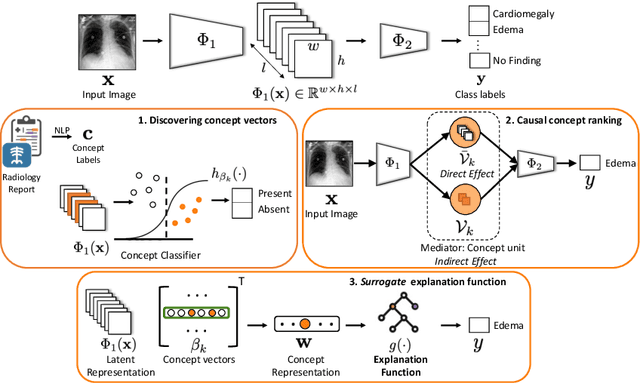

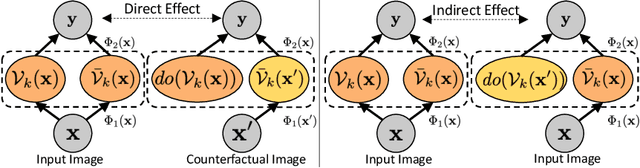

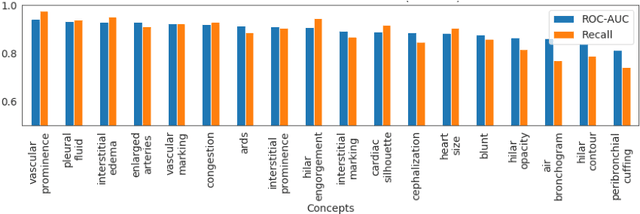

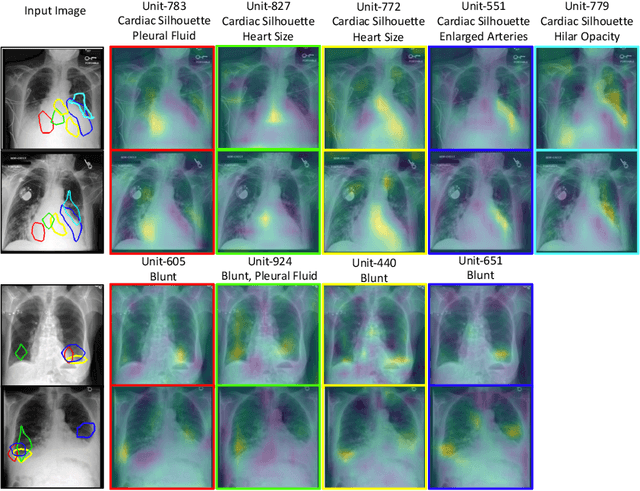

Using Causal Analysis for Conceptual Deep Learning Explanation

Jul 10, 2021

Abstract: Model explainability is essential for the creation of trustworthy machine learning models in healthcare. An ideal explanation resembles the decision-making process of a domain expert and is expressed using concepts or terminology that are meaningful to clinicians. To provide such an explanation, we first associate the hidden units of the classifier with clinically relevant concepts. We take advantage of radiology reports accompanying the chest X-ray images to define concepts. We discover sparse associations between concepts and hidden units using a linear sparse logistic regression. To ensure that the identified units truly influence the classifier's outcome, we adopt tools from the causal inference literature and, more specifically, mediation analysis through counterfactual interventions. Finally, we construct a low-depth decision tree to translate all the discovered concepts into a straightforward decision rule, presented to the radiologist. We evaluated our approach on a large chest X-ray dataset, where our model produces a global explanation consistent with clinical knowledge.
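The sparse association step described in this abstract can be illustrated with a minimal sketch. It assumes synthetic data and a hand-written L1-regularised logistic regression fitted by proximal gradient descent; the names `H`, `y`, `sparse_logistic_regression`, and all hyper-parameter values are hypothetical and are not taken from the paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sparse_logistic_regression(H, y, l1=0.05, lr=0.1, n_iter=2000):
    """L1-regularised logistic regression via proximal gradient descent.

    H : (n_samples, n_units) hidden-unit activations (hypothetical input)
    y : (n_samples,) binary labels, 1 if the concept appears in the report
    """
    n, d = H.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(n_iter):
        p = sigmoid(H @ w + b)
        # Gradient step on the average logistic loss.
        w = w - lr * (H.T @ (p - y) / n)
        b = b - lr * np.mean(p - y)
        # Proximal step: soft-thresholding drives small weights to exactly zero.
        w = np.sign(w) * np.maximum(np.abs(w) - lr * l1, 0.0)
    return w, b

# Synthetic example (assumption): only units 2 and 7 carry the concept.
rng = np.random.default_rng(0)
n_samples, n_units = 500, 20
H = rng.normal(size=(n_samples, n_units))
true_units = [2, 7]
concept_logits = 3.0 * H[:, 2] - 3.0 * H[:, 7]
y = (rng.random(n_samples) < sigmoid(concept_logits)).astype(float)

w, b = sparse_logistic_regression(H, y)
selected_units = np.flatnonzero(np.abs(w) > 1e-8)
print("units associated with the concept:", selected_units)
```

In this toy setting the non-zero weights pick out the hidden units associated with the concept. The mediation analysis and decision-tree steps mentioned in the abstract are not covered by this sketch.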

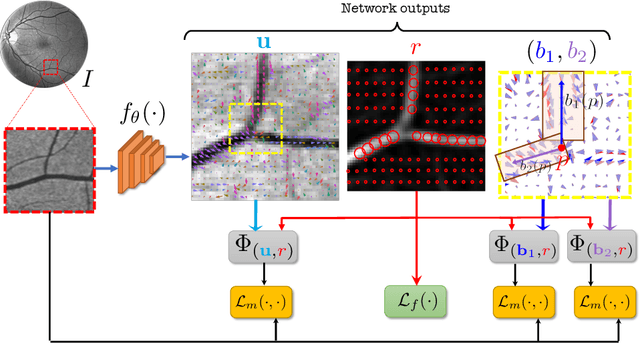

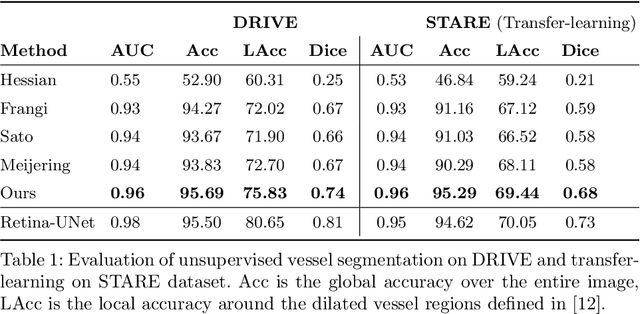

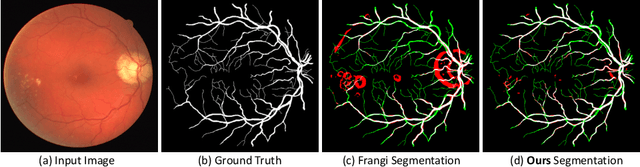

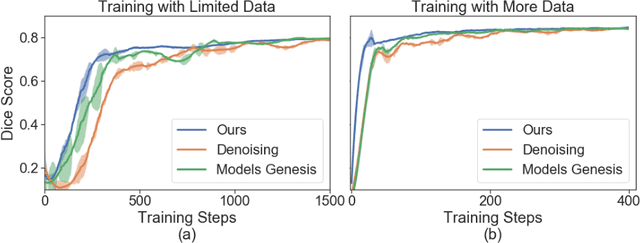

Self-Supervised Vessel Enhancement Using Flow-Based Consistencies

Jan 13, 2021

Abstract: Vessel segmentation is an essential task in many clinical applications. Although supervised methods have achieved state-of-the-art performance, acquiring expert annotation is laborious and mostly limited to two-dimensional datasets with a small sample size. In contrast, unsupervised methods rely on handcrafted features to detect tube-like structures such as vessels. However, those methods require complex pipelines involving several hyper-parameters and design choices, rendering the procedure sensitive, dataset-specific, and not generalizable. Also, unsupervised methods usually underperform supervised methods. We propose a self-supervised method with a limited number of hyper-parameters that is generalizable. Our method uses tube-like structure properties, such as connectivity, profile consistency, and bifurcation, to introduce inductive bias into a learning algorithm. To model those properties, we generate a vector field that we refer to as a flow. Our experiments on various datasets in 2D and 3D show that our method reduces the gap between supervised and unsupervised methods. Unlike generic self-supervised methods, the learned features are transferable to supervised approaches, which is essential when the amount of annotated data is limited.

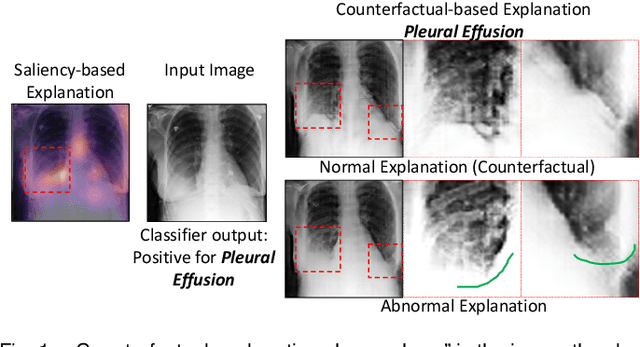

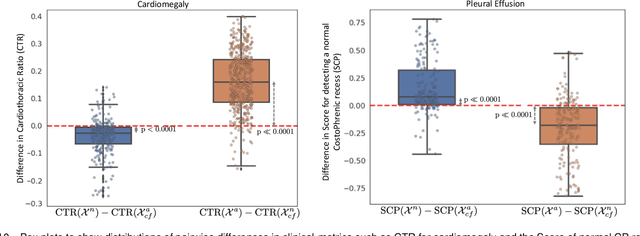

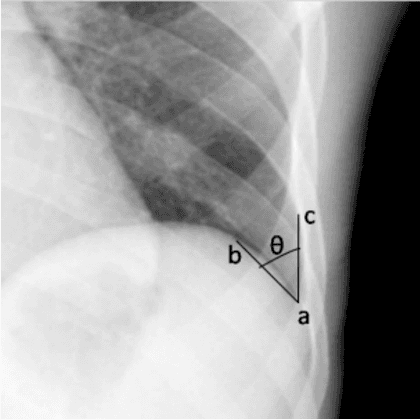

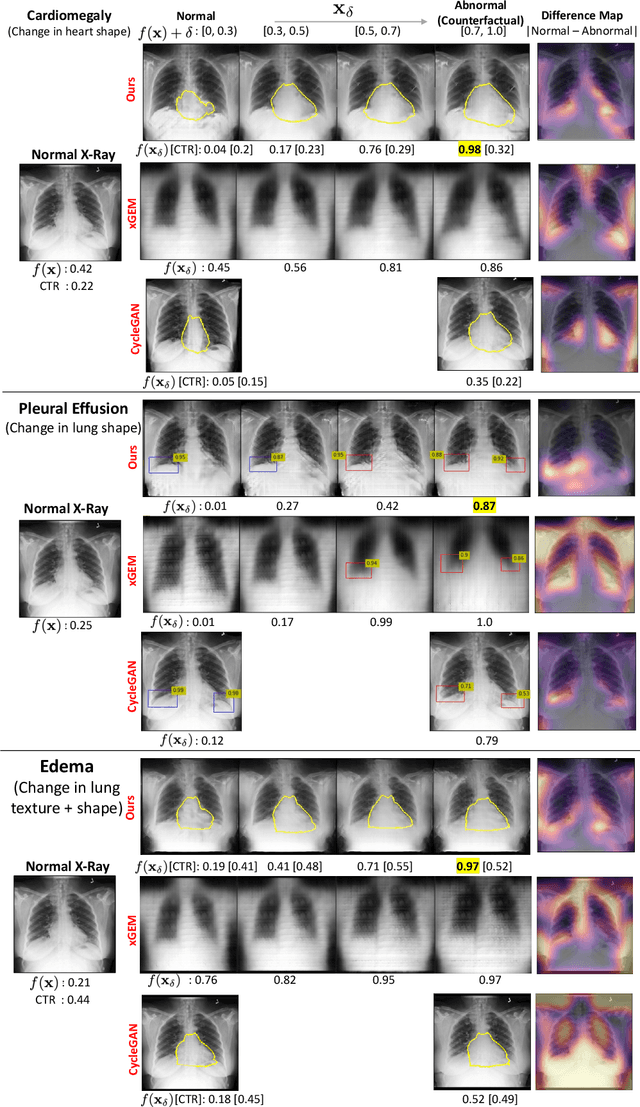

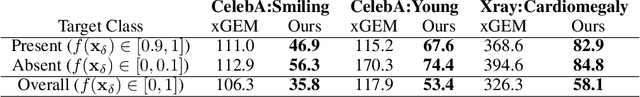

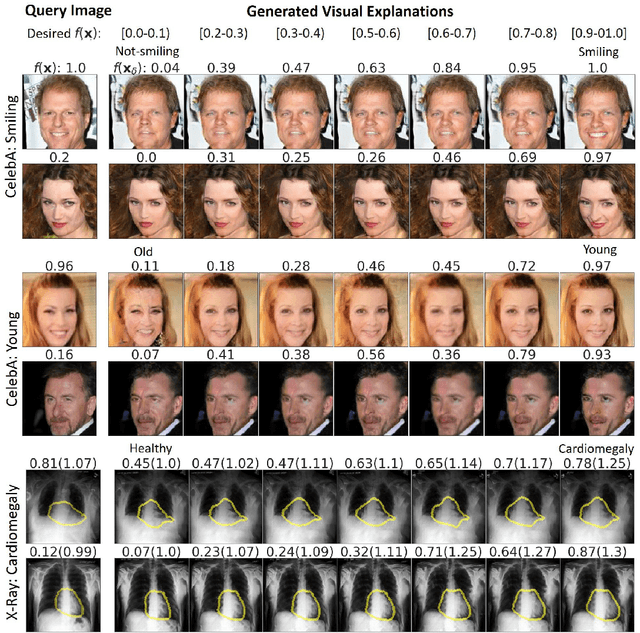

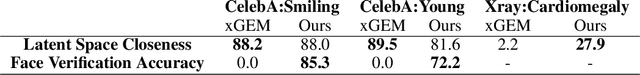

Explaining the Black-box Smoothly- A Counterfactual Approach

Jan 11, 2021

Abstract: We propose a BlackBox Counterfactual Explainer that is explicitly developed for medical imaging applications. Classical approaches (e.g. saliency maps) that assess feature importance do not explain how and why variations in a particular anatomical region are relevant to the outcome, which is crucial for transparent decision making in healthcare applications. Our framework explains the outcome by gradually exaggerating the semantic effect of the given outcome label. Given a query input to a classifier, Generative Adversarial Networks produce a progressive set of perturbations to the query image that gradually changes the posterior probability from its original class to its negation. We design the loss function to ensure that essential and potentially relevant details, such as support devices, are preserved in the counterfactually generated images. We provide an extensive evaluation of different classification tasks on chest X-ray images. Our experiments show that a counterfactually generated visual explanation is consistent with the disease's clinically relevant measurements, both quantitatively and qualitatively.

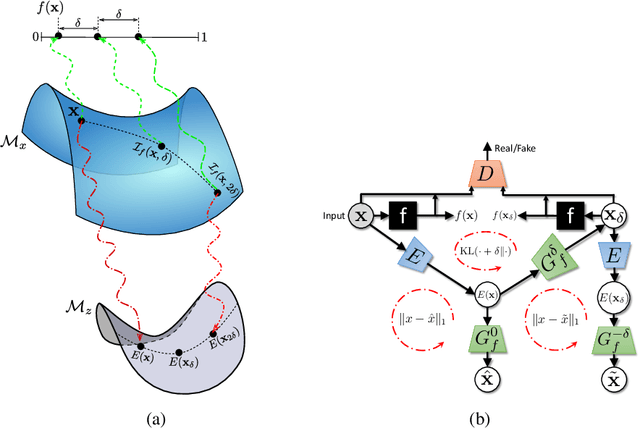

Explanation by Progressive Exaggeration

Nov 05, 2019

Abstract: As machine learning methods see greater adoption and implementation in high-stakes applications such as medical image diagnosis, the need for model interpretability and explanation has become more critical. Classical approaches that assess feature importance (e.g. saliency maps) do not explain how and why a particular region of an image is relevant to the prediction. We propose a method that explains the outcome of a classification black-box by gradually exaggerating the semantic effect of a given class. Given a query input to a classifier, our method produces a progressive set of plausible variations of that query, which gradually changes the posterior probability from its original class to its negation. These counterfactually generated samples preserve features unrelated to the classification decision, such that a user can employ our method as a "tuning knob" to traverse a data manifold while crossing the decision boundary. Our method is model agnostic and only requires the output value and gradient of the predictor with respect to its input.
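The idea of a progressive set of variations that moves the posterior from one class to its negation can be illustrated on a toy problem. The sketch below is not the method of the paper, which generates plausible images; it assumes a hypothetical linear logistic classifier, for which the smallest L2 change that reaches a target posterior lies along the weight vector and has a closed form. All names and values (`w`, `b`, `x`, `targets`) are made up for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logit(p):
    return np.log(p / (1.0 - p))

def progressive_counterfactuals(x, w, b, targets):
    """Return one perturbed copy of x per target posterior.

    For f(x) = sigmoid(w @ x + b), moving along w by
    (logit(p) - (w @ x + b)) / (w @ w) yields posterior p exactly.
    """
    z = w @ x + b
    samples = []
    for p in targets:
        step = (logit(p) - z) / (w @ w)
        samples.append(x + step * w)
    return np.stack(samples)

# Hypothetical classifier and query; feature 2 has zero weight,
# so it is unrelated to the decision.
w = np.array([1.5, -2.0, 0.0, 0.5])
b = -0.25
x = np.array([-1.0, 0.5, 3.0, 0.2])

targets = np.linspace(0.1, 0.9, 5)  # the "tuning knob" settings
samples = progressive_counterfactuals(x, w, b, targets)
posteriors = sigmoid(samples @ w + b)
print(np.round(posteriors, 3))
```

Each row of `samples` is a variation of the query whose posterior matches one knob setting, and the feature with zero weight is left unchanged, loosely mirroring the preservation of features unrelated to the decision. A real image explainer would additionally need to keep the variations on the data manifold, which this linear toy does not attempt.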

Subject2Vec: Generative-Discriminative Approach from a Set of Image Patches to a Vector

Jun 28, 2018

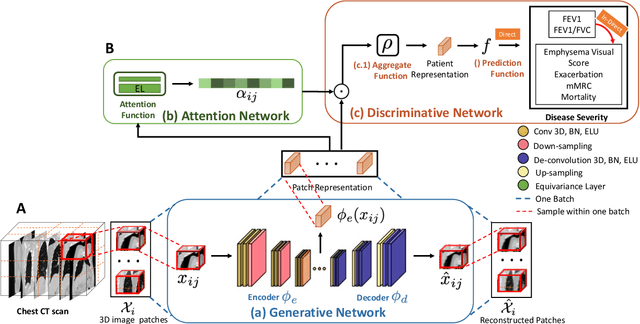

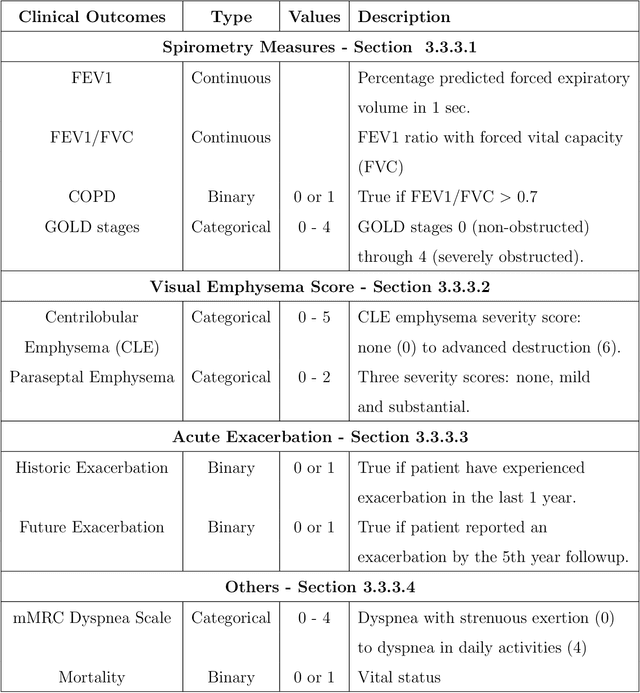

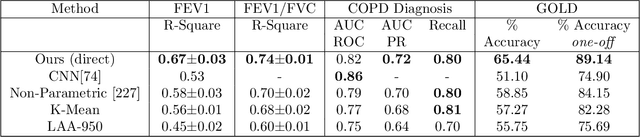

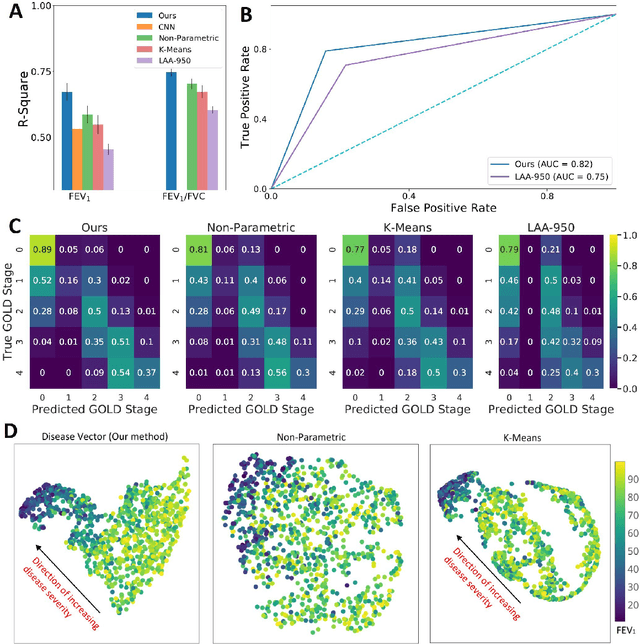

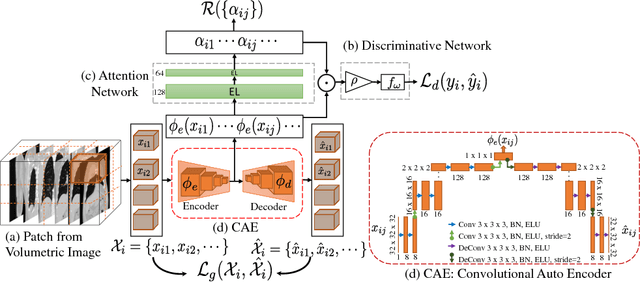

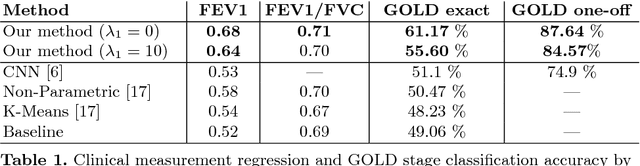

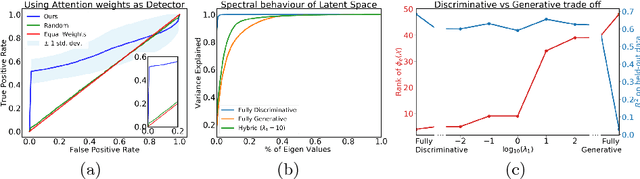

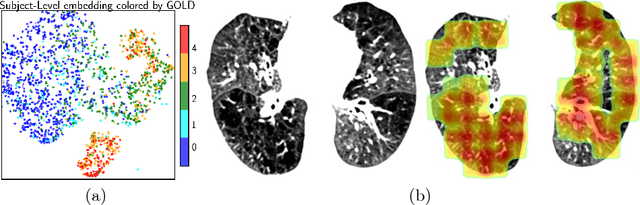

Abstract: We propose an attention-based method that aggregates local image features into a subject-level representation for predicting disease severity. In contrast to classical deep learning that requires a fixed-dimensional input, our method operates on a set of image patches; hence it can accommodate variable-length input images without image resizing. The model learns a clinically interpretable subject-level representation that is reflective of the disease severity. Our model consists of three mutually dependent modules which regulate each other: (1) a discriminative network that learns a fixed-length representation from local features and maps them to disease severity; (2) an attention mechanism that provides interpretability by focusing on the areas of the anatomy that contribute the most to the prediction task; and (3) a generative network that encourages the diversity of the local latent features. The generative term ensures that the attention weights are non-degenerate while maintaining the relevance of the local regions to the disease severity. We train our model end-to-end in the context of a large-scale lung CT study of Chronic Obstructive Pulmonary Disease (COPD). Our model gives state-of-the-art performance in predicting clinical measures of severity for COPD. The distribution of the attention provides the regional relevance of lung tissue to the clinical measurements.
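The aggregation of a variable-size set of patch features into a fixed-length subject vector can be sketched with a simple softmax attention pooling. This is a minimal illustration under stated assumptions, not the architecture of the paper: the scoring vector `v`, the feature dimension, and the random patch features are hypothetical, and the discriminative and generative networks are omitted.

```python
import numpy as np

def softmax(scores):
    # Subtract the maximum for numerical stability.
    e = np.exp(scores - scores.max())
    return e / e.sum()

def attention_pool(patch_features, v):
    """Aggregate a set of patch features into one subject-level vector.

    patch_features : (n_patches, d) local features, n_patches may vary
    v              : (d,) hypothetical scoring vector (learned in practice)
    """
    scores = patch_features @ v          # one relevance score per patch
    weights = softmax(scores)            # attention weights, sum to 1
    subject_vector = weights @ patch_features
    return subject_vector, weights

rng = np.random.default_rng(0)
d = 8
v = rng.normal(size=d)

# Two subjects with different numbers of patches.
subject_a = rng.normal(size=(30, d))
subject_b = rng.normal(size=(75, d))

vec_a, att_a = attention_pool(subject_a, v)
vec_b, att_b = attention_pool(subject_b, v)
print(vec_a.shape, vec_b.shape)
```

Both subjects map to vectors of the same length regardless of patch count, and the attention weights indicate which patches contribute most. Because the pooling is a weighted sum over a set, the result does not depend on the order of the patches.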
