Suman Raj Bista

Evaluating the Impact of Semantic Segmentation and Pose Estimation on Dense Semantic SLAM

Sep 16, 2021

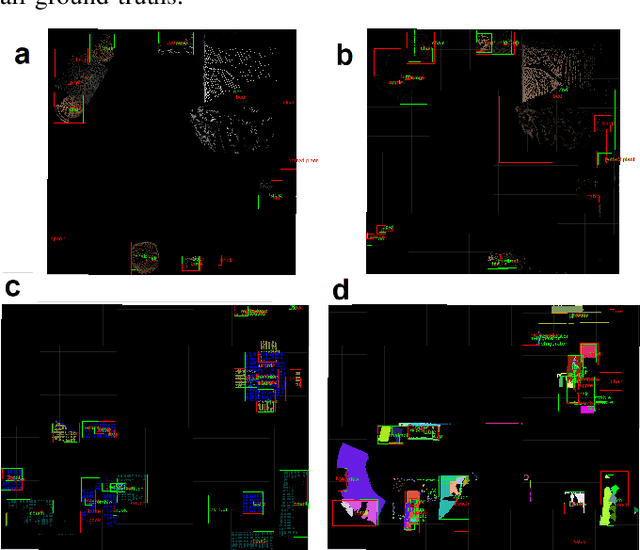

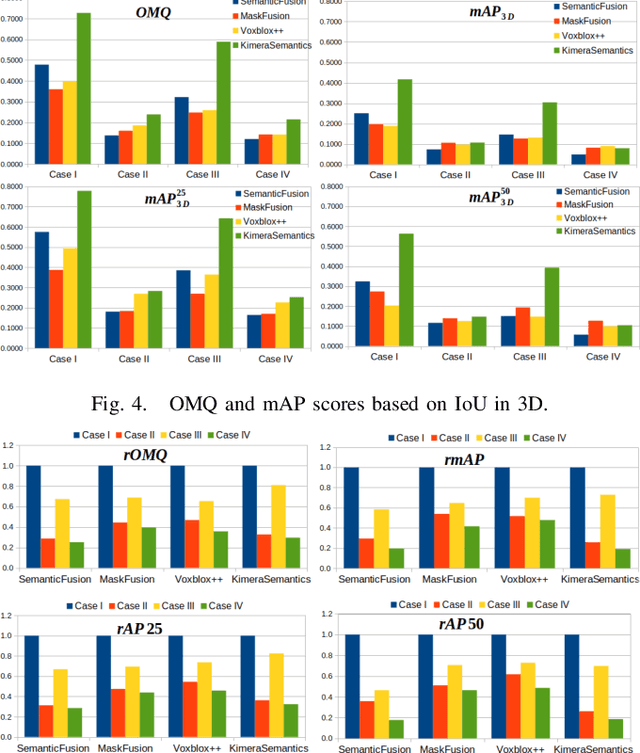

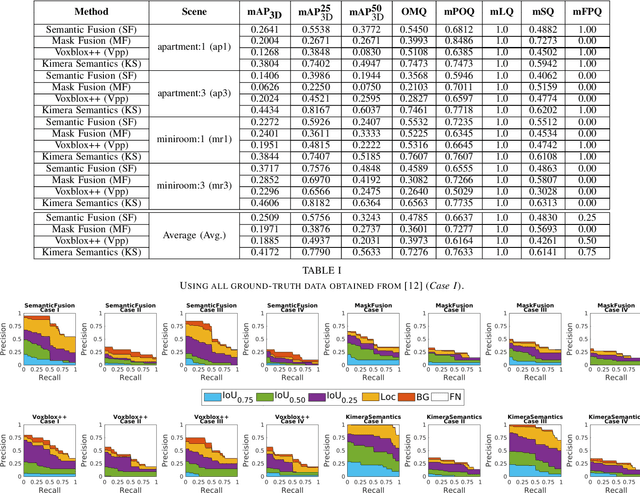

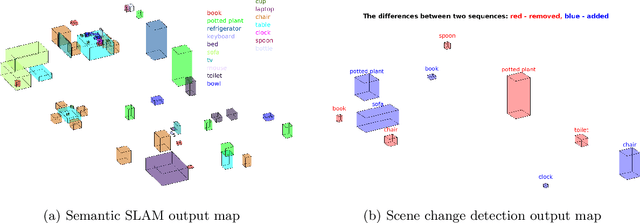

Abstract: Recent Semantic SLAM methods combine classical geometry-based estimation with deep learning-based object detection or semantic segmentation. In this paper, we evaluate the quality of semantic maps generated by state-of-the-art class- and instance-aware dense semantic SLAM algorithms whose code is publicly available, and explore the impact that both semantic segmentation and pose estimation have on map quality. We obtain these results by providing the algorithms with ground-truth pose and/or semantic segmentation data available from simulated environments. Our experiments establish that semantic segmentation is the largest source of error, dropping mAP and OMQ performance by up to 74.3% and 71.3%, respectively.

The Robotic Vision Scene Understanding Challenge

Sep 11, 2020

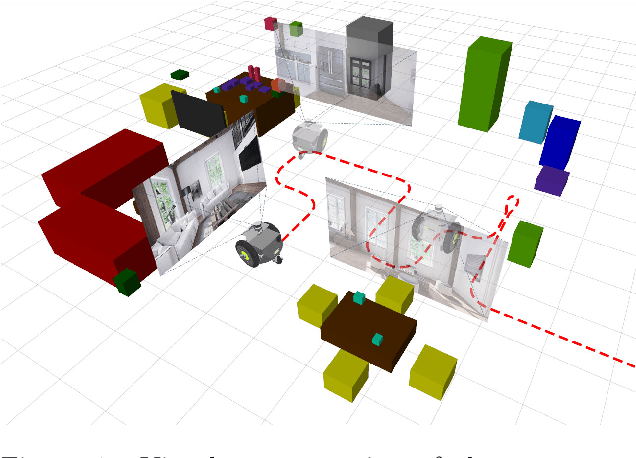

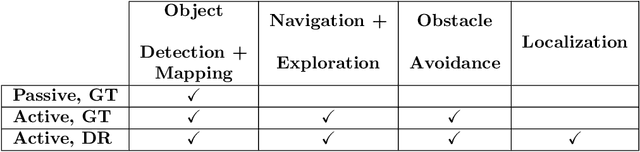

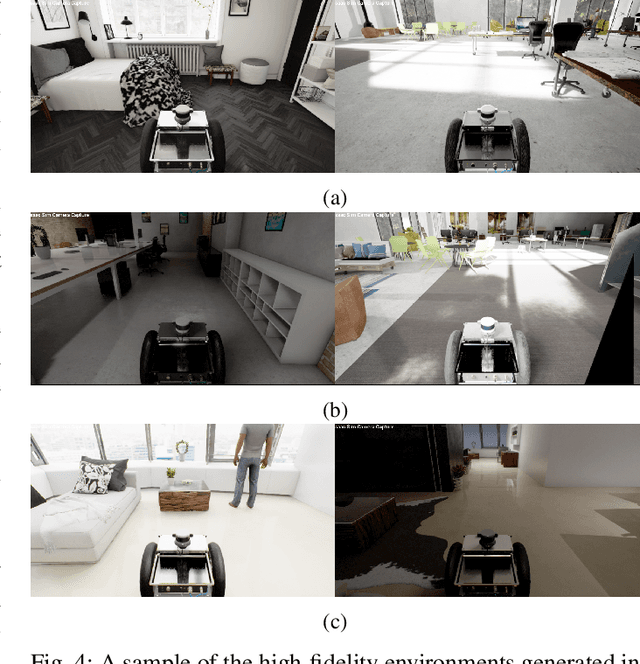

Abstract: Being able to explore an environment and understand the location and type of all objects therein is important for indoor robotic platforms that must interact closely with humans. However, it is difficult to evaluate progress in this area due to a lack of standardized testing, which is limited by the need for active robot agency and perfect object ground-truth. To help provide a standard for testing scene understanding systems, we present a new robot vision scene understanding challenge that uses simulation to enable repeatable experiments with active robot agency. We provide two challenging task types, three difficulty levels, five simulated environments, and a new evaluation measure for 3D cuboid object maps. Our aim is to drive state-of-the-art research in scene understanding by enabling evaluation and comparison of active robotic vision systems.

BenchBot: Evaluating Robotics Research in Photorealistic 3D Simulation and on Real Robots

Aug 03, 2020

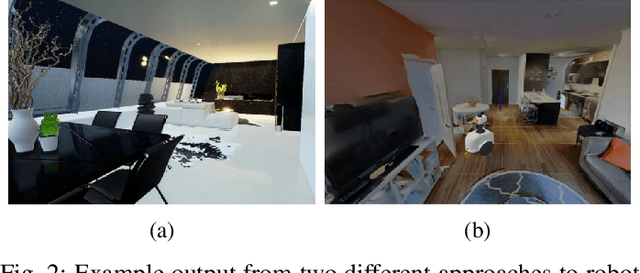

Abstract: We introduce BenchBot, a novel software suite for benchmarking the performance of robotics research across both photorealistic 3D simulations and real robot platforms. BenchBot provides a simple interface to the sensorimotor capabilities of a robot when solving robotics research problems, an interface that is consistent regardless of whether the target platform is simulated or a real robot. In this paper, we outline the BenchBot system architecture and explore the parallels between its user-centric design and an ideal research development process devoid of tangential robot engineering challenges. The paper describes the research benefits of using the BenchBot system, including an enhanced capacity to focus solely on research problems, direct quantitative feedback to inform research development, tools for deriving comprehensive performance characteristics, and submission formats that promote shareability and repeatability of research outcomes. BenchBot is publicly available (http://benchbot.org), and we encourage its use in the research community for comprehensively evaluating the simulated and real-world performance of novel robotic algorithms.