Sulabh Kumra

Learning Multi-step Robotic Manipulation Policies from Visual Observation of Scene and Q-value Predictions of Previous Action

Feb 23, 2022

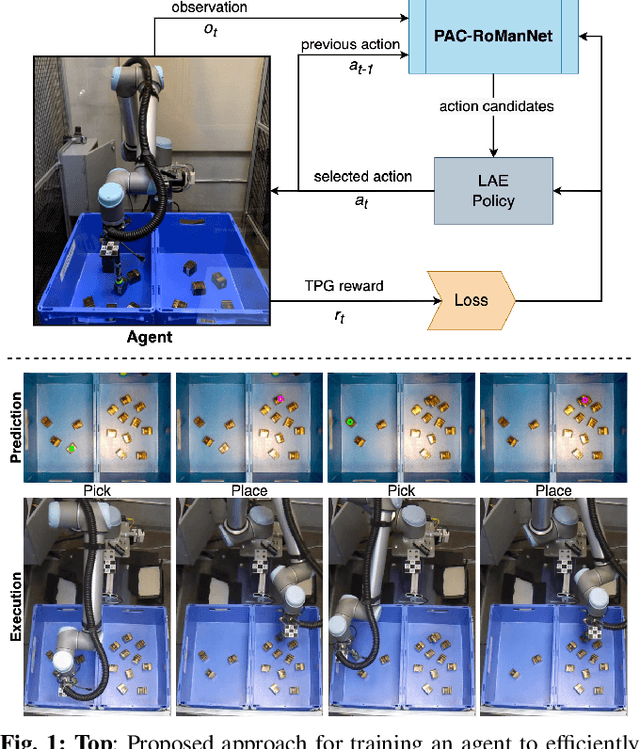

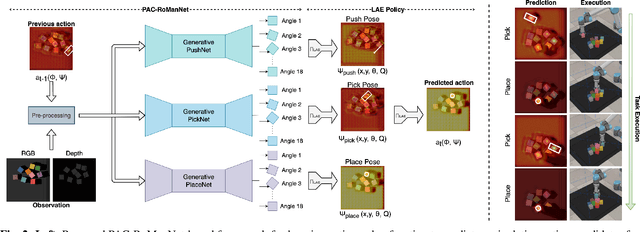

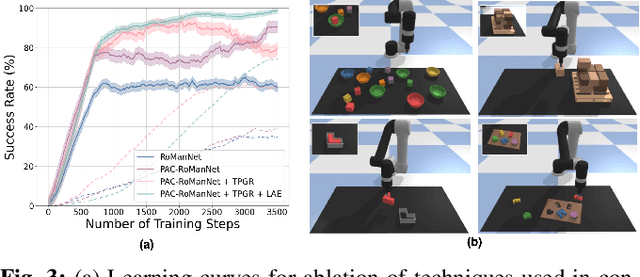

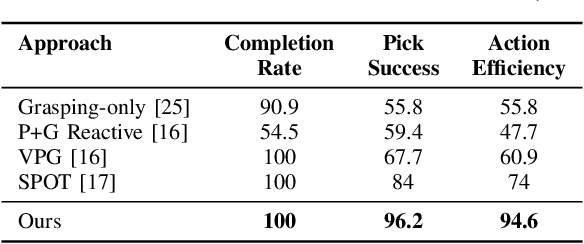

Abstract: In this work, we focus on multi-step manipulation tasks that involve long-horizon planning and consider progress reversal. Such tasks interlace high-level reasoning about the expected states that can be attained to achieve an overall task with low-level reasoning that decides which actions will yield these states. We propose a sample-efficient Previous Action Conditioned Robotic Manipulation Network (PAC-RoManNet) to learn the action-value functions and predict manipulation action candidates from visual observation of the scene and action-value predictions of the previous action. We define a Task Progress based Gaussian (TPG) reward function that computes the reward based on actions that lead to successful motion primitives and progress towards the overall task goal. To balance exploration and exploitation, we introduce a Loss Adjusted Exploration (LAE) policy that determines actions from the action candidates according to the Boltzmann distribution of loss estimates. We demonstrate the effectiveness of our approach by training PAC-RoManNet to learn several challenging multi-step robotic manipulation tasks in both simulation and the real world. Experimental results show that our method outperforms the existing methods and achieves state-of-the-art performance in terms of success rate and action efficiency. The ablation studies show that TPG and LAE are especially beneficial for tasks like multiple block stacking. Additional experiments on the Ravens-10 benchmark tasks suggest good generalizability of the proposed PAC-RoManNet.
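To make the Boltzmann-style selection mentioned in the abstract concrete, here is a minimal sketch that samples an action candidate with probability proportional to `exp(loss / temperature)`. The function names, the `temperature` parameter, and the choice to favor candidates with higher loss estimates are all assumptions made for illustration; the abstract does not specify these details, and this is not the authors' implementation.

```python
import math
import random


def boltzmann_probabilities(loss_estimates, temperature=1.0):
    """Return softmax(loss / temperature) over the action candidates.

    Assumption: higher loss estimates get higher sampling probability.
    """
    if not loss_estimates:
        raise ValueError("need at least one candidate")
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [loss / temperature for loss in loss_estimates]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


def sample_candidate(loss_estimates, temperature=1.0, rng=random):
    """Draw the index of one candidate from the Boltzmann distribution."""
    probs = boltzmann_probabilities(loss_estimates, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

In this sketch a large `temperature` flattens the distribution toward uniform sampling, while a small one concentrates probability on the candidate with the largest loss estimate.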

Learning Robotic Manipulation Tasks through Visual Planning

Mar 02, 2021

Abstract: Multi-step manipulation tasks in unstructured environments are extremely challenging for a robot to learn. Such tasks interlace high-level reasoning about the expected states that can be attained to achieve an overall task with low-level reasoning that decides which actions will yield these states. We propose a model-free deep reinforcement learning method to learn these multi-step manipulation tasks. We introduce a Robotic Manipulation Network (RoManNet), a vision-based deep reinforcement learning algorithm that learns the action-value functions and predicts manipulation action candidates. We define a Task Progress based Gaussian (TPG) reward function that computes the reward based on actions that lead to successful motion primitives and progress towards the overall task goal. We further introduce a Loss Adjusted Exploration (LAE) policy that determines actions from the action candidates according to the Boltzmann distribution of loss estimates. We demonstrate the effectiveness of our approach by training RoManNet to learn several challenging multi-step robotic manipulation tasks. Empirical results show that our method outperforms the existing methods and achieves state-of-the-art results. The ablation studies show that TPG and LAE are especially beneficial for tasks like multiple block stacking. Code is available at: https://github.com/skumra/romannet

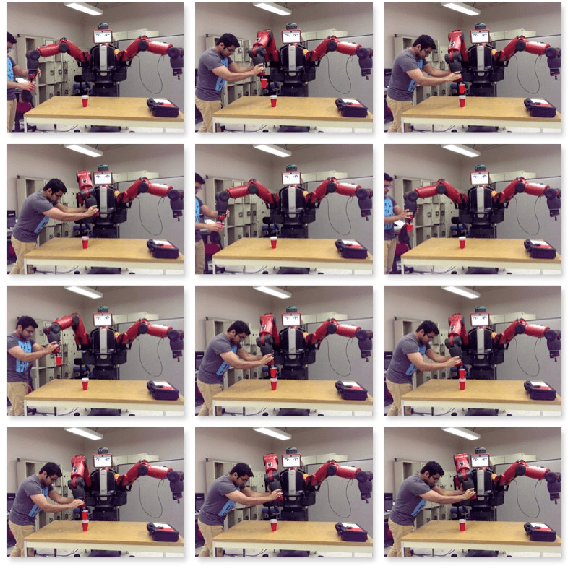

Robotic Grasping using Deep Reinforcement Learning

Jul 09, 2020

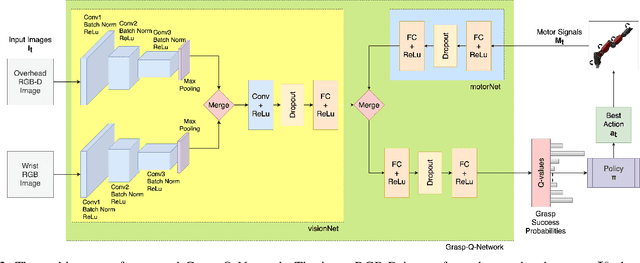

Abstract: In this work, we present a deep reinforcement learning based method to solve the problem of robotic grasping using visio-motor feedback. The use of a deep learning based approach reduces the complexity caused by the use of hand-designed features. Our method uses an off-policy reinforcement learning framework to learn the grasping policy. We use the double deep Q-learning framework along with a novel Grasp-Q-Network to output grasp probabilities used to learn grasps that maximize the pick success. We propose a visual servoing mechanism that uses a multi-view camera setup to observe the scene containing the objects of interest. We performed experiments in a simulated Baxter Gazebo environment as well as on the actual robot. The results show that our proposed method outperforms the baseline Q-learning framework and that adopting a multi-view model increases grasping accuracy compared with a single-view model.
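For readers unfamiliar with double deep Q-learning, the sketch below shows the standard double Q-learning target: the online network selects the next action and the target network evaluates it. It is a generic illustration only; the function name and arguments are hypothetical, the Q-values are passed in as plain lists, and the Grasp-Q-Network itself is not modeled.

```python
def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Compute r + gamma * Q_target(s', argmax_a Q_online(s', a)).

    next_q_online: Q-values for the next state from the online network.
    next_q_target: Q-values for the next state from the target network.
    """
    if done:
        # No bootstrapping from a terminal state.
        return reward
    if len(next_q_online) != len(next_q_target):
        raise ValueError("both Q-value lists must cover the same actions")
    # Action selection uses the online network ...
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    # ... while action evaluation uses the target network.
    return reward + gamma * next_q_target[best_action]
```

Decoupling selection from evaluation in this way is what distinguishes double Q-learning from the plain Q-learning target, which takes the maximum over a single network's estimates.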

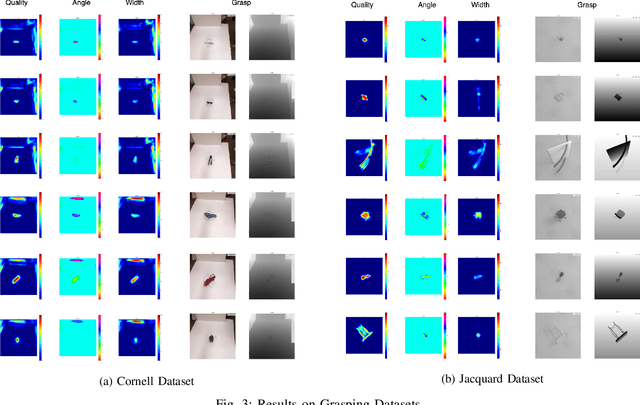

Antipodal Robotic Grasping using Generative Residual Convolutional Neural Network

Sep 11, 2019

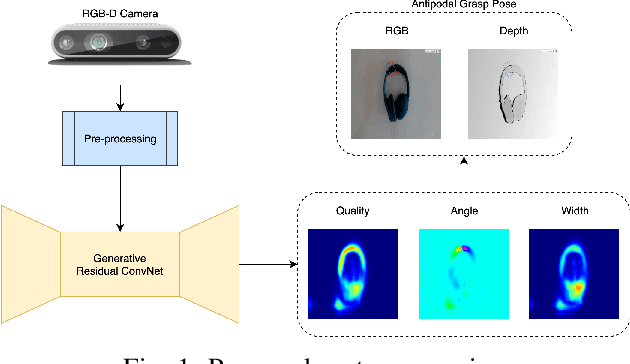

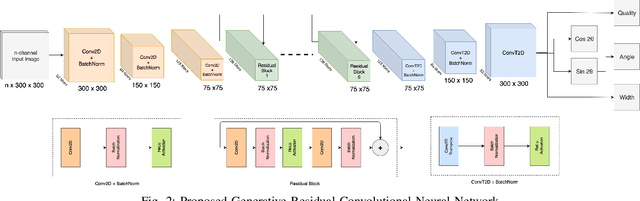

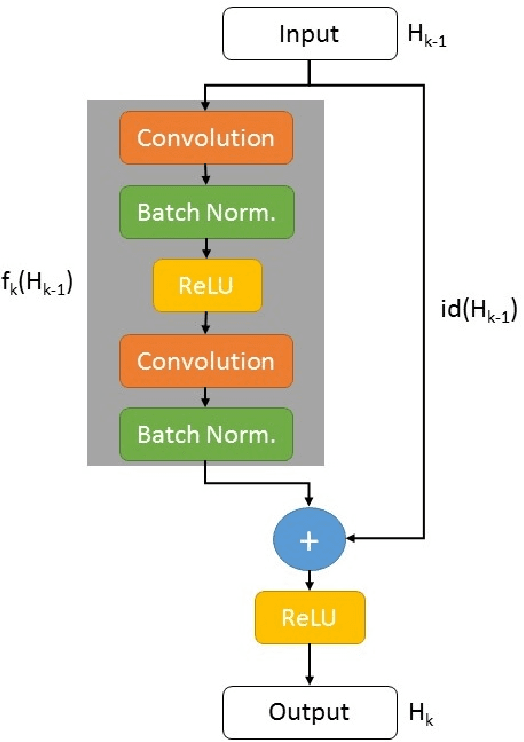

Abstract: In this paper, we tackle the problem of generating antipodal robotic grasps for unknown objects from an n-channel image of the scene. We propose a novel Generative Residual Convolutional Neural Network (GR-ConvNet) model that can generate robust antipodal grasps from n-channel input at real-time speeds (~20 ms). We evaluate the proposed model architecture on standard datasets and previously unseen household objects. We achieved state-of-the-art accuracy of 97.7% and 94.6% on the Cornell and Jacquard grasping datasets, respectively. We also demonstrate a 93.5% grasp success rate on previously unseen real-world objects. Our open-source implementation of GR-ConvNet can be found at github.com/skumra/robotic-grasping.
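One common way to turn the output of a generative grasp network into a single grasp is to pick the pixel with the highest predicted quality and read the angle and width at that location. The sketch below assumes the network produces four per-pixel maps (quality, cos 2θ, sin 2θ, and gripper width); that output layout, the function name, and the use of nested lists are assumptions for illustration and are not stated in the abstract.

```python
import math


def decode_best_grasp(quality, cos2, sin2, width):
    """Pick the highest-quality pixel and read off angle and width there.

    All four arguments are 2D nested lists of the same shape (assumed layout).
    """
    best = None
    for r, row in enumerate(quality):
        for c, q in enumerate(row):
            if best is None or q > best[0]:
                best = (q, r, c)
    if best is None:
        raise ValueError("quality map is empty")
    q, r, c = best
    # The angle is encoded as (cos 2θ, sin 2θ), so halve the recovered angle.
    angle = 0.5 * math.atan2(sin2[r][c], cos2[r][c])
    return {"row": r, "col": c, "angle": angle, "width": width[r][c], "quality": q}
```

Encoding the angle through its doubled sine and cosine reflects the symmetry of an antipodal grasp: rotating the gripper by 180 degrees yields the same grasp.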

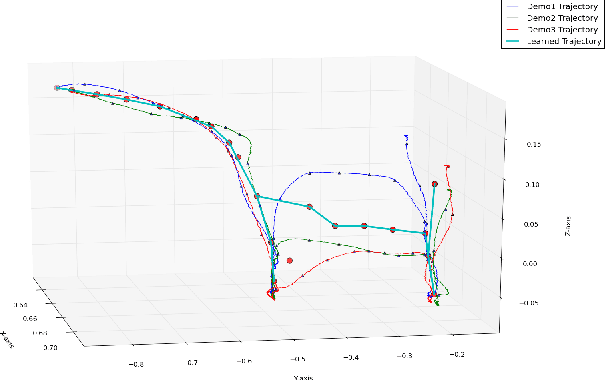

Collaborative Robot Learning from Demonstrations using Hidden Markov Model State Distribution

Sep 27, 2018

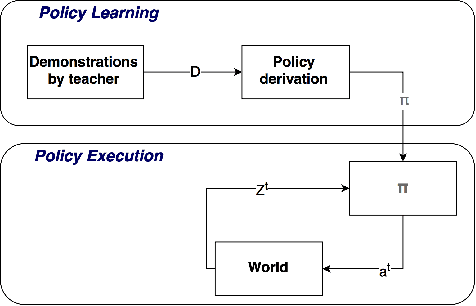

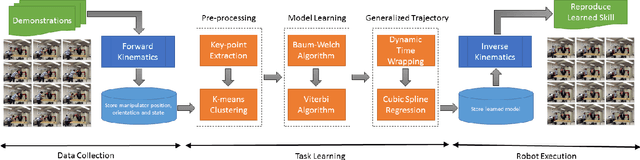

Abstract: In robotics, there is a need for an interactive and expeditious learning method, as experience is expensive. Robot Learning from Demonstration (RLfD) enables a robot to learn a policy from demonstrations performed by a teacher. RLfD enables a human user to add new capabilities to a robot in an intuitive manner, without explicitly reprogramming it. In this work, we present a novel interactive framework in which a collaborative robot learns skills for trajectory-based tasks from demonstrations performed by a human teacher. The robot extracts features, called key-points, from each demonstration and learns a model of the demonstrated skill using a Hidden Markov Model (HMM). Our experimental results show that the learned model can be used to produce a generalized trajectory-based skill.
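As background on how an HMM scores a sequence of observations, the sketch below implements the standard forward algorithm for a discrete HMM. It is a generic illustration under simplifying assumptions: observations are integer symbols rather than the key-point features used in the paper, and all names and parameters are hypothetical.

```python
def forward_likelihood(initial, transition, emission, observations):
    """Return P(observations) under a discrete HMM via the forward algorithm.

    initial[i]: probability of starting in state i.
    transition[i][j]: probability of moving from state i to state j.
    emission[i][k]: probability of emitting symbol k in state i.
    observations: non-empty list of integer symbol indices.
    """
    if not observations:
        raise ValueError("need at least one observation")
    n_states = len(initial)
    # Initialization: start in each state and emit the first symbol.
    alpha = [initial[s] * emission[s][observations[0]] for s in range(n_states)]
    # Recursion: propagate through the transitions, then emit the next symbol.
    for obs in observations[1:]:
        alpha = [
            emission[j][obs] * sum(alpha[i] * transition[i][j] for i in range(n_states))
            for j in range(n_states)
        ]
    # Termination: sum over all possible final states.
    return sum(alpha)
```

For long sequences the probabilities become very small, so practical implementations usually rescale `alpha` at each step or work in log space.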

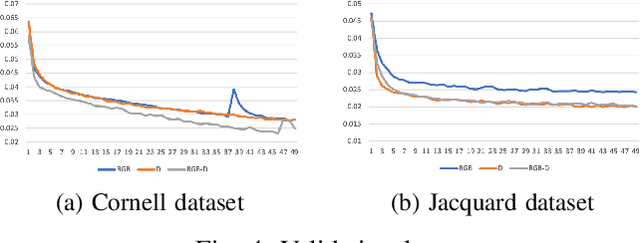

Robotic Grasp Detection using Deep Convolutional Neural Networks

Jul 21, 2017

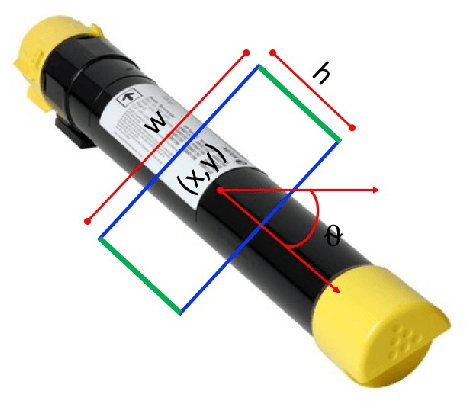

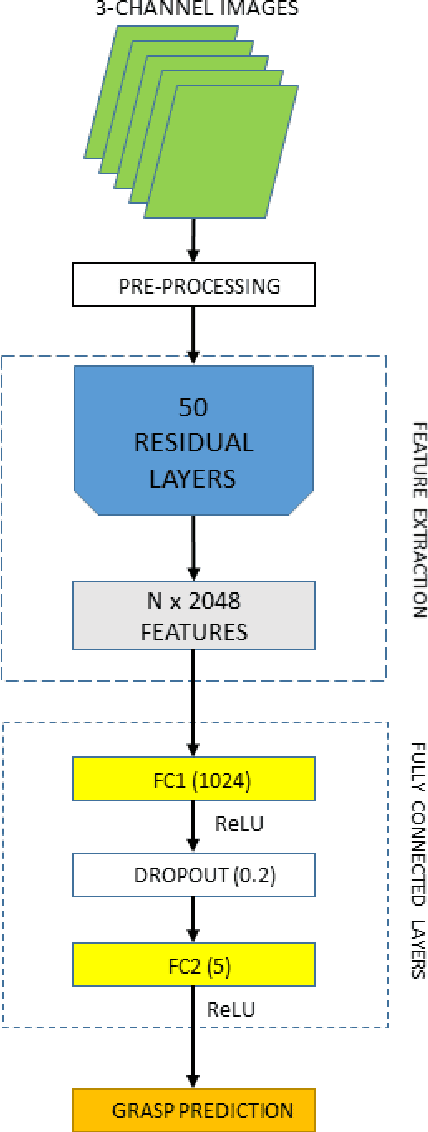

Abstract: Deep learning has significantly advanced computer vision and natural language processing. While there have been some successes in robotics using deep learning, it has not been widely adopted. In this paper, we present a novel robotic grasp detection system that predicts the best grasping pose of a parallel-plate robotic gripper for novel objects using an RGB-D image of the scene. The proposed model uses a deep convolutional neural network to extract features from the scene and then uses a shallow convolutional neural network to predict the grasp configuration for the object of interest. Our multi-modal model achieved an accuracy of 89.21% on the standard Cornell Grasp Dataset and runs at real-time speeds. This redefines the state-of-the-art for robotic grasp detection.
