Sueda Taner

Learning Sparsifying Transforms for mmWave Communication via $\ell^4$-Norm Maximization

Jan 08, 2026

Abstract:The high directionality of wave propagation at millimeter-wave (mmWave) carrier frequencies results in only a small number of significant transmission paths between user equipments and the basestation (BS). This sparse nature of wave propagation is revealed in the beamspace domain, which is traditionally obtained by taking the spatial discrete Fourier transform (DFT) across a uniform linear antenna array at the BS, where each DFT output is associated with a distinct beam. In recent years, beamspace processing has emerged as a promising technique to reduce baseband complexity and power consumption in all-digital massive multiuser (MU) multiple-input multiple-output (MIMO) systems operating at mmWave frequencies. However, it remains unclear whether the DFT is the optimal sparsifying transform for finite-dimensional antenna arrays. In this paper, we extend the framework of Zhai et al. for complete dictionary learning via $\ell^4$-norm maximization to the complex case in order to learn new sparsifying transforms. We provide a theoretical foundation for $\ell^4$-norm maximization and propose two suitable learning algorithms. We then utilize these algorithms (i) to assess the optimality of the DFT for sparsifying channel vectors theoretically and via simulations and (ii) to learn improved sparsifying transforms for real-world and synthetically generated channel vectors.
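To make the idea of $\ell^4$-norm maximization concrete, here is a minimal NumPy sketch of a matching-stretching-projection style iteration in the spirit of Zhai et al., written for complex data. It is an illustration under our own assumptions, not either of the two algorithms proposed in the paper. Each step forms the Wirtinger gradient $(|AY|^{\circ 2} \odot AY)Y^H$ of $\|AY\|_4^4$ (up to a factor of 2) and maps it to the nearest unitary matrix via its SVD polar factor $UV^H$. The function names `l4_msp` and `l4_objective` are hypothetical.

```python
import numpy as np

def l4_objective(A, Y):
    """||A Y||_4^4: large values indicate that A Y is 'spiky', i.e., sparse."""
    return np.sum(np.abs(A @ Y) ** 4)

def l4_msp(Y, iters=100, seed=0):
    """Learn a unitary A that (locally) maximizes ||A Y||_4^4.

    Y: (N, M) complex array whose columns are channel vectors.
    Sketch only: gradient step followed by projection onto the unitary group.
    """
    N = Y.shape[0]
    rng = np.random.default_rng(seed)
    # Random unitary initialization from the QR decomposition of a complex Gaussian matrix.
    Z = rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N))
    A, _ = np.linalg.qr(Z)
    for _ in range(iters):
        X = A @ Y
        # Gradient of ||AY||_4^4 with respect to conj(A), up to a factor of 2.
        G = (np.abs(X) ** 2 * X) @ Y.conj().T
        U, _, Vh = np.linalg.svd(G)
        # Polar factor of G: the unitary matrix maximizing Re tr(G^H A).
        A = U @ Vh
    return A
```

Because $\|AY\|_4^4$ is convex in $A$ and the polar factor maximizes the linearized objective over all unitary matrices, the objective never decreases from one iteration to the next. To compare against the DFT, one could evaluate `l4_objective(A, Y)` alongside `l4_objective(np.fft.fft(np.eye(N), norm="ortho"), Y)` on the same channel matrix `Y`.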

CSI-Based User Positioning, Channel Charting, and Device Classification with an NVIDIA 5G Testbed

Dec 11, 2025

Abstract:Channel-state information (CSI)-based sensing will play a key role in future cellular systems. However, no CSI dataset has been published from a real-world 5G NR system that facilitates the development and validation of suitable sensing algorithms. To close this gap, we publish three real-world wideband, multi-antenna CSI datasets from the 5G NR uplink channel, each recorded with multiple open RAN radio units (O-RUs): an indoor lab/office room dataset, an outdoor campus courtyard dataset, and a device classification dataset with six commercial off-the-shelf (COTS) user equipments (UEs). These datasets have been recorded using a software-defined 5G NR testbed based on NVIDIA Aerial RAN CoLab Over-the-Air (ARC-OTA) with COTS hardware, which we have deployed at ETH Zurich. We demonstrate the utility of these datasets for three CSI-based sensing tasks: neural UE positioning, channel charting in real-world coordinates, and closed-set device classification. For all these tasks, our results show high accuracy: neural UE positioning achieves 0.6 cm (indoor) and 5.7 cm (outdoor) mean absolute error, channel charting in real-world coordinates achieves 73 cm mean absolute error (outdoor), and device classification achieves 99% (same day) and 95% (next day) accuracy. The CSI datasets, ground-truth UE position labels, CSI features, and simulation code are publicly available at https://caez.ethz.ch

CSI2Vec: Towards a Universal CSI Feature Representation for Positioning and Channel Charting

Jun 05, 2025

Abstract:Natural language processing techniques, such as Word2Vec, have demonstrated exceptional capabilities in capturing semantic and syntactic relationships of text through vector embeddings. Inspired by this technique, we propose CSI2Vec, a self-supervised framework for generating universal and robust channel state information (CSI) representations tailored to CSI-based positioning (POS) and channel charting (CC). CSI2Vec learns compact vector embeddings across various wireless scenarios, capturing spatial relationships between user equipment positions without relying on CSI reconstruction or ground-truth position information. We implement CSI2Vec as a neural network that is trained across various deployment setups (i.e., the spatial arrangement of radio equipment and scatterers) and radio setups (RSs) (i.e., the specific hardware used), ensuring robustness to aspects such as differences in the environment, the number of used antennas, or the allocated set of subcarriers. CSI2Vec abstracts the RS by generating compact vector embeddings that capture essential spatial information, avoiding the need for full CSI transmission or reconstruction while also reducing complexity and improving the processing efficiency of downstream tasks. Simulations with ray-tracing and real-world CSI datasets demonstrate CSI2Vec's effectiveness in maintaining excellent POS and CC performance while reducing computational and storage demands.

Cauchy-Schwarz Regularizers

Mar 03, 2025

Abstract:We introduce a novel class of regularization functions, called Cauchy-Schwarz (CS) regularizers, which can be designed to induce a wide range of properties in solution vectors of optimization problems. To demonstrate the versatility of CS regularizers, we derive regularization functions that promote discrete-valued vectors, eigenvectors of a given matrix, and orthogonal matrices. The resulting CS regularizers are simple, differentiable, and can be free of spurious stationary points, making them suitable for gradient-based solvers and large-scale optimization problems. In addition, CS regularizers automatically adapt to the appropriate scale, which is, for example, beneficial when discretizing the weights of neural networks. To demonstrate the efficacy of CS regularizers, we provide results for solving underdetermined systems of linear equations and weight quantization in neural networks. Furthermore, we discuss specializations, variations, and generalizations, which lead to an even broader class of new and possibly more powerful regularizers.
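As an illustration of how the Cauchy-Schwarz inequality can yield a regularizer that promotes discrete-valued (binary) vectors, consider the following sketch. It is one construction consistent with the abstract's description; we have not verified that it matches the paper's exact definition. Applying Cauchy-Schwarz to the vectors $x \odot x$ and $\mathbf{1}$ gives $\|x\|_2^4 \le N\|x\|_4^4$, with equality if and only if all entries of $x$ have the same magnitude, i.e., $x \in \{-a, +a\}^N$ for some scale $a \ge 0$. The gap $N\|x\|_4^4 - \|x\|_2^4$ is therefore nonnegative, differentiable, and zero exactly on binary vectors of any scale, which illustrates the automatic scale adaptation mentioned above. The function names are hypothetical.

```python
import numpy as np

def cs_binary_regularizer(x):
    """N * ||x||_4^4 - ||x||_2^4 >= 0, with equality iff x is in {-a, +a}^N for some a."""
    x = np.asarray(x, dtype=float)
    N = x.size
    return N * np.sum(x ** 4) - np.sum(x ** 2) ** 2

def cs_binary_grad(x):
    """Gradient of cs_binary_regularizer, for use in gradient-based solvers."""
    x = np.asarray(x, dtype=float)
    N = x.size
    return 4 * N * x ** 3 - 4 * np.sum(x ** 2) * x
```

For example, `cs_binary_regularizer([1, 2])` evaluates to $2 \cdot 17 - 5^2 = 9$, while any vector such as `[0.3, -0.3, 0.3]` yields zero regardless of its scale. Adding $\lambda \cdot$ `cs_binary_regularizer(x)` to a data-fidelity term (with a hypothetical weight $\lambda$) pushes a gradient-based solver toward binary solutions without fixing the alphabet scale in advance.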

Channel Charting for Streaming CSI Data

Dec 07, 2023

Abstract:Channel charting (CC) applies dimensionality reduction to channel state information (CSI) data at the infrastructure basestation side with the goal of extracting pseudo-position information for each user. The self-supervised nature of CC enables predictive tasks that depend on user position without requiring any ground-truth position information. In this work, we focus on the practically relevant streaming CSI data scenario, in which CSI is constantly estimated. To deal with storage limitations, we develop a novel streaming CC architecture that maintains a small core CSI dataset from which the channel charts are learned. Curation of the core CSI dataset is achieved using a min-max-similarity criterion. Numerical validation with measured CSI data demonstrates that our method approaches the accuracy obtained from the complete CSI dataset while using only a fraction of CSI storage and avoiding catastrophic forgetting of old CSI data.
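The core-dataset curation step can be pictured with a small greedy sketch. The abstract only names a min-max-similarity criterion; the specific rule below (absolute cosine similarity between CSI vectors, and evicting one member of the most similar pair whenever the core set exceeds its capacity) is our assumption, aimed at keeping the maximum pairwise similarity in the core set small. The function names are hypothetical.

```python
import numpy as np

def cosine_similarity_matrix(C):
    """Absolute cosine similarity between all pairs of rows (CSI vectors) of C."""
    Cn = C / np.linalg.norm(C, axis=1, keepdims=True)
    return np.abs(Cn @ Cn.conj().T)

def update_core_set(core, new_csi, capacity):
    """Insert a new CSI vector; if over capacity, evict one sample of the most similar pair.

    core: (K, D) complex array (may have K = 0), new_csi: (D,) complex vector.
    """
    core = np.vstack([core, new_csi[None, :]])
    if core.shape[0] <= capacity:
        return core
    S = cosine_similarity_matrix(core)
    np.fill_diagonal(S, 0.0)  # ignore self-similarity
    i, j = np.unravel_index(np.argmax(S), S.shape)
    # Of the two most similar samples, drop the one that is more redundant overall.
    drop = i if S[i].sum() >= S[j].sum() else j
    return np.delete(core, drop, axis=0)
```

A streaming loop would start from `np.empty((0, D), dtype=complex)`, call `update_core_set` for every newly estimated CSI vector, and periodically retrain the channel chart on the current core set, so memory stays bounded by `capacity` regardless of how long the stream runs.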

Channel Charting in Real-World Coordinates

Aug 28, 2023

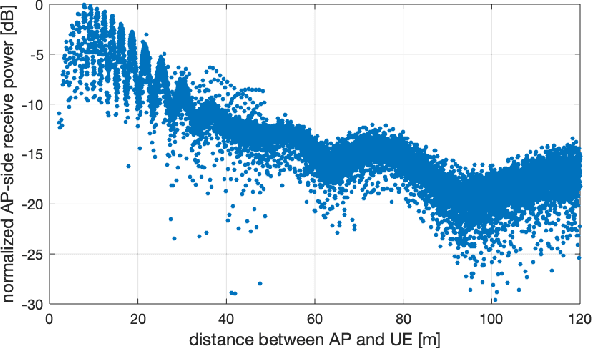

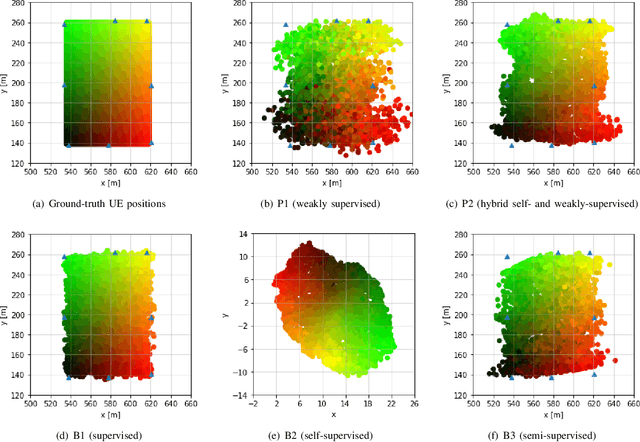

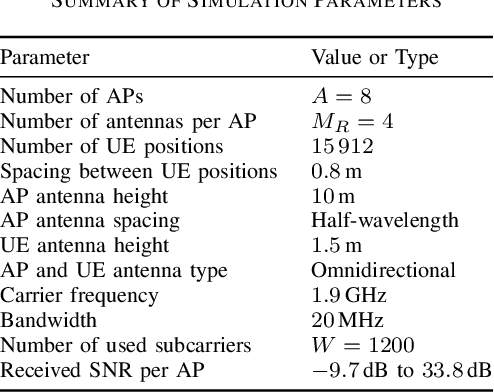

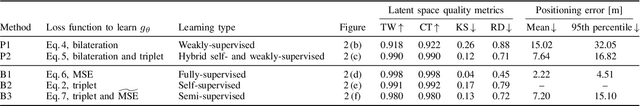

Abstract:Channel charting is an emerging self-supervised method that maps channel state information (CSI) to a low-dimensional latent space, which represents pseudo-positions of user equipments (UEs). While this latent space preserves local geometry, i.e., nearby UEs are nearby in latent space, the pseudo-positions are in arbitrary coordinates and global geometry is not preserved. In order to enable channel charting in real-world coordinates, we propose a novel bilateration loss for multipoint wireless systems in which only the access point (AP) locations are known; no geometrical models or ground-truth UE position information is required. The idea behind this bilateration loss is to compare the received power at pairs of APs in order to determine whether a UE should be placed closer to one AP or the other in latent space. We demonstrate the efficacy of our method using channel vectors from a commercial ray-tracer.
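The pairwise comparison behind the bilateration loss can be sketched as a hinge penalty: for every AP pair $(a, b)$ where AP $a$ receives more power, the latent position $z$ is penalized by $\max(0, \|z - p_a\| - \|z - p_b\|)$ if it lies closer to AP $b$. This hinge form and the function name are our assumptions for illustration; the paper's exact loss may differ. In training, such a term would be evaluated on the network's outputs with an autodiff framework and summed over samples.

```python
import numpy as np

def bilateration_loss(z, ap_pos, rx_power):
    """Hinge penalty pulling z toward the AP with the higher received power.

    z: (2,) latent position, ap_pos: (A, 2) known AP locations,
    rx_power: (A,) received power at each AP (any monotone scale, e.g., dBm).
    """
    d = np.linalg.norm(ap_pos - z[None, :], axis=1)  # distance from z to each AP
    loss = 0.0
    num_aps = len(ap_pos)
    for a in range(num_aps):
        for b in range(num_aps):
            if rx_power[a] > rx_power[b]:
                # Zero if z is at least as close to AP a as to AP b.
                loss += max(0.0, d[a] - d[b])
    return loss
```

With APs at $(0,0)$ and $(10,0)$ and a stronger signal at the first AP, placing the UE at $(2,0)$ costs nothing, whereas $(8,0)$ incurs a penalty of $8 - 2 = 6$.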

Alternating Projections Method for Joint Precoding and Peak-to-Average-Power Ratio Reduction

Dec 23, 2022

Abstract:Orthogonal frequency-division multiplexing (OFDM) time-domain signals exhibit a high peak-to-average (power) ratio (PAR), which requires linear radio-frequency chains to avoid an increase in error-vector magnitude (EVM) and out-of-band (OOB) emissions. In this paper, we propose a novel joint PAR reduction and precoding algorithm that relaxes these linearity requirements in massive multiuser (MU) multiple-input multiple-output (MIMO) wireless systems. Concretely, we develop a novel alternating projections method, which limits the PAR and transmit power increase while simultaneously suppressing MU interference. We provide a theoretical foundation for our algorithm and present simulation results for a massive MU-MIMO-OFDM scenario. Our results demonstrate significant PAR reduction while limiting the transmit power, without causing an increase in EVM or OOB emissions.
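A simplified picture of alternating projections for joint precoding and PAR reduction is sketched below. Under our own assumptions, it alternates between two convex sets: (i) transmit vectors that cause zero MU interference on every subcarrier ($H_w x_w = s_w$) and (ii) signals whose OFDM time-domain samples never exceed a fixed amplitude `amax`. Because the unitary (`norm="ortho"`) FFT preserves Euclidean distances, clipping in the time domain is an exact projection in the frequency domain as well. Unlike the paper, this sketch uses a fixed amplitude cap rather than explicitly limiting the transmit-power increase, and all function names are hypothetical.

```python
import numpy as np

def project_affine(X, H, S):
    """Project each subcarrier's vector onto {x : H_w x = s_w} (zero MU interference).

    X: (W, B) frequency-domain transmit vectors, H: (W, U, B) channels, S: (W, U) symbols.
    """
    Xp = np.empty_like(X)
    for w in range(X.shape[0]):
        Hw = H[w]
        r = Hw @ X[w] - S[w]
        Xp[w] = X[w] - Hw.conj().T @ np.linalg.solve(Hw @ Hw.conj().T, r)
    return Xp

def project_amplitude(X, amax):
    """Clip every OFDM time-domain sample (per antenna) to magnitude at most amax."""
    x = np.fft.ifft(X, axis=0, norm="ortho")  # time-domain samples, one column per antenna
    mag = np.abs(x)
    scale = np.minimum(1.0, amax / np.maximum(mag, 1e-12))
    return np.fft.fft(x * scale, axis=0, norm="ortho")

def par_per_antenna(X):
    """Time-domain PAR of each antenna's OFDM signal."""
    p = np.abs(np.fft.ifft(X, axis=0, norm="ortho")) ** 2
    return p.max(axis=0) / p.mean(axis=0)

def alternating_projections(H, S, amax, iters=50):
    W, U, B = H.shape
    # Start from the least-norm zero-forcing solution on every subcarrier.
    X = project_affine(np.zeros((W, B), dtype=complex), H, S)
    for _ in range(iters):
        X = project_affine(project_amplitude(X, amax), H, S)
    return X
```

Ending each iteration with the affine projection keeps the output interference-free, while `par_per_antenna` can be used to compare PAR before and after. If `amax` is chosen too small, the two sets may not intersect and the PAR target cannot be met exactly.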

Feature Learning for Neural-Network-Based Positioning with Channel State Information

Oct 28, 2021

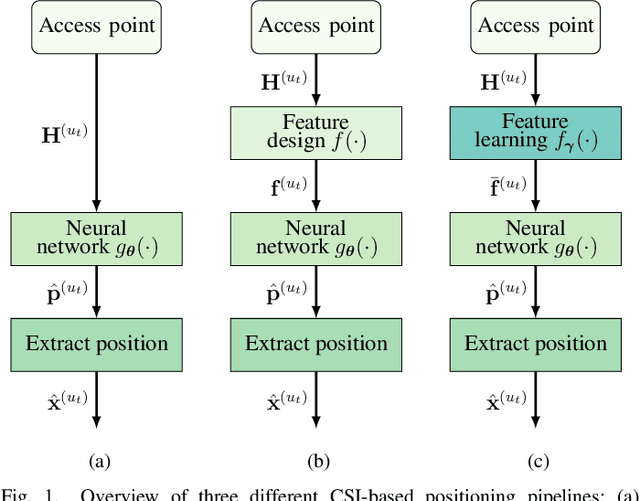

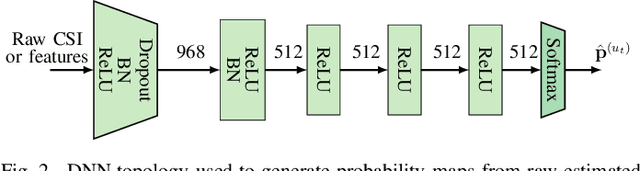

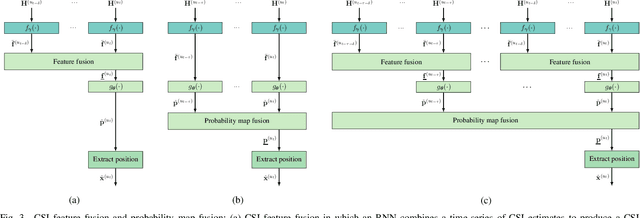

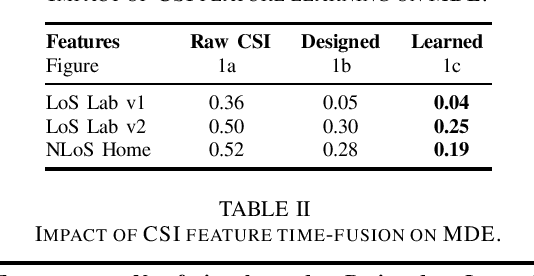

Abstract:Recent channel state information (CSI)-based positioning pipelines rely on deep neural networks (DNNs) in order to learn a mapping from estimated CSI to position. Since real-world communication transceivers suffer from hardware impairments, CSI-based positioning systems typically rely on features that are designed by hand. In this paper, we propose a CSI-based positioning pipeline that directly takes raw CSI measurements and learns features using a structured DNN in order to generate probability maps describing the likelihood of the transmitter being at pre-defined grid points. To further improve the positioning accuracy of moving user equipments, we propose to fuse a time-series of learned CSI features or a time-series of probability maps. To demonstrate the efficacy of our methods, we perform experiments with real-world indoor line-of-sight (LoS) and non-LoS channel measurements. We show that CSI feature learning and time-series fusion can reduce the mean distance error by up to 2.5$\times$ compared to the state-of-the-art.
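One simple way to fuse a time series of grid probability maps is to multiply them (sum their log-probabilities) and renormalize, then read out a position estimate as the expected grid location. This rule assumes the maps are conditionally independent and that the UE stays near the same grid point over the short fusion window; it is a stand-in for illustration, not necessarily the fusion used in the paper. The function names are hypothetical.

```python
import numpy as np

def fuse_probability_maps(prob_maps, eps=1e-12):
    """Fuse (T, G) probability maps over G grid points by summing log-probabilities."""
    log_p = np.sum(np.log(prob_maps + eps), axis=0)
    log_p -= log_p.max()  # numerical stability before exponentiating
    fused = np.exp(log_p)
    return fused / fused.sum()

def map_to_position(prob, grid_xy):
    """Expected position under the probability map; grid_xy has shape (G, 2)."""
    return prob @ grid_xy
```

For two maps $[0.5, 0.3, 0.2]$ and $[0.4, 0.4, 0.2]$, the elementwise product $[0.2, 0.12, 0.04]$ normalizes to $[5/9, 1/3, 1/9]$, so agreement across time sharpens the estimate around the first grid point.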

Hardware-Aware Beamspace Precoding for All-Digital mmWave Massive MU-MIMO

Aug 13, 2021

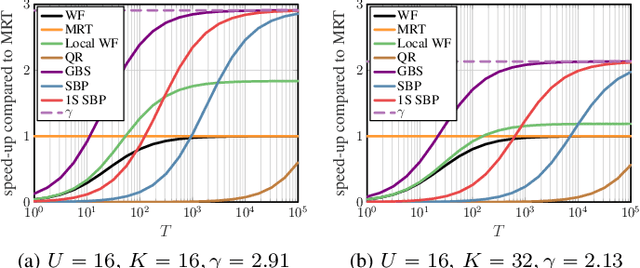

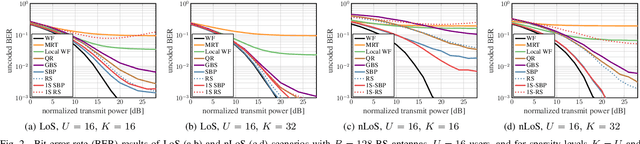

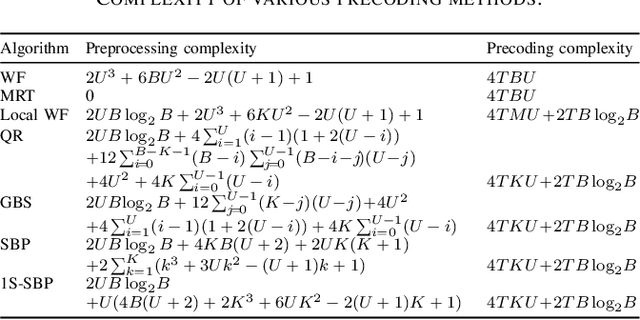

Abstract:Massive multi-user multiple-input multiple-output (MU-MIMO) wireless systems operating at millimeter-wave (mmWave) frequencies enable simultaneous wideband data transmission to a large number of users. In order to reduce the complexity of MU precoding in all-digital basestation architectures, we propose a two-stage precoding architecture that first performs precoding using a sparse matrix in the beamspace domain, followed by an inverse fast Fourier transform that converts the result to the antenna domain. The sparse precoding matrix requires a small number of multipliers and enables regular hardware architectures, which allows the design of hardware-efficient all-digital precoders. Simulation results demonstrate that our methods approach the error rate of conventional Wiener filter precoding with more than 2$\times$ lower complexity.
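The two-stage structure can be sketched as follows: compute a Wiener-filter-style precoder for the beamspace channel $H_b = HF^H$ (with $F$ the unitary DFT), keep only the $k$ largest-magnitude entries per column, and map the beamspace transmit vector to the antenna domain with an IFFT. The column-wise thresholding, the regularization parameter `rho`, and the omission of power normalization are our simplifying assumptions; the paper's hardware-aware sparsification is likely different. Function names are hypothetical.

```python
import numpy as np

def wiener_precoder(H, rho):
    """Wiener-filter-style precoder P = H^H (H H^H + rho I)^{-1} (power normalization omitted)."""
    U = H.shape[0]
    return H.conj().T @ np.linalg.inv(H @ H.conj().T + rho * np.eye(U))

def sparsify_columns(P, k):
    """Keep the k largest-magnitude entries in each column of P and zero the rest."""
    Ps = np.zeros_like(P)
    idx = np.argsort(-np.abs(P), axis=0)[:k]  # (k, U) row indices per column
    cols = np.arange(P.shape[1])
    Ps[idx, cols] = P[idx, cols]
    return Ps

def beamspace_precode(H, s, rho, k):
    """Sparse beamspace precoding followed by an IFFT to the antenna domain.

    H: (U, B) channel matrix, s: (U,) symbol vector.
    """
    # Beamspace channel H_b = H F^H, so that H x = H_b (F x).
    Hb = np.fft.ifft(H, axis=1, norm="ortho")
    Pb = sparsify_columns(wiener_precoder(Hb, rho), k)
    xb = Pb @ s                            # beamspace transmit vector
    return np.fft.ifft(xb, norm="ortho")   # antenna-domain vector x = F^H x_b
```

Since $F$ is unitary, setting `k` equal to the number of antennas reproduces the conventional antenna-domain Wiener precoder exactly; smaller `k` trades error rate for fewer multipliers in the beamspace stage.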

$\ell^p\!-\!\ell^q$-Norm Minimization for Joint Precoding and Peak-to-Average-Power Ratio Reduction

Jul 14, 2021

Abstract:Wireless communication systems that rely on orthogonal frequency-division multiplexing (OFDM) suffer from a high peak-to-average (power) ratio (PAR), which necessitates power-inefficient radio-frequency (RF) chains to avoid an increase in error-vector magnitude (EVM) and out-of-band (OOB) emissions. The situation is further aggravated in massive multiuser (MU) multiple-input multiple-output (MIMO) systems that would require hundreds of linear RF chains. In this paper, we present a novel approach to joint precoding and PAR reduction that builds upon an $\ell^p\!-\!\ell^q$-norm formulation, which is able to find minimum PAR solutions while suppressing MU interference. We provide a theoretical underpinning of our approach and present simulation results for a massive MU-MIMO-OFDM system that demonstrate significant reductions in PAR at low complexity, without causing an increase in EVM or OOB emissions.
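A short numerical illustration of why $\ell^p$-norms with large $p$ are useful in a PAR objective: for a length-$N$ signal, $\mathrm{PAR}(x) = N\|x\|_\infty^2/\|x\|_2^2$, and since $\|x\|_\infty \le \|x\|_p \le N^{1/p}\|x\|_\infty$, replacing the peak by $\|x\|_p$ gives a smooth surrogate that overestimates the PAR by at most a factor of $N^{2/p}$. This only illustrates the norm relationship (with $q = 2$); it is not the paper's $\ell^p\!-\!\ell^q$ formulation or solver, and the function names are hypothetical.

```python
import numpy as np

def par(x):
    """Peak-to-average power ratio: max |x_n|^2 / mean |x_n|^2."""
    p = np.abs(x) ** 2
    return p.max() / p.mean()

def lp_par_surrogate(x, p):
    """Smooth PAR surrogate N * ||x||_p^2 / ||x||_2^2, which tends to PAR(x) as p grows."""
    a = np.abs(x)
    amax = a.max()
    lp = amax * np.sum((a / amax) ** p) ** (1.0 / p)  # rescaled to avoid overflow
    return x.size * lp ** 2 / np.sum(a ** 2)
```

Because $\|x\|_p$ is non-increasing in $p$, the surrogate tightens monotonically toward the true PAR as $p$ increases, at the cost of a less smooth objective for large $p$.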
