Su Young Kim

Ask Me What You Need: Product Retrieval using Knowledge from GPT-3

Jul 06, 2022

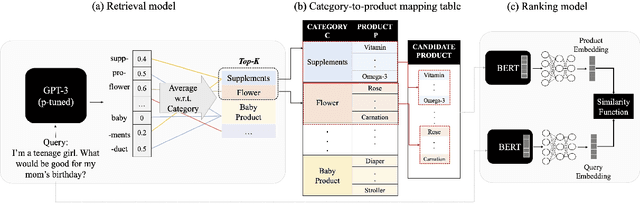

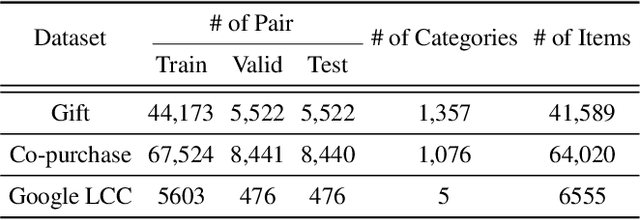

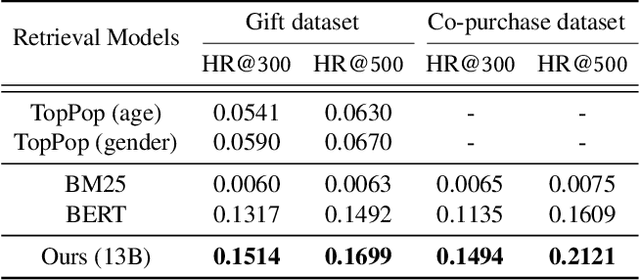

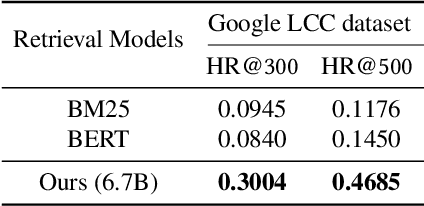

Abstract: As online merchandise becomes more common, many studies focus on embedding-based methods where queries and products are represented in the semantic space. These methods alleviate the problem of vocabulary mismatch between the language of queries and products. However, past studies usually dealt with queries that precisely describe the product, and there is still a need to answer imprecise queries that may require common sense knowledge, e.g., 'what should I get my mom for Mother's Day.' In this paper, we propose a GPT-3 based product retrieval system that leverages the knowledge-base (KB) of GPT-3 for question answering; users do not need to know the specific illustrative keywords for a product when querying. Our method tunes prompt tokens of GPT-3 to elicit knowledge and render answers that are mapped directly to products without further processing. Our method shows consistent performance improvement over the baseline methods on two real-world datasets and one public dataset. We provide an in-depth discussion on leveraging GPT-3 knowledge in a question answering based retrieval system.

Scaling Law for Recommendation Models: Towards General-purpose User Representations

Dec 01, 2021

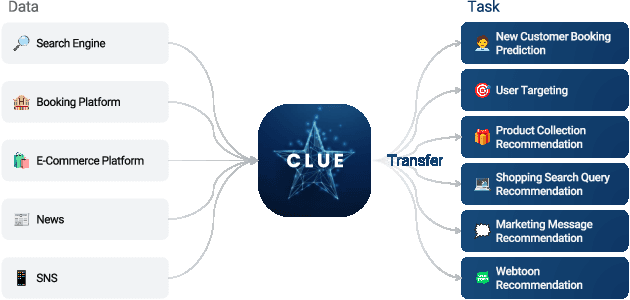

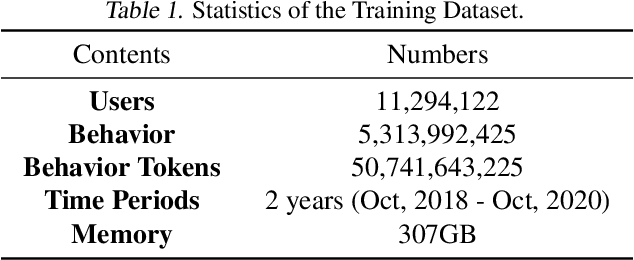

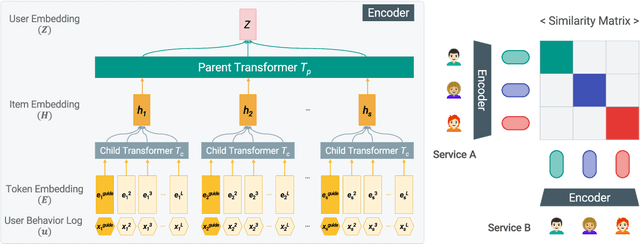

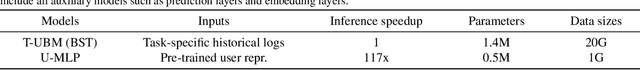

Abstract: A recent trend shows that general classes of models, e.g., BERT, GPT-3, and CLIP, trained on broad data at scale exhibit a great variety of functionalities with a single learning architecture. In this work, we explore the possibility of general-purpose user representation learning by training a universal user encoder at large scales. We demonstrate that the scaling law holds in user modeling, where the training error scales as a power-law with the amount of compute. Our Contrastive Learning User Encoder (CLUE) optimizes task-agnostic objectives, and the resulting user embeddings stretch our expectations of what is possible in various downstream tasks. CLUE also shows great transferability to other domains and systems, as an online experiment shows significant improvements in Click-Through-Rate (CTR). Furthermore, we investigate how the performance changes according to the scale-up factors, i.e., model capacity, sequence length and batch size. Finally, we discuss the broader impacts of CLUE in general.
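The power-law claim in the abstract above can be illustrated with a small sketch. It assumes the common form error = a * compute^(-b) and fits a and b by linear regression in log-log space. The function name `fit_power_law`, the compute values, and the coefficients are hypothetical and chosen only for illustration; they are not taken from the paper, and the paper's actual fitting procedure may differ.

```python
import math

def fit_power_law(compute, error):
    """Least-squares fit of error = a * compute**(-b) in log-log space.

    Hypothetical helper for illustration; returns the pair (a, b).
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(e) for e in error]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Ordinary least squares for the line log(error) = intercept + slope * log(compute)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope

# Synthetic data only (assumed values, not results from the paper):
# errors generated from a known power law with a = 2.0 and b = 0.05.
compute = [1e15, 1e16, 1e17, 1e18]
error = [2.0 * c ** -0.05 for c in compute]

a, b = fit_power_law(compute, error)
print(f"fitted a={a:.3f}, b={b:.3f}")
```

On a log-log plot such a relationship appears as a straight line with slope -b, which is why the fit reduces to ordinary linear regression on the logarithms.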
