Su Li

Occlusion-Aware 3D Motion Interpretation for Abnormal Behavior Detection

Jul 23, 2024

Abstract: Estimating abnormal posture from 3D pose is vital in human pose analysis, yet it is challenging, especially when 3D human poses must be reconstructed from monocular datasets with occlusions. Accurate reconstructions enable the restoration of 3D movements, which helps extract the semantic details needed to analyze abnormal behaviors. However, most existing methods rely on predefined key points to estimate the coordinates of occluded joints, so variations in data quality degrade their performance. In this paper, we present OAD2D, which discriminates motion abnormalities by reconstructing the 3D coordinates of mesh vertices and human joints from monocular videos. OAD2D employs optical flow to capture motion prior information in video streams, enriching the information on occluded human movements and ensuring temporal-spatial alignment of poses. Moreover, we reformulate abnormal posture estimation by coupling it with a Motion to Text (M2T) model, in which a VQVAE is employed to quantize motion features. This approach maps motion tokens to text tokens, allowing a semantically interpretable analysis of motion and enhancing the generalization of abnormal posture detection with the help of a language model. Our approach demonstrates robust abnormal behavior detection under severe occlusion and self-occlusion, as it reconstructs human motion trajectories in global coordinates to mitigate occlusion issues. Our method, validated on the Human3.6M, 3DPW, and NTU RGB+D datasets, achieves a high $F_1$-score of 0.94 on the NTU RGB+D dataset for medical condition detection. We will release all of our code and data.
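
To make the quantization step more concrete, here is a minimal sketch of a generic VQ-VAE-style nearest-codebook lookup, which turns continuous motion features into discrete tokens. It is not the paper's implementation: the function name `quantize`, the toy codebook, and the 2-D feature size are all hypothetical, chosen only for illustration.

```python
import numpy as np

def quantize(features, codebook):
    """Assign each feature vector to its nearest codebook entry.

    features: array of shape (T, D), one D-dimensional vector per time step.
    codebook: array of shape (K, D), the K learnable code vectors.
    Returns the token indices (T,) and the quantized vectors (T, D).
    """
    # Squared Euclidean distance between every feature and every code: (T, K).
    dist = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    tokens = dist.argmin(axis=1)
    return tokens, codebook[tokens]

# Toy data (assumed values, not from the paper).
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]])
features = np.array([[0.1, -0.1], [0.9, 1.2], [-0.8, 0.7]])
tokens, quantized = quantize(features, codebook)
print(tokens)  # one discrete motion token per time step
```

In a pipeline of the kind the abstract describes, a sequence of such token indices would be what gets mapped to text tokens.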

Extract fetal ECG from single-lead abdominal ECG by de-shape short time Fourier transform and nonlocal median

Sep 09, 2016

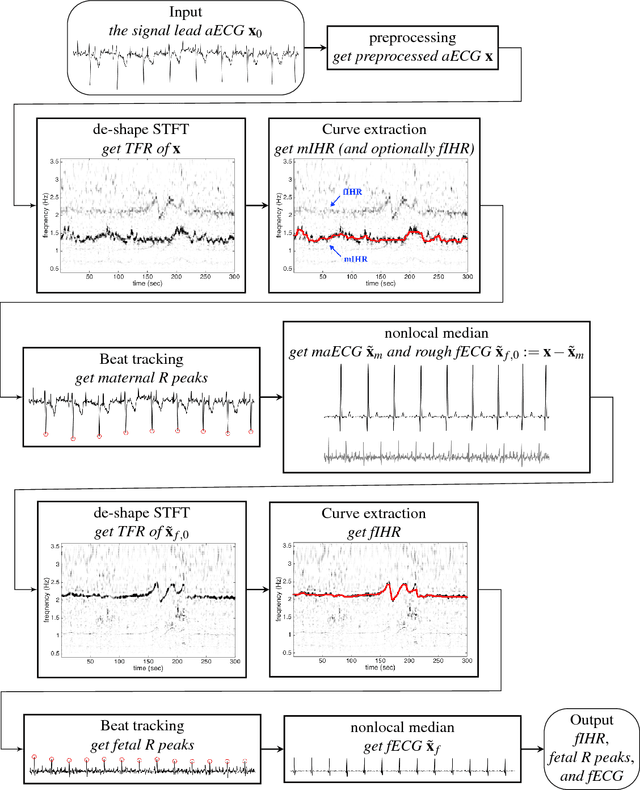

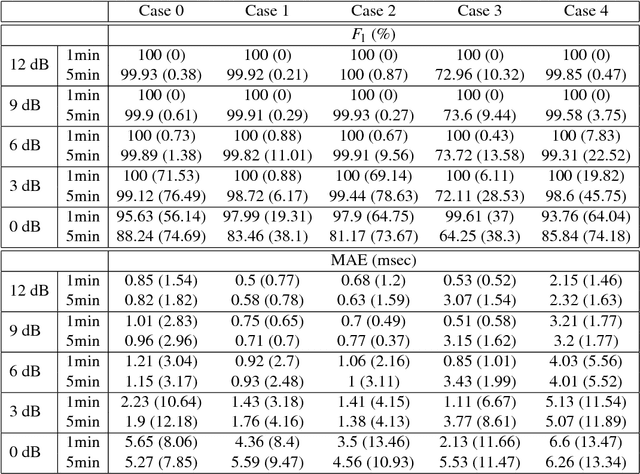

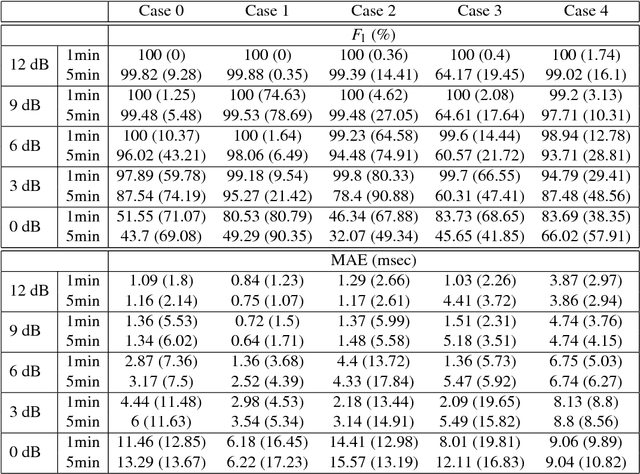

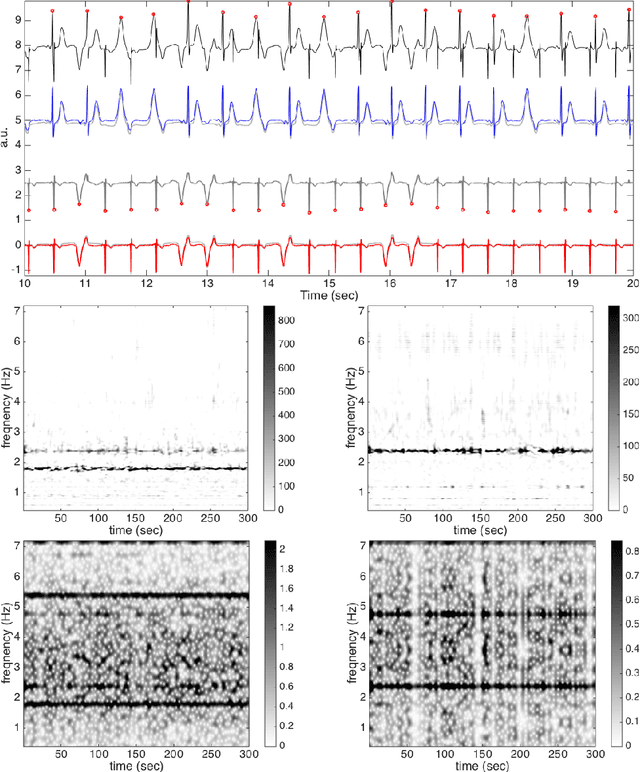

Abstract: Detecting multiple fundamental frequencies and separating sources in a single-channel signal that contains multiple oscillatory components and nonstationary noise are both challenging tasks. To extract the fetal electrocardiogram (ECG) from a single-lead maternal abdominal ECG, we face both challenges. In this paper, we propose a novel method to extract the fetal ECG signal from the single-channel maternal abdominal ECG signal without any additional measurement. The algorithm is composed of three main ingredients. First, the maternal and fetal heart rates are estimated by the de-shape short-time Fourier transform, a recently proposed nonlinear time-frequency analysis technique; second, a beat tracking technique is applied to accurately obtain the maternal and fetal R peaks; third, the maternal and fetal ECG waveforms are established by the nonlocal median. The algorithm is evaluated on a simulated fetal ECG signal database (the *fecgsyn* database) and tested on two real databases with expert-provided annotations (the *adfecgdb* and *CinC2013* databases). More generally, the algorithm could be applied to other detection and source separation problems, and to reconstruct the time-varying wave-shape function of each oscillatory component.
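
The third ingredient can be illustrated with a simplified sketch of a nonlocal median: each beat is replaced by the pointwise median of its most similar beats. This is an assumption-laden toy version, not the authors' code. The function name `nonlocal_median`, the fixed window `half_width`, the neighbor count `k`, and the Euclidean similarity measure are all hypothetical choices, and the R peak locations are taken as given.

```python
import numpy as np

def nonlocal_median(signal, r_peaks, half_width, k):
    """Estimate one waveform per beat from its k most similar beats.

    signal: 1-D array; r_peaks: sample indices of detected R peaks.
    Peaks closer than half_width to either end of the signal are skipped.
    """
    peaks = [p for p in r_peaks
             if p - half_width >= 0 and p + half_width < len(signal)]
    # One fixed-length segment per beat: shape (n_beats, 2 * half_width + 1).
    beats = np.stack([signal[p - half_width:p + half_width + 1] for p in peaks])
    # Pairwise Euclidean distances between beats: shape (n_beats, n_beats).
    dist = np.linalg.norm(beats[:, None, :] - beats[None, :, :], axis=-1)
    # Indices of the k nearest beats (each beat is its own nearest neighbor).
    nearest = np.argsort(dist, axis=1)[:, :k]
    # Pointwise median over the k neighbors of each beat.
    templates = np.median(beats[nearest], axis=1)
    return np.array(peaks), templates

# Synthetic example: five identical triangular "beats", one hit by a spike.
pulse = np.array([0, 1, 2, 3, 4, 5, 4, 3, 2, 1, 0], dtype=float)
signal = np.zeros(100)
r_peaks = [10, 30, 50, 70, 90]
for p in r_peaks:
    signal[p - 5:p + 6] = pulse
signal[32] += 10.0  # artifact inside the second beat

kept_peaks, templates = nonlocal_median(signal, r_peaks, half_width=5, k=3)
```

Because the median is taken across similar beats rather than along time, the isolated spike in the second beat is outvoted by its clean neighbors, while the shared wave shape is preserved.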
