Stephen Nogar

Robust Active Visual Perching with Quadrotors on Inclined Surfaces

Apr 05, 2022

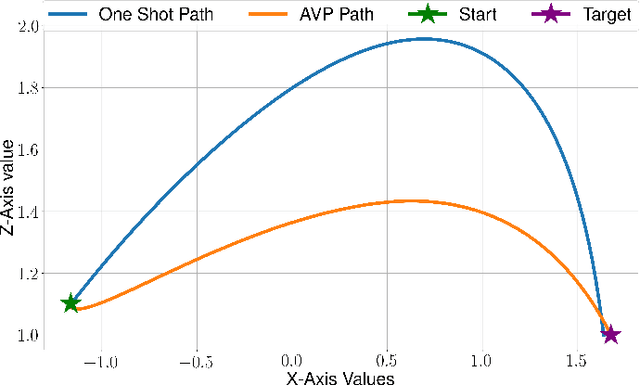

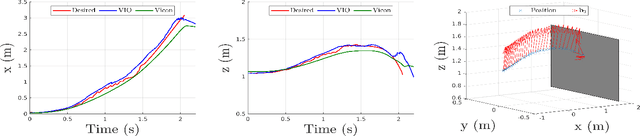

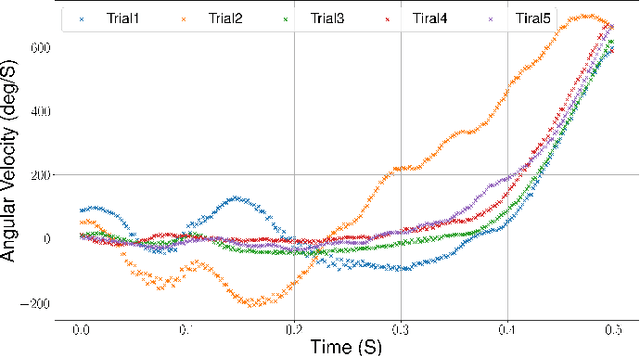

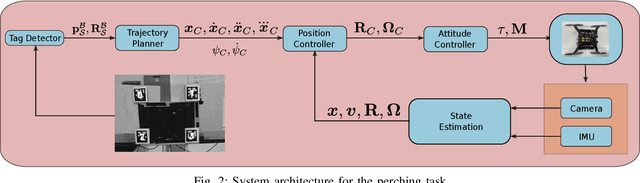

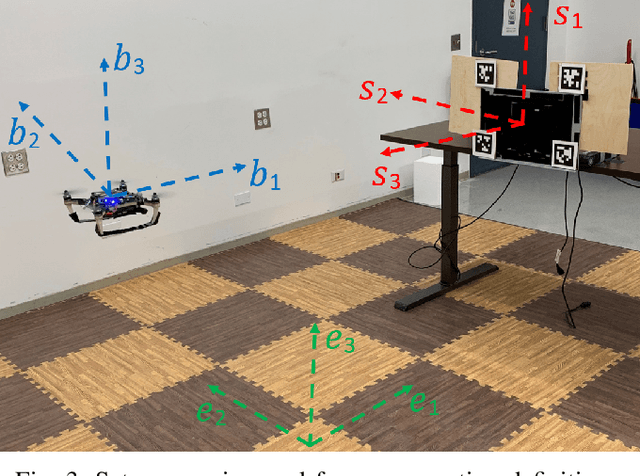

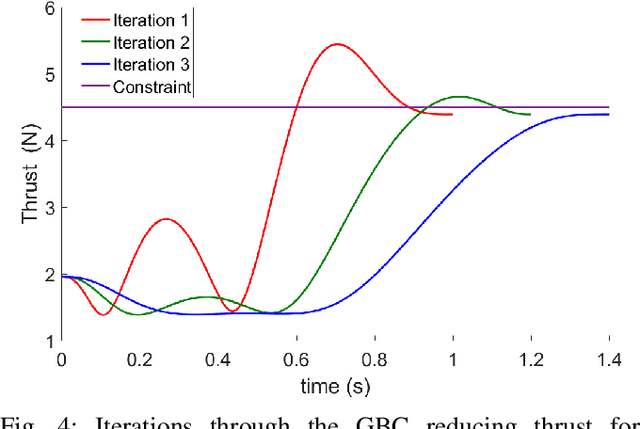

Abstract: Autonomous Micro Aerial Vehicles are deployed for a variety of tasks, including surveillance and monitoring. Perching and staring allow the vehicle to monitor targets without flying, saving battery power and increasing overall mission time without the need to frequently replace batteries. This paper addresses the Active Visual Perching (AVP) control problem to autonomously perch on inclined surfaces up to $90^\circ$. Our approach generates dynamically feasible trajectories to navigate and perch on a desired target location while taking into account actuator and Field of View (FoV) constraints. By replanning in mid-flight, we take advantage of more accurate target localization, increasing the perching maneuver's robustness to target localization or control errors. We leverage the Karush-Kuhn-Tucker (KKT) conditions to identify the compatibility between planning objectives and the visual sensing constraint during the planned maneuver. Furthermore, we experimentally identify the corresponding boundary conditions that maximize the spatio-temporal target visibility during the perching maneuver. The proposed approach runs on board in real time under significant computational constraints, relying exclusively on cameras and an Inertial Measurement Unit (IMU). Experimental results validate the proposed approach and show a higher success rate, as well as increased target interception precision and accuracy, with respect to a one-shot planning approach, while still retaining aggressive capabilities with flight envelopes that include large excursions from the hover position on inclined surfaces up to $90^\circ$, angular speeds up to 750 deg/s, and accelerations up to 10 m/s$^2$.
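
The abstract refers to FoV constraints and to maximizing spatio-temporal target visibility, but it does not give the camera model. The sketch below shows one simple way such visibility could be quantified, assuming a conical field of view of fixed half-angle around the camera's optical axis and a trajectory given as sampled positions and world-from-body rotation matrices. It is a generic illustration, not the authors' method; the function names (`in_conical_fov`, `visibility_fraction`, `rot_y`) and the forward-facing camera axis are hypothetical.

```python
import numpy as np


def rot_y(theta):
    """Rotation matrix for a rotation of theta radians about the y axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def in_conical_fov(p_robot, R_wb, p_target, cam_axis_body, half_angle_rad):
    """True if p_target lies inside a cone of half-angle half_angle_rad around
    the camera optical axis (assumed model; axis given in the body frame)."""
    axis_w = R_wb @ cam_axis_body          # optical axis expressed in the world frame
    d = p_target - p_robot                 # robot-to-target vector
    denom = np.linalg.norm(d) * np.linalg.norm(axis_w)
    if denom == 0.0:
        return False
    cos_angle = float(axis_w @ d) / denom  # cosine of the angle between axis and target
    return bool(cos_angle >= np.cos(half_angle_rad))


def visibility_fraction(positions, rotations, p_target, cam_axis_body, half_angle_rad):
    """Fraction of sampled trajectory states from which the target is inside the FoV."""
    flags = [
        in_conical_fov(p, R, p_target, cam_axis_body, half_angle_rad)
        for p, R in zip(positions, rotations)
    ]
    return sum(flags) / len(flags)


if __name__ == "__main__":
    p_target = np.array([2.0, 0.0, 0.0])
    cam_axis = np.array([1.0, 0.0, 0.0])     # hypothetical forward-facing camera
    half_angle = np.deg2rad(45.0)
    positions = [np.zeros(3), np.zeros(3)]
    # Level attitude, then rotated 90 degrees about y so the camera points straight down.
    rotations = [np.eye(3), rot_y(np.pi / 2)]
    print(visibility_fraction(positions, rotations, p_target, cam_axis, half_angle))  # 0.5
```

A steep attitude change such as the one needed to perch on a vertical surface can push the target out of a body-fixed camera's view, which is why a visibility measure like this is worth evaluating along the whole maneuver rather than only at its endpoints.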

Aggressive Visual Perching with Quadrotors on Inclined Surfaces

Jul 23, 2021

Abstract: Autonomous Micro Aerial Vehicles (MAVs) have the potential to be employed for surveillance and monitoring tasks. By perching and staring at one or multiple locations, aerial robots can save energy and increase their overall mission time, since they do not need to fly actively. In this paper, we address the estimation, planning, and control problems for autonomous perching on inclined surfaces with small quadrotors using visual and inertial sensing. We focus on planning and executing dynamically feasible trajectories to navigate and perch on a desired target location with on-board sensing and computation. Our planner also supports certain classes of nonlinear global constraints by leveraging an efficient algorithm that we have mathematically verified. The on-board cameras and IMU are used concurrently for state estimation and to infer the relative robot/target localization. The proposed solution runs in real time on board a limited computational unit. Experimental results validate the proposed approach by tackling aggressive perching maneuvers with flight envelopes that include large excursions from the hover position on inclined surfaces up to $90^\circ$, angular rates up to 600 deg/s, and accelerations up to 10 m/s$^2$.
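
The abstract does not say which trajectory representation the planner uses. As a minimal sketch, assuming one quintic polynomial per axis with position, velocity, and acceleration boundary conditions, the code below builds such a trajectory and applies a deliberately simplified feasibility test: peak acceleration magnitude must stay below a bound. Real quadrotor feasibility also involves thrust and angular-rate limits, which are not modeled here. The 10 m/s$^2$ bound is borrowed from the abstract only as an example value, and all function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial


def quintic_coeffs(p0, v0, a0, pf, vf, af, T):
    """Coefficients c0..c5 of p(t) = sum_k c_k t^k that match position,
    velocity and acceleration at t = 0 and t = T (one axis)."""
    A = np.array([
        [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],                 # p(0)
        [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],                 # p'(0)
        [0.0, 0.0, 2.0, 0.0, 0.0, 0.0],                 # p''(0)
        [1.0, T, T**2, T**3, T**4, T**5],               # p(T)
        [0.0, 1.0, 2 * T, 3 * T**2, 4 * T**3, 5 * T**4],  # p'(T)
        [0.0, 0.0, 2.0, 6 * T, 12 * T**2, 20 * T**3],   # p''(T)
    ])
    b = np.array([p0, v0, a0, pf, vf, af], dtype=float)
    return np.linalg.solve(A, b)


def max_accel_norm(p0, v0, a0, pf, vf, af, T, n=200):
    """Peak acceleration magnitude of the per-axis quintics, estimated on n samples."""
    ts = np.linspace(0.0, T, n)
    acc = np.stack(
        [
            Polynomial(quintic_coeffs(p0[i], v0[i], a0[i], pf[i], vf[i], af[i], T)).deriv(2)(ts)
            for i in range(len(p0))
        ],
        axis=1,
    )
    return float(np.max(np.linalg.norm(acc, axis=1)))


def is_accel_feasible(p0, v0, a0, pf, vf, af, T, a_max):
    """Simplified feasibility test: acceleration magnitude never exceeds a_max."""
    return max_accel_norm(p0, v0, a0, pf, vf, af, T) <= a_max


if __name__ == "__main__":
    zero = np.zeros(3)
    goal = np.array([1.0, 0.0, 0.0])
    # Rest-to-rest move of 1 m along x; coefficients close to [0, 0, 0, 10, -15, 6].
    print(quintic_coeffs(0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 1.0))
    print(is_accel_feasible(zero, zero, zero, goal, zero, zero, 1.0, a_max=10.0))  # True
    print(is_accel_feasible(zero, zero, zero, goal, zero, zero, 0.5, a_max=10.0))  # False
```

For a rest-to-rest move, peak acceleration scales with $1/T^2$, so halving the duration of this 1 m move raises it from about 5.8 to about 23 m/s$^2$ and the example bound is violated.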

A Research Platform for Multi-Robot Dialogue with Humans

Oct 12, 2019

Abstract: This paper presents a research platform that supports spoken dialogue interaction with multiple robots. The demonstration showcases our MultiBot testing scenario, in which users can verbally issue search, navigate, and follow instructions to two robotic teammates: a simulated ground robot and an aerial robot. This flexible language and robotic platform takes advantage of existing tools for speech recognition and dialogue management that are compatible with new domains, and implements an inter-agent communication protocol (tactical behavior specification) in which verbal instructions are encoded for tasks assigned to the appropriate robot.
