Stephan Alexander Braun

ExAID: A Multimodal Explanation Framework for Computer-Aided Diagnosis of Skin Lesions

Jan 04, 2022

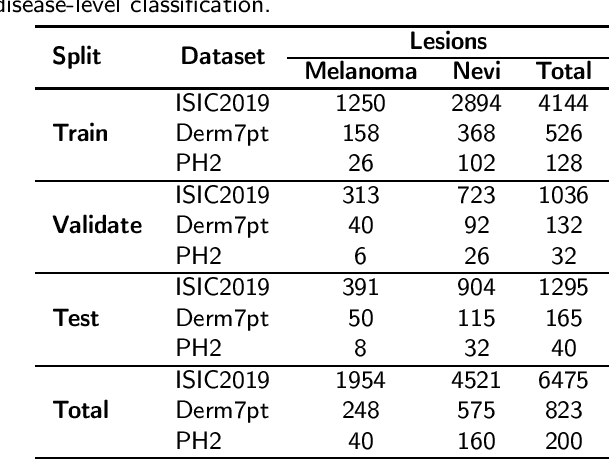

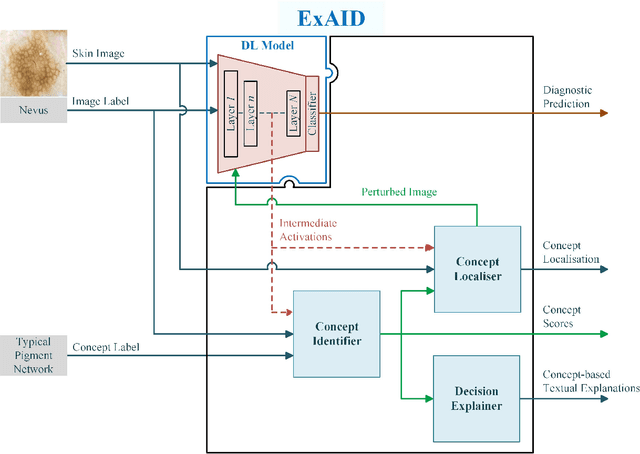

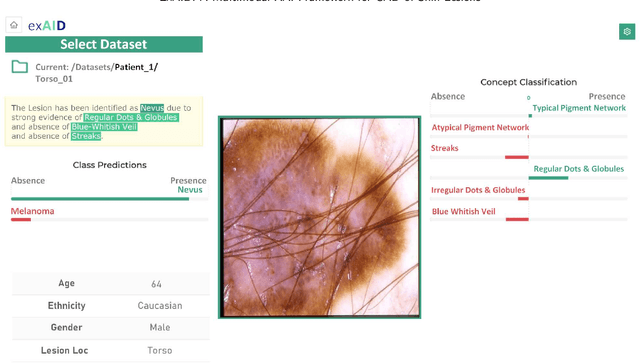

Abstract: One principal impediment in the successful deployment of AI-based Computer-Aided Diagnosis (CAD) systems in clinical workflows is their lack of transparent decision making. Although commonly used eXplainable AI methods provide some insight into opaque algorithms, such explanations are usually convoluted and not readily comprehensible except by highly trained experts. The explanation of decisions regarding the malignancy of skin lesions from dermoscopic images demands particular clarity, as the underlying medical problem definition is itself ambiguous. This work presents ExAID (Explainable AI for Dermatology), a novel framework for biomedical image analysis, providing multi-modal concept-based explanations consisting of easy-to-understand textual explanations supplemented by visual maps justifying the predictions. ExAID relies on Concept Activation Vectors to map human concepts to those learnt by arbitrary Deep Learning models in latent space, and Concept Localization Maps to highlight concepts in the input space. This identification of relevant concepts is then used to construct fine-grained textual explanations supplemented by concept-wise location information to provide comprehensive and coherent multi-modal explanations. All information is comprehensively presented in a diagnostic interface for use in clinical routines. An educational mode provides dataset-level explanation statistics and tools for data and model exploration to aid medical research and education. Through rigorous quantitative and qualitative evaluation of ExAID, we show the utility of multi-modal explanations for CAD-assisted scenarios even in cases of wrong predictions. We believe that ExAID will provide dermatologists with an effective screening tool that they both understand and trust. Moreover, it will be the basis for similar applications in other biomedical imaging fields.
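To make the idea of a Concept Activation Vector concrete, the following is a minimal sketch, not ExAID's actual implementation. It assumes the common formulation in which a CAV is the unit normal of a linear classifier that separates latent activations of concept examples from activations of random examples. The function name `train_cav`, the synthetic activations, and the hyperparameters are all hypothetical.

```python
import numpy as np

def train_cav(concept_acts, random_acts, lr=0.1, steps=500):
    """Fit a logistic-regression separator and return its unit normal (the CAV)."""
    X = np.vstack([concept_acts, random_acts])
    y = np.concatenate([np.ones(len(concept_acts)), np.zeros(len(random_acts))])
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))   # predicted P(concept)
        w -= lr * (X.T @ (p - y) / len(y))       # gradient step on the weights
        b -= lr * np.mean(p - y)                 # gradient step on the bias
    return w / np.linalg.norm(w)

# Synthetic stand-in for latent activations (assumption: 16-dimensional layer).
rng = np.random.default_rng(0)
direction = np.zeros(16)
direction[3] = 1.0                               # hypothetical "concept" axis
random_acts = rng.normal(size=(200, 16))
concept_acts = rng.normal(size=(200, 16)) + 2.0 * direction
cav = train_cav(concept_acts, random_acts)
```

In a real setting the two activation matrices would come from a chosen layer of the trained classifier, evaluated on images with and without the annotated dermoscopic concept.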

On Interpretability of Deep Learning based Skin Lesion Classifiers using Concept Activation Vectors

May 05, 2020

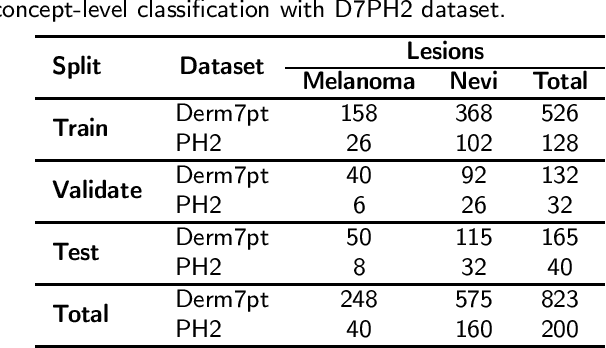

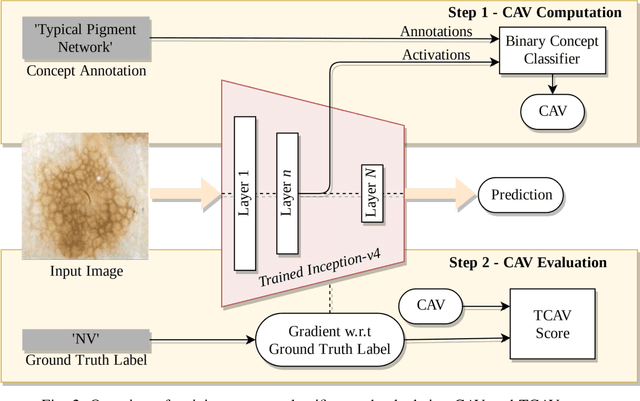

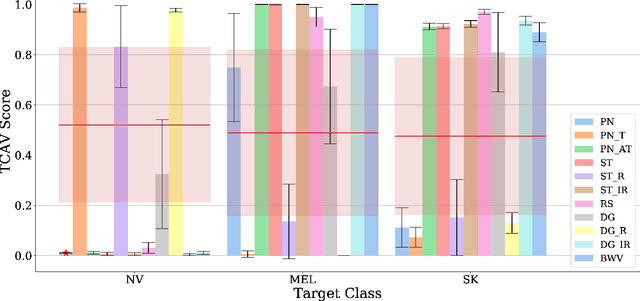

Abstract: Deep learning based medical image classifiers have shown remarkable prowess in various application areas like ophthalmology, dermatology, pathology, and radiology. However, the acceptance of these Computer-Aided Diagnosis (CAD) systems in real clinical setups is severely limited, primarily because their decision-making process remains largely obscure. This work aims at elucidating a deep learning based medical image classifier by verifying that the model learns and utilizes similar disease-related concepts as described and employed by dermatologists. We used a well-trained and high performing neural network developed by the REasoning for COmplex Data (RECOD) Lab for classification of three skin tumours, i.e. Melanocytic Naevi, Melanoma and Seborrheic Keratosis, and performed a detailed analysis on its latent space. Two well established and publicly available skin disease datasets, PH2 and derm7pt, are used for experimentation. Human understandable concepts are mapped to the RECOD image classification model with the help of Concept Activation Vectors (CAVs), introducing a novel training and significance testing paradigm for CAVs. Our results on an independent evaluation set clearly show that the classifier learns and encodes human understandable concepts in its latent representation. Additionally, TCAV scores (Testing with CAVs) suggest that the neural network indeed makes use of disease-related concepts in the correct way when making predictions. We anticipate that this work can not only increase the confidence of medical practitioners in CAD but also serve as a stepping stone for further development of CAV-based neural network interpretation methods.
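As a rough illustration of what a TCAV score measures, the sketch below assumes the usual definition: the fraction of inputs of a class whose class logit increases when the latent activation moves along the CAV direction. It does not reproduce the paper's own training or significance testing paradigm. The function name `tcav_score` and the toy gradient values are hypothetical.

```python
import numpy as np

def tcav_score(logit_grads, cav):
    """Fraction of examples whose class logit increases along the CAV direction."""
    directional_derivs = logit_grads @ cav       # one directional derivative per example
    return float(np.mean(directional_derivs > 0))

# Toy values (assumption): gradients of a class logit with respect to a
# 3-dimensional latent activation, one row per input image.
cav = np.array([1.0, 0.0, 0.0])
grads = np.array([
    [0.5, 0.1, -0.2],
    [0.3, -0.4, 0.0],
    [-0.1, 0.2, 0.3],
    [0.2, 0.0, 0.1],
])
score = tcav_score(grads, cav)                   # 3 of 4 derivatives are positive
```

A score near 1 would suggest that the concept pushes predictions towards the class for most inputs, while a score near 0 would suggest the opposite; in practice such scores are typically compared against CAVs trained on random data before drawing conclusions.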
