Sravanthi Rajanala

Session Context Embedding for Intent Understanding in Product Search

Jun 03, 2024

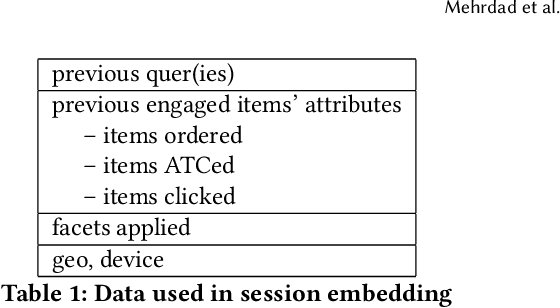

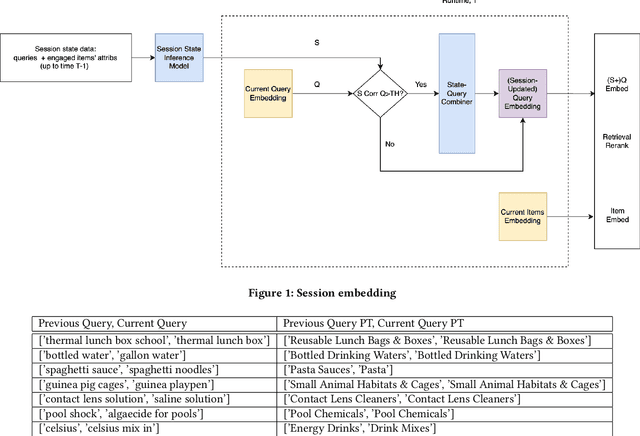

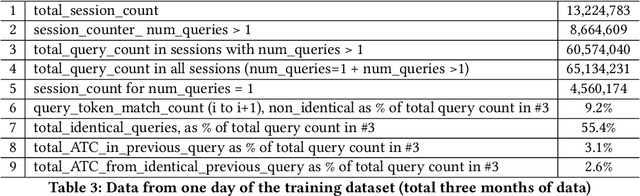

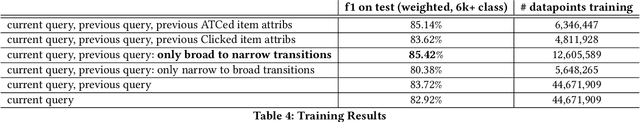

Abstract: It is often noted that relevance training on single query-item pairs does not capture customer intent in search. User intent can be better deduced from a series of engagements (clicks, add-to-carts (ATCs), orders) in a given search session. We propose a novel method for vectorizing session context so that it can be captured and used in retrieval and reranking. At runtime, the session embedding is an alternative to the query embedding: it is saved and updated after each request in the session, and it can be used for retrieval and ranking. We outline how session embedding addresses session-based intent understanding and describe its architecture, review the background to this line of thought in search and recommendation, detail the methodologies implemented, and finally present the results of an implementation of session embedding for query product type classification. We demonstrate improvements in user intent understanding over strategies that ignore session context at runtime.
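
The abstract says only that the session embedding is saved and updated after each request; it does not give the update rule. As a rough illustration of that save-and-update loop, the sketch below blends the stored vector with each new request vector using an exponential moving average. The EMA rule, the `decay` parameter, and the function name `update_session_embedding` are assumptions made for this example, not the paper's method.

```python
from typing import List, Optional

Vector = List[float]


def update_session_embedding(
    session: Optional[Vector], request: Vector, decay: float = 0.5
) -> Vector:
    """Blend the stored session vector with the newest request vector.

    Hypothetical update rule (exponential moving average); the paper's
    actual rule is not stated in the abstract.
    """
    if session is None:
        # First request in the session: start from the request vector itself.
        return list(request)
    return [decay * s + (1.0 - decay) * r for s, r in zip(session, request)]


# Usage: carry the stored vector across the requests of one session.
state: Optional[Vector] = None
for request_vector in ([1.0, 0.0], [0.0, 1.0]):
    state = update_session_embedding(state, request_vector)
```

With an update of this shape, later requests shift the stored vector toward recent engagements while earlier ones still contribute, which is one simple way to keep session context available for retrieval and ranking.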

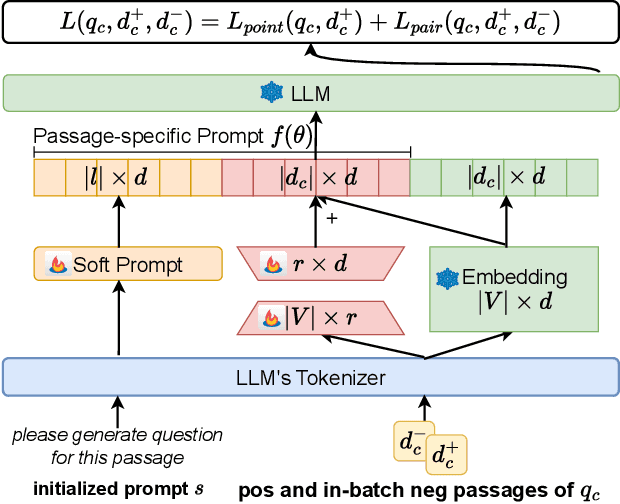

Passage-specific Prompt Tuning for Passage Reranking in Question Answering with Large Language Models

May 31, 2024

Abstract: Effective passage retrieval and reranking methods have been widely used to identify suitable candidates in open-domain question answering tasks. Recent studies have turned to LLMs to rerank the retrieved passages by the log-likelihood of the question conditioned on each passage. Although these methods have demonstrated promising results, their performance is notably sensitive to the human-written prompt (or hard prompt), and fine-tuning LLMs can be computationally intensive and time-consuming. Furthermore, this approach limits the use of question-passage relevance pairs and passage-specific knowledge to enhance the ranking capabilities of LLMs. In this paper, we propose passage-specific prompt tuning for reranking in open-domain question answering (PSPT): a parameter-efficient method that fine-tunes learnable passage-specific soft prompts, incorporating passage-specific knowledge from a limited set of question-passage relevance pairs. The method ranks retrieved passages by the log-likelihood of the model generating the question conditioned on each passage and the learned soft prompt. We conducted extensive experiments using the Llama-2-chat-7B model across three publicly available open-domain question answering datasets, and the results demonstrate the effectiveness of the proposed approach.
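
The ranking rule described above, sorting passages by the log-likelihood of the question given each passage, can be sketched without an LLM. In the minimal example below, `toy_log_likelihood` is a stand-in scorer (an add-one-smoothed unigram model) and is purely an assumption for illustration; in PSPT the score would come from the LLM conditioned on the passage and the learned soft prompt. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import Callable, List


def toy_log_likelihood(question: str, passage: str) -> float:
    """Stand-in scorer: add-one-smoothed unigram log P(question | passage).

    This replaces the LLM used in the paper and is only for illustration.
    """
    q_tokens = question.lower().split()
    p_tokens = passage.lower().split()
    counts = Counter(p_tokens)
    vocab_size = len(set(p_tokens) | set(q_tokens))
    total = len(p_tokens)
    return sum(
        math.log((counts[tok] + 1) / (total + vocab_size)) for tok in q_tokens
    )


def rerank(
    question: str,
    passages: List[str],
    scorer: Callable[[str, str], float] = toy_log_likelihood,
) -> List[str]:
    """Order passages from highest to lowest question log-likelihood."""
    return sorted(passages, key=lambda p: scorer(question, p), reverse=True)


# Usage: the passage that better explains the question moves to the front.
ranked = rerank(
    "capital of france",
    ["bananas are yellow fruit", "paris is the capital of france"],
)
```

Because `rerank` takes the scorer as a parameter, a model-based log-likelihood function could be substituted without changing the sorting logic.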

Q-PEFT: Query-dependent Parameter Efficient Fine-tuning for Text Reranking with Large Language Models

Apr 12, 2024

Abstract: Parameter Efficient Fine-Tuning (PEFT) methods have been extensively used in Large Language Models (LLMs) to improve downstream tasks without the cost of fine-tuning the whole LLM. Recent studies have shown how to effectively use PEFT for fine-tuning LLMs in ranking tasks with convincing performance; however, there are some limitations, including the learned prompt being fixed for different documents, overfitting to specific tasks, and low adaptation ability. In this paper, we introduce a query-dependent parameter efficient fine-tuning (Q-PEFT) approach for text reranking that leaks information about the true queries to the LLM and thereby makes generating the true queries from input documents much easier. Specifically, we use the query to extract the top-$k$ tokens from the concatenated documents, which serve as contextual clues. We further augment Q-PEFT by substituting the retrieval mechanism with a multi-head attention layer to achieve end-to-end training and cover all the tokens in the documents, guiding the LLM to generate more document-specific synthetic queries and thereby further improving reranking performance. Extensive experiments on four public datasets demonstrate the effectiveness of our proposed approach.
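
The abstract does not say how the query selects the top-$k$ document tokens. One plausible reading, sketched below, scores each document token by its highest cosine similarity to any query token and keeps the $k$ best. The scoring rule, the toy `embeddings` table, and the function names are all assumptions for illustration and should not be read as the Q-PEFT implementation.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_tokens(
    query_tokens: List[str],
    doc_tokens: List[str],
    embeddings: Dict[str, Vector],
    k: int,
) -> List[str]:
    """Return the k document tokens most similar to any query token."""
    scores: Dict[str, float] = {}
    for tok in doc_tokens:
        if tok not in embeddings:
            continue  # skip tokens without a vector in this toy table
        scores[tok] = max(
            (
                cosine(embeddings[tok], embeddings[q])
                for q in query_tokens
                if q in embeddings
            ),
            default=0.0,
        )
    ranked = sorted(scores, key=lambda t: scores[t], reverse=True)
    return ranked[:k]


# Usage with a hypothetical two-dimensional embedding table.
embeddings = {
    "price": [1.0, 0.0],
    "cost": [0.9, 0.1],
    "cheap": [0.8, 0.2],
    "banana": [0.0, 1.0],
}
clues = top_k_tokens(["price"], ["banana", "cost", "cheap"], embeddings, k=2)
```

The selected tokens play the role of the contextual clues mentioned in the abstract; the attention-based variant the authors describe would replace this hard selection with a learned, differentiable one.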
