Sourav Sanyal

Real-Time Neuromorphic Navigation: Guiding Physical Robots with Event-Based Sensing and Task-Specific Reconfigurable Autonomy Stack

Mar 11, 2025

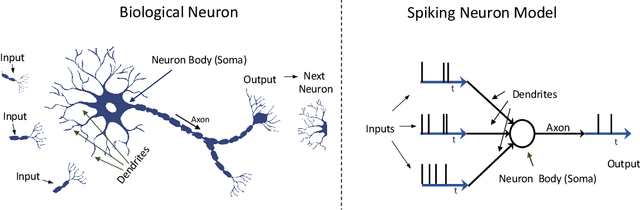

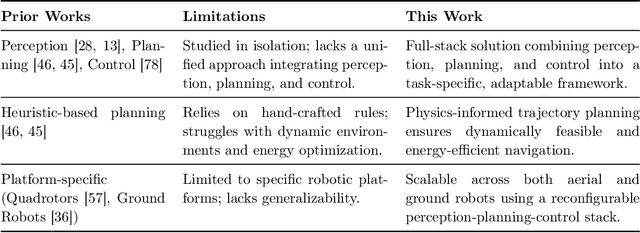

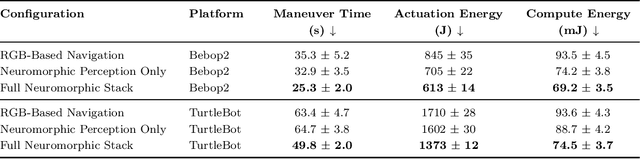

Abstract: Neuromorphic vision, inspired by biological neural systems, has recently gained significant attention for its potential in enhancing robotic autonomy. This paper presents a systematic exploration of a proposed Neuromorphic Navigation framework that uses event-based neuromorphic vision to enable efficient, real-time navigation in robotic systems. We discuss the core concepts of neuromorphic vision and navigation, highlighting their impact on improving robotic perception and decision-making. The proposed reconfigurable Neuromorphic Navigation framework adapts to the specific needs of both ground robots (Turtlebot) and aerial robots (Bebop2 quadrotor), addressing the task-specific design requirements (algorithms) for optimal performance across the autonomous navigation stack -- Perception, Planning, and Control. We demonstrate the versatility and the effectiveness of the framework through two case studies: a Turtlebot performing local replanning for real-time navigation and a Bebop2 quadrotor navigating through moving gates. Our work provides a scalable approach to task-specific, real-time robot autonomy leveraging neuromorphic systems, paving the way for energy-efficient autonomous navigation.

Energy-Efficient Autonomous Aerial Navigation with Dynamic Vision Sensors: A Physics-Guided Neuromorphic Approach

Feb 09, 2025

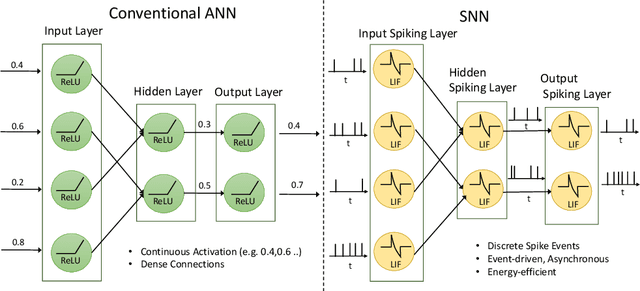

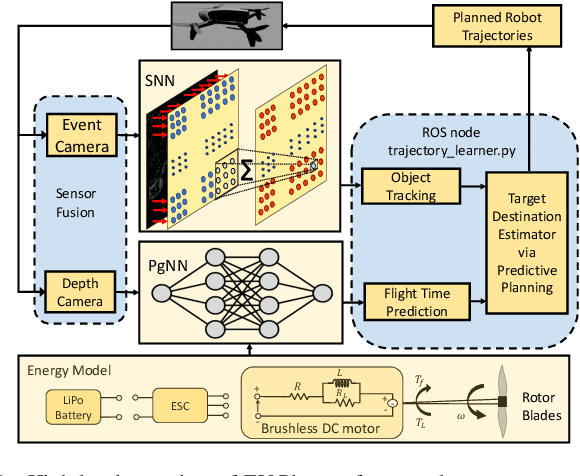

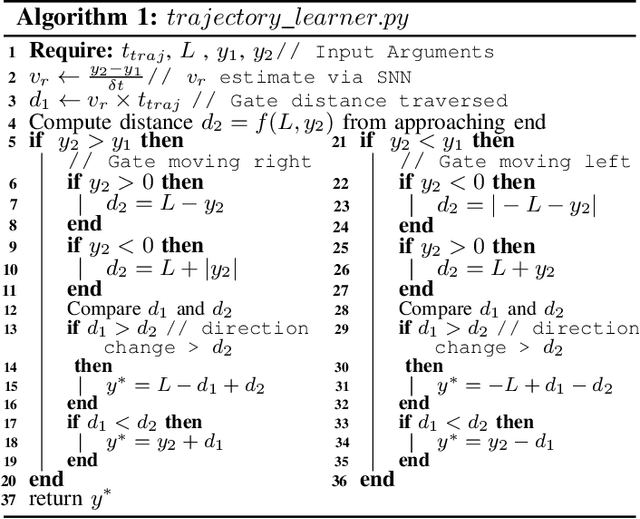

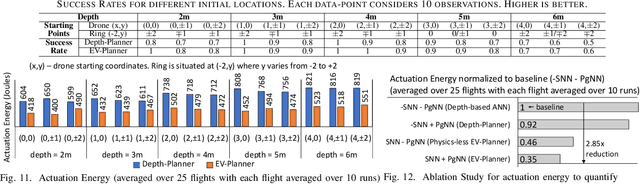

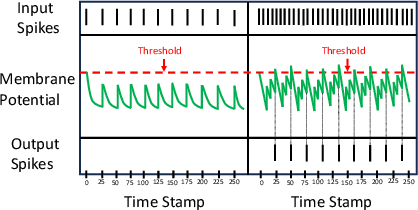

Abstract: Vision-based object tracking is a critical component for achieving autonomous aerial navigation, particularly for obstacle avoidance. Neuromorphic Dynamic Vision Sensors (DVS) or event cameras, inspired by biological vision, offer a promising alternative to conventional frame-based cameras. These cameras can detect changes in intensity asynchronously, even in challenging lighting conditions, with a high dynamic range and resistance to motion blur. Spiking neural networks (SNNs) are increasingly used to process these event-based signals efficiently and asynchronously. Meanwhile, physics-based artificial intelligence (AI) provides a means to incorporate system-level knowledge into neural networks via physical modeling. This enhances robustness and energy efficiency and provides symbolic explainability. In this work, we present a neuromorphic navigation framework for autonomous drone navigation. The focus is on detecting and navigating through moving gates while avoiding collisions. We use event cameras for detecting moving objects through a shallow SNN architecture in an unsupervised manner. This is combined with a lightweight energy-aware physics-guided neural network (PgNN) trained with depth inputs to predict optimal flight times, generating near-minimum energy paths. The system is implemented in the Gazebo simulator and integrates a sensor-fused vision-to-planning neuro-symbolic framework built with the Robot Operating System (ROS) middleware. This work highlights the future potential of integrating event-based vision with physics-guided planning for energy-efficient autonomous navigation, particularly for low-latency decision-making.
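As a rough illustration of how an event camera reports intensity changes, the sketch below emits an event wherever the log-intensity difference between two snapshots crosses a threshold. This is a common idealized model, not the sensor or pipeline used in the paper: the function name `events_from_frames`, the `threshold` value, and the use of two discrete snapshots (real DVS pixels fire asynchronously) are all assumptions made for illustration.

```python
import math


def events_from_frames(prev, curr, threshold=0.2, eps=1e-6):
    """Idealized event generation between two intensity snapshots.

    prev, curr: 2D lists of non-negative intensities with the same shape.
    Returns a list of (x, y, polarity) tuples, polarity being +1 or -1.
    The threshold and eps values are illustrative assumptions.
    """
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (i_prev, i_curr) in enumerate(zip(row_prev, row_curr)):
            # Event pixels respond to changes in log intensity.
            delta = math.log(i_curr + eps) - math.log(i_prev + eps)
            if abs(delta) >= threshold:
                events.append((x, y, 1 if delta > 0 else -1))
    return events


prev_frame = [[1.0, 1.0], [1.0, 1.0]]
curr_frame = [[1.0, 2.0], [0.5, 1.0]]
events = events_from_frames(prev_frame, curr_frame)
```

Only the two pixels whose brightness changed produce events; static pixels produce no output, which is the property that keeps the downstream SNN workload sparse.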

Neuro-LIFT: A Neuromorphic, LLM-based Interactive Framework for Autonomous Drone FlighT at the Edge

Jan 31, 2025

Abstract: The integration of human-intuitive interactions into autonomous systems has been limited. Traditional Natural Language Processing (NLP) systems struggle with context and intent understanding, severely restricting human-robot interaction. Recent advancements in Large Language Models (LLMs) have transformed this dynamic, allowing for intuitive and high-level communication through speech and text, and bridging the gap between human commands and robotic actions. Additionally, autonomous navigation has emerged as a central focus in robotics research, with artificial intelligence (AI) increasingly being leveraged to enhance these systems. However, existing AI-based navigation algorithms face significant challenges in latency-critical tasks where rapid decision-making is essential. Traditional frame-based vision systems, while effective for high-level decision-making, suffer from high energy consumption and latency, limiting their applicability in real-time scenarios. Neuromorphic vision systems, combining event-based cameras and spiking neural networks (SNNs), offer a promising alternative by enabling energy-efficient, low-latency navigation. Despite their potential, real-world implementations of these systems, particularly on physical platforms such as drones, remain scarce. In this work, we present Neuro-LIFT, a real-time neuromorphic navigation framework implemented on a Parrot Bebop2 quadrotor. Leveraging an LLM for natural language processing, Neuro-LIFT translates human speech into high-level planning commands which are then autonomously executed using event-based neuromorphic vision and physics-driven planning. Our framework demonstrates its capabilities in navigating in a dynamic environment, avoiding obstacles, and adapting to human instructions in real time.

ASMA: An Adaptive Safety Margin Algorithm for Vision-Language Drone Navigation via Scene-Aware Control Barrier Functions

Sep 16, 2024

Abstract: In the rapidly evolving field of vision-language navigation (VLN), ensuring robust safety mechanisms remains an open challenge. Control barrier functions (CBFs) are efficient tools which guarantee safety by solving an optimal control problem. In this work, we consider the case of a teleoperated drone in a VLN setting, and add safety features by formulating a novel scene-aware CBF using ego-centric observations obtained through an RGB-D sensor. As a baseline, we implement a vision-language understanding module which uses the contrastive language image pretraining (CLIP) model to query about a user-specified (in natural language) landmark. Using the YOLO (You Only Look Once) object detector, the CLIP model is queried for verifying the cropped landmark, triggering downstream navigation. To improve navigation safety of the baseline, we propose ASMA -- an Adaptive Safety Margin Algorithm -- that crops the drone's depth map for tracking moving object(s) to perform scene-aware CBF evaluation on-the-fly. By identifying potential risky observations from the scene, ASMA enables real-time adaptation to unpredictable environmental conditions, ensuring optimal safety bounds on a VLN-powered drone's actions. Using the Robot Operating System (ROS) middleware on a Parrot Bebop2 quadrotor in the Gazebo environment, ASMA offers a 59.4% to 61.8% increase in success rates with insignificant 5.4% to 8.2% increases in trajectory lengths compared to the baseline CBF-less VLN while recovering from unsafe situations.
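To make the idea of a CBF safety filter concrete, here is a minimal sketch under strong simplifying assumptions: single-integrator dynamics (the control is the velocity), one circular obstacle, and the barrier h(x) = ||x - p_obs||^2 - margin^2. With a single affine constraint, the minimum-deviation QP has a closed-form solution. The function name `cbf_filter`, the `alpha` gain, and the fixed `margin` argument are hypothetical; ASMA's scene-aware formulation and its margin adaptation are not reproduced here.

```python
def cbf_filter(x, u_nom, p_obs, margin, alpha=1.0):
    """Minimally modify u_nom so that dh/dt + alpha * h >= 0.

    Assumes x_dot = u (single integrator) and a circular unsafe set of
    radius `margin` around p_obs. All names and defaults are illustrative.
    """
    d = [xi - pi for xi, pi in zip(x, p_obs)]
    h = sum(di * di for di in d) - margin ** 2       # barrier value
    a = [2.0 * di for di in d]                        # gradient of h
    slack = sum(ai * ui for ai, ui in zip(a, u_nom)) + alpha * h
    a_sq = sum(ai * ai for ai in a)
    if slack >= 0.0 or a_sq == 0.0:
        # Nominal command already satisfies the constraint
        # (or the gradient vanishes and no correction direction exists).
        return list(u_nom)
    # Project u_nom onto the constraint boundary a . u + alpha * h = 0.
    scale = slack / a_sq
    return [ui - scale * ai for ui, ai in zip(u_nom, a)]


# Drone at the origin commanded straight toward an obstacle at (1, 0).
safe_u = cbf_filter([0.0, 0.0], [1.0, 0.0], [1.0, 0.0], margin=0.5)
# Commanded away from the obstacle: the filter leaves the input unchanged.
free_u = cbf_filter([0.0, 0.0], [-1.0, 0.0], [1.0, 0.0], margin=0.5)
```

In this toy setup, enlarging `margin` makes the filter intervene earlier, which is the knob an adaptive-margin scheme would tune from scene observations.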

Real-Time Neuromorphic Navigation: Integrating Event-Based Vision and Physics-Driven Planning on a Parrot Bebop2 Quadrotor

Jul 01, 2024

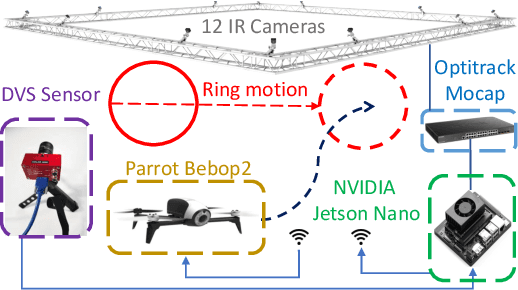

Abstract: In autonomous aerial navigation, real-time and energy-efficient obstacle avoidance remains a significant challenge, especially in dynamic and complex indoor environments. This work presents a novel integration of neuromorphic event cameras with physics-driven planning algorithms implemented on a Parrot Bebop2 quadrotor. Neuromorphic event cameras, characterized by their high dynamic range and low latency, offer significant advantages over traditional frame-based systems, particularly in poor lighting conditions or during high-speed maneuvers. We use a DVS camera with a shallow Spiking Neural Network (SNN) for event-based object detection of a moving ring in real-time in an indoor lab. Further, we enhance drone control with physics-guided empirical knowledge inside a neural network training mechanism, to predict energy-efficient flight paths to fly through the moving ring. This integration results in a real-time, low-latency navigation system capable of dynamically responding to environmental changes while minimizing energy consumption. We detail our hardware setup, control loop, and modifications necessary for real-world applications, including the challenges of sensor integration without burdening the flight capabilities. Experimental results demonstrate the effectiveness of our approach in achieving robust, collision-free, and energy-efficient flight paths, showcasing the potential of neuromorphic vision and physics-driven planning in enhancing autonomous navigation systems.

EV-Planner: Energy-Efficient Robot Navigation via Event-Based Physics-Guided Neuromorphic Planner

Jul 21, 2023

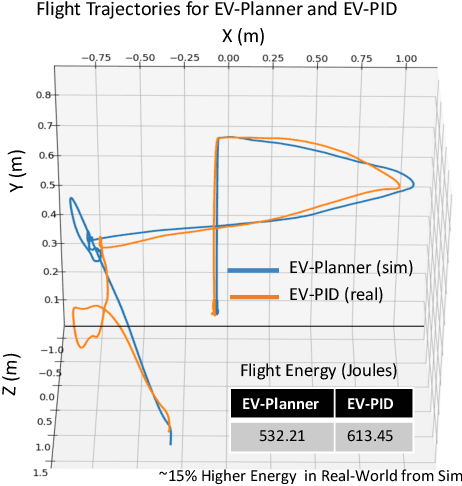

Abstract: Vision-based object tracking is an essential precursor to performing autonomous aerial navigation in order to avoid obstacles. Biologically inspired neuromorphic event cameras are emerging as a powerful alternative to frame-based cameras, due to their ability to asynchronously detect varying intensities (even in poor lighting conditions), high dynamic range, and robustness to motion blur. Spiking neural networks (SNNs) have gained traction for processing events asynchronously in an energy-efficient manner. On the other hand, physics-based artificial intelligence (AI) has gained prominence recently, as it enables embedding system knowledge via physical modeling inside traditional analog neural networks (ANNs). In this letter, we present an event-based physics-guided neuromorphic planner (EV-Planner) to perform obstacle avoidance using neuromorphic event cameras and physics-based AI. We consider the task of autonomous drone navigation where the mission is to detect moving gates and fly through them while avoiding a collision. We use event cameras to perform object detection using a shallow spiking neural network in an unsupervised fashion. Utilizing the physical equations of the brushless DC motors present in the drone rotors, we train a lightweight energy-aware physics-guided neural network with depth inputs. This predicts the optimal flight time responsible for generating near-minimum energy paths. We spawn the drone in the Gazebo simulator and implement a sensor-fused vision-to-planning neuro-symbolic framework using Robot Operating System (ROS). Simulation results for safe collision-free flight trajectories are presented with performance analysis and potential future research directions.

RAMP-Net: A Robust Adaptive MPC for Quadrotors via Physics-informed Neural Network

Sep 19, 2022

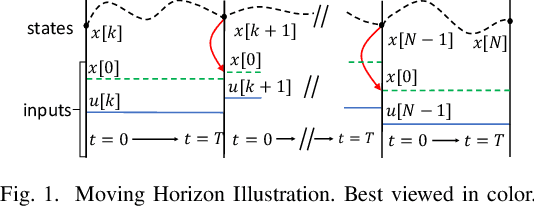

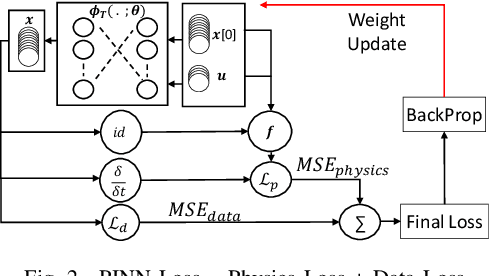

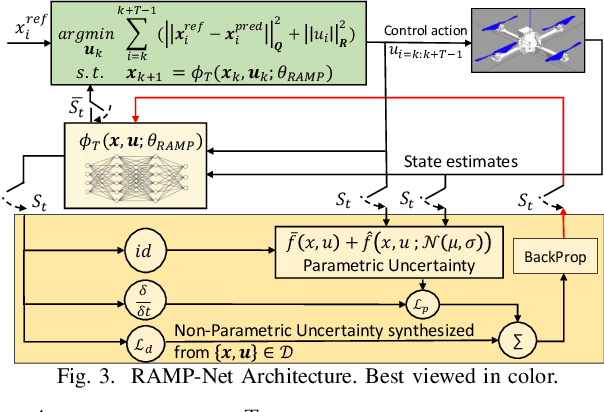

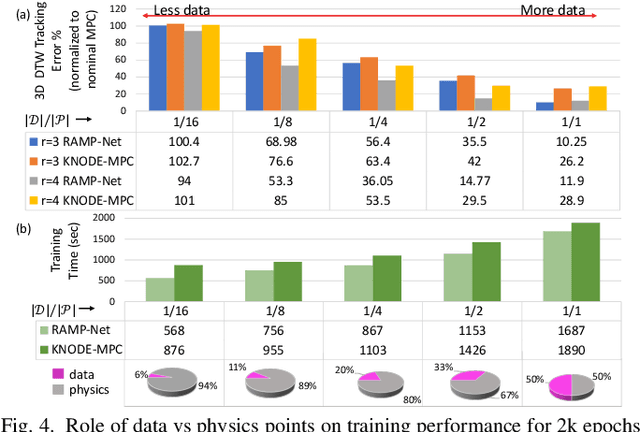

Abstract: Model Predictive Control (MPC) is a state-of-the-art (SOTA) control technique which requires solving hard constrained optimization problems iteratively. For uncertain dynamics, analytical model based robust MPC imposes additional constraints, increasing the hardness of the problem. The problem is exacerbated in performance-critical applications, when more compute is required in less time. Data-driven regression methods such as Neural Networks have been proposed in the past to approximate system dynamics. However, such models rely on high volumes of labeled data, in the absence of symbolic analytical priors. This incurs non-trivial training overheads. Physics-informed Neural Networks (PINNs) have gained traction for approximating non-linear systems of ordinary differential equations (ODEs), with reasonable accuracy. In this work, we propose a Robust Adaptive MPC framework via PINNs (RAMP-Net), which uses a neural network trained partly from simple ODEs and partly from data. A physics loss is used to learn simple ODEs representing ideal dynamics. Having access to analytical functions inside the loss function acts as a regularizer, enforcing robust behavior for parametric uncertainties. On the other hand, a regular data loss is used for adapting to residual disturbances (non-parametric uncertainties), unaccounted for during mathematical modelling. Experiments are performed in a simulated environment for trajectory tracking of a quadrotor. We report 7.8% to 43.2% and 8.04% to 61.5% reduction in tracking errors for speeds ranging from 0.5 to 1.75 m/s compared to two SOTA regression based MPC methods.
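The split between a physics loss and a data loss described above can be sketched as a weighted sum of two mean-squared errors. This is a toy illustration only: the one-dimensional ODE in `ideal_dynamics`, the drag coefficient, the loss weights, and all function names are assumptions, not RAMP-Net's actual quadrotor model or training code.

```python
def ideal_dynamics(v, u, drag=0.1):
    """Hypothetical ideal ODE used as the analytical prior: dv/dt = u - drag * v."""
    return u - drag * v


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def combined_loss(pred_dot, states, inputs, measured_dot,
                  w_phys=1.0, w_data=1.0):
    """Weighted sum of a physics loss and a data loss.

    pred_dot:     model-predicted state derivatives
    states/inputs: samples at which the ideal ODE is evaluated
    measured_dot: observed derivatives, which include residual disturbances
    """
    physics_target = [ideal_dynamics(v, u) for v, u in zip(states, inputs)]
    physics_loss = mse(pred_dot, physics_target)   # agreement with the ODE
    data_loss = mse(pred_dot, measured_dot)        # agreement with measurements
    return w_phys * physics_loss + w_data * data_loss


# Predictions match the ideal ODE exactly but miss a small disturbance.
loss = combined_loss(
    pred_dot=[1.0, 0.0],
    states=[0.0, 10.0],
    inputs=[1.0, 1.0],
    measured_dot=[1.0, 0.2],
)
```

Here the physics term is zero and the whole loss comes from the data term, so gradient steps would push the model toward the measured disturbance while the physics term keeps it anchored to the analytical prior.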
