Sonam Damani

AutoTask: Task Aware Multi-Faceted Single Model for Multi-Task Ads Relevance

Jul 09, 2024

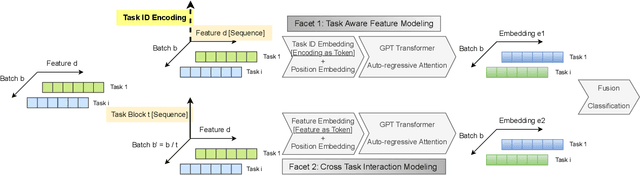

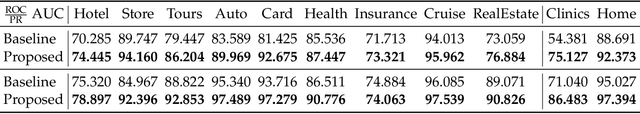

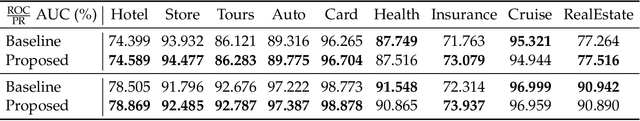

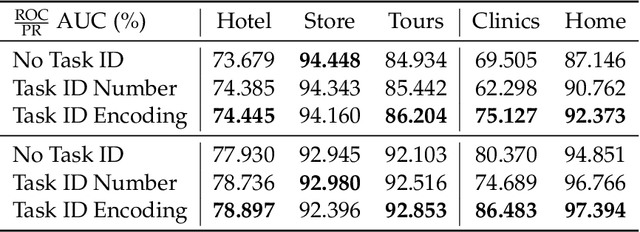

Abstract: Ads relevance models are crucial in determining the relevance between user search queries and ad offers, a problem often framed as classification. Modeling complexity increases significantly with multiple ad types and varying scenarios that exhibit both similarities and differences. In this work, we introduce a novel multi-faceted attention model that performs task-aware feature combination and cross-task interaction modeling. Our technique formulates the feature combination problem as "language" modeling with auto-regressive attention across both feature and task dimensions. Specifically, we introduce a new dimension of task ID encoding for task representations, thereby enabling precise relevance modeling across diverse ad scenarios with substantial improvement in generalization to unseen tasks. We demonstrate that our model not only effectively handles the increased computational and maintenance demands as scenarios proliferate, but also outperforms generalized DNN models and even task-specific models across a spectrum of ad applications using a single unified model.

LoPT: Low-Rank Prompt Tuning for Parameter Efficient Language Models

Jun 27, 2024

Abstract: In prompt tuning, a prefix or suffix text is added to the prompt, and the embeddings (soft prompts) or token indices (hard prompts) of the prefix/suffix are optimized to gain more control over language models for specific tasks. This approach eliminates the need for hand-crafted prompt engineering or explicit model fine-tuning. Prompt tuning is significantly more parameter-efficient than model fine-tuning, as it involves optimizing partial inputs of language models to produce desired outputs. In this work, we aim to further reduce the number of trainable parameters required for a language model to perform well on specific tasks. We propose Low-rank Prompt Tuning (LoPT), a low-rank model for prompts that achieves efficient prompt optimization. The proposed method achieves results similar to full-parameter prompt tuning while reducing the number of trainable parameters by a factor of 5. It also provides promising results compared to state-of-the-art methods that would require 10 to 20 times more parameters.
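To make the parameter saving concrete, here is a minimal sketch of how a low-rank soft prompt can shrink the trainable parameter count. It assumes the prompt matrix is factored as a product of two smaller matrices, which is one common low-rank form and not necessarily the parameterization used in LoPT. The function names and the sizes (20 prompt tokens, embedding width 768, rank 4) are hypothetical and not taken from the paper.

```python
# Illustrative parameter count for a low-rank soft prompt.
# Assumption: the soft prompt (n_tokens x d_model) is factored as U @ V, with
# U of shape (n_tokens x rank) and V of shape (rank x d_model). This is a
# generic low-rank form, not necessarily the one used in LoPT.


def full_prompt_params(n_tokens, d_model):
    """Trainable parameters when every prompt embedding entry is optimized."""
    return n_tokens * d_model


def low_rank_prompt_params(n_tokens, d_model, rank):
    """Trainable parameters when only the factors U and V are optimized."""
    return rank * (n_tokens + d_model)


def compose_prompt(u, v):
    """Rebuild the full prompt matrix from its factors (plain-Python matmul)."""
    rank = len(v)
    d_model = len(v[0])
    return [
        [sum(row[k] * v[k][j] for k in range(rank)) for j in range(d_model)]
        for row in u
    ]


if __name__ == "__main__":
    # Hypothetical sizes chosen only for illustration.
    n_tokens, d_model, rank = 20, 768, 4
    full = full_prompt_params(n_tokens, d_model)
    low = low_rank_prompt_params(n_tokens, d_model, rank)
    print(f"full prompt tuning: {full} parameters")
    print(f"low-rank factors:   {low} parameters")
    print(f"reduction factor:   {full / low:.2f}")
```

With these illustrative sizes the full prompt has 15,360 trainable values and the two factors have 3,152, a reduction of roughly 4.9 times. The actual ranks and prompt lengths behind the factor of 5 reported in the abstract are not stated here.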

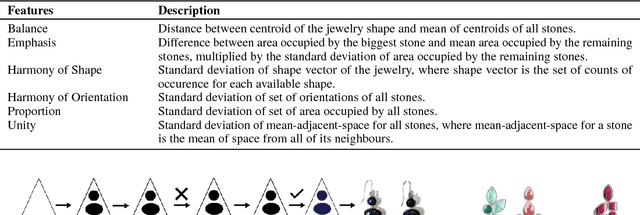

Using AI to Design Stone Jewelry

Nov 21, 2018

Abstract: Jewelry has been an integral part of human culture for ages. One of the most popular styles of jewelry is created by putting together precious and semi-precious stones in diverse patterns. While technology is finding its way into the production process of such jewelry, designing it remains a time-consuming and involved task. In this paper, we propose a unique approach using optimization methods coupled with machine learning techniques to generate novel stone jewelry designs at scale. Our evaluation shows that designs generated by our approach are highly likeable and visually appealing.

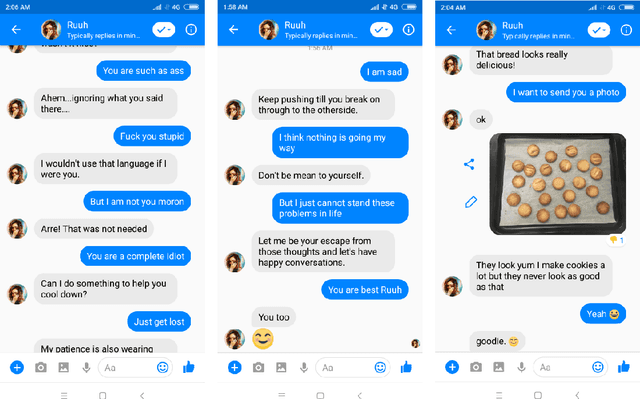

Ruuh: A Deep Learning Based Conversational Social Agent

Oct 22, 2018

Abstract: Dialogue systems and conversational agents are becoming increasingly popular in modern society, but building an agent capable of holding intelligent conversation with its users is a challenging problem for artificial intelligence. In this demo, we demonstrate a deep learning based conversational social agent called "Ruuh" (facebook.com/Ruuh) designed by a team at Microsoft India to converse on a wide range of topics. Ruuh needs to think beyond the utilitarian notion of merely generating "relevant" responses and meet a wider range of user social needs, such as expressing happiness when the user's favorite team wins or sharing a cute comment when shown pictures of the user's pet. The agent also needs to detect and respond to abusive language, sensitive topics, and trolling behavior from users. Many of these problems pose significant research challenges, which will be demonstrated in our demo. Our agent has interacted with over 2 million real-world users to date, generating over 150 million user conversations.
