Ankush Chatterjee

CARE: A QLoRA-Fine Tuned Multi-Domain Chatbot With Fast Learning On Minimal Hardware

Mar 18, 2025

Abstract: Large language models have demonstrated excellent domain-specific question-answering capabilities when fine-tuned on a dataset from that domain. However, fine-tuning these models requires significant training time and considerable hardware. In this work, we propose CARE (Customer Assistance and Response Engine), a lightweight model made by fine-tuning Phi3.5-mini on minimal hardware and data, designed to handle queries primarily across three domains: telecommunications support, medical support, and banking support. For telecommunications and banking, the chatbot addresses issues that customers in these domains regularly face. In the medical domain, CARE provides preliminary support by offering basic diagnoses and medical suggestions that a user might act on before consulting a healthcare professional. Since CARE is built on Phi3.5-mini, it can be used even on mobile devices, increasing its usability. Our research also shows that CARE performs relatively well on various medical benchmarks, indicating that it can be used to make basic medical suggestions.

Ruuh: A Deep Learning Based Conversational Social Agent

Oct 22, 2018

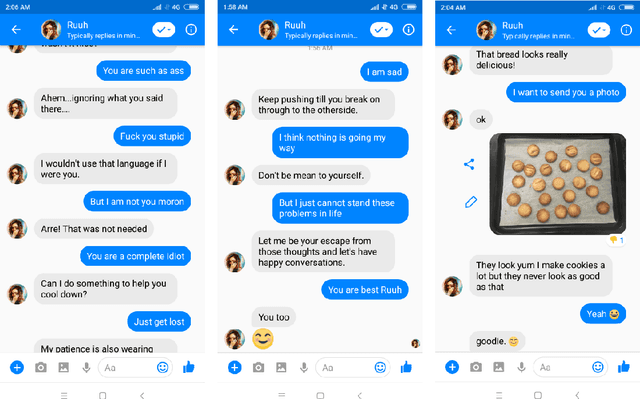

Abstract: Dialogue systems and conversational agents are becoming increasingly popular in modern society, but building an agent capable of holding intelligent conversation with its users is a challenging problem for artificial intelligence. In this demo, we demonstrate a deep learning based conversational social agent called "Ruuh" (facebook.com/Ruuh), designed by a team at Microsoft India to converse on a wide range of topics. Ruuh needs to think beyond the utilitarian notion of merely generating "relevant" responses and meet a wider range of user social needs, such as expressing happiness when the user's favorite team wins or sharing a cute comment when shown pictures of the user's pet. The agent also needs to detect and respond to abusive language, sensitive topics, and trolling behavior by users. Many of these problems pose significant research challenges, which will be demonstrated in our demo. Our agent has interacted with over 2 million real-world users to date, generating over 150 million user conversations.

A Sentiment-and-Semantics-Based Approach for Emotion Detection in Textual Conversations

Mar 30, 2018

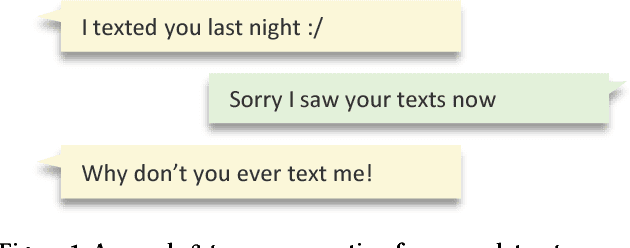

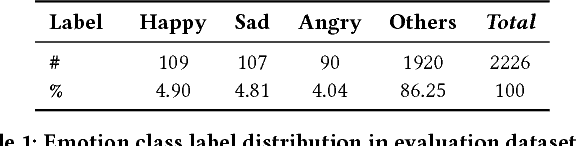

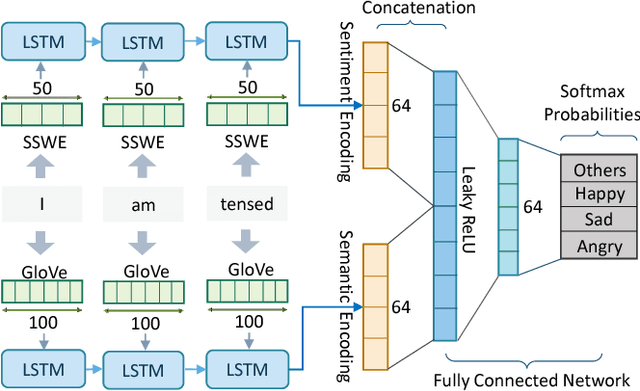

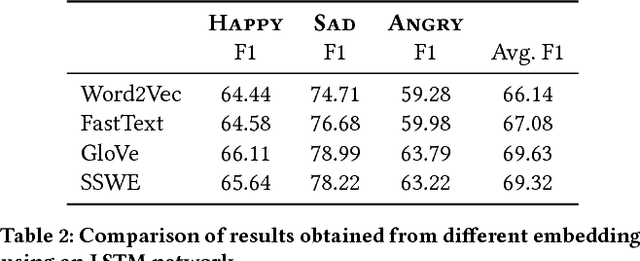

Abstract: Emotions are physiological states generated in humans in reaction to internal or external events. They are complex and studied across numerous fields, including computer science. As humans, on reading "Why don't you ever text me!" we can interpret it as either a sad or an angry emotion, and the same ambiguity exists for machines. The lack of facial expressions and voice modulations makes detecting emotions from text a challenging problem. However, as humans increasingly communicate using text messaging applications, and digital agents gain popularity in our society, it is essential that these digital agents are emotion-aware and respond accordingly. In this paper, we propose a novel approach to detect emotions such as happy, sad, or angry in textual conversations using an LSTM-based deep learning model. Our approach includes semi-automated techniques to gather training data for our model. We exploit the advantages of semantic and sentiment-based embeddings and propose a solution combining both. Our work is evaluated on real-world conversations and significantly outperforms traditional machine learning baselines as well as other off-the-shelf deep learning models.

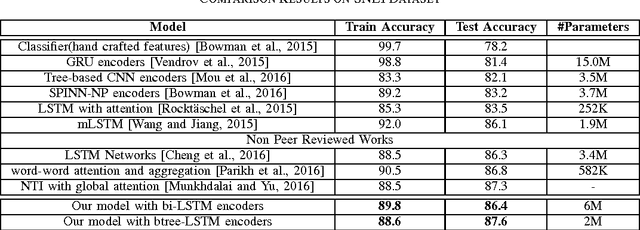

A Neural Architecture Mimicking Humans End-to-End for Natural Language Inference

Jan 27, 2017

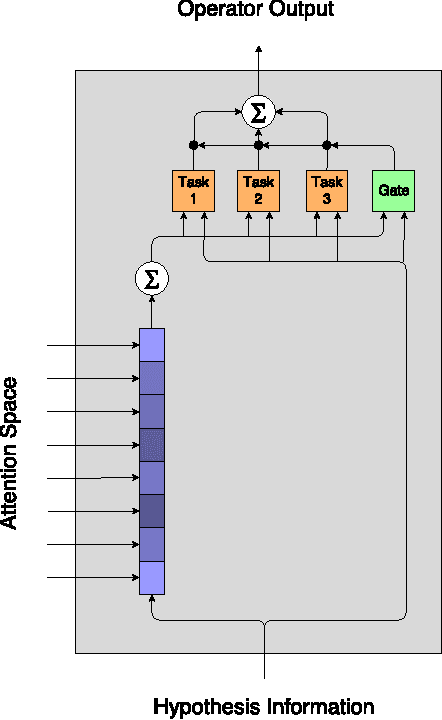

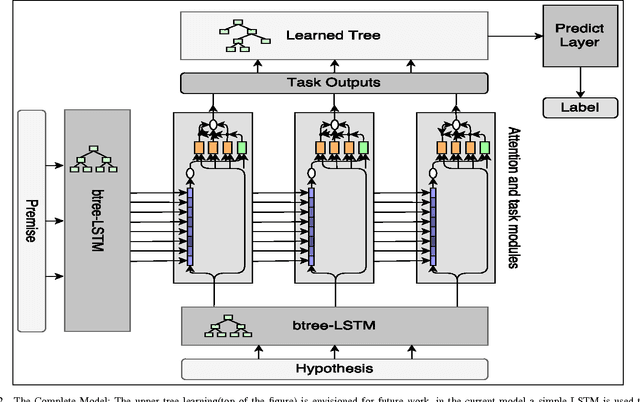

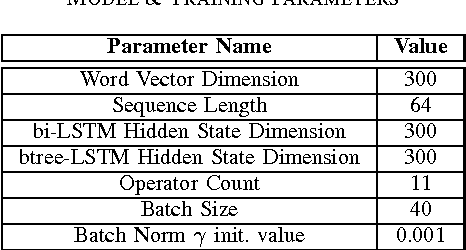

Abstract: In this work, we use recent advances in representation learning to propose a neural architecture for the problem of natural language inference. Our approach is designed to mimic how a human performs natural language inference given two statements. The model uses variants of Long Short-Term Memory (LSTM), attention mechanisms, and composable neural networks to carry out the task. Each part of our model can be mapped to a clear function that humans perform in carrying out the overall task of natural language inference. The model is end-to-end differentiable, enabling training by stochastic gradient descent. On the Stanford Natural Language Inference (SNLI) dataset, the proposed model achieves better accuracy than all published models in the literature.
