Sivanathan Kandhasamy

AutoDRIVE: A Comprehensive, Flexible and Integrated Cyber-Physical Ecosystem for Enhancing Autonomous Driving Research and Education

Dec 10, 2022

Abstract:Prototyping and validating hardware-software components, sub-systems and systems within the intelligent transportation system-of-systems framework requires a modular yet flexible and open-access ecosystem. This work presents our attempt towards developing such a comprehensive research and education ecosystem, called AutoDRIVE, for synergistically prototyping, simulating and deploying cyber-physical solutions pertaining to autonomous driving as well as smart city management. AutoDRIVE features both software as well as hardware-in-the-loop testing interfaces with openly accessible scaled vehicle and infrastructure components. The ecosystem is compatible with a variety of development frameworks, and supports both single and multi-agent paradigms through local as well as distributed computing. Most critically, AutoDRIVE is intended to be modularly expandable to explore emergent technologies, and this work highlights various complementary features and capabilities of the proposed ecosystem by demonstrating four such deployment use-cases: (i) autonomous parking using probabilistic robotics approach for mapping, localization, path planning and control; (ii) behavioral cloning using computer vision and deep imitation learning; (iii) intersection traversal using vehicle-to-vehicle communication and deep reinforcement learning; and (iv) smart city management using vehicle-to-infrastructure communication and internet-of-things.

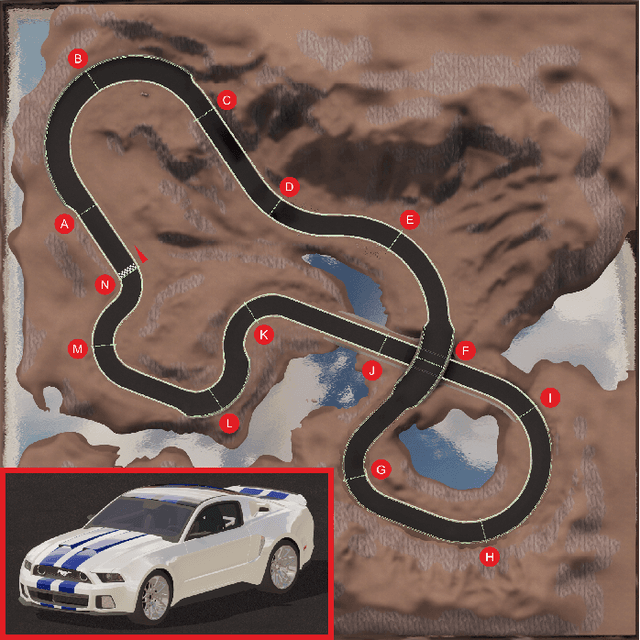

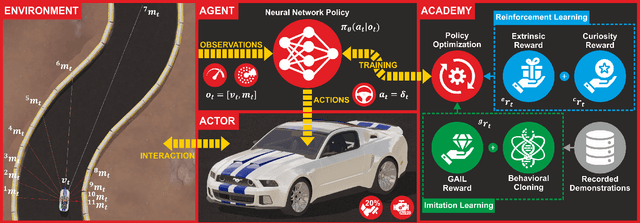

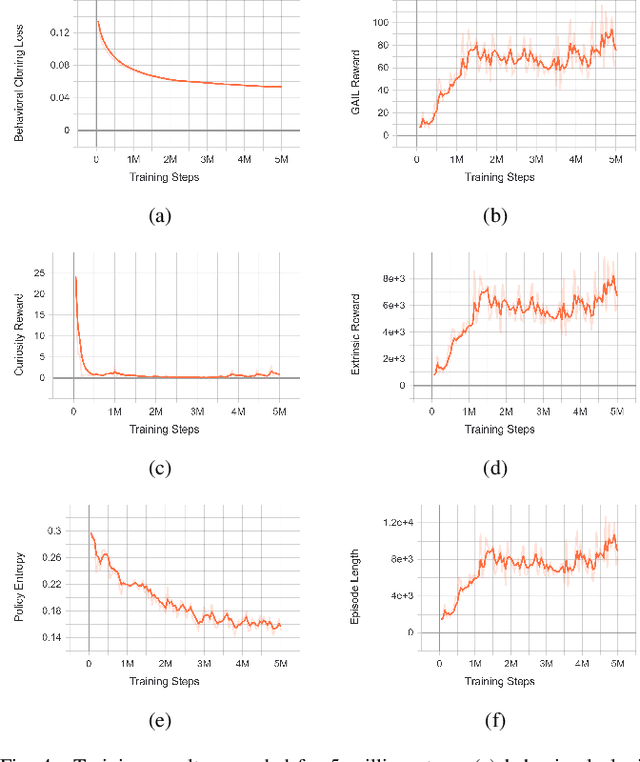

Autonomous Racing using a Hybrid Imitation-Reinforcement Learning Architecture

Oct 11, 2021

Abstract:In this work, we present a rigorous end-to-end control strategy for autonomous vehicles aimed at minimizing lap times in a time attack racing event. We also introduce AutoRACE Simulator developed as a part of this research project, which was employed to simulate accurate vehicular and environmental dynamics along with realistic audio-visual effects. We adopted a hybrid imitation-reinforcement learning architecture and crafted a novel reward function to train a deep neural network policy to drive (using imitation learning) and race (using reinforcement learning) a car autonomously in less than 20 hours. Deployment results were reported as a direct comparison of 10 autonomous laps against 100 manual laps by 10 different human players. The autonomous agent not only exhibited superior performance by gaining 0.96 seconds over the best manual lap, but it also dominated the human players by 1.46 seconds with regard to the mean lap time. This dominance could be justified in terms of better trajectory optimization and lower reaction time of the autonomous agent.

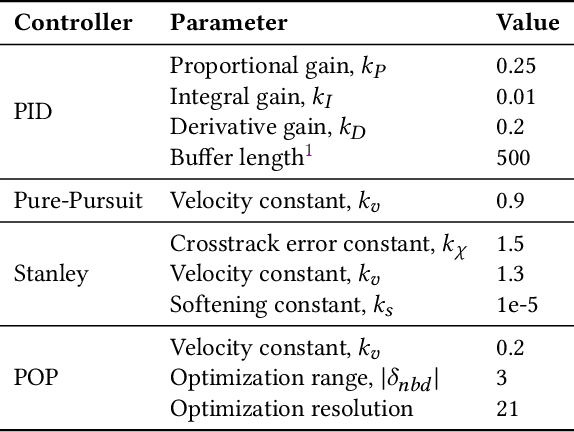

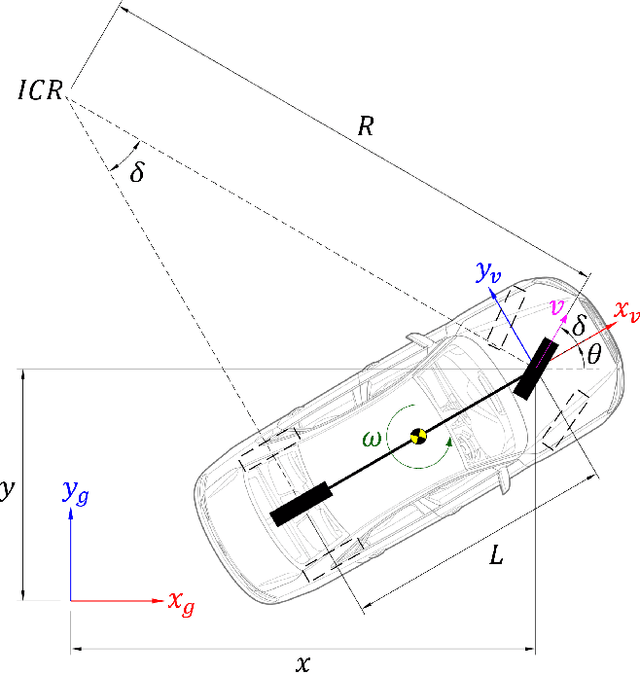

Proximally Optimal Predictive Control Algorithm for Path Tracking of Self-Driving Cars

Mar 24, 2021

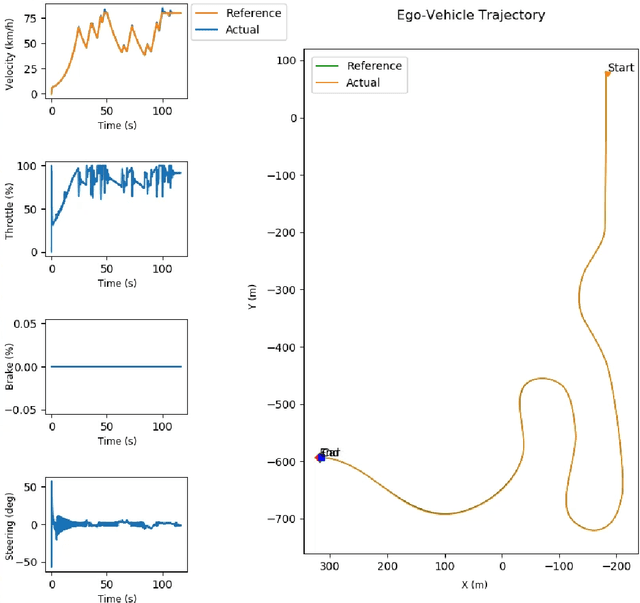

Abstract:This work presents the proximally optimal predictive control algorithm, which is essentially a model-based lateral controller for steered autonomous vehicles that selects an optimal steering command within the neighborhood of the previous steering angle based on the predicted vehicle location. The proposed algorithm was formulated with the aim of overcoming the limitations associated with the existing control laws for autonomous steering, namely PID, Pure-Pursuit and Stanley controllers. In particular, our approach was aimed at bridging the gap between tracking efficiency and computational cost, thereby ensuring effective path tracking in real-time. The effectiveness of our approach was investigated through a series of dynamic simulation experiments pertaining to autonomous path tracking, employing an adaptive control law for longitudinal motion control of the vehicle. We measured the latency of the proposed algorithm in order to comment on its real-time factor and validated our approach by comparing it against the established control laws in terms of both crosstrack and heading errors recorded throughout the respective path tracking simulations.
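
The idea described in this abstract, searching for a steering command near the previous one and scoring each candidate by the predicted vehicle location, can be illustrated with a minimal sketch. This is not the authors' implementation: the kinematic bicycle model, the nearest-waypoint cost, and every name and default value (`select_steering`, `window`, `horizon`, `wheelbase`, and so on) are assumptions made purely for illustration.

```python
import math


def predict_position(x, y, yaw, speed, steer, wheelbase, horizon, dt=0.05):
    """Roll an assumed kinematic bicycle model forward; return predicted (x, y)."""
    steps = max(1, int(round(horizon / dt)))
    for _ in range(steps):
        x += speed * math.cos(yaw) * dt
        y += speed * math.sin(yaw) * dt
        yaw += speed / wheelbase * math.tan(steer) * dt
    return x, y


def distance_to_path(px, py, path):
    """Distance from a point to the nearest waypoint (assumed cost function)."""
    return min(math.hypot(px - wx, py - wy) for wx, wy in path)


def select_steering(state, prev_steer, path, wheelbase=2.5, horizon=1.0,
                    window=0.1, candidates=11, max_steer=0.5):
    """Pick the steering angle near prev_steer whose prediction lands closest to the path.

    state is (x, y, yaw, speed); angles are in radians. All defaults are
    hypothetical values chosen for this sketch.
    """
    x, y, yaw, speed = state
    best_steer, best_cost = prev_steer, float("inf")
    for i in range(candidates):
        # Evenly spaced candidates in [prev_steer - window, prev_steer + window].
        steer = prev_steer - window + 2.0 * window * i / (candidates - 1)
        # Respect the assumed physical steering limit.
        steer = max(-max_steer, min(max_steer, steer))
        px, py = predict_position(x, y, yaw, speed, steer, wheelbase, horizon)
        cost = distance_to_path(px, py, path)
        if cost < best_cost:
            best_steer, best_cost = steer, cost
    return best_steer


if __name__ == "__main__":
    # Straight reference path along the x-axis, sampled every 0.1 m.
    path = [(i * 0.1, 0.0) for i in range(300)]
    # Vehicle starts 1 m to the left of the path, heading along it at 5 m/s.
    print(select_steering((0.0, 1.0, 0.0, 5.0), prev_steer=0.0, path=path))
```

Restricting the search to a small window around the previous command keeps the number of model rollouts per control step fixed and small, which is one plausible way to read the abstract's trade-off between tracking efficiency and computational cost.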

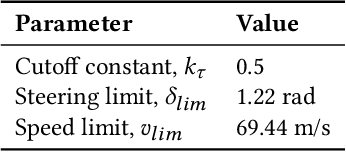

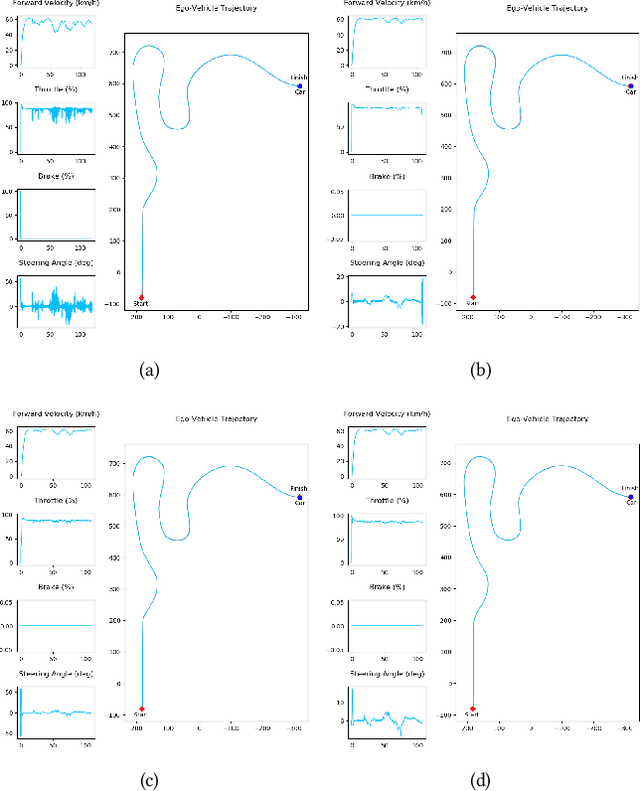

Control Strategies for Autonomous Vehicles

Nov 17, 2020

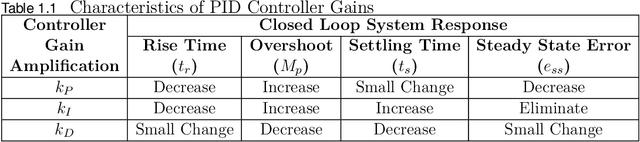

Abstract:This chapter focuses on the self-driving technology from a control perspective and investigates the control strategies used in autonomous vehicles and advanced driver-assistance systems from both theoretical and practical viewpoints. First, we introduce the self-driving technology as a whole, including perception, planning and control techniques required for accomplishing the challenging task of autonomous driving. We then dwell upon each of these operations to explain their role in the autonomous system architecture, with a prime focus on control strategies. The core portion of this chapter commences with detailed mathematical modeling of autonomous vehicles followed by a comprehensive discussion on control strategies. The chapter covers longitudinal as well as lateral control strategies for autonomous vehicles with coupled and de-coupled control schemes. We also discuss some of the machine learning techniques applied to the autonomous vehicle control task. Finally, we briefly summarize some of the research works that our team has carried out at the Autonomous Systems Lab and conclude the chapter with a few thoughtful remarks.

Decentralized Motion Planning for Multi-Robot Navigation using Deep Reinforcement Learning

Nov 11, 2020

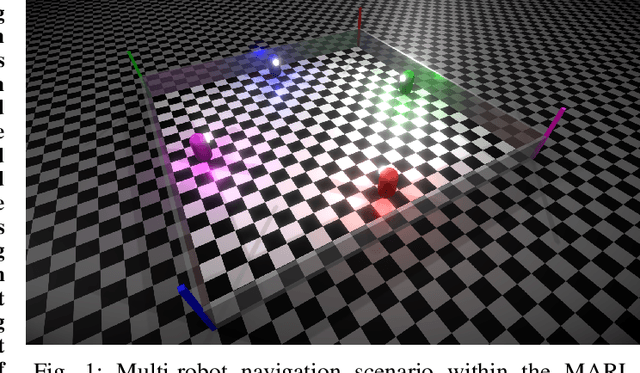

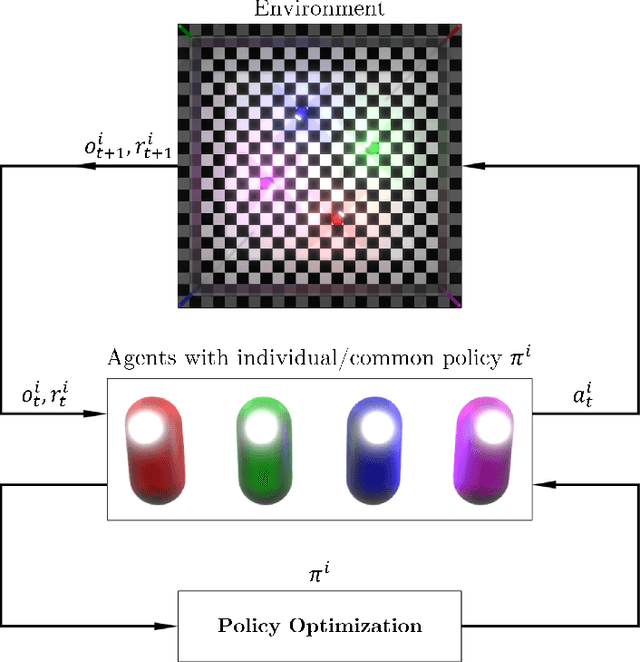

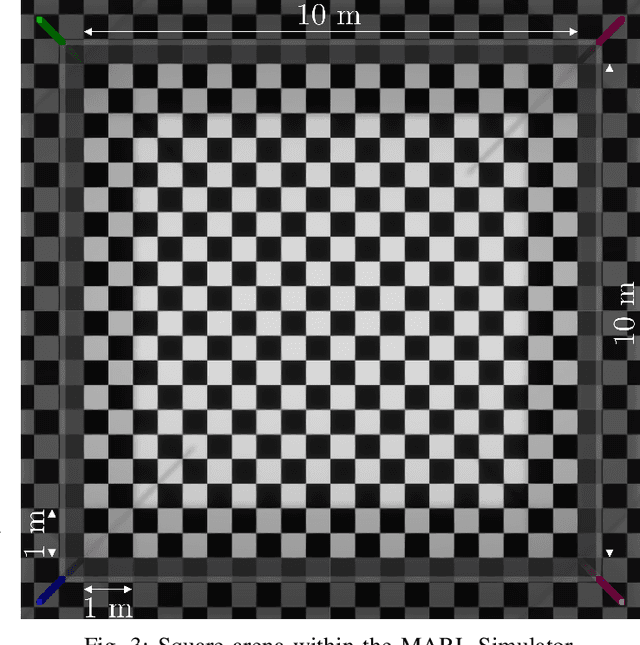

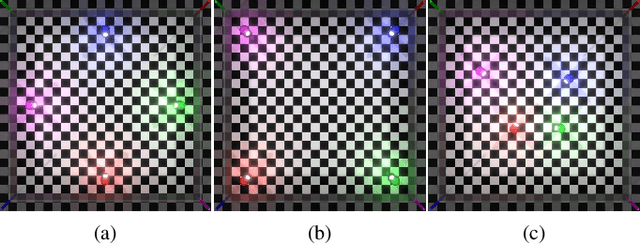

Abstract:This work presents a decentralized motion planning framework for addressing the task of multi-robot navigation using deep reinforcement learning. A custom simulator was developed in order to experimentally investigate the navigation problem of 4 cooperative non-holonomic robots sharing limited state information with each other in 3 different settings. The notion of decentralized motion planning with common and shared policy learning was adopted, which allowed robust training and testing of this approach in a stochastic environment since the agents were mutually independent and exhibited asynchronous motion behavior. The task was further aggravated by providing the agents with a sparse observation space and requiring them to generate continuous action commands so as to efficiently, yet safely navigate to their respective goal locations, while avoiding collisions with other dynamic peers and static obstacles at all times. The experimental results are reported in terms of quantitative measures and qualitative remarks for both training and deployment phases.

Robust Behavioral Cloning for Autonomous Vehicles using End-to-End Imitation Learning

Oct 09, 2020

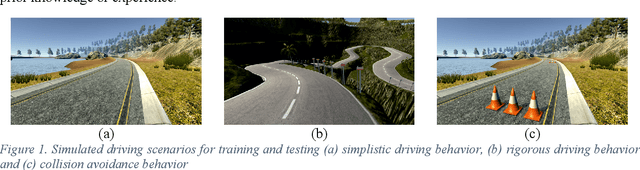

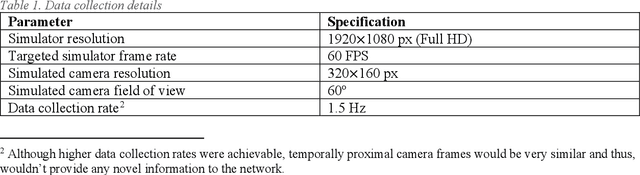

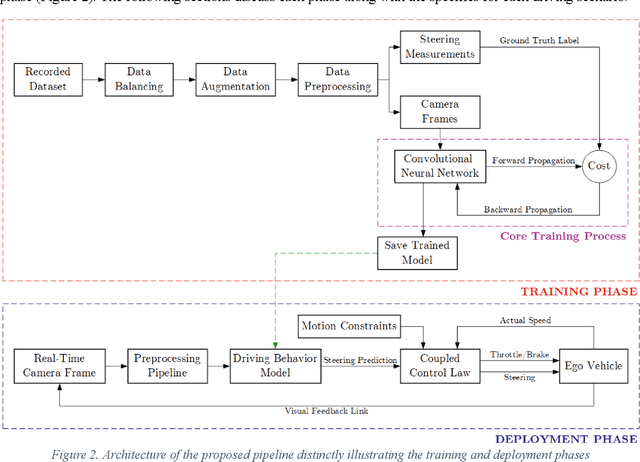

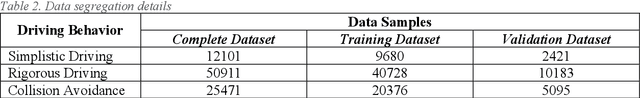

Abstract:In this work, we present a robust pipeline for cloning driving behavior of a human using end-to-end imitation learning. The proposed pipeline was employed to train and deploy three distinct driving behavior models onto a simulated vehicle. The training phase comprised data collection, balancing, augmentation, preprocessing and training a neural network, following which the trained model was deployed onto the ego vehicle to predict steering commands based on the feed from an onboard camera. A novel coupled control law was formulated to generate longitudinal control commands on-the-go based on the predicted steering angle and other parameters such as actual speed of the ego vehicle and the prescribed constraints for speed and steering. We analyzed computational efficiency of the pipeline and evaluated robustness of the trained models through exhaustive experimentation. Even a relatively shallow convolutional neural network model was able to learn key driving behaviors from sparsely labelled datasets and was tolerant to environmental variations during deployment of the said driving behaviors.

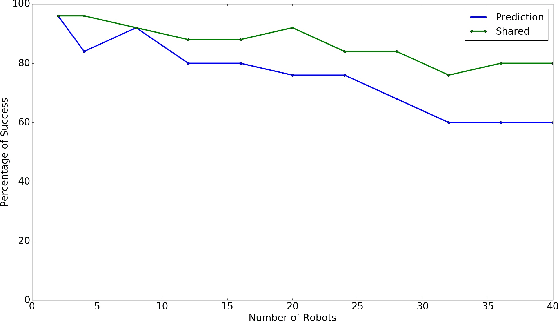

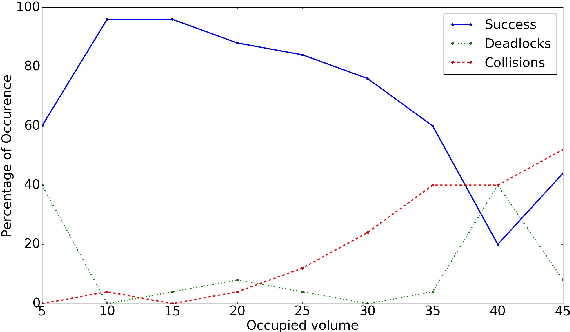

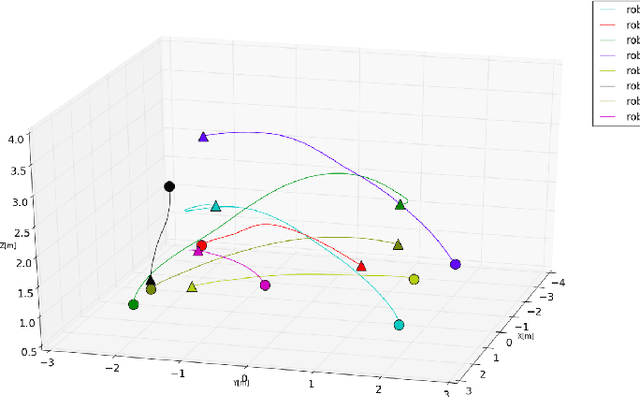

Towards Scalable Continuous-Time Trajectory Optimization for Multi-Robot Navigation

Oct 29, 2019

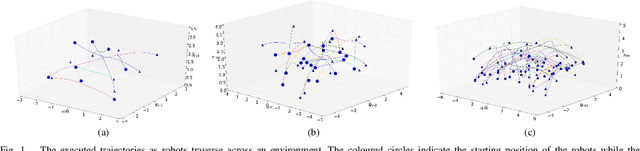

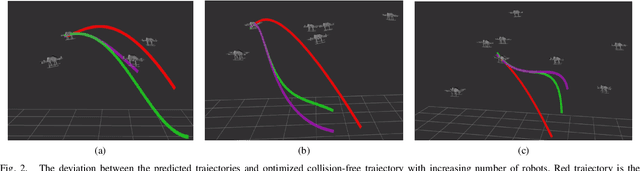

Abstract:Scalable multi-robot transition is essential for ubiquitous adoption of robots. As a step towards it, a computationally efficient decentralized algorithm for continuous-time trajectory optimization in multi-robot scenarios based upon model predictive control is introduced. The robots communicate only their current states and goals rather than sharing their whole trajectory; using this data, each robot predicts a continuous-time trajectory for every other robot, exploiting optimal control based motion primitives that are corrected for spatial inter-robot interactions using least squares. A nonlinear program (NLP) is formulated for collision avoidance with the predicted trajectories of other robots. The NLP is condensed by using time as a parametrization, resulting in an unconstrained optimization problem that can be solved in a fast and efficient manner. Additionally, the algorithm resizes the robot to accommodate its trajectory tracking error. The algorithm was tested in simulations on Gazebo with aerial robots. Early results indicate that the proposed algorithm is efficient for up to forty homogeneous robots and twenty-one heterogeneous robots occupying 20% of the available space.

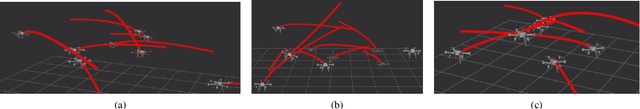

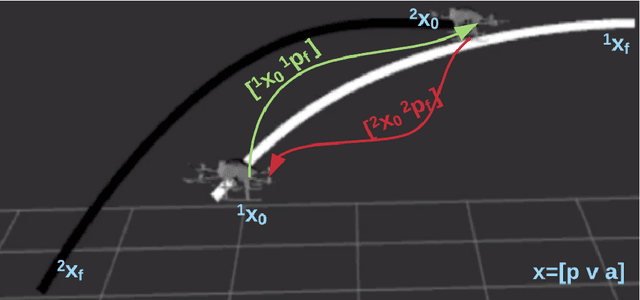

Continuous-Time Trajectory Optimization for Decentralized Multi-Robot Navigation

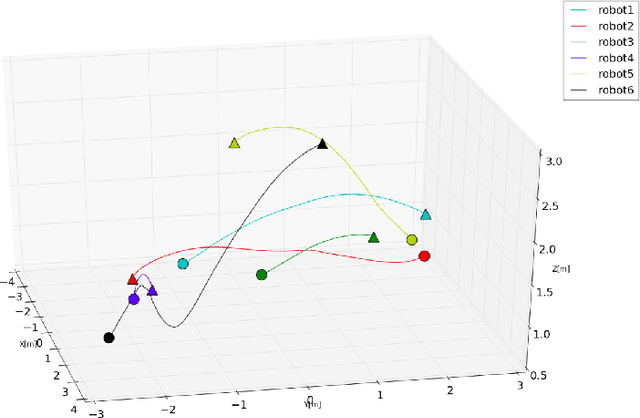

Sep 05, 2019

Abstract:Multi-robot systems have begun to permeate into a variety of different fields, but collision-free navigation in a decentralized manner is still an arduous task. Typically, the navigation of high speed multi-robot systems demands replanning of trajectories to avoid collisions with one another. This paper presents an online replanning algorithm for trajectory optimization in labeled multi-robot scenarios. With reliable communication of states among robots, each robot predicts a smooth continuous-time trajectory for every other robot. Based on the knowledge of these predicted trajectories, each robot then plans a collision-free trajectory for itself. The collision-free trajectory optimization problem is cast as a nonlinear program (NLP) by exploiting polynomial based trajectory generation. The algorithm was tested in simulations on Gazebo with aerial robots.