Robust Behavioral Cloning for Autonomous Vehicles using End-to-End Imitation Learning

Paper and Code

Oct 09, 2020

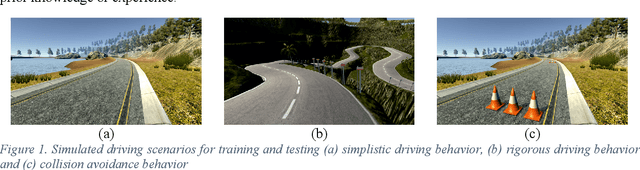

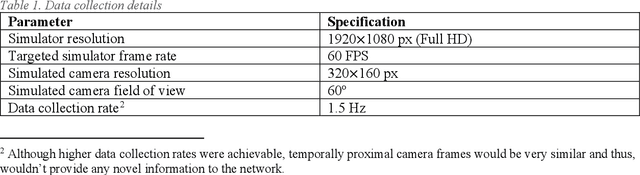

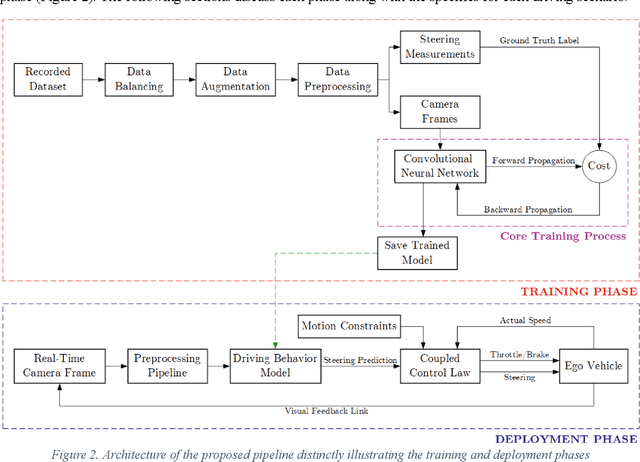

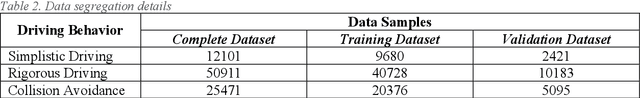

In this work, we present a robust pipeline for cloning the driving behavior of a human using end-to-end imitation learning. The proposed pipeline was employed to train and deploy three distinct driving behavior models onto a simulated vehicle. The training phase comprised data collection, balancing, augmentation, preprocessing, and training of a neural network, after which the trained model was deployed onto the ego vehicle to predict steering commands from the feed of an onboard camera. A novel coupled control law was formulated to generate longitudinal control commands on the fly based on the predicted steering angle and other parameters, such as the actual speed of the ego vehicle and the prescribed constraints on speed and steering. We analyzed the computational efficiency of the pipeline and evaluated the robustness of the trained models through exhaustive experimentation. Even a relatively shallow convolutional neural network model was able to learn key driving behaviors from sparsely labelled datasets and was tolerant to environmental variations when those behaviors were deployed.
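The abstract does not state the coupled control law itself, so the following is only an illustrative sketch of how a longitudinal command could be coupled to the predicted steering angle, the actual speed, and the prescribed limits. The function name `longitudinal_command`, its parameters, and the specific formula are assumptions made for this example and are not taken from the paper.

```python
def longitudinal_command(steering, speed, steering_limit, speed_limit):
    """Hypothetical coupled law (an assumption, not the paper's formula).

    Returns a value in [-1, 1]: positive means throttle, negative means brake.
    The command shrinks as the steering magnitude approaches its limit and
    as the actual speed approaches the speed limit.
    """
    if steering_limit <= 0 or speed_limit <= 0:
        raise ValueError("limits must be positive")

    # Fraction of the steering limit in use, capped at 1.
    steer_ratio = min(abs(steering) / steering_limit, 1.0)
    # Fraction of the speed limit currently reached.
    speed_ratio = speed / speed_limit

    # Sharper turns lower the target speed fraction; the command is the
    # gap between that target and the current speed fraction.
    command = (1.0 - steer_ratio) - speed_ratio

    # Clamp to the valid actuator range.
    return max(-1.0, min(1.0, command))
```

Under these assumptions, a stationary vehicle with zero steering receives full throttle, a vehicle driving straight at the speed limit receives a zero command, and a vehicle at the speed limit with full steering lock receives full braking.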
