Simon Warchol

School of Engineering and Applied Sciences, Harvard University

Is What You Ask For What You Get? Investigating Concept Associations in Text-to-Image Models

Oct 06, 2024Abstract:Text-to-image (T2I) models are increasingly used in impactful real-life applications. As such, there is a growing need to audit these models to ensure that they generate desirable, task-appropriate images. However, systematically inspecting the associations between prompts and generated content in a human-understandable way remains challenging. To address this, we propose \emph{Concept2Concept}, a framework where we characterize conditional distributions of vision language models using interpretable concepts and metrics that can be defined in terms of these concepts. This characterization allows us to use our framework to audit models and prompt-datasets. To demonstrate, we investigate several case studies of conditional distributions of prompts, such as user defined distributions or empirical, real world distributions. Lastly, we implement Concept2Concept as an open-source interactive visualization tool facilitating use by non-technical end-users. Warning: This paper contains discussions of harmful content, including CSAM and NSFW material, which may be disturbing to some readers.

Scope2Screen: Focus+Context Techniques for Pathology Tumor Assessment in Multivariate Image Data

Oct 10, 2021

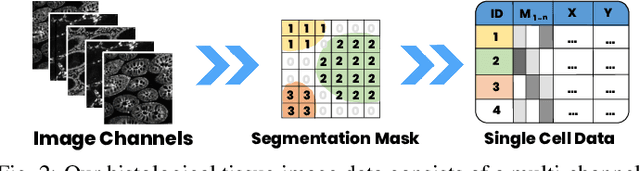

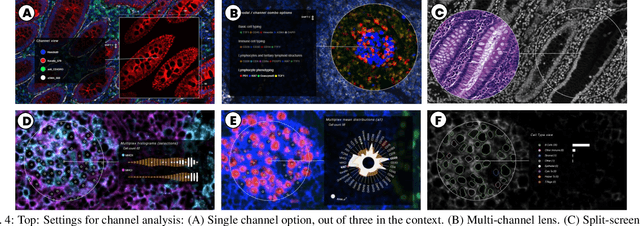

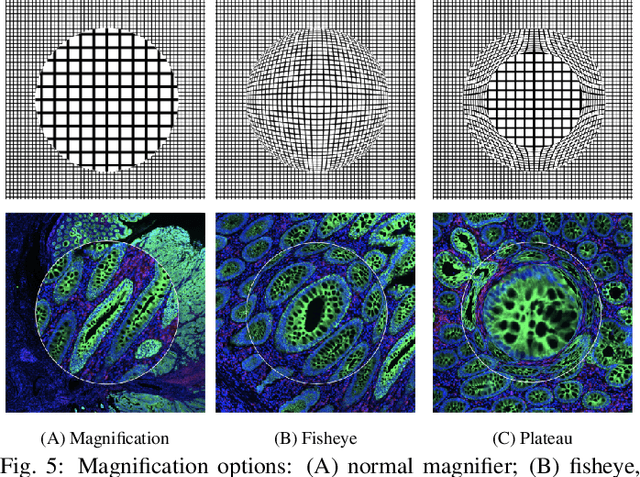

Abstract:Inspection of tissues using a light microscope is the primary method of diagnosing many diseases, notably cancer. Highly multiplexed tissue imaging builds on this foundation, enabling the collection of up to 60 channels of molecular information plus cell and tissue morphology using antibody staining. This provides unique insight into disease biology and promises to help with the design of patient-specific therapies. However, a substantial gap remains with respect to visualizing the resulting multivariate image data and effectively supporting pathology workflows in digital environments on screen. We, therefore, developed Scope2Screen, a scalable software system for focus+context exploration and annotation of whole-slide, high-plex, tissue images. Our approach scales to analyzing 100GB images of 10^9 or more pixels per channel, containing millions of cells. A multidisciplinary team of visualization experts, microscopists, and pathologists identified key image exploration and annotation tasks involving finding, magnifying, quantifying, and organizing ROIs in an intuitive and cohesive manner. Building on a scope2screen metaphor, we present interactive lensing techniques that operate at single-cell and tissue levels. Lenses are equipped with task-specific functionality and descriptive statistics, making it possible to analyze image features, cell types, and spatial arrangements (neighborhoods) across image channels and scales. A fast sliding-window search guides users to regions similar to those under the lens; these regions can be analyzed and considered either separately or as part of a larger image collection. A novel snapshot method enables linked lens configurations and image statistics to be saved, restored, and shared. We validate our designs with domain experts and apply Scope2Screen in two case studies involving lung and colorectal cancers to discover cancer-relevant image features.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge