Simon O'Keefe

A perspective on physical reservoir computing with nanomagnetic devices

Dec 09, 2022

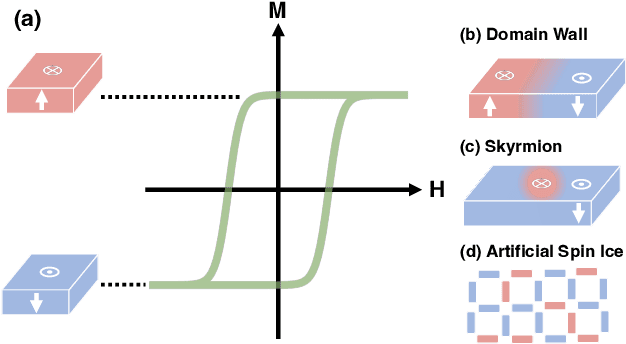

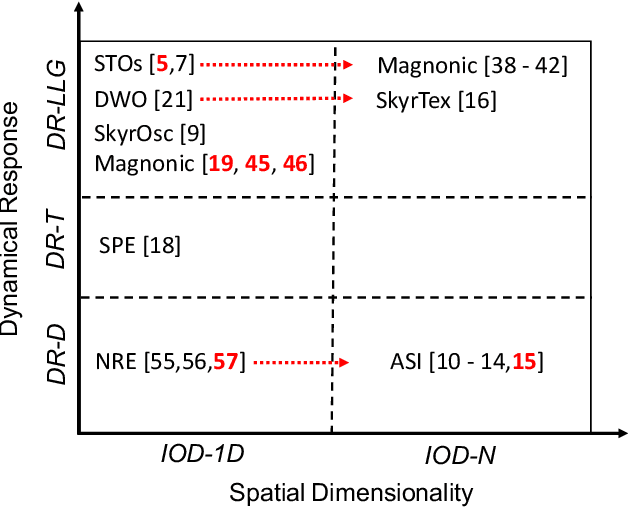

Abstract: Neural networks have revolutionized the area of artificial intelligence and introduced transformative applications to almost every scientific field and industry. However, this success comes at a great price; the energy requirements for training advanced models are unsustainable. One promising way to address this pressing issue is by developing low-energy neuromorphic hardware that directly supports the algorithm's requirements. The intrinsic non-volatility, non-linearity, and memory of spintronic devices make them appealing candidates for neuromorphic devices. Here we focus on the reservoir computing paradigm, a recurrent network with a simple training algorithm suitable for computation with spintronic devices since they can provide the properties of non-linearity and memory. We review technologies and methods for developing neuromorphic spintronic devices and conclude with critical open issues to address before such devices become widely used.

Reservoir Computing with Thin-film Ferromagnetic Devices

Jan 29, 2021

Abstract: Advances in artificial intelligence are driven by technologies inspired by the brain, but these technologies are orders of magnitude less powerful and energy efficient than biological systems. Inspired by the nonlinear dynamics of neural networks, new unconventional computing hardware has emerged with the potential for extreme parallelism and ultra-low power consumption. Physical reservoir computing demonstrates this with a variety of unconventional systems from optical-based to spintronic. Reservoir computers provide a nonlinear projection of the task input into a high-dimensional feature space by exploiting the system's internal dynamics. A trained readout layer then combines features to perform tasks, such as pattern recognition and time-series analysis. Despite progress, achieving state-of-the-art performance without external signal processing to the reservoir remains challenging. Here we show, through simulation, that magnetic materials in thin-film geometries can realise reservoir computers with accuracy similar to or greater than that of digital recurrent neural networks. Our results reveal that basic spin properties of magnetic films generate the required nonlinear dynamics and memory to solve machine learning tasks. Furthermore, we show that neuromorphic hardware can be reduced in size by removing the need for discrete neural components and external processing. The natural dynamics and nanoscale size of magnetic thin-films present a new path towards fast energy-efficient computing with the potential to innovate portable smart devices, self-driving vehicles, and robotics.
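The reservoir-plus-readout scheme described in this abstract can be illustrated with a small digital echo state network. This is only a minimal sketch under stated assumptions: it uses a random `tanh` network in NumPy as a stand-in reservoir, not the thin-film magnetic simulation from the paper, and the function names (`run_reservoir`, `train_readout`), the node count, the spectral radius, and the sine-prediction task are all hypothetical choices made for illustration.

```python
import numpy as np


def run_reservoir(inputs, n_nodes=100, spectral_radius=0.9, seed=0):
    """Drive a fixed random recurrent network and record its states.

    Assumption: a software tanh reservoir stands in for the physical system.
    """
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=n_nodes)          # fixed input weights
    w = rng.uniform(-0.5, 0.5, size=(n_nodes, n_nodes))  # fixed recurrent weights
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # which keeps the dynamics stable and gives the network fading memory.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))

    state = np.zeros(n_nodes)
    states = np.empty((len(inputs), n_nodes))
    for t, u in enumerate(inputs):
        # Nonlinear projection of the input into a high-dimensional state.
        state = np.tanh(w @ state + w_in * u)
        states[t] = state
    return states


def train_readout(states, targets, ridge=1e-6):
    """Fit the linear readout with ridge regression; the reservoir is untouched."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)


# Hypothetical task: predict the next sample of a sine wave.
time = np.arange(0, 60, 0.1)
signal = np.sin(time)
states = run_reservoir(signal[:-1])
targets = signal[1:]

washout = 50  # discard early states that still depend on the zero initial state
w_out = train_readout(states[washout:], targets[washout:])
prediction = states[washout:] @ w_out
mse = float(np.mean((prediction - targets[washout:]) ** 2))
print(f"training MSE: {mse:.2e}")
```

Only `w_out` is trained; the input and recurrent weights stay fixed. This is the property that makes the approach attractive for physical substrates, where the internal dynamics cannot easily be adjusted.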

Deep Learning and Word Embeddings for Tweet Classification for Crisis Response

Mar 26, 2019

Abstract: Traditional tweet classification models for crisis response focus on convolutional layers and domain-specific word embeddings. In this paper, we study the application of different neural networks with general-purpose and domain-specific word embeddings to investigate their ability to improve the performance of tweet classification models. We evaluate four tweet classification models on the CrisisNLP dataset and obtain comparable results, which indicates that general-purpose word embeddings such as GloVe can be used instead of domain-specific word embeddings, especially with Bi-LSTM, which achieved the highest performance with an F1 score of 62.04%.

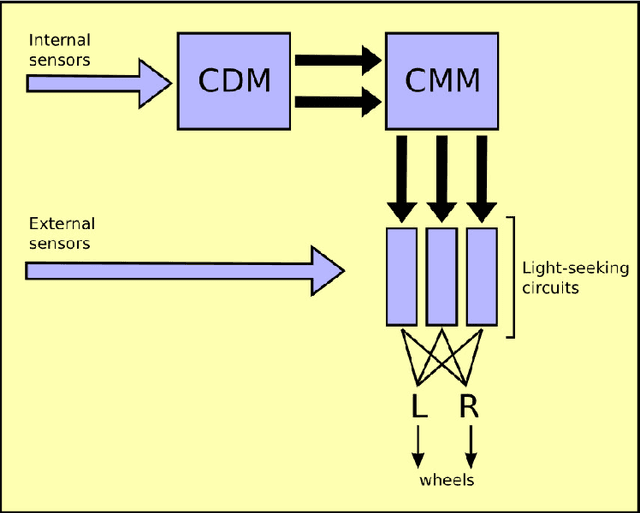

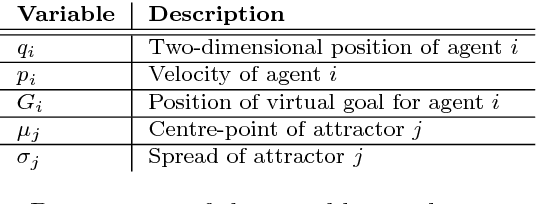

Cognitively-inspired homeostatic architecture can balance conflicting needs in robots

Nov 25, 2018

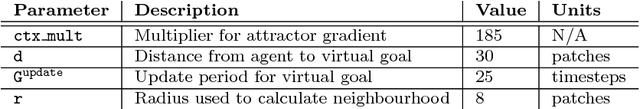

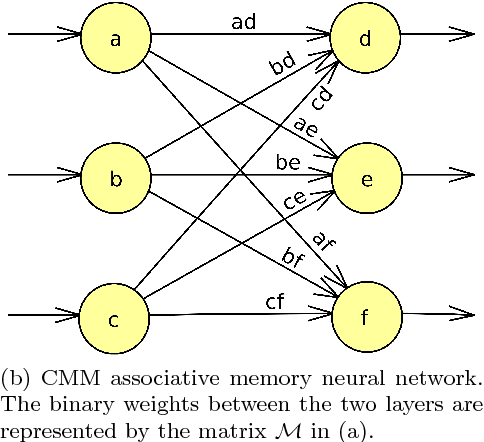

Abstract: Autonomous robots require the ability to balance conflicting needs, such as whether to charge a battery rather than complete a task. Nature has evolved a mechanism for achieving this in the form of homeostasis. This paper presents CogSis, a cognition-inspired architecture for artificial homeostasis. CogSis provides a robot with the ability to balance conflicting needs so that it can maintain its internal state, while still completing its tasks. Through the use of an associative memory neural network, a robot running CogSis is able to learn about its environment rapidly by making associations between sensors. Results show that a Pi-Swarm robot running CogSis can balance charging its battery with completing a task, and can balance conflicting needs, such as charging its battery without overheating. The lab setup consists of a charging station and high-temperature region, demarcated with coloured lamps. The robot associates the colour of a lamp with the effect it has on the robot's internal environment (for example, charging the battery). The robot can then seek out that colour again when it runs low on charge. This work is the first control architecture that takes inspiration directly from distributed cognition. The result is an architecture that is able to learn and apply environmental knowledge rapidly, implementing homeostatic behaviour and balancing conflicting decisions.
