Simon Canales

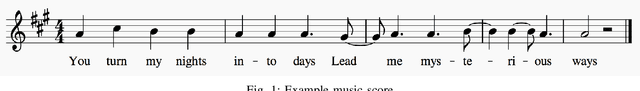

Deep Attention-Based Alignment Network for Melody Generation from Incomplete Lyrics

Jan 23, 2023

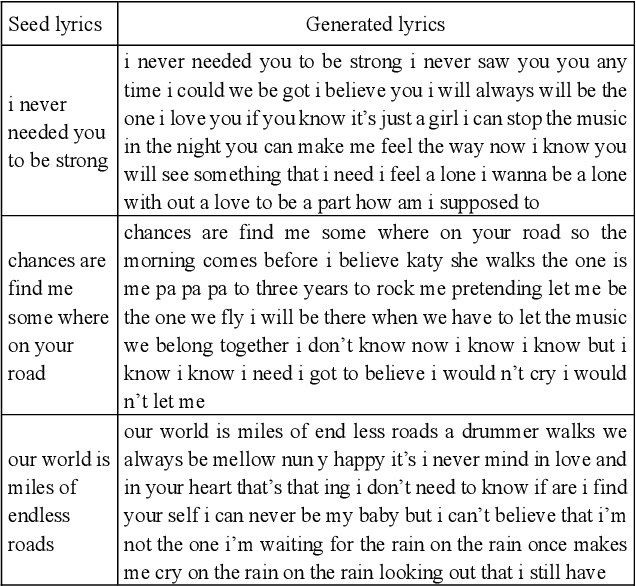

Abstract: We propose a deep attention-based alignment network that automatically predicts lyrics and melody from incomplete lyrics given as input, in a way similar to how humans create music. Most importantly, a deep neural lyrics-to-melody network is trained in an encoder-decoder fashion to predict plausible lyrics-melody pairs from incomplete lyrics (a few keywords). An attention mechanism aligns the predicted lyrics with the melody during lyrics-to-melody generation. Qualitative and quantitative evaluations indicate that the proposed method can generate suitable lyrics and a corresponding melody for composing new songs from a piece of incomplete seed lyrics.
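
To make the alignment idea in this abstract concrete, here is a minimal sketch of one attention step. It assumes plain dot-product attention, which the abstract does not specify; the function names, the 2-d toy vectors, and the choice of scoring function are illustrative assumptions, not the authors' implementation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_align(decoder_state, encoder_states):
    """One decoding step of dot-product attention (assumed scoring function).

    Returns the attention weights over the lyric tokens and the
    weighted-sum context vector passed on to the melody decoder.
    """
    scores = [sum(d * e for d, e in zip(decoder_state, enc))
              for enc in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * enc[i] for w, enc in zip(weights, encoder_states))
               for i in range(dim)]
    return weights, context

# Hypothetical encoder states for three lyric tokens (toy 2-d vectors).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Hypothetical decoder state for the melody note being generated.
decoder_state = [-1.0, 2.0]

weights, context = attention_align(decoder_state, encoder_states)
# The lyric token with the largest weight is the one this note aligns to.
aligned_index = max(range(len(weights)), key=weights.__getitem__)
```

In this toy setup the weights form a probability distribution over the lyric tokens, and reading off the largest weight at each step gives a soft lyrics-to-melody alignment.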

Automatic Neural Lyrics and Melody Composition

Nov 12, 2020

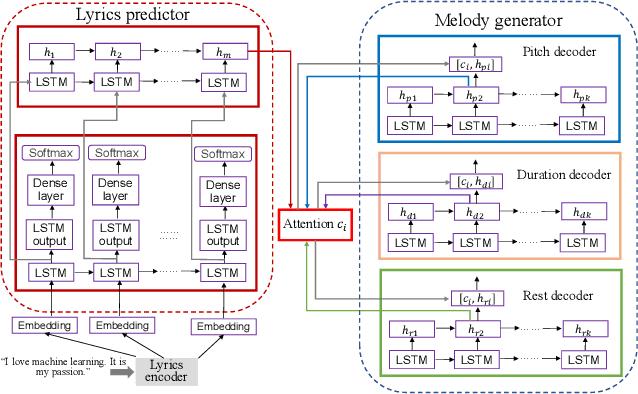

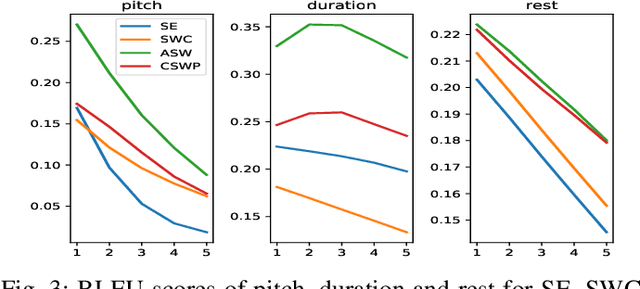

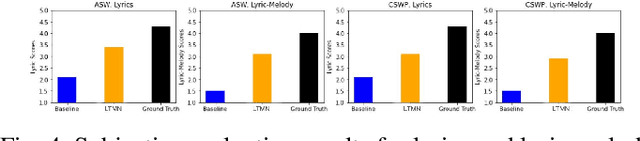

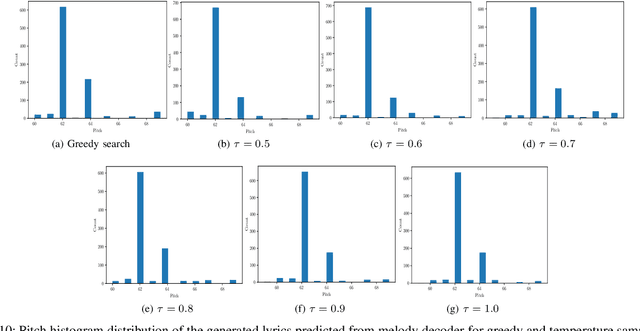

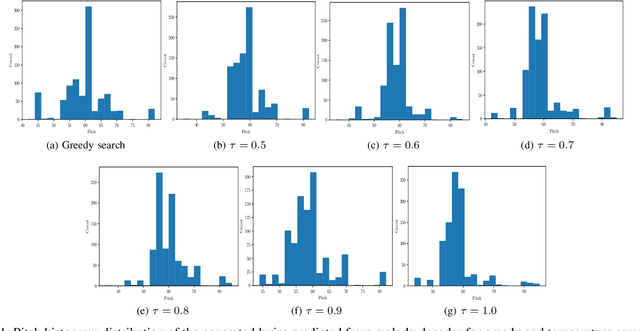

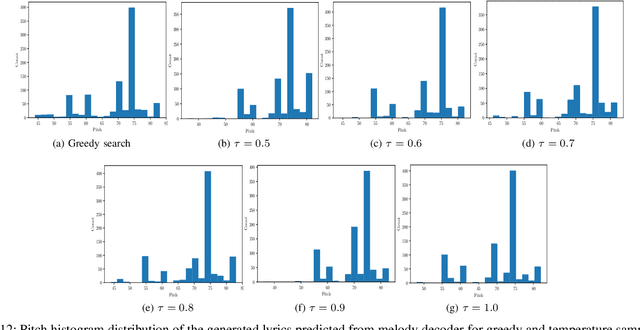

Abstract: In this paper, we propose a technique to address the most challenging aspect of the algorithmic songwriting process: enabling the human community to discover original lyrics and melodies suitable for the generated lyrics. The proposed songwriting system, Automatic Neural Lyrics and Melody Composition (AutoNLMC), is an attempt to make the whole songwriting process automatic using artificial neural networks. Our lyric-to-vector (lyric2vec) models, trained on a large dataset of lyric-melody pairs parsed at the syllable, word, and sentence levels, are large-scale embedding models that enable us to train data-driven models such as recurrent neural networks on popular English songs. AutoNLMC is an encoder-decoder sequential recurrent neural network model consisting of a lyric generator, a lyric encoder, and a melody decoder trained end-to-end. It is designed to generate both lyrics and a corresponding melody automatically for an amateur or a person without musical knowledge. It can also take lyrics from a professional lyric writer and generate matching melodies. Qualitative and quantitative evaluation measures indicate that the proposed method is capable of generating original lyrics and a corresponding melody for composing new songs.

Conditional LSTM-GAN for Melody Generation from Lyrics

Aug 15, 2019

Abstract: Melody generation from lyrics has been a challenging research problem in the field of artificial intelligence and music; it involves learning and discovering the latent relationship between lyrics and their accompanying melody. Unfortunately, the limited availability of paired lyrics-melody datasets with alignment information has hindered research progress. To address this problem, we create a large dataset of 12,197 MIDI songs, each with paired lyrics and melody alignment, by leveraging different music sources from which the alignment between syllables and music attributes is extracted. Most importantly, we propose a novel deep generative model, the conditional Long Short-Term Memory Generative Adversarial Network (LSTM-GAN), for melody generation from lyrics; it contains a deep LSTM generator and a deep LSTM discriminator, both conditioned on lyrics. In particular, the lyrics-conditioned melody and the alignment between the syllables of the given lyrics and the notes of the predicted melody are generated simultaneously. Experimental results demonstrate the effectiveness of the proposed lyrics-to-melody generative model, which can infer plausible and tuneful sequences from lyrics.
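
As a rough illustration of what "both conditioned on lyrics" can mean in practice, the sketch below builds per-syllable inputs for a generator and a discriminator by concatenating a lyric embedding at every time step. This is one common conditioning scheme and is assumed here; the abstract does not describe the exact mechanism. The function names, the embedding size, the noise dimension, and the (pitch, duration, rest) note layout are all hypothetical.

```python
import random

def build_generator_inputs(lyric_embeddings, noise_dim, rng):
    """Concatenate fresh noise with each syllable embedding (assumed scheme).

    The result has one vector per syllable, which a sequence model such as
    an LSTM generator would consume step by step.
    """
    inputs = []
    for emb in lyric_embeddings:
        noise = [rng.uniform(0.0, 1.0) for _ in range(noise_dim)]
        inputs.append(noise + list(emb))
    return inputs

def build_discriminator_inputs(melody_steps, lyric_embeddings):
    """Pair each note with its syllable embedding so the discriminator
    judges the melody in the context of the lyrics it was generated for."""
    return [list(note) + list(emb)
            for note, emb in zip(melody_steps, lyric_embeddings)]

rng = random.Random(0)
# Hypothetical 3-d embeddings for a four-syllable lyric line.
lyric_embeddings = [[0.1, 0.2, 0.3], [0.0, 0.5, 0.1],
                    [0.9, 0.4, 0.2], [0.3, 0.3, 0.3]]
# Hypothetical notes as (pitch, duration, rest) triples, one per syllable.
melody_steps = [(60, 1.0, 0.0), (62, 0.5, 0.0), (64, 0.5, 0.0), (60, 2.0, 1.0)]

gen_inputs = build_generator_inputs(lyric_embeddings, noise_dim=2, rng=rng)
disc_inputs = build_discriminator_inputs(melody_steps, lyric_embeddings)
```

Because notes and syllables are paired one-to-one in this sketch, the per-step pairing also stands in for the syllable-to-note alignment the abstract mentions; a real system would need to handle syllables spanning several notes.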
