Simo Hosio

From Voice to Value: Leveraging AI to Enhance Spoken Online Reviews on the Go

Dec 06, 2024

Abstract: Online reviews help people make better decisions. Review platforms usually depend on typed input, where leaving a good review requires significant effort because users must carefully organize and articulate their thoughts. This may discourage users from leaving comprehensive, high-quality reviews, especially when they are on the go. To address this challenge, we developed Vocalizer, a mobile application that enables users to provide reviews through voice input, with enhancements from a large language model (LLM). In a longitudinal study, we analysed user interactions with the app, focusing on AI-driven features that help refine and improve reviews. Our findings show that users frequently used the AI agent to add more detailed information to their reviews. We also show how interactive AI features can improve users' self-efficacy and willingness to share reviews online. Finally, we discuss the opportunities and challenges of integrating AI assistance into review-writing systems.

LMLPA: Language Model Linguistic Personality Assessment

Oct 23, 2024

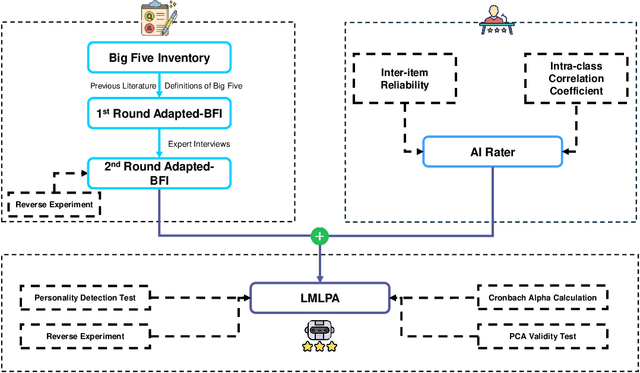

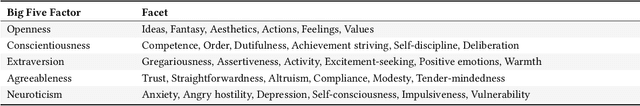

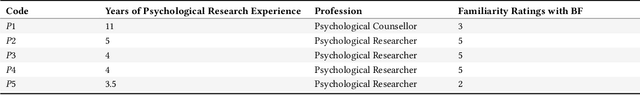

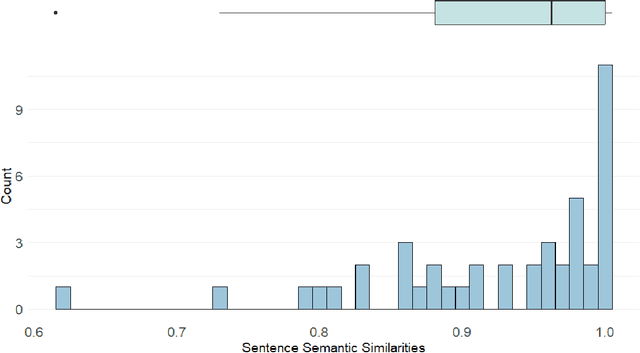

Abstract: Large Language Models (LLMs) are increasingly used in everyday life and research. One of the most common use cases is conversational interaction, enabled by the language generation capabilities of LLMs. Just as between two humans, a conversation between an LLM-powered entity and a human depends on the personality of the conversants. However, measuring the personality of a given LLM is currently a challenge. This paper introduces the Language Model Linguistic Personality Assessment (LMLPA), a system designed to evaluate the linguistic personalities of LLMs. Our system helps to understand LLMs' language generation capabilities by quantitatively assessing the distinct personality traits reflected in their linguistic outputs. Unlike traditional human-centric psychometrics, the LMLPA adapts a personality assessment questionnaire, specifically the Big Five Inventory, to align with the operational capabilities of LLMs, and also incorporates findings from previous language-based personality measurement literature. To mitigate sensitivity to the order of options, our questionnaire is designed to be open-ended, resulting in textual answers. An AI rater is therefore needed to transform ambiguous personality information from text responses into clear numerical indicators of personality traits. Utilising Principal Component Analysis and reliability validations, our findings demonstrate that LLMs possess distinct personality traits that can be effectively quantified by the LMLPA. This research contributes to Human-Computer Interaction and Human-Centered AI, providing a robust framework for future studies to refine AI personality assessments and expand their applications in multiple areas, including education and manufacturing.
