Simiao Zhang

LeetDecoding: A PyTorch Library for Exponentially Decaying Causal Linear Attention with CUDA Implementations

Jan 05, 2025

Abstract: The machine learning and data science community has made significant but scattered progress in accelerating transformer-based large language models (LLMs), and one promising approach is to replace the original causal attention in a generative pre-trained transformer (GPT) with *exponentially decaying causal linear attention*. In this paper, we present LeetDecoding, which is the first Python package that provides a large set of computation routines for this fundamental operator. The launch of LeetDecoding was motivated by the current lack of (1) a clear understanding of the complexity of this operator, (2) a comprehensive collection of existing computation methods (usually scattered across seemingly unrelated fields), and (3) CUDA implementations for fast inference on GPU. LeetDecoding is designed to integrate easily with existing linear-attention LLMs, and it allows researchers to benchmark and evaluate new computation methods for exponentially decaying causal linear attention. Using LeetDecoding does not require any knowledge of GPU programming or of the underlying complexity analysis, which intentionally makes it accessible to LLM practitioners. The source code of LeetDecoding is provided at [this GitHub repository](https://github.com/Computational-Machine-Intelligence/LeetDecoding), and users can install LeetDecoding with the command `pip install leet-decoding`.
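The abstract does not spell out the operator, so the following is only a minimal pure-Python sketch of it under the usual definition, O[t] = Σ_{s≤t} γ^(t−s) (Q[t]·K[s]) V[s], with a scalar decay factor γ. The function names are hypothetical and are not part of the LeetDecoding API. The sketch shows a quadratic-time reference and an equivalent recurrent form that carries a running state.

```python
def decayed_causal_linear_attention(Q, K, V, gamma):
    """Quadratic reference: O[t] = sum_{s<=t} gamma**(t-s) * (Q[t].K[s]) * V[s].

    Q, K are lists of n vectors of length d_k; V is a list of n vectors of length d_v.
    (Hypothetical helper for illustration, not a LeetDecoding function.)
    """
    n, d_v = len(Q), len(V[0])
    out = []
    for t in range(n):
        row = [0.0] * d_v
        for s in range(t + 1):  # causal: only positions s <= t contribute
            score = sum(q * k for q, k in zip(Q[t], K[s]))
            weight = gamma ** (t - s) * score  # exponential decay with distance
            for j in range(d_v):
                row[j] += weight * V[s][j]
        out.append(row)
    return out


def decayed_causal_linear_attention_recurrent(Q, K, V, gamma):
    """Recurrent form: S_t = gamma * S_{t-1} + outer(K[t], V[t]); O[t] = Q[t] @ S_t."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # running d_k x d_v state
    out = []
    for q, k, v in zip(Q, K, V):
        for i in range(d_k):
            for j in range(d_v):
                S[i][j] = gamma * S[i][j] + k[i] * v[j]
        out.append([sum(q[i] * S[i][j] for i in range(d_k)) for j in range(d_v)])
    return out
```

The recurrent form touches each position once, which is why the choice of computation routine matters for long sequences; the two functions should agree up to floating-point error.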

Is There Any Social Principle for LLM-Based Agents?

Aug 22, 2023

Abstract: Research on large language model (LLM) based agents should involve more than "human-centered" alignment or application. We argue that more attention should be paid to the agent itself, and we discuss the potential of the social sciences for agents.

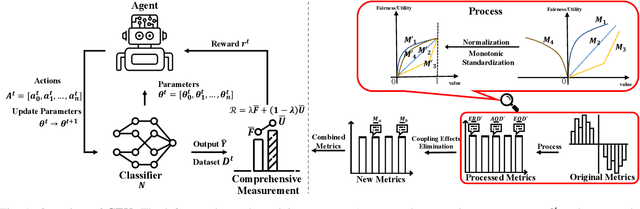

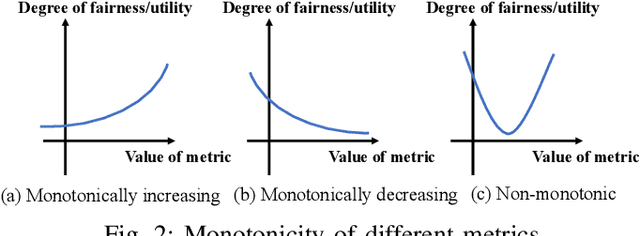

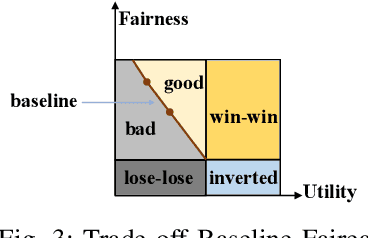

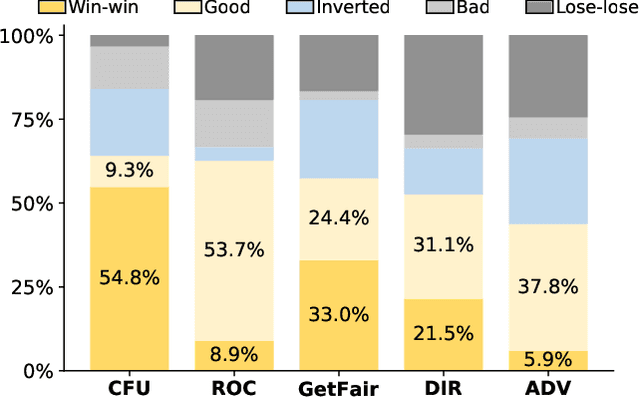

Towards Better Fairness-Utility Trade-off: A Comprehensive Measurement-Based Reinforcement Learning Framework

Jul 21, 2023

Abstract: Machine learning is widely used to make decisions with societal impact, such as bank loan approval, criminal sentencing, and resume filtering. How to ensure its fairness while maintaining utility is a challenging but crucial issue. Fairness is a complex and context-dependent concept with over 70 different measurement metrics. Since existing regulations are often vague about which metric to use, and different organizations may prefer different fairness metrics, it is important to have a means of improving fairness comprehensively. Existing mitigation techniques often target one specific fairness metric and are limited in their ability to improve multiple notions of fairness simultaneously. In this work, we propose CFU (Comprehensive Fairness-Utility), a reinforcement learning-based framework, to efficiently improve the fairness-utility trade-off in machine learning classifiers. A comprehensive measurement that simultaneously considers multiple fairness notions as well as utility is established, and new metrics are proposed based on an in-depth analysis of the relationship between different fairness metrics. The reward function of CFU is constructed from the comprehensive measurement and the new metrics. We conduct extensive experiments to evaluate CFU on 6 tasks, 3 machine learning models, and 15 fairness-utility measurements. The results demonstrate that CFU can improve the classifier on multiple fairness metrics without sacrificing its utility. It outperforms all state-of-the-art techniques, with a 37.5% improvement on average.
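To make the idea of measuring several fairness notions alongside utility concrete, here is a small illustrative sketch. It computes two standard group-fairness gaps (statistical parity difference and equal opportunity difference) and accuracy for a binary classifier with a binary protected attribute. The `combined_score` function and its `fairness_weight` parameter are assumptions made for illustration only; they are not CFU's comprehensive measurement or reward function, which the abstract does not specify.

```python
def _positive_rate(y_pred, indices):
    # Fraction of positive predictions among the given sample indices.
    return sum(y_pred[i] for i in indices) / len(indices)


def statistical_parity_difference(y_pred, group):
    """|P(y_hat=1 | group=0) - P(y_hat=1 | group=1)|; assumes both groups are non-empty."""
    g0 = [i for i, g in enumerate(group) if g == 0]
    g1 = [i for i, g in enumerate(group) if g == 1]
    return abs(_positive_rate(y_pred, g0) - _positive_rate(y_pred, g1))


def equal_opportunity_difference(y_true, y_pred, group):
    """|TPR(group=0) - TPR(group=1)|; assumes each group has at least one true positive label."""
    g0 = [i for i, g in enumerate(group) if g == 0 and y_true[i] == 1]
    g1 = [i for i, g in enumerate(group) if g == 1 and y_true[i] == 1]
    return abs(_positive_rate(y_pred, g0) - _positive_rate(y_pred, g1))


def accuracy(y_true, y_pred):
    return sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)


def combined_score(y_true, y_pred, group, fairness_weight=0.5):
    """Hypothetical scalar: weighted accuracy minus the weighted mean fairness gap.

    This is an illustrative combination, not the measurement defined by CFU.
    """
    gaps = [
        statistical_parity_difference(y_pred, group),
        equal_opportunity_difference(y_true, y_pred, group),
    ]
    mean_gap = sum(gaps) / len(gaps)
    return (1 - fairness_weight) * accuracy(y_true, y_pred) - fairness_weight * mean_gap
```

A single scalar like this hides which metric moved, so in practice the individual gaps are usually reported next to any combined number.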
