Sibo Geng

Are We Hungry for 3D LiDAR Data for Semantic Segmentation?

Jun 08, 2020

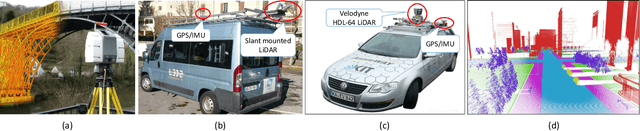

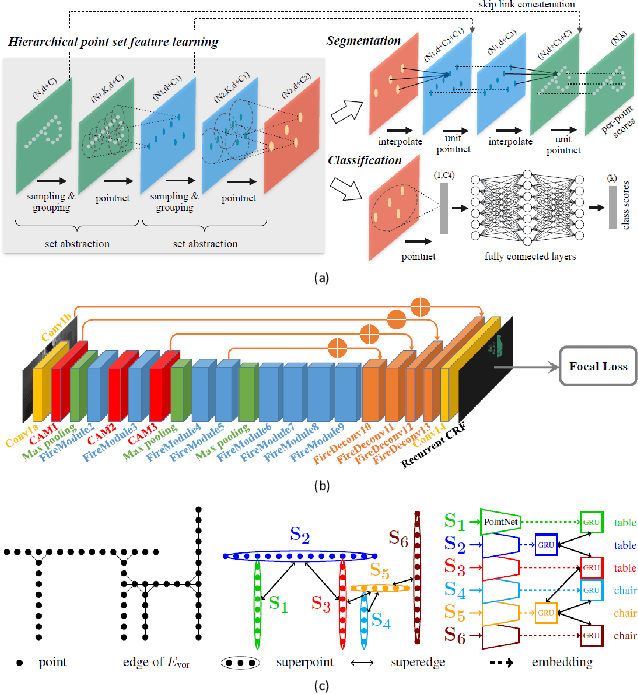

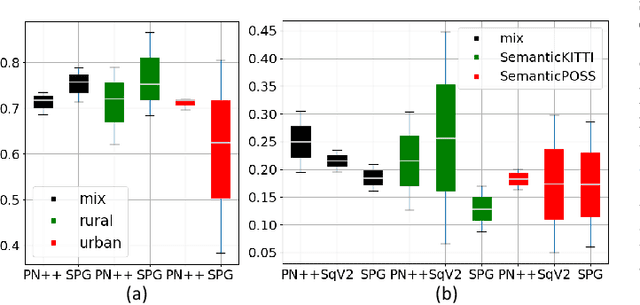

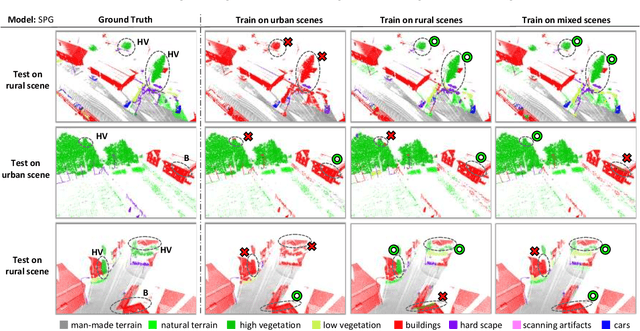

Abstract: 3D LiDAR semantic segmentation is a pivotal task in many applications, such as autonomous driving and robotics. Research on 3D LiDAR semantic segmentation has advanced considerably in recent years, especially through deep learning methods. However, these methods usually rely heavily on large amounts of finely annotated data, whereas point-wise annotated 3D LiDAR datasets are scarce and expensive to label. The performance limitation caused by the lack of training data is called the data hungry effect. This survey explores whether and how we are hungry for 3D LiDAR data for semantic segmentation. We first provide an organized review of existing 3D datasets and 3D semantic segmentation methods. We then provide an in-depth analysis of three representative datasets and run several experiments to evaluate the data hungry effect from different aspects. Efforts to solve data hungry problems are summarized for both 3D LiDAR-focused methods and general-purpose methods. Finally, we discuss insightful topics for future research on data hungry problems, along with open questions.
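The abstract does not state how the data hungry effect is measured. One common way to quantify segmentation performance, and therefore how it changes as training data shrinks, is per-class intersection-over-union (IoU) and its mean (mIoU). The minimal sketch below assumes that standard definition, IoU_c = TP_c / (TP_c + FP_c + FN_c), computed from a point-wise confusion matrix; the function names and toy labels are hypothetical and are not taken from the survey.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes):
    """Count (ground-truth, prediction) pairs into a C x C matrix.

    Rows index the ground-truth class, columns the predicted class.
    """
    idx = gt * num_classes + pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def per_class_iou(conf):
    """IoU_c = TP_c / (TP_c + FP_c + FN_c); NaN for classes that never occur."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as c but labelled otherwise
    fn = conf.sum(axis=1) - tp  # labelled c but predicted otherwise
    denom = tp + fp + fn
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom > 0, tp / denom, np.nan)


# Toy example with 3 hypothetical classes and 5 points.
gt = np.array([0, 0, 1, 1, 2])
pred = np.array([0, 1, 1, 1, 2])
iou = per_class_iou(confusion_matrix(pred, gt, 3))
miou = np.nanmean(iou)  # ignore classes absent from both gt and pred
print(iou, miou)
```

Accumulating one confusion matrix over a whole test split and computing IoU at the end (rather than averaging per-scan IoUs) keeps rare classes, such as small dynamic objects, from being dominated by scans in which they are absent.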

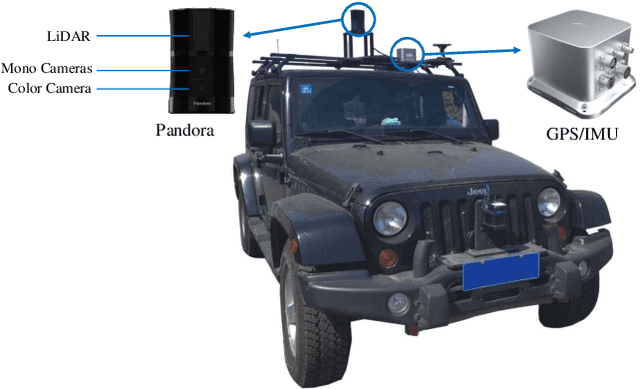

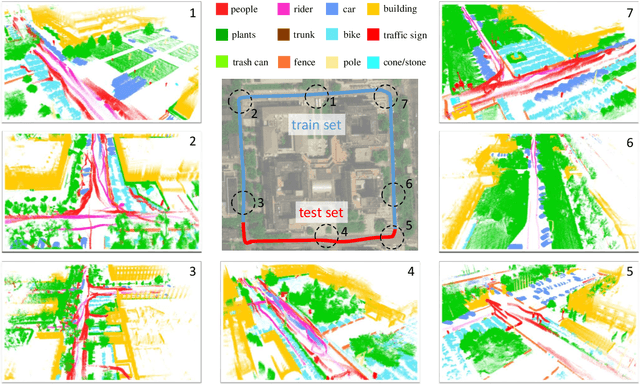

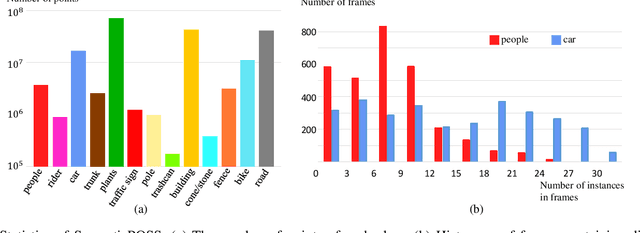

SemanticPOSS: A Point Cloud Dataset with Large Quantity of Dynamic Instances

Feb 21, 2020

Abstract: 3D semantic segmentation is one of the key tasks for autonomous driving systems. Deep learning models for 3D semantic segmentation have recently been widely studied, but they usually require large amounts of training data. However, existing datasets for 3D semantic segmentation lack point-wise annotation, diverse scenes, and dynamic objects. In this paper, we propose the SemanticPOSS dataset, which contains 2988 varied and complex LiDAR scans with a large number of dynamic instances. The data was collected at Peking University and uses the same data format as SemanticKITTI. In addition, we evaluate several typical 3D semantic segmentation models on SemanticPOSS. Experimental results show that SemanticPOSS can, to some degree, improve prediction accuracy for dynamic objects such as people and cars. SemanticPOSS will be published at www.poss.pku.edu.cn.
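Since SemanticPOSS is stated to use the SemanticKITTI data format, a scan can be read with the SemanticKITTI conventions: each `.bin` file stores float32 rows of (x, y, z, remission), and each `.label` file stores one uint32 per point, with the semantic class id in the lower 16 bits and the instance id in the upper 16 bits. The minimal sketch below assumes that layout carries over unchanged to SemanticPOSS; the helper names, file names, and label values are hypothetical, and the demo writes a synthetic two-point scan instead of reading real data.

```python
import os
import tempfile

import numpy as np


def load_scan(bin_path):
    """Read a SemanticKITTI-style .bin scan as an (N, 4) float32 array.

    Each row is (x, y, z, remission).
    """
    return np.fromfile(bin_path, dtype=np.float32).reshape(-1, 4)


def load_labels(label_path):
    """Read a SemanticKITTI-style .label file (one uint32 per point).

    Returns (semantic, instance): the lower 16 bits hold the semantic
    class id, the upper 16 bits hold the instance id.
    """
    raw = np.fromfile(label_path, dtype=np.uint32)
    semantic = raw & 0xFFFF
    instance = raw >> 16
    return semantic, instance


# Round-trip demo on a synthetic two-point scan. The class ids (4, 9)
# and instance ids (7, 0) are made-up values, not SemanticPOSS classes.
with tempfile.TemporaryDirectory() as tmp:
    points = np.array([[1.0, 2.0, 0.5, 0.3],
                       [4.0, -1.0, 0.2, 0.8]], dtype=np.float32)
    packed = np.array([(7 << 16) | 4, (0 << 16) | 9], dtype=np.uint32)
    points.tofile(os.path.join(tmp, "000000.bin"))
    packed.tofile(os.path.join(tmp, "000000.label"))

    scan = load_scan(os.path.join(tmp, "000000.bin"))
    semantic, instance = load_labels(os.path.join(tmp, "000000.label"))
    assert scan.shape[0] == semantic.shape[0]  # one label per point

print(scan.shape, semantic.tolist(), instance.tolist())
```

Packing semantic and instance ids into one integer per point lets a single label file support both semantic segmentation and per-instance analysis of dynamic objects such as people and cars.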
