Shushi Namba

Optimizing Facial Expressions of an Android Robot Effectively: a Bayesian Optimization Approach

Jan 13, 2023

Abstract: Expressing various facial emotions is an important social ability for efficient communication between humans. A key challenge in human-robot interaction research is providing androids with the ability to make various human-like facial expressions for efficient communication with humans. The android Nikola, we have developed, is equipped with many actuators for facial muscle control. While this enables Nikola to simulate various human expressions, it also complicates identification of the optimal parameters for producing desired expressions. Here, we propose a novel method that automatically optimizes the facial expressions of our android. We use a machine vision algorithm to evaluate the magnitudes of seven basic emotions, and employ the Bayesian Optimization algorithm to identify the parameters that produce the most convincing facial expressions. Evaluations by naive human participants demonstrate that our method improves the rated strength of the android's facial expressions of anger, disgust, sadness, and surprise compared with the previous method that relied on Ekman's theory and parameter adjustments by a human expert.

Facial movement synergies and Action Unit detection from distal wearable Electromyography and Computer Vision

Aug 20, 2020

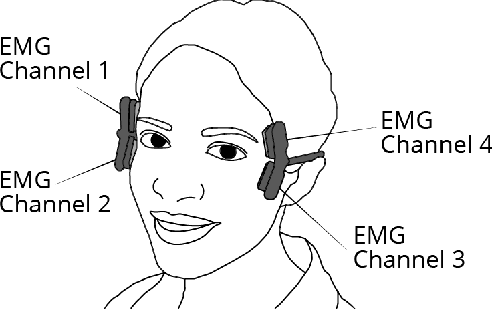

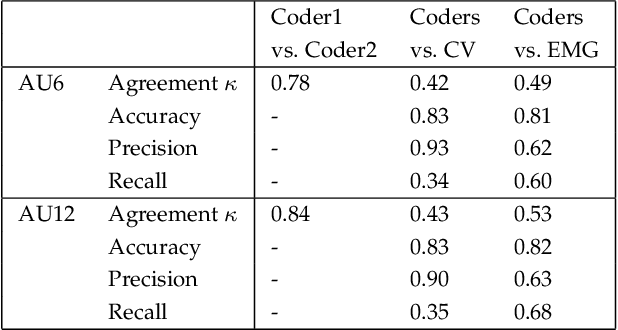

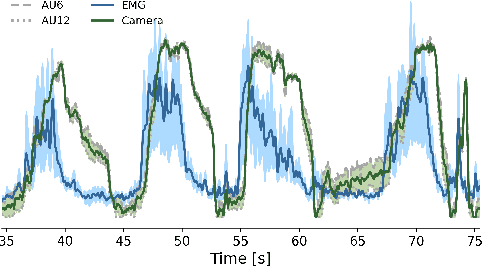

Abstract: Distal facial Electromyography (EMG) can be used to detect smiles and frowns with reasonable accuracy. It capitalizes on volume conduction to detect relevant muscle activity, even when the electrodes are not placed directly on the source muscle. The main advantage of this method is to prevent occlusion and obstruction of the facial expression production, whilst allowing EMG measurements. However, measuring EMG distally entails that the exact source of the facial movement is unknown. We propose a novel method to estimate specific Facial Action Units (AUs) from distal facial EMG and Computer Vision (CV). This method is based on Independent Component Analysis (ICA), Non-Negative Matrix Factorization (NNMF), and sorting of the resulting components to determine which is the most likely to correspond to each CV-labeled action unit (AU). Performance on the detection of AU06 (Orbicularis Oculi) and AU12 (Zygomaticus Major) was estimated by calculating the agreement with Human Coders. The results of our proposed algorithm showed an accuracy of 81% and a Cohen's Kappa of 0.49 for AU6; and accuracy of 82% and a Cohen's Kappa of 0.53 for AU12. This demonstrates the potential of distal EMG to detect individual facial movements. Using this multimodal method, several AU synergies were identified. We quantified the co-occurrence and timing of AU6 and AU12 in posed and spontaneous smiles using the human-coded labels, and for comparison, using the continuous CV-labels. The co-occurrence analysis was also performed on the EMG-based labels to uncover the relationship between muscle synergies and the kinematics of visible facial movement.
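
The abstract reports agreement with human coders as both accuracy and Cohen's Kappa. As a rough illustration of why the two figures differ, the sketch below computes both for a pair of binary label sequences. The function names and the toy labels are hypothetical and are not taken from the paper; the formula is the standard two-rater Cohen's Kappa, which may not match the authors' exact evaluation procedure.

```python
from collections import Counter


def accuracy(labels_a, labels_b):
    """Fraction of frames on which the two label sequences agree."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and equal in length")
    return sum(a == b for a, b in zip(labels_a, labels_b)) / len(labels_a)


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two raters (standard Cohen's Kappa)."""
    n = len(labels_a)
    p_observed = accuracy(labels_a, labels_b)
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance, from each rater's marginal label frequencies.
    p_expected = sum(
        counts_a[k] * counts_b[k] for k in counts_a.keys() | counts_b.keys()
    ) / (n * n)
    if p_expected == 1.0:
        # Both raters used a single identical label; agreement is trivially perfect.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)


# Toy, made-up labels: 1 = action unit active, 0 = inactive.
human_coder = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
algorithm = [1, 0, 0, 0, 1, 0, 1, 0, 1, 0]

acc = accuracy(human_coder, algorithm)
kappa = cohens_kappa(human_coder, algorithm)
print(f"accuracy={acc:.2f}, kappa={kappa:.2f}")
```

Because Kappa subtracts the agreement expected by chance, it is typically lower than raw accuracy when one label dominates, which is consistent with the gap between the two figures reported above.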
