Felix Dollack

Ensemble Learning to Assess Dynamics of Affective Experience Ratings and Physiological Change

Dec 26, 2023

Abstract: The congruence between affective experiences and physiological changes has been a debated topic for centuries. Recent technological advances in measurement and data analysis provide hope to solve this epic challenge. Open science and open data practices, together with data analysis challenges open to the academic community, are also promising tools for solving this problem. In this entry to the Emotion Physiology and Experience Collaboration (EPiC) challenge, we propose a data analysis solution that combines theoretical assumptions with data-driven methodologies. We used feature engineering and ensemble selection. Each predictor was trained on subsets of the training data that would maximize the information available for training. Late fusion was used with an averaging step. We chose to average considering a "wisdom of crowds" strategy. This strategy yielded an overall RMSE of 1.19 in the test set. Future work should carefully explore if our assumptions are correct and the potential of weighted fusion.
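The late-fusion averaging step and the RMSE metric mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' code: the function names `late_fusion_average` and `rmse`, the three example predictors, and the toy ratings are all assumptions made for illustration.

```python
import math


def late_fusion_average(predictions):
    """Unweighted late fusion: average the per-sample outputs of several predictors.

    `predictions` is a list of equal-length lists, one list per predictor.
    """
    n_models = len(predictions)
    return [sum(sample) / n_models for sample in zip(*predictions)]


def rmse(y_true, y_pred):
    """Root mean squared error between target ratings and predictions."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical toy data: three predictors, each off on different samples.
y_true = [5.0, 5.0, 5.0]
model_a = [5.0, 6.0, 4.0]
model_b = [6.0, 4.0, 5.0]
model_c = [4.0, 5.0, 6.0]

fused = late_fusion_average([model_a, model_b, model_c])

print("RMSE of model_a alone:", rmse(y_true, model_a))
print("RMSE after averaging: ", rmse(y_true, fused))
```

In this toy case the individual errors cancel exactly, which is the idealized version of the "wisdom of crowds" argument; with real predictors the errors are correlated and the gain is smaller. A weighted fusion, which the abstract names as future work, would replace the plain mean with a weighted mean.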

Facial movement synergies and Action Unit detection from distal wearable Electromyography and Computer Vision

Aug 20, 2020

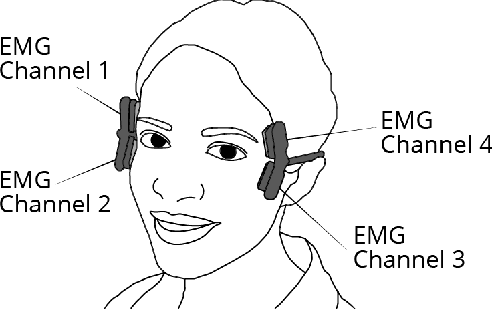

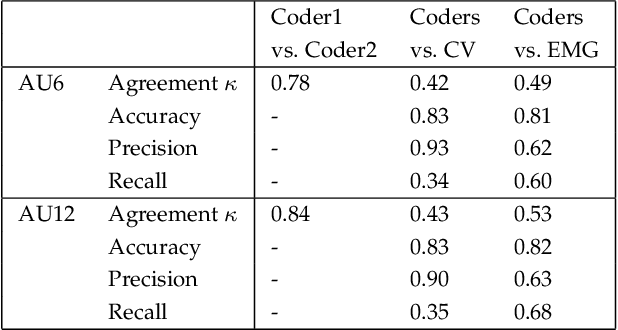

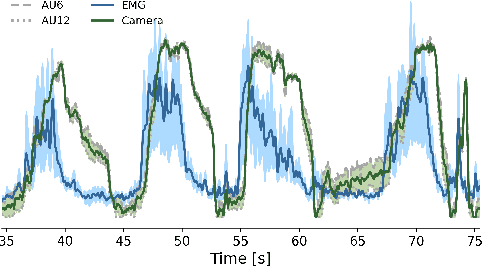

Abstract: Distal facial Electromyography (EMG) can be used to detect smiles and frowns with reasonable accuracy. It capitalizes on volume conduction to detect relevant muscle activity, even when the electrodes are not placed directly on the source muscle. The main advantage of this method is to prevent occlusion and obstruction of the facial expression production, whilst allowing EMG measurements. However, measuring EMG distally entails that the exact source of the facial movement is unknown. We propose a novel method to estimate specific Facial Action Units (AUs) from distal facial EMG and Computer Vision (CV). This method is based on Independent Component Analysis (ICA), Non-Negative Matrix Factorization (NNMF), and sorting of the resulting components to determine which is the most likely to correspond to each CV-labeled action unit (AU). Performance on the detection of AU06 (Orbicularis Oculi) and AU12 (Zygomaticus Major) was estimated by calculating the agreement with Human Coders. The results of our proposed algorithm showed an accuracy of 81% and a Cohen's Kappa of 0.49 for AU6; and accuracy of 82% and a Cohen's Kappa of 0.53 for AU12. This demonstrates the potential of distal EMG to detect individual facial movements. Using this multimodal method, several AU synergies were identified. We quantified the co-occurrence and timing of AU6 and AU12 in posed and spontaneous smiles using the human-coded labels, and for comparison, using the continuous CV-labels. The co-occurrence analysis was also performed on the EMG-based labels to uncover the relationship between muscle synergies and the kinematics of visible facial movement.
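The abstract reports both accuracy and Cohen's Kappa for agreement with human coders. The sketch below shows how the two metrics relate on binary per-frame labels. It is an illustration only: the helper names and the toy label sequences are assumptions, not the study's data or code.

```python
def accuracy(labels_a, labels_b):
    """Fraction of samples on which the two label sequences agree."""
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return matches / len(labels_a)


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(labels_a)
    p_observed = accuracy(labels_a, labels_b)
    # Chance agreement: probability that both raters pick the same label
    # if each labels independently at their own base rates.
    categories = set(labels_a) | set(labels_b)
    p_expected = sum(
        (labels_a.count(c) / n) * (labels_b.count(c) / n) for c in categories
    )
    if p_expected == 1.0:
        # Both raters used one identical label throughout; agreement is trivial.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical frame-wise labels (1 = action unit active, 0 = inactive).
human_coder = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
algorithm = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]

print("accuracy:", accuracy(human_coder, algorithm))          # 0.8
print("kappa:   ", round(cohens_kappa(human_coder, algorithm), 2))  # 0.52
```

Because inactive frames dominate in this toy sequence, much of the 80% agreement would be expected by chance, so kappa comes out noticeably lower than accuracy. The same effect may explain why accuracies above 80% can coexist with moderate kappa values.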
